We introduce existing datasets for Human Trajectory Prediction (HTP) task, and also provide tools to load, visualize and analyze datasets. So far multiple datasets are supported.

| Sample | Name | Description | Ref |

|---|---|---|---|

|

ETH | 2 top view scenes containing walking pedestrians #Traj:[Peds=750] Coord=world-2D FPS=2.5 |

website paper |

|

UCY | 3 scenes (Zara/Arxiepiskopi/University). Zara and University close to top view. Arxiepiskopi more inclined. #Traj:[Peds=786] Coord=world-2D FPS=2.5 |

website paper |

|

PETS 2009 | different crowd activities #Traj:[?] Coord=image-2D FPS=7 |

website paper |

|

SDD | 8 top view scenes recorded by drone contains various types of agents #Traj:[Bikes=4210 Peds=5232 Skates=292 Carts=174 Cars=316 Buss=76 Total=10,300] Coord=image-2D FPS=30 |

website paper dropbox |

|

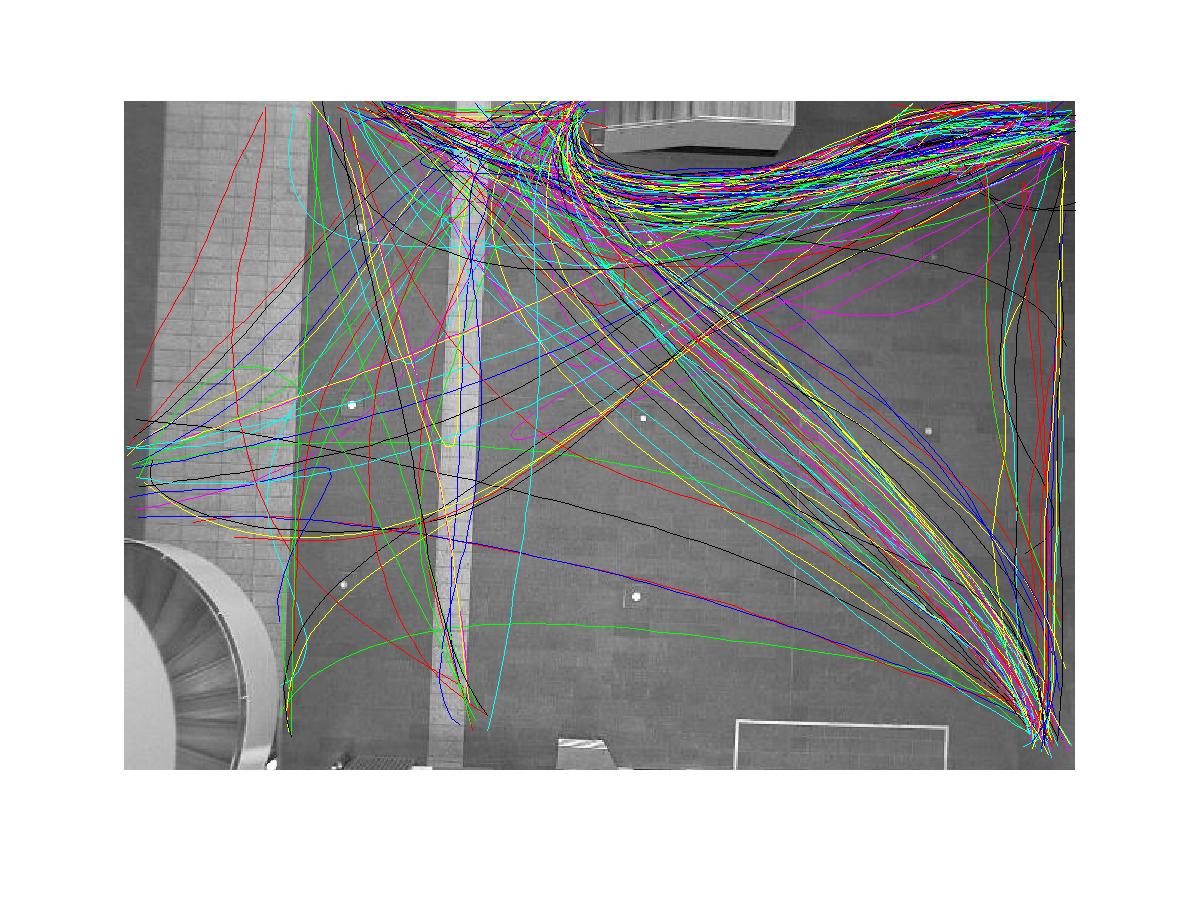

GC | Grand Central Train Station Dataset: 1 scene of 33:20 minutes of crowd trajectories #Traj:[Peds=12,684] Coord=image-2D FPS=25 |

dropbox paper |

|

HERMES | Controlled Experiments of Pedestrian Dynamics (Unidirectional and bidirectional flows) #Traj:[?] Coord=world-2D FPS=16 |

website data |

|

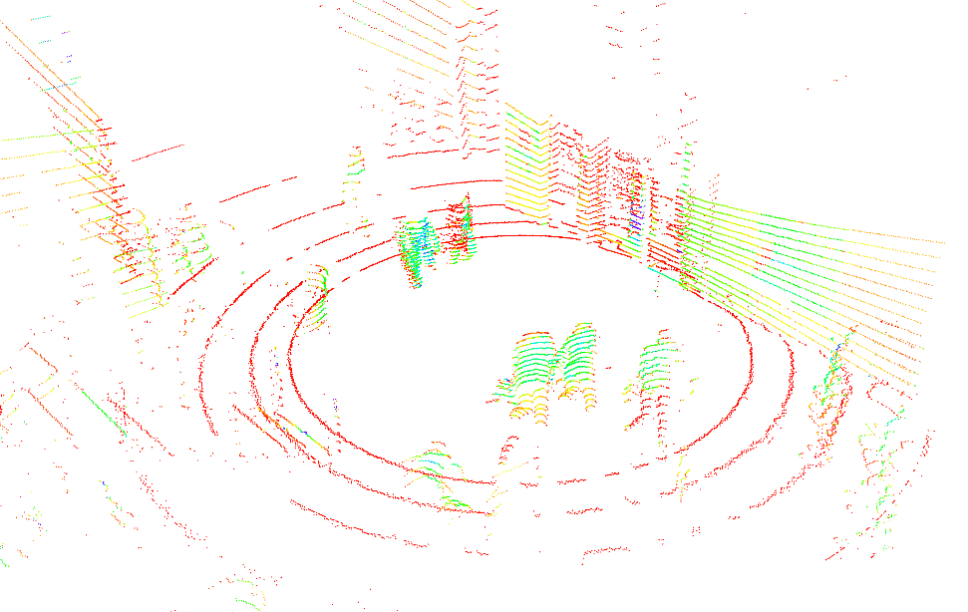

Waymo | High-resolution sensor data collected by Waymo self-driving cars #Traj:[?] Coord=2D and 3D FPS=? |

website github |

|

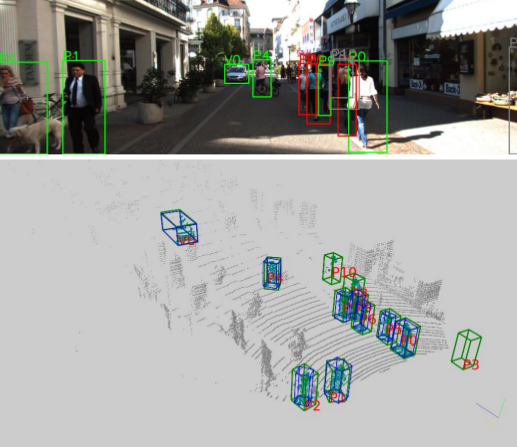

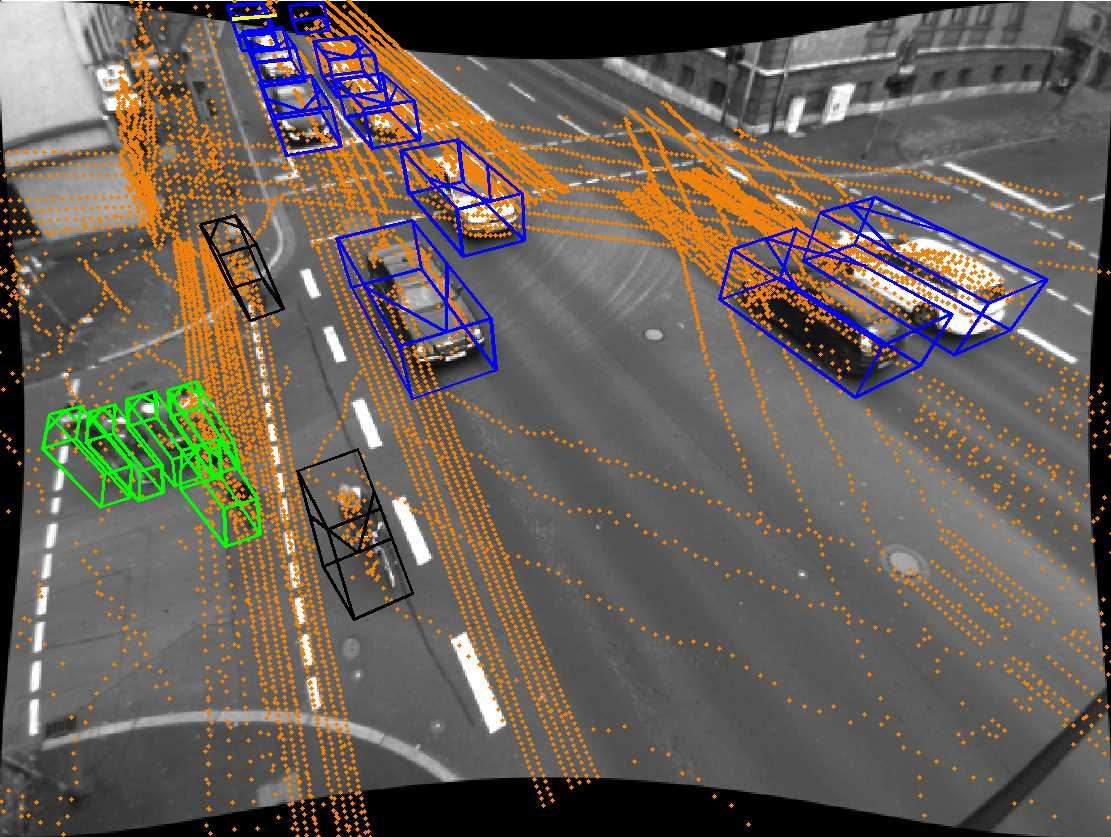

KITTI | 6 hours of traffic scenarios. various sensors #Traj:[?] Coord=image-3D + Calib FPS=10 |

website |

|

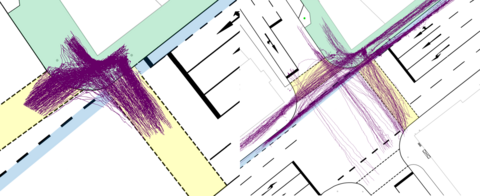

inD | Naturalistic Trajectories of Vehicles and Vulnerable Road Users Recorded at German Intersections #Traj:[Total=11,500] Coord=world-2D FPS=25 |

website paper |

|

L-CAS | Multisensor People Dataset Collected by a Pioneer 3-AT robot #Traj:[?] Coord=0 FPS=0 |

website |

|

VIRAT | Natural scenes showing people performing normal actions #Traj:[?] Coord=0 FPS=0 |

website |

|

VRU | consists of pedestrian and cyclist trajectories, recorded at an urban intersection using cameras and LiDARs #Traj:[peds=1068 Bikes=464] Coord=World (Meter) FPS=25 |

website |

|

Edinburgh | People walking through the Informatics Forum (University of Edinburgh) #Traj:[ped=+92,000] FPS=0 |

website |

|

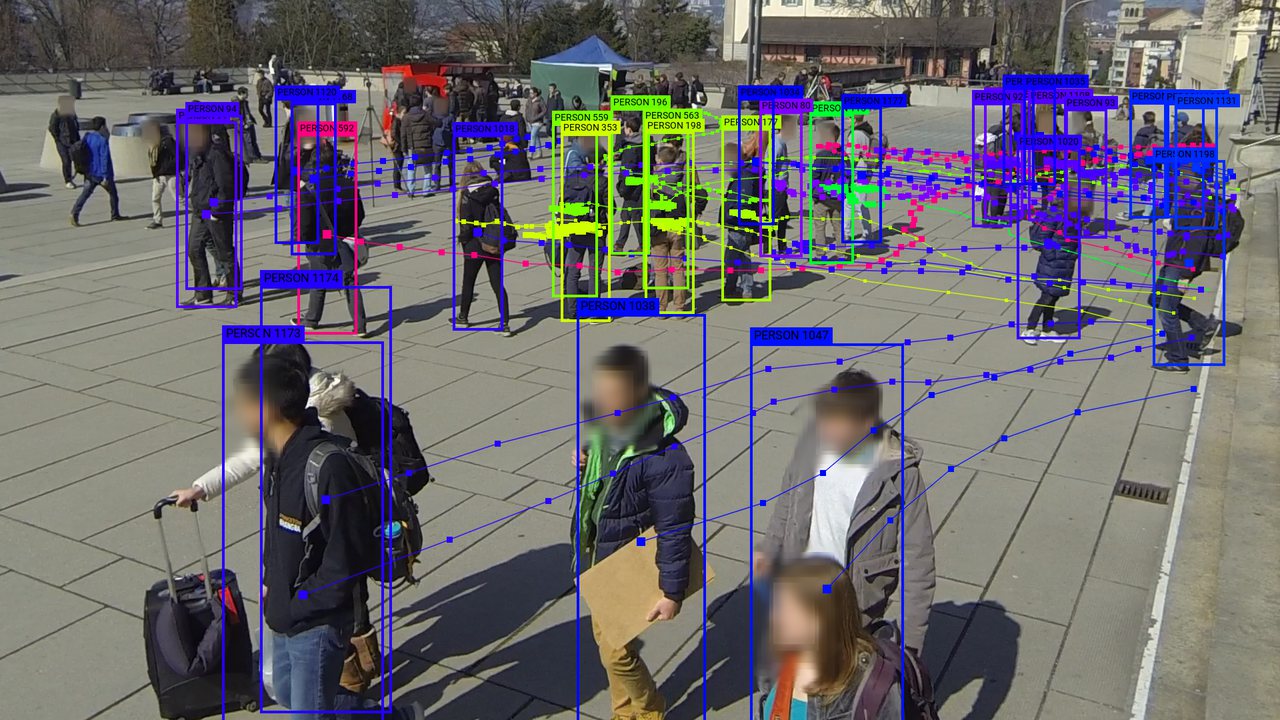

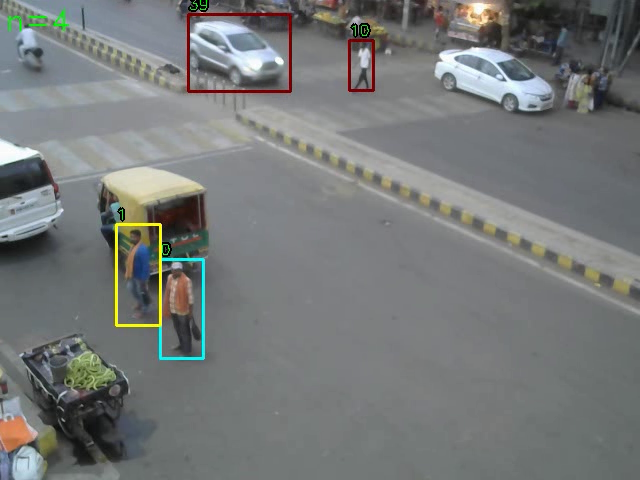

Town Center | CCTV video of pedestrians in a busy downtown area in Oxford #Traj:[peds=2,200] Coord=0 FPS=0 |

website |

|

ATC | 92 days of pedestrian trajectories in a shopping center in Osaka, Japan #Traj:[?] Coord=world-2D + Range data |

website |

|

City Scapes | 25,000 annotated images (Semantic/ Instance-wise/ Dense pixel annotations) #Traj:[?] |

website |

|

Forking Paths Garden | Multi-modal Synthetic dataset, created in CARLA (3D simulator) based on real world trajectory data, extrapolated by human annotators #Traj:[?] |

website github paper |

|

nuScenes | Large-scale Autonomous Driving dataset #Traj:[peds=222,164 vehicles=662,856] Coord=World + 3D Range Data FPS=2 |

website |

|

Argoverse | 320 hours of Self-driving dataset #Traj:[objects=11,052] Coord=3D FPS=10 |

website |

|

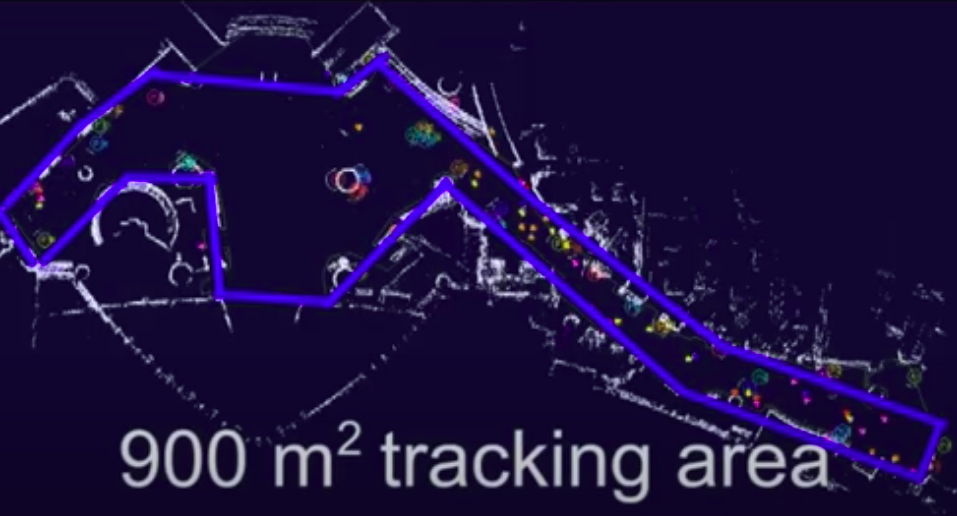

Wild Track | surveillance video dataset of students recorded outside the ETH university main building in Zurich. #Traj:[peds=1,200] |

website |

|

DUT | Natural Vehicle-Crowd Interactions in crowded university campus #Traj:[Peds=1,739 vehicles=123 Total=1,862] Coord=world-2D FPS=23.98 |

github paper |

|

CITR | Fundamental Vehicle-Crowd Interaction scenarios in controlled experiments #Traj:[Peds=340] Coord=world-2D FPS=29.97 |

github paper |

|

Ko-PER | Trajectories of People and vehicles at Urban Intersections (Laserscanner + Video) #Traj:[peds=350] Coord=world-2D |

paper |

|

TRAF | small dataset of dense and heterogeneous traffic videos in India (22 footages) #Traj:[Cars=33 Bikes=20 Peds=11] Coord=image-2D FPS=10 |

website gDrive paper |

|

ETH-Person | Multi-Person Data Collected from Mobile Platforms | website |

- MOT-Challenge: Multiple Object Tracking Benchmark

- Trajnet: Trajectory Forecasting Challenge

- Trajnet++: Trajectory Forecasting Challenge

- JackRabbot: Detection And Tracking Dataset and Benchmark

To download the toolkit, separately in a zip file click here)

Using python files in parser dir, you can load a dataset into a dataset object. This object then can be used to retrieve the trajectories, with different queries (by id, timestamp, ...).

A simple script with a pyqt GUI is developed play.py, and can be used to visualize a given dataset (the ones whose parsers are available):

- An Awesome list of Trajectory Prediction references can be found here

Are you interested in collaboration on OpenTraj? Send an email to me titled OpenTraj.