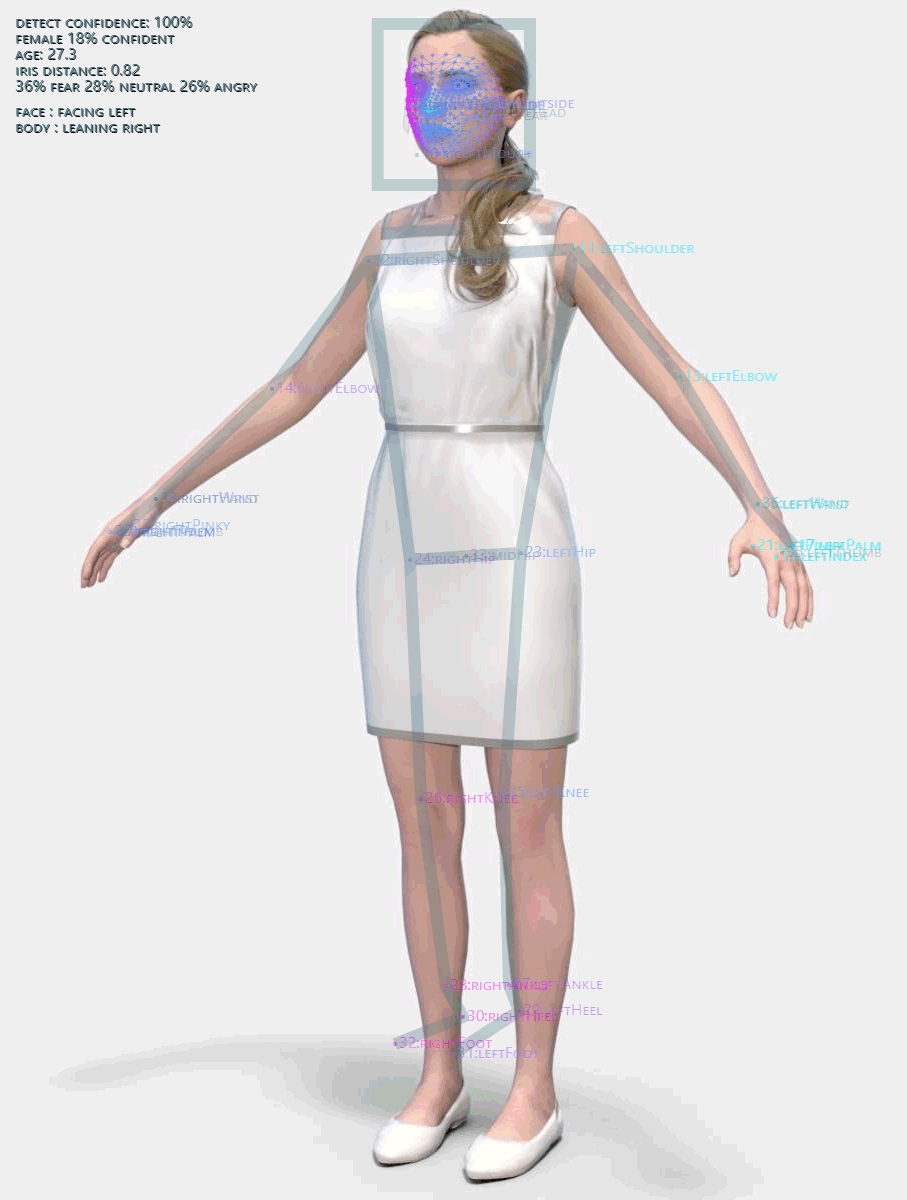

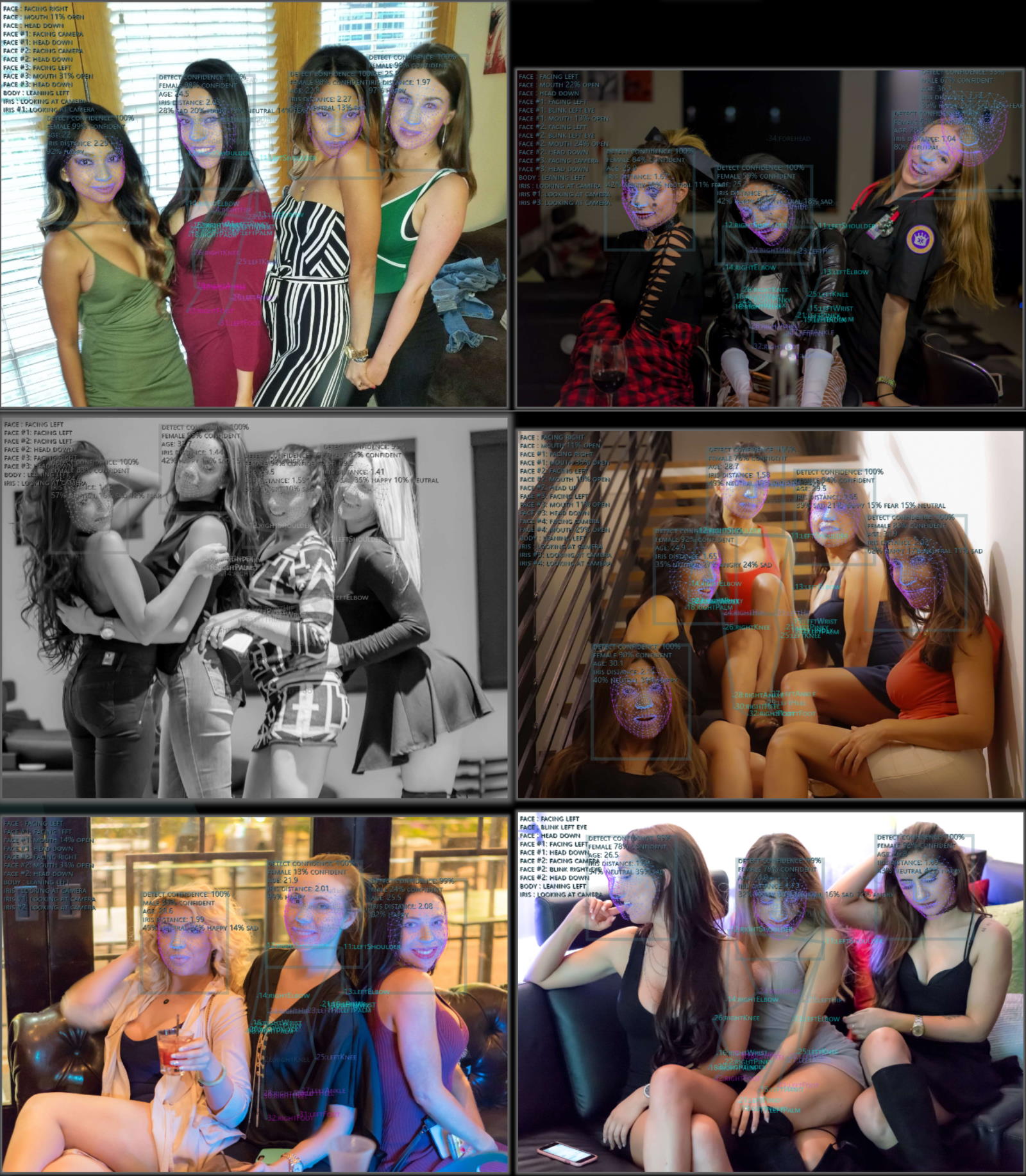

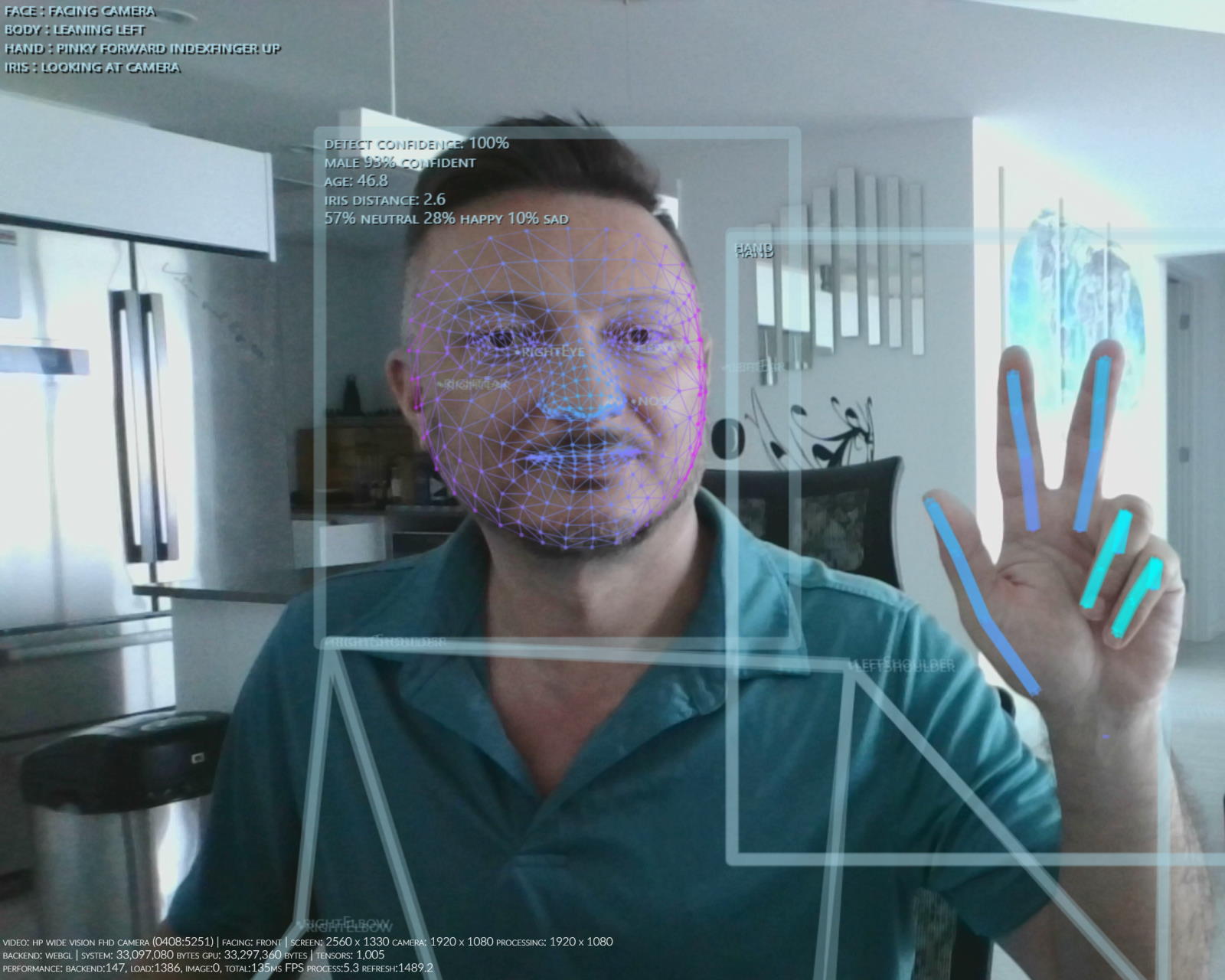

AI-powered 3D Face Detection & Rotation Tracking, Face Description & Recognition,

Body Pose Tracking, 3D Hand & Finger Tracking, Iris Analysis,

Age & Gender & Emotion Prediction, Gesture Recognition

JavaScript module using TensorFlow/JS Machine Learning library

- Browser:
  - Compatible with both desktop and mobile platforms
  - Compatible with CPU, WebGL, WASM backends
  - Compatible with WebWorker execution
- NodeJS:
  - Compatible with both software tfjs-node and GPU accelerated backends tfjs-node-gpu using CUDA libraries
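As a rough illustration of choosing among the browser backends listed above, the sketch below picks a backend name from what the runtime appears to support. `pickBackend` and `detectEnvironment` are hypothetical helpers, not part of Human. Only the `'webgl'` value appears in this README's own examples; `'wasm'` and `'cpu'` are assumed to follow the same naming, so check Configuration Details before relying on them.

```javascript
// Hypothetical helper: choose a backend name from detected capabilities.
// Preference order (WebGL, then WASM, then CPU) is an assumption, not library policy.
function pickBackend(env) {
  if (env.hasWebGL) return 'webgl';
  if (env.hasWasm) return 'wasm';
  return 'cpu';
}

// Hypothetical helper: probe the current runtime for WebGL and WebAssembly support.
function detectEnvironment(globalObj = globalThis) {
  const hasWasm = typeof globalObj.WebAssembly === 'object';
  let hasWebGL = false;
  if (typeof globalObj.document !== 'undefined') {
    // Only attempted when a DOM is available (i.e. in a browser page)
    const canvas = globalObj.document.createElement('canvas');
    hasWebGL = Boolean(canvas.getContext('webgl2') || canvas.getContext('webgl'));
  }
  return { hasWebGL, hasWasm };
}

// Configuration object in the same shape as the examples in this README
const config = { backend: pickBackend(detectEnvironment()) };
```

The resulting `config` can then be passed to the `Human` constructor in the same way as the fixed `{ backend: 'webgl' }` object used in the examples further down.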

Check out Live Demo for processing of live WebCam video or static images

- Main Application

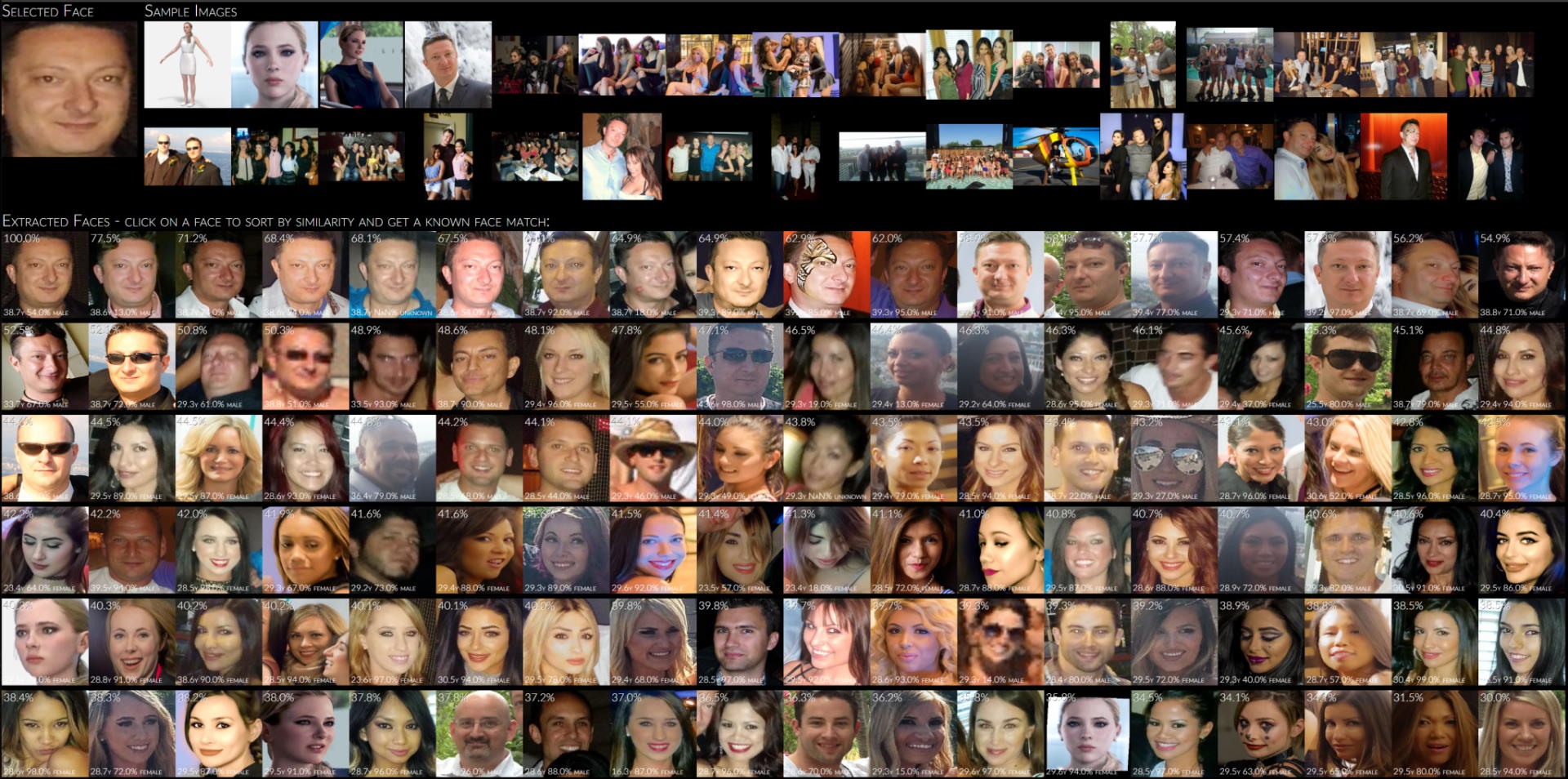

- Face Extraction, Description, Identification and Matching

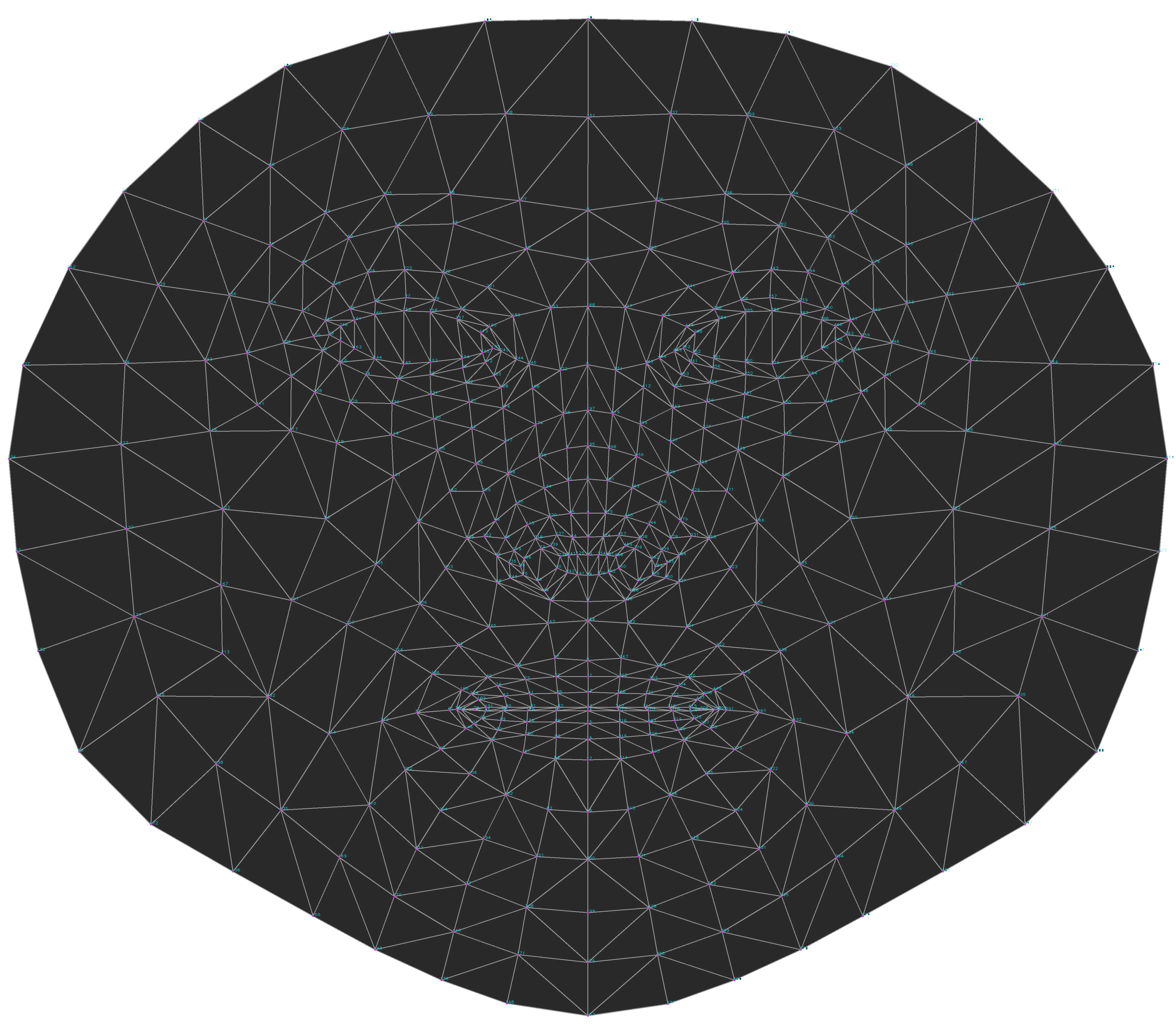

- Face Extraction and 3D Rendering

- Details on Demo Applications

- Code Repository

- NPM Package

- Issues Tracker

- TypeDoc API Specification: Human

- TypeDoc API Specification: Root

- Change Log

- Home

- Installation

- Usage & Functions

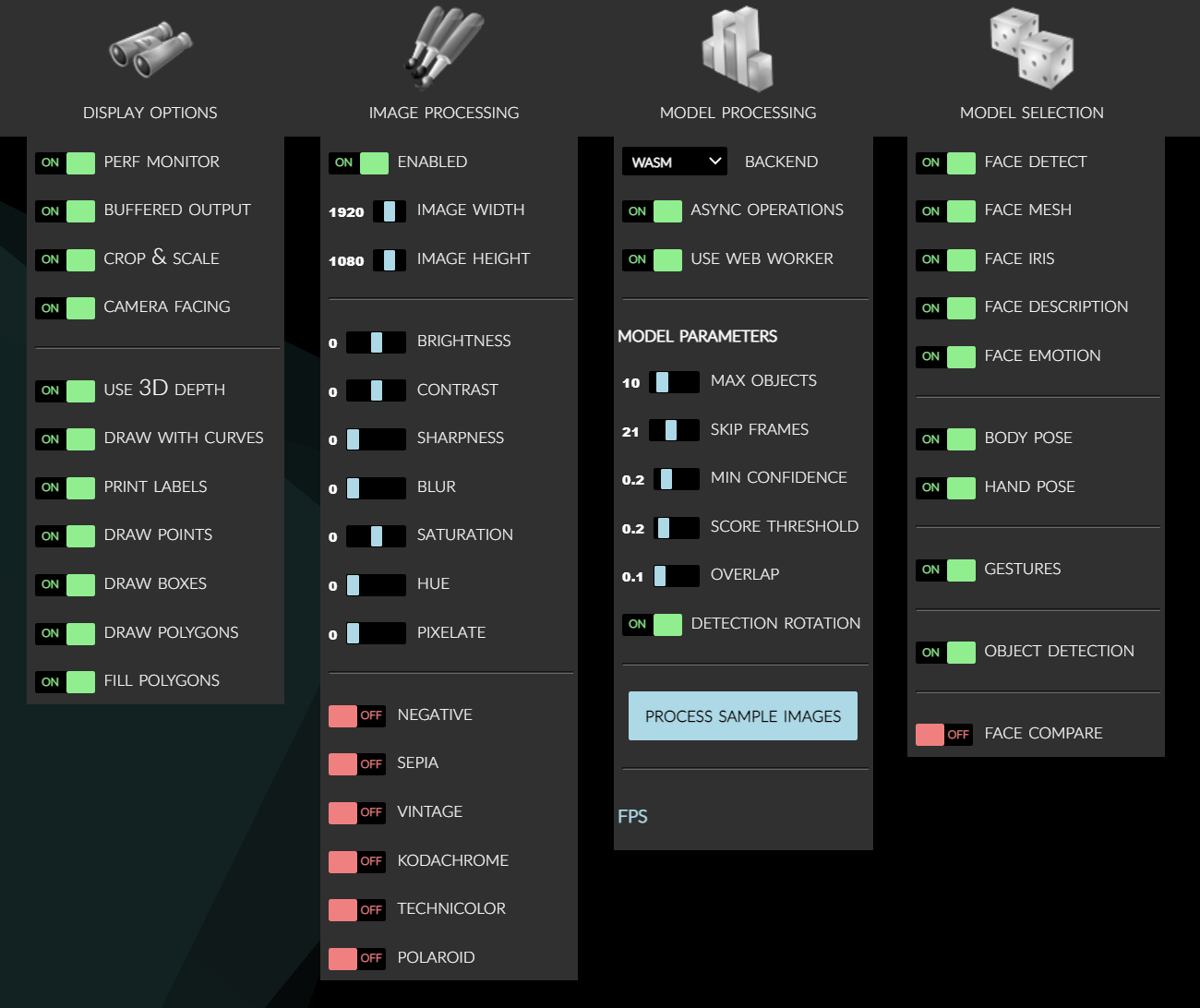

- Configuration Details

- Output Details

- Face Recognition & Face Description

- Gesture Recognition

- Common Issues

- Background and Benchmarks

- Notes on Backends

- Development Server

- Build Process

- Performance Notes

- Performance Profiling

- Platform Support

- List of Models & Credits

See issues and discussions for list of known limitations and planned enhancements

Suggestions are welcome!

As presented in the demo application...

Training image:

Using static images:

Live WebCam view:

Face Similarity Matching:

Face3D OpenGL Rendering:

468-Point Face Mesh Details:

(view in full resolution to see keypoints)

Simply load Human (IIFE version) directly from a cloud CDN in your HTML file:

(pick one: jsdelivr, unpkg or cdnjs)

    <script src="https://cdn.jsdelivr.net/npm/@vladmandic/human/dist/human.js"></script>
    <script src="https://unpkg.dev/@vladmandic/human/dist/human.js"></script>
    <script src="https://cdnjs.cloudflare.com/ajax/libs/human/1.4.1/human.js"></script>

For details, including how to use the Browser ESM version or NodeJS version of Human, see Installation

Human library accepts all of the following input types:

Image, ImageData, ImageBitmap, Canvas, OffscreenCanvas, Tensor, HTMLImageElement, HTMLCanvasElement, HTMLVideoElement, HTMLMediaElement
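To make this concrete, here is a minimal sketch that runs detection once on a single static input and counts what was found. `detectOnce` is a hypothetical helper, not part of Human. It assumes that `result.face`, `result.body`, `result.hand` and `result.gesture` are arrays; see Output Details for the actual result structure.

```javascript
// Sketch: run detection on one input and report how many items were detected.
// `human` is an existing Human instance; `input` is any supported input type.
// Assumption: each result field is an array; anything else is counted as 0.
async function detectOnce(human, input) {
  const result = await human.detect(input);
  const count = (list) => (Array.isArray(list) ? list.length : 0);
  return {
    faces: count(result.face),
    bodies: count(result.body),
    hands: count(result.hand),
    gestures: count(result.gesture),
  };
}
```

In a page this could be called as `detectOnce(human, document.getElementById('image-id')).then(console.log)`, where `image-id` is a placeholder for the id of your own image element.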

Additionally, HTMLVideoElement and HTMLMediaElement can be a standard `<video>` tag that links to:

- WebCam on user's system
- Any supported video type, for example: .mp4, .avi, etc.
- Additional video types supported via HTML5 Media Source Extensions
  Live streaming examples:
  - HLS (HTTP Live Streaming) using hls.js
  - DASH (Dynamic Adaptive Streaming over HTTP) using dash.js
- WebRTC media track
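For the WebCam case, a minimal sketch using the standard `navigator.mediaDevices.getUserMedia` browser API could look like the following. `attachWebcam` is a hypothetical helper, not part of Human, and the constraints shown (front-facing camera, no audio) are only one reasonable choice.

```javascript
// Sketch: connect the user's webcam to a <video> element.
// `mediaDevices` is a parameter so the function can be exercised outside a browser;
// in a page it defaults to the standard navigator.mediaDevices object.
async function attachWebcam(video, mediaDevices = navigator.mediaDevices) {
  // Ask the browser for a video-only stream (this triggers a permission prompt)
  const stream = await mediaDevices.getUserMedia({
    video: { facingMode: 'user' },
    audio: false,
  });
  video.srcObject = stream; // link the stream to the <video> element
  await video.play(); // start playback so frames become available
  return stream;
}
```

Once the returned promise resolves, the same video element can be used as the input to `human.detect()`.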

Example simple app that uses Human to process video input and

draw output on screen using internal draw helper functions

    // create instance of human with simple configuration using default values
    const config = { backend: 'webgl' };
    const human = new Human(config);

    function detectVideo() {
      // select input HTMLVideoElement and output HTMLCanvasElement from page
      const inputVideo = document.getElementById('video-id');
      const outputCanvas = document.getElementById('canvas-id');
      // perform processing using default configuration
      human.detect(inputVideo).then((result) => {
        // result object will contain detected details
        // as well as the processed canvas itself
        // so let's first draw processed frame on canvas
        human.draw.canvas(result.canvas, outputCanvas);
        // then draw results on the same canvas
        human.draw.face(outputCanvas, result.face);
        human.draw.body(outputCanvas, result.body);
        human.draw.hand(outputCanvas, result.hand);
        human.draw.gesture(outputCanvas, result.gesture);
        // and loop immediately to the next frame
        requestAnimationFrame(detectVideo);
      });
    }

    detectVideo();

or using async/await:

    // create instance of human with simple configuration using default values
    const config = { backend: 'webgl' };
    const human = new Human(config);

    async function detectVideo() {
      const inputVideo = document.getElementById('video-id');
      const outputCanvas = document.getElementById('canvas-id');
      const result = await human.detect(inputVideo);
      human.draw.all(outputCanvas, result);
      requestAnimationFrame(detectVideo);
    }

    detectVideo();

Default models in Human library are:

- Face Detection: MediaPipe BlazeFace (Back version)

- Face Mesh: MediaPipe FaceMesh

- Face Description: HSE FaceRes

- Face Iris Analysis: MediaPipe Iris

- Emotion Detection: Oarriaga Emotion

- Body Analysis: PoseNet (AtomicBits version)

Note that alternative models are provided and can be enabled via configuration

For example, PoseNet model can be switched for BlazePose model depending on the use case

For more info, see Configuration Details and List of Models

Human library is written in TypeScript 4.2

Conforming to JavaScript ECMAScript version 2020 standard

Build target is JavaScript ECMAScript version 2018

For details see Wiki Pages and API Specification