This repository is the official PyTorch implementation of the paper "Combating Noisy Labels through Fostering Self- and Neighbor-Consistency".

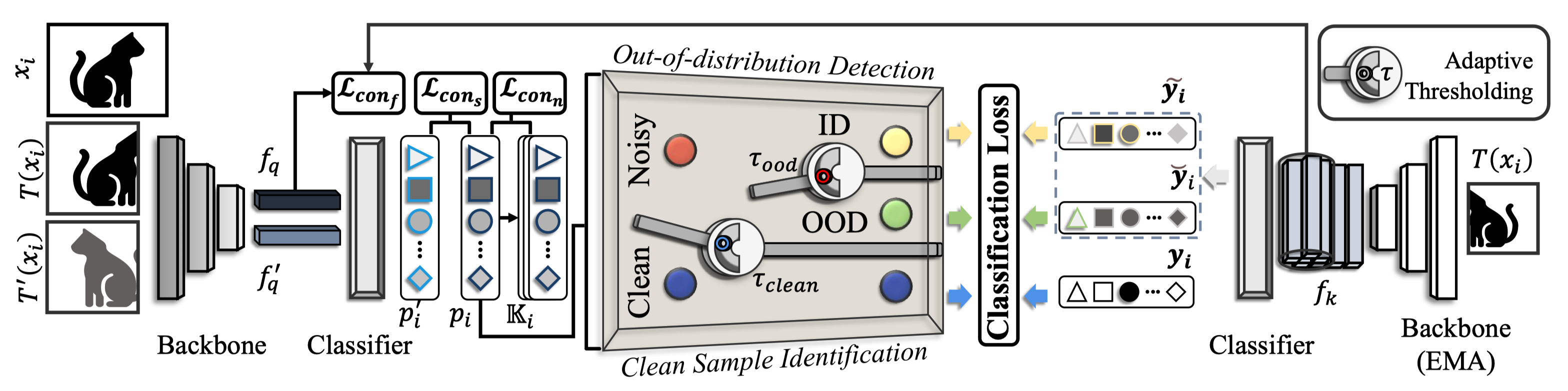

Label noise is pervasive in various real-world scenarios, posing challenges in supervised deep learning. Deep networks are vulnerable to such label-corrupted samples due to the memorization effect. Previous methods typically concentrate on identifying clean data for training. However, these methods often neglect imbalances in label noise across different mini-batches and devote insufficient attention to out-of-distribution noisy data. To this end, we propose a noise-robust method named Jo-SNC (Joint sample selection and model regularization based on Self- and Neighbor-Consistency). Specifically, we propose to employ the Jensen-Shannon divergence to measure the “likelihood” of a sample being clean or out-of-distribution. This process factors in the nearest neighbors of each sample to reinforce the reliability of clean sample identification. We design a self-adaptive, data-driven thresholding scheme to adjust per-class selection thresholds. While clean samples undergo conventional training, detected in-distribution and out-of-distribution noisy samples are trained following partial label learning and negative learning, respectively. Finally, we advance the model performance further by proposing a triplet consistency regularization that promotes self-prediction consistency, neighbor-prediction consistency, and feature consistency. Extensive experiments on various benchmark datasets and comprehensive ablation studies demonstrate the effectiveness and superiority of our approach over existing state-of-the-art methods.

The workflow of Jo-SNC.
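As a rough illustration of the Jensen-Shannon-based "likelihood" mentioned in the abstract, the sketch below scores how well a predicted class distribution agrees with a given label. This is not the repository's code: the function names are hypothetical, the mapping `1 - JS` is an assumption made here for illustration, and the nearest-neighbor aggregation and per-class thresholds described in the paper are omitted.

```python
import math


def js_divergence(p, q):
    """Jensen-Shannon divergence with base-2 logs, so the value lies in [0, 1]."""
    def kl(a, b):
        # Terms with a_i == 0 contribute nothing (0 * log 0 is taken as 0).
        return sum(ai * math.log2(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    # Mixture distribution; m_i > 0 wherever p_i > 0 or q_i > 0.
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def clean_likelihood(probs, label):
    """Assumed score: 1 - JS(prediction, one-hot label). Higher means more consistent."""
    one_hot = [1.0 if i == label else 0.0 for i in range(len(probs))]
    return 1.0 - js_divergence(probs, one_hot)
```

Under this assumption, a prediction that puts all mass on the given label scores 1.0, while one that puts all mass on a different class scores 0.0.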

- python 3.9

- torch == 1.12.1

- torchvision == 0.13.1

- CUDA 12.1

After creating a Python 3.9 virtual environment, run `pip install -r requirements.txt` to install all dependencies.
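After installation, a quick sanity check of the environment might look like the following. This is a minimal sketch, not part of the repository: `matches` and `check_environment` are hypothetical helpers, and the expected versions are simply copied from the list above.

```python
import sys

# Versions copied from the requirements listed in this README.
EXPECTED = {"python": "3.9", "torch": "1.12.1", "torchvision": "0.13.1"}


def matches(installed, expected):
    """True if `installed` begins with the dotted components of `expected`.

    A local build suffix such as '+cu113' is ignored.
    """
    core = installed.split("+")[0].split(".")
    want = expected.split(".")
    return core[:len(want)] == want


def check_environment():
    """Return {name: bool} for the interpreter and the two libraries."""
    python_version = "%d.%d" % sys.version_info[:2]
    report = {"python": matches(python_version, EXPECTED["python"])}
    for name in ("torch", "torchvision"):
        try:
            module = __import__(name)
            report[name] = matches(module.__version__, EXPECTED[name])
        except ImportError:
            # Package is not installed in the active environment.
            report[name] = False
    return report
```

Calling `check_environment()` inside the activated environment returns a dictionary that flags any mismatched or missing package.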

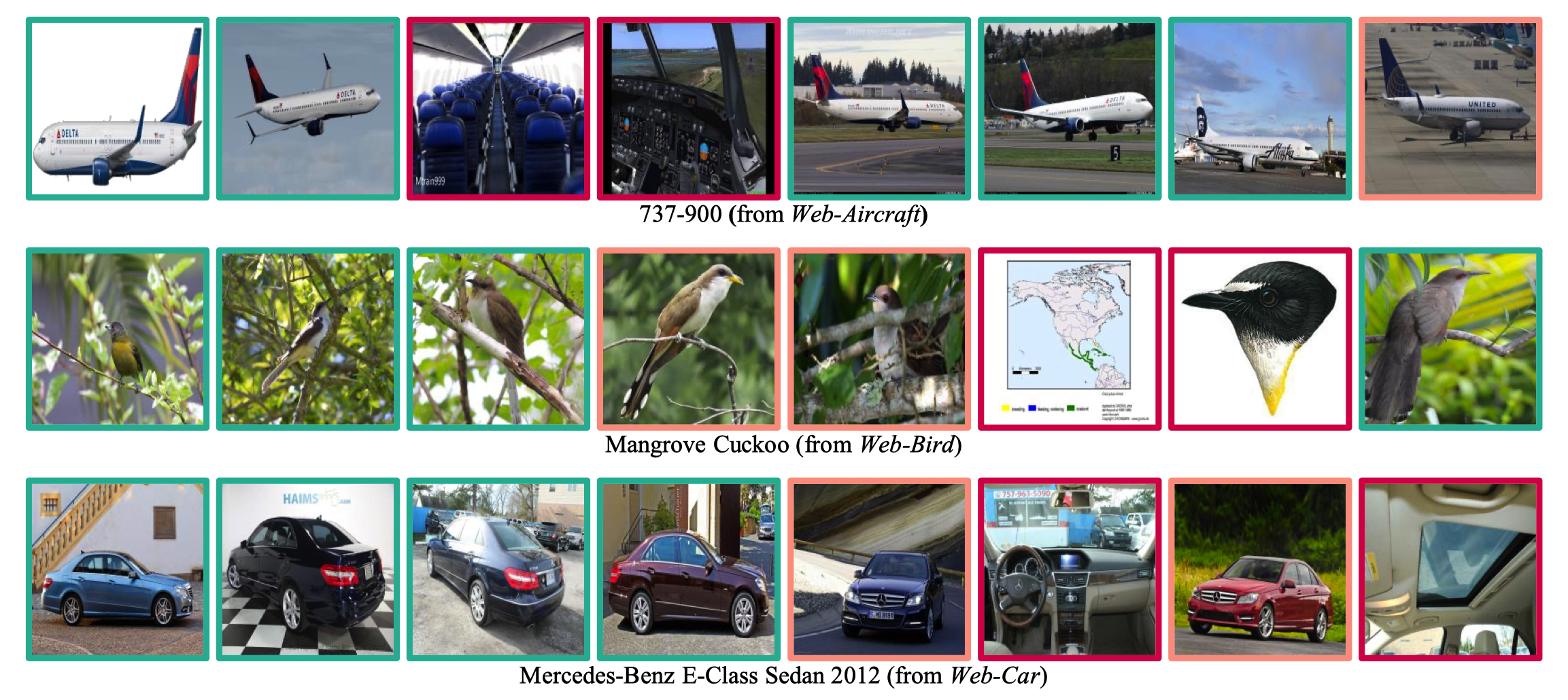

| Dataset | Backbone | Model |
|---|---|---|
| Web-Aircraft | ResNet50 | model |
| Web-Bird | ResNet50 | model |
| Web-Car | ResNet50 | model |
| Animal-10N | VGG19 | model |
| miniWebVision | ResNet50 | model |
| miniWebVision | Inception-ResNet-v2 | model |
| Food101N | ResNet50 | model |

- Create the `../datasets` folder

- Download the dataset into the `../datasets` folder

- Please make sure the project directory is organized as follows:

      <project_dir>
      |-- results
      |-- datasets
      |   |-- web-aircraft
      |   |-- cifar100
      |   |-- animal10n
      |   |-- ...
      |-- code
      |   |-- config
      |   |-- main.py
      |   |-- ...
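The layout above can be checked before training with a few lines of Python. The sketch below is an illustration only: `prepare_layout` and `list_datasets` are hypothetical helpers that are not part of the repository, and they assume the top-level folder names shown in the tree.

```python
from pathlib import Path

# Top-level entries taken from the directory tree in this README.
EXPECTED_DIRS = ("results", "datasets", "code")


def prepare_layout(project_dir):
    """Create the `datasets` folder if needed and report what is still missing.

    Returns the expected top-level folders that do not exist under project_dir.
    """
    root = Path(project_dir)
    (root / "datasets").mkdir(parents=True, exist_ok=True)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


def list_datasets(project_dir):
    """Sorted names of the dataset folders found under <project_dir>/datasets."""
    datasets = Path(project_dir) / "datasets"
    if not datasets.is_dir():
        return []
    return sorted(p.name for p in datasets.iterdir() if p.is_dir())
```

Running `prepare_layout` on the project directory and then `list_datasets` gives a quick view of which datasets have already been downloaded.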

- Prepare data

- Activate the virtual environment (e.g., conda) and run the training command with the specified `GPU_ID`, like the following:

      bash scripts/aircraft.sh ${GPU_ID}

Note: More training scripts are in the `scripts` directory.

- Prepare data

- Download trained models from the above model zoo.

- Activate the virtual environment (e.g., conda) and run the testing command with the specified `GPU_ID`, like the following:

      python demo.py --cfg config/aircraft.yaml --model-path web_aircraft.pth --gpu ${GPU_ID}
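If the testing command needs to be launched from another Python script, it can be wrapped as below. This is a hedged sketch: `build_demo_command` and `run_demo` are hypothetical helpers, only the flags shown in the command above are used, and the checkpoint is assumed to sit inside the code directory.

```python
import subprocess
import sys
from pathlib import Path


def build_demo_command(cfg, model_path, gpu_id):
    """Assemble the demo.py argument list using the flags shown in this README."""
    return [
        sys.executable, "demo.py",
        "--cfg", str(cfg),
        "--model-path", str(model_path),
        "--gpu", str(gpu_id),
    ]


def run_demo(cfg, model_path, gpu_id, code_dir="."):
    """Run demo.py from code_dir, failing early if the checkpoint is absent."""
    checkpoint = Path(code_dir) / model_path
    if not checkpoint.is_file():
        raise FileNotFoundError(f"trained model not found: {checkpoint}")
    # check=True raises CalledProcessError if demo.py exits with a non-zero status.
    subprocess.run(build_demo_command(cfg, model_path, gpu_id), cwd=code_dir, check=True)
```

For example, `run_demo("config/aircraft.yaml", "web_aircraft.pth", 0)` mirrors the command above with `GPU_ID` set to 0.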