Abdulla Jasem Ahmed Jaber Almansoori, Talal Abdullah Fadhl Algumaei, Muhammad Hamza Sharif

This repository contains the implementation of "Improving Latent Space of GANs". In this project, we combine three learning paradigms: generative learning, contrastive learning, and generative-contrastive learning. Our main objective is to train a GAN to learn disentangled representations. To that end, we propose two frameworks.

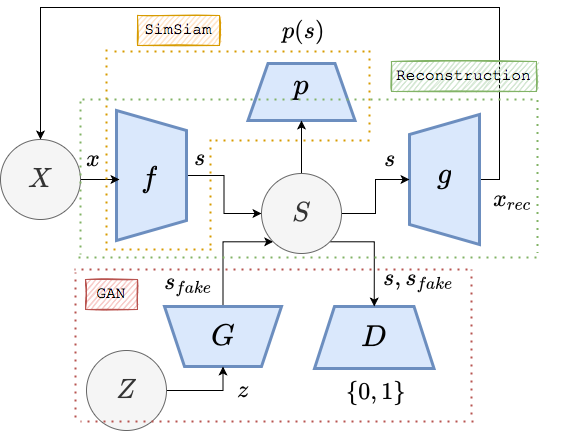

In the first proposed framework, we combined GANs, a SimSiam network, and an autoencoder. The architecture of the proposed framework is shown in the figure above.

The limitations we faced from this design made us rethink the framework, which led us to the second framework detailed below.

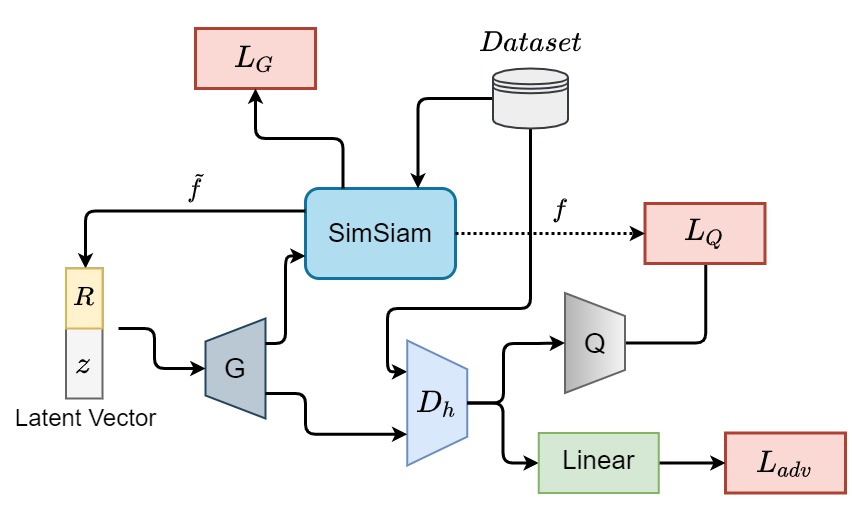

In the second proposed framework, we used an AC-GAN-style training algorithm to condition the generator on the representations from SimSiam. The model design is shown in the figure above.

The second framework performs closer to our expectations, so we ran most of our experiments with it.

- An Ubuntu-based machine with an NVIDIA GPU, or Google Colab, is recommended for running the training and evaluation script.

- Python 3.8.

- PyTorch 1.10.0 and the corresponding torchvision version.
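As a quick sanity check of the requirements listed above, the following minimal sketch reports the running Python version and the installed `torch` and `torchvision` versions. The helper name `check_environment` is hypothetical and not part of this repository; it only uses the standard library, so it runs even when PyTorch is missing.

```python
import sys
from importlib import metadata


def check_environment():
    """Report the Python version and the installed torch/torchvision versions.

    Hypothetical helper for illustration; a package that is not installed
    is reported as None instead of raising an error.
    """
    report = {"python": "{}.{}".format(*sys.version_info[:2])}
    for package in ("torch", "torchvision"):
        try:
            report[package] = metadata.version(package)
        except metadata.PackageNotFoundError:
            report[package] = None
    return report


if __name__ == "__main__":
    for name, version in check_environment().items():
        print(f"{name}: {version}")
```

Comparing the printed values against Python 3.8 and PyTorch 1.10.0 shows whether the environment matches the one we used.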

We recommend creating a conda environment when not using Google Colab. The installation steps are as follows:

$ conda create -n ConGAN python=3.8

$ conda activate ConGAN

$ pip install -r requirements.txt

Steps for training the model from scratch:

- Download the desired pretrained SimSiam model from this link.

- Specify the path to the pretrained model and the main directory in the "Header/Imports" section.

- Run the cells until the "Training/Args" section.

- Specify the arguments and hyperparameters for your experiment.

- Run the cells until "Training/Run".

- Define your training run. For example, you can save the model every 5 epochs, or you might want to increase some hyperparameter linearly every epoch.

- Run the experiment for the desired number of epochs.

- Check the generator's performance for a variety of representation sampling methods in the "Training/Results" section.

- Evaluate the performance of the generator by running the cells in the "GAN Metrics" section.
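The "define your training run" step can be sketched as follows. This is only an illustration of the two examples mentioned in the steps (saving every 5 epochs and increasing a hyperparameter linearly each epoch); the function names `linear_ramp`, `should_checkpoint`, and `plan_run` are hypothetical and do not come from the notebook, and epochs are assumed to be counted from 0.

```python
def linear_ramp(epoch, start, end, num_epochs):
    """Linearly interpolate from `start` (epoch 0) to `end` (last epoch)."""
    if num_epochs <= 1:
        return end
    # Clamp the epoch so out-of-range values stay within [start, end].
    frac = min(max(epoch, 0), num_epochs - 1) / (num_epochs - 1)
    return start + frac * (end - start)


def should_checkpoint(epoch, every=5):
    """True after every `every`-th epoch, with epochs counted from 0."""
    return (epoch + 1) % every == 0


def plan_run(num_epochs, start=0.0, end=1.0, every=5):
    """Return one (epoch, hyperparameter value, save flag) tuple per epoch."""
    return [
        (epoch, linear_ramp(epoch, start, end, num_epochs), should_checkpoint(epoch, every))
        for epoch in range(num_epochs)
    ]


if __name__ == "__main__":
    for epoch, value, save in plan_run(num_epochs=11):
        print(f"epoch {epoch:2d}  value={value:.2f}  save={save}")
```

In an actual run, the value returned for each epoch would be assigned to the chosen hyperparameter before training that epoch, and the save flag would trigger whatever checkpointing code the notebook uses.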

We provide links to download the model components for two experiment runs:

- Consistency coefficients are initially set to 0, raised to 0.1 after 30 epochs, and raised to 1.0 after another 30 epochs, followed by 30 more epochs of training (90 epochs in total).

- Consistency coefficients are set to 0 and the generator is not conditioned on the representation. In other words, we train the same architecture without conditioning.
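The schedule of the first run can be written as a piecewise-constant function of the epoch. The sketch below assumes epochs are counted from 0 and that each change takes effect at the start of epochs 30 and 60; the name `consistency_coefficient` is hypothetical and is not taken from the notebook.

```python
def consistency_coefficient(epoch):
    """Piecewise-constant schedule for run 1, with epochs counted from 0."""
    if epoch < 30:
        return 0.0  # first 30 epochs: no consistency term
    if epoch < 60:
        return 0.1  # next 30 epochs
    return 1.0      # final 30 epochs


# One coefficient per epoch for the full 90-epoch run.
schedule = [consistency_coefficient(epoch) for epoch in range(90)]

if __name__ == "__main__":
    for epoch in (0, 29, 30, 59, 60, 89):
        print(epoch, schedule[epoch])
```

The second run corresponds to a schedule that returns 0 for every epoch.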

We report the results and links for downloading the model components in the following table:

| Run | IS | FID | model.pth.tar | latent_transform.pth.tar | Q.pth.tar |
|---|---|---|---|---|---|
| 1 | 5.86 | 67.74 | link | link | link |
| 2 | 5.44 | 72.23 | link | link | link |
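To put the two rows in perspective, the short calculation below computes the difference between the runs from the table values only (higher IS is better, lower FID is better). The variable names are illustrative.

```python
# Values copied from the table above.
run1 = {"IS": 5.86, "FID": 67.74}  # conditioned, with consistency schedule
run2 = {"IS": 5.44, "FID": 72.23}  # unconditioned baseline

is_gain = run1["IS"] - run2["IS"]
fid_drop = run2["FID"] - run1["FID"]

is_gain_pct = 100 * is_gain / run2["IS"]
fid_drop_pct = 100 * fid_drop / run2["FID"]

if __name__ == "__main__":
    print(f"IS gain:  {is_gain:.2f} ({is_gain_pct:.1f}%)")
    print(f"FID drop: {fid_drop:.2f} ({fid_drop_pct:.1f}%)")
```

Relative to run 2, run 1 improves IS by 0.42 (about 7.7%) and lowers FID by 4.49 (about 6.2%).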

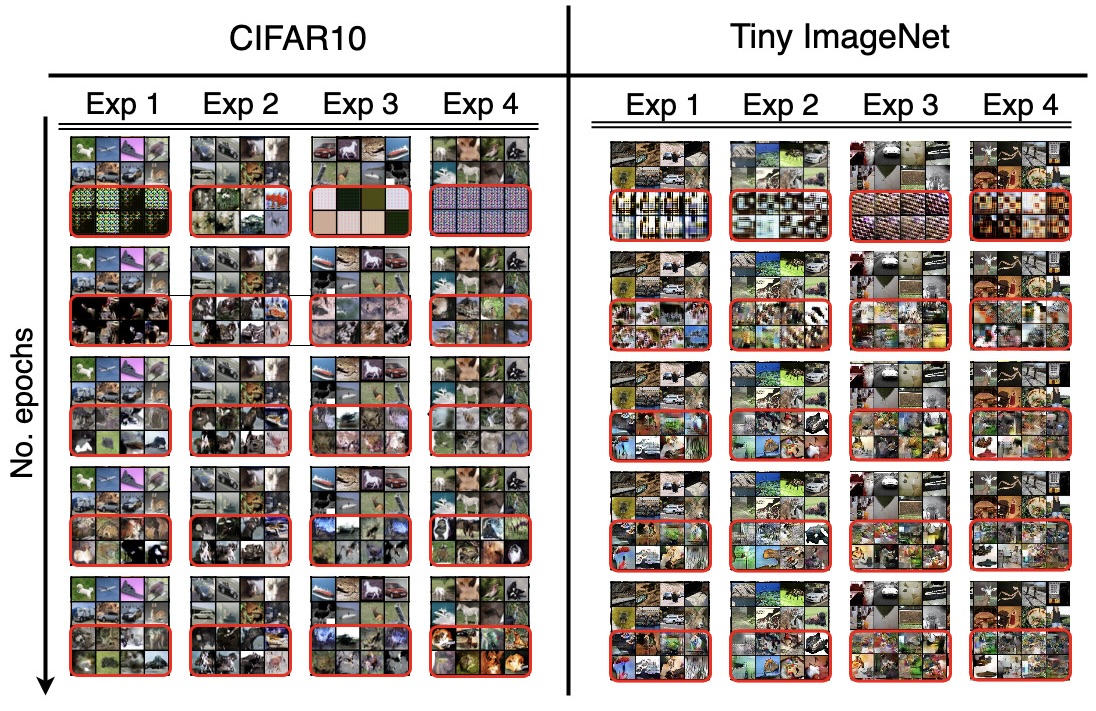

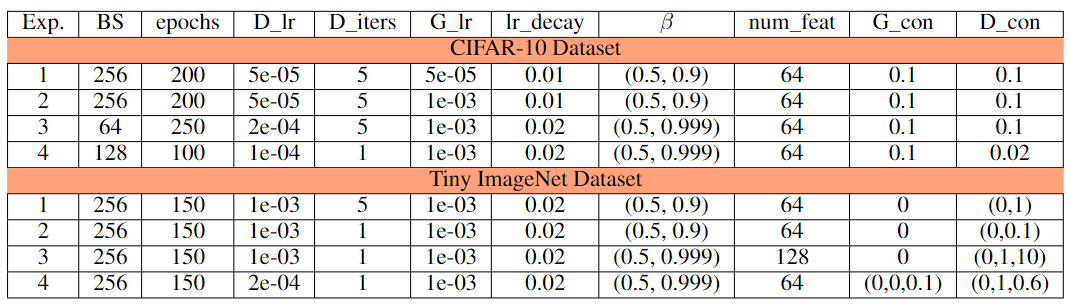

All experiments are performed on the CIFAR-10 and Tiny ImageNet datasets with different parameter settings.

Should you have any questions, please contact us at Abdulla.Almansoori@mbzuai.ac.ae, Talal.Algumaei@mbzuai.ac.ae, or Muhammad.Sharif@mbzuai.ac.ae.