This repository contains source code for abdominal multi-organ segmentation in computed tomography (CT). The technical paper that describes the method is titled Recurrent Feature Propagation and Edge Skip-Connections for Automatic Abdominal Organ Segmentation [arXiv].


| Dataset | Description | Release date |
|---|---|---|
| DenseVNet [website] | This dataset includes 90 CT images: 47 from the BTCV dataset and 43 from the TCIA dataset. Eight organ annotations and rib cage bounding boxes are provided. The annotated organs are the spleen, left kidney, gallbladder, esophagus, liver, stomach, pancreas, and duodenum. | 2018 |
| WORD [website] | WORD contains 150 CT images in total: 100 for training, 20 for validation, and 30 for testing. WORD provides annotations for 16 structures: the liver, spleen, left kidney, right kidney, stomach, gallbladder, esophagus, pancreas, duodenum, colon, intestine, adrenal, rectum, bladder, head of left femur, and head of right femur. | 2022 |

We use the following steps to preprocess the datasets.

- Align CT scans to the same orientation.

- Crop CT scans to the regions of interest (ROIs) if the rib cage bounding boxes are available.

- Resample CT scans to a size of 160×160×64 voxels.

- Clip voxel intensities to the range of [-250, 200] HU.

- Normalize voxel intensities to zero mean and unit variance.

- Split CT scans into 4 folds for cross-validation.

Note that the second step (cropping CT scans to ROIs) is optional, and the resampled size can be larger or smaller depending on the available GPU memory. With an input size of 160×160×64, the intermediate feature maps of our network occupy 10846 MB of GPU memory.
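The resampling, clipping, and normalization steps above can be sketched as follows. This is a minimal NumPy illustration, not the code in this repository: the function names `resample_nearest` and `preprocess` are hypothetical, and nearest-neighbour indexing is used as a simple stand-in for whatever interpolation the actual preprocessing applies. Orientation alignment, ROI cropping, and fold splitting are omitted.

```python
import numpy as np


def resample_nearest(volume, out_shape=(160, 160, 64)):
    """Resample a 3D volume to out_shape with nearest-neighbour indexing.

    Assumption: a stand-in for the interpolation used in the real pipeline.
    """
    idx = [
        np.minimum((np.arange(n_out) * n_in / n_out).astype(int), n_in - 1)
        for n_in, n_out in zip(volume.shape, out_shape)
    ]
    return volume[np.ix_(*idx)]


def preprocess(volume_hu, out_shape=(160, 160, 64), hu_range=(-250.0, 200.0)):
    """Resample, clip to the HU window, then normalize to zero mean and unit variance."""
    vol = resample_nearest(volume_hu.astype(np.float32), out_shape)
    vol = np.clip(vol, hu_range[0], hu_range[1])
    std = vol.std()
    # Guard against a constant volume, where the standard deviation is zero.
    return (vol - vol.mean()) / (std if std > 0 else 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ct = rng.uniform(-1000, 1000, size=(200, 180, 90))  # synthetic stand-in for a CT scan
    out = preprocess(ct)
    print(out.shape)
```

For label volumes, nearest-neighbour resampling is the usual choice because it keeps label values intact; image volumes are often resampled with a smoother interpolation instead.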

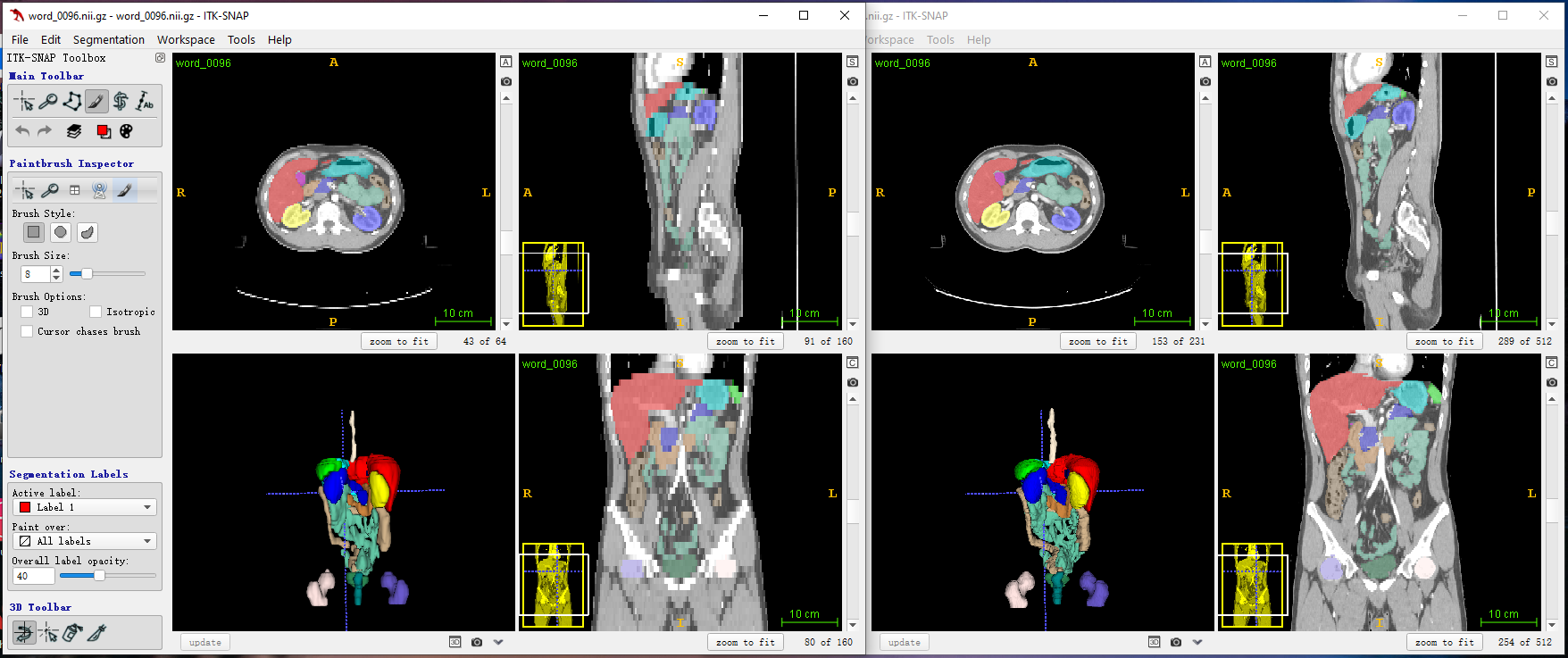

Case 96 in the WORD training set. Left panel: resampled image. Right panel: original image.

We train segmentation models on the DenseVNet dataset and use the WORD dataset as an external validation dataset to assess generalizability.

We store data in the parent directory /data/yzf/dataset/organct. Shown below is the tree structure of the DenseVNet data folder. The edge, preproc_img, and preproc_label folders contain edge maps, images, and ground truth labels, respectively. The cv_high_resolution.json file records the cross-validation splits.

```
/data/yzf/dataset/organct
├── cv_high_resolution.json
├── btcv_high_resolution
│   ├── edge
│   ├── preproc_img
│   └── preproc_label
└── tcia_high_resolution
    ├── edge
    ├── preproc_img
    └── preproc_label
```
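A 4-fold cross-validation split of the kind recorded in the JSON file could be generated along these lines. This is only a sketch: the JSON layout (`fold_k` keys with `train` and `val` lists), the case identifiers, and the function name `make_folds` are assumptions for illustration, and the actual schema of cv_high_resolution.json may differ.

```python
import json
import random


def make_folds(case_ids, num_folds=4, seed=0):
    """Shuffle case IDs and assign each case to exactly one validation fold.

    The returned layout is hypothetical, not the repository's actual schema.
    """
    ids = sorted(case_ids)
    random.Random(seed).shuffle(ids)
    parts = [ids[k::num_folds] for k in range(num_folds)]
    folds = {}
    for k in range(num_folds):
        train = sorted(c for j, part in enumerate(parts) if j != k for c in part)
        folds[f"fold_{k}"] = {"train": train, "val": sorted(parts[k])}
    return folds


if __name__ == "__main__":
    cases = [f"case_{i:03d}" for i in range(90)]  # placeholder IDs for 90 scans
    print(json.dumps(make_folds(cases)["fold_0"]["val"][:3]))
```

Fixing the seed keeps the split reproducible, so every training and inference run that reads the file sees the same folds.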

Shown below is the tree structure of the WORD data folder. The imagesVal and labelsVal folders contain the original images and labels. The preprocessedval folder contains the preprocessed data.

```
/data/yzf/dataset/organct/external
├── imagesVal
├── labelsVal
└── preprocessedval
    ├── image
    └── label
```

We implement two models in this codebase: the baseline model and our proposed model. train.py trains the baseline model. The following command runs the training program.

```bash
python train.py --gpu=0 --fold=0 --num_class=9 --cv_json=/path/to/DenseVNet/cross_validation.json
```

train_fullscheme.py trains our proposed model. The command to run it is as follows.

```bash
python train_fullscheme.py --gpu=0 --fold=0 --num_class=9 --cv_json=/path/to/DenseVNet/cross_validation.json
```

This section describes our implementation of the external validation experiments. We initialize the model parameters from our previously trained model and fine-tune them on the WORD training set. We then run inference on the WORD validation set. finetune.py and finetune_fullscheme.py fine-tune the UNet model and our proposed model, respectively. The commands are as follows.

```bash
python finetune.py --gpu=0 --net=unet_l9_ds --num_epoch=20 --pretrainedckp=/path/to/pretrained/checkpoint.pth.tar

python finetune_fullscheme.py --gpu=0 --net=unet_deep_sup_full_scheme --num_epoch=20 --pretrainedckp=/path/to/pretrained/checkpoint.pth.tar
```

Three metrics are used to quantify segmentation predictions: the Dice similarity coefficient (DSC), the average symmetric surface distance (ASSD), and the 95th percentile of the Hausdorff distance (HD95). inference.py and inference_external.py predict validation segmentation volumes and compute evaluation metrics on the DenseVNet dataset and the external WORD dataset, respectively.

```bash
python inference.py --gpu=0 --fold=0 --net=unet_l9_ds --num_class=9 --cv_json='/path/to/DenseVNet/cross_validation.json'

python inference_external.py --gpu=0 --fold=0 --net=unet_l9_ds --num_class=9 --cv_json='/path/to/WORD/cross_validation.json'
```
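As a reference for the first metric, the DSC between a predicted region P and a ground truth region T is 2|P ∩ T| / (|P| + |T|). The sketch below computes it per organ label with NumPy. It is an illustration rather than the code in inference.py: the function name `dice_per_class` is hypothetical, and it assumes that label 0 is background, so that `--num_class=9` corresponds to eight organs plus background.

```python
import numpy as np


def dice_per_class(pred, target, num_class=9):
    """Return the DSC for each foreground label 1..num_class-1.

    Assumption: label 0 is background. A label absent from both volumes yields NaN.
    """
    scores = {}
    for c in range(1, num_class):
        p = pred == c
        t = target == c
        denom = int(p.sum()) + int(t.sum())
        if denom == 0:
            scores[c] = float("nan")
        else:
            scores[c] = 2.0 * int(np.logical_and(p, t).sum()) / denom
    return scores


if __name__ == "__main__":
    pred = np.zeros((4, 4, 4), dtype=int)
    target = np.zeros((4, 4, 4), dtype=int)
    pred[:2] = 1
    target[:2] = 1
    print(dice_per_class(pred, target, num_class=3))
```

Returning NaN for absent labels lets the caller exclude them from averages (for example with `np.nanmean`) instead of counting them as perfect or failed predictions.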

./models/utils_graphical_model.py defines classes that construct 2D recurrent neural networks based on directed acyclic graphs.
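To give an intuition for a 2D recurrent network on a directed acyclic graph, the sketch below performs a single "southeast" sweep over a feature map, where each position's hidden state depends on its own input and on the hidden states of its north and west neighbours. This is a generic NumPy illustration under simplifying assumptions, not the classes defined in utils_graphical_model.py: the function name `dag_rnn_southeast`, the two-neighbour connectivity, the shared recurrent weight, and the tanh nonlinearity are all hypothetical choices.

```python
import numpy as np


def dag_rnn_southeast(x, U, W, b):
    """One southeast sweep of a DAG-structured recurrent layer.

    x: (H, W_, C_in) input features; U: (C_in, C_h) input weights;
    W: (C_h, C_h) recurrent weights; b: (C_h,) bias. Returns (H, W_, C_h).
    """
    height, width, _ = x.shape
    h = np.zeros((height, width, U.shape[1]))
    for i in range(height):
        for j in range(width):
            # Sum the hidden states of the predecessors in the DAG (north and west).
            pred = np.zeros(U.shape[1])
            if i > 0:
                pred += h[i - 1, j]
            if j > 0:
                pred += h[i, j - 1]
            h[i, j] = np.tanh(x[i, j] @ U + pred @ W + b)
    return h


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(5, 6, 3))
    out = dag_rnn_southeast(feats, rng.normal(size=(3, 4)), rng.normal(size=(4, 4)), np.zeros(4))
    print(out.shape)
```

A single sweep only propagates context toward the bottom-right corner. Covering all directions typically requires repeating the sweep over differently oriented DAGs (for example by flipping the feature map) and combining the resulting hidden states.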

```bibtex
@article{yang2022recurrent,
  title={Recurrent Feature Propagation and Edge Skip-Connections for Automatic Abdominal Organ Segmentation},
  author={Yang, Zefan and Lin, Di and Wang, Yi},
  journal={arXiv preprint arXiv:2201.00317},
  year={2022}
}
```