You'll need Docker Engine and docker-compose.

Clone this repo

Add a .env file at the root of the repo with the following variables:

CLUSTER_NAME=the_name_of_your_cluster

ADMIN_NAME=your_name

ADMIN_PASSWORD=def@ultP@ssw0rd

# Whether to install Python (needed to run Hadoop streaming)
INSTALL_PYTHON=true

INSTALL_SQOOP=true
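Before starting the stack, it can help to confirm that the .env file defines all five variables listed above. The following is a minimal sketch; `check_env` is a hypothetical helper that is not part of this repo, and it only checks that each key has a non-empty value, not that the value is valid.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of this repo): report any variable from the
# list above that is missing from the given .env file (default: ./.env).
check_env() {
  local file="${1:-.env}" missing=0 key
  for key in CLUSTER_NAME ADMIN_NAME ADMIN_PASSWORD INSTALL_PYTHON INSTALL_SQOOP; do
    # A key counts as defined if a line starts with "KEY=" and has a non-empty value.
    if ! grep -Eq "^${key}=.+" "$file" 2>/dev/null; then
      echo "missing: ${key}"
      missing=1
    fi
  done
  # Exit status 0 when every key is present, 1 otherwise.
  return "$missing"
}
```

One possible use is to gate the startup on it, for example `check_env .env && docker-compose up -d --build`.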

Start the stack:

docker-compose up -d --build

Stop the stack:

docker-compose down

View the logs:

docker-compose logs -t -f

Optionally, you can also create a user and import the data stored in supports/data into HDFS:

chmod +x setup.sh

./setup.sh

Access HDFS through the name node:

sudo docker exec -it namenode bash

- Hadoop streaming jar:

/opt/hadoop-3.1.1/share/hadoop/tools/lib/hadoop-streaming-3.1.1.jar
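Hadoop streaming runs any executable as mapper or reducer, feeding it records on stdin and collecting its stdout. A common way to try a job before submitting it is to mimic the map, sort, and reduce stages with a local pipe. The sketch below does that for a word count; the function names, the script names `mapper.sh` and `reducer.sh`, and the HDFS paths in the commented command are all hypothetical placeholders, not files shipped with this repo.

```shell
#!/usr/bin/env bash
# Mapper: split input on whitespace and emit one word per line.
mapper() { tr -s '[:space:]' '\n' | grep -v '^$'; }

# Reducer: expects input sorted by word; prints "word<TAB>count".
reducer() { uniq -c | awk '{ print $2 "\t" $1 }'; }

# Local dry run that mimics map -> shuffle/sort -> reduce.
local_run() { mapper | LC_ALL=C sort | reducer; }

# Assumed submission on the cluster, with the two functions saved as
# executable scripts (hypothetical names and paths):
#   hadoop jar /opt/hadoop-3.1.1/share/hadoop/tools/lib/hadoop-streaming-3.1.1.jar \
#     -files mapper.sh,reducer.sh \
#     -input /user/your_name/input \
#     -output /user/your_name/output \
#     -mapper mapper.sh \
#     -reducer reducer.sh
```

For example, `printf 'b a\na b a\n' | local_run` prints `a` with a count of 3 and `b` with a count of 2, one tab-separated pair per line.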

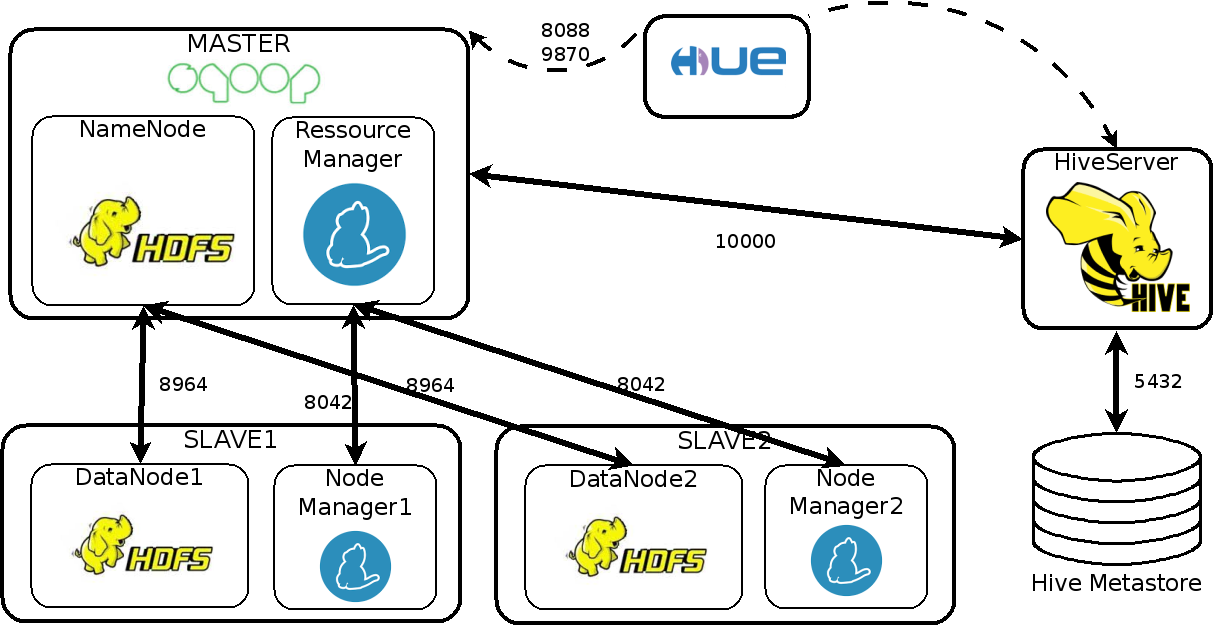

Most sources were gathered from big-data-europe's repos (main repos, base of the docker-compose). Parts added: hue, hiveserver2.