This project is an example of deploying ML models using Python 3 with Docker containers and unit testing. This README explains the project through a tutorial that should help the reader learn how to deploy ML models in the cloud. The project was developed in Python 3.6.8 using PyCharm.

To deploy an ML model, we essentially need two components: a trained ML model and an API to communicate with this model. These two components can then be packaged in a Docker container and later deployed in the cloud. Therefore, the process of deploying an ML model can be explained in these 4 steps:

- Developing an ML model.

- Designing an API for the ML model (with unit testing).

- Building a Docker image that includes both the model and the API.

- Uploading the Docker image to the cloud for deployment.

For now, the selected ML model is deployed on Google Cloud Platform (GCP). In the future, other cloud platforms such as Microsoft Azure and Amazon Web Services (AWS) will be included.

To get started with this project, first clone the repository into your working directory using git:

`git clone https://github.com/zakgrin/Deploy_ML_Model.git`

Note: if you find this project useful in any way, please do not forget to give it a star.

The project contains the following files and folders:

- dnn_model: tensorflow model folder.

- images: documentation images.

- input: input data to test the model.

- .dockerignore: files to ignore in docker image building.

- .gitignore: files to ignore in git.

- app.py: API application (main python file).

- Dockerfile: docker file to build a docker image.

- notebook.ipynb: a notebook with a short example of model loading and prediction.

- README.md: this documentation.

- requirements.txt: python venv requirements.

- requirements_docker.txt: docker image requirements.

- test_app.py: unit testing for the API application.

Developing an ML model is not the focus of this tutorial, so a pretrained model was selected. In this project, a TensorFlow DNN model that predicts fuel efficiency (Auto MPG) was used. It is based on the TensorFlow tutorial "Basic regression: Predict fuel efficiency", which shows how to get started with TensorFlow by developing a basic deep neural network (DNN) regression model. The same procedure can be followed to train TensorFlow models and save them using `model.save('model')`.

Before deploying or using any ML model, it is strongly recommended to understand the model's input and output data. For our simple model, `notebook.ipynb` provides the minimum understanding needed to perform predictions.
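Since the API passes this input to the model, it helps to see it as one ordered row of features. The sketch below assumes the feature order of the example `/predict` URL used later in this README (the one-hot-encoded Auto MPG columns from the TensorFlow tutorial); `to_model_row` is a hypothetical helper, and `notebook.ipynb` remains the reference for the order the saved model actually expects.

```python
# Assumed feature order, taken from the example /predict URL in this README.
# Check notebook.ipynb for the order the saved model really expects.
FEATURES = [
    "Cylinders", "Displacement", "Horsepower", "Weight",
    "Acceleration", "Model Year", "Europe", "Japan", "USA",
]
# One-hot-encoded origin columns: exactly one of them should be 1.0.
ORIGINS = ("Europe", "Japan", "USA")


def to_model_row(sample: dict) -> list:
    """Return one input row (a list of floats) in FEATURES order.

    Raises ValueError if a feature is missing or if the origin
    columns are not a valid one-hot encoding.
    """
    missing = [name for name in FEATURES if name not in sample]
    if missing:
        raise ValueError(f"missing features: {missing}")
    row = [float(sample[name]) for name in FEATURES]
    origin_flags = sorted(float(sample[name]) for name in ORIGINS)
    if origin_flags != [0.0, 0.0, 1.0]:
        raise ValueError("exactly one of Europe/Japan/USA must be 1.0")
    return row
```

For the README's example car (8 cylinders, model year 70, built in the USA), the row is `[8.0, 390.0, 190.0, 3850.0, 8.5, 70.0, 0.0, 0.0, 1.0]`. A Keras model usually expects a 2-D batch, so a single row would be passed as, for example, `np.array([row])`.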

Deploying a model is the focus of this documentation, as will be explained in the following sections. This process starts with the API design (Step 2) and ends with the model deployment in the cloud (Step 4). The same steps can be repeated to maintain or improve the model (Step 5).

To deploy a model, we need to establish a way to communicate with it using an API, as shown in `app.py`. This file contains a simple Flask application with a RESTful API and a single entry point. Since we are deploying an ML model, the entry point is called `predict`.

The `predict` entry point handles two HTTP request methods: GET and POST. GET returns a text response with HTTP status code 200 (OK) to confirm communication with the model. POST sends the input to the model and returns the prediction as output.
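The GET/POST contract described above can be illustrated without Flask. The sketch below uses only Python's standard library `http.server`, so it is a stand-in and not the project's `app.py`: `predict_mpg` is a hypothetical placeholder for the loaded TensorFlow model, and the GET text and the JSON response shape are assumptions.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlsplit

FEATURES = ["Cylinders", "Displacement", "Horsepower", "Weight",
            "Acceleration", "Model Year", "Europe", "Japan", "USA"]


def predict_mpg(row):
    """Hypothetical placeholder for model.predict on the loaded model."""
    return 0.0


class PredictHandler(BaseHTTPRequestHandler):
    def _send(self, status, body, content_type="text/plain; charset=utf-8"):
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        # GET only confirms that the API is reachable: text body, HTTP 200.
        if urlsplit(self.path).path != "/predict":
            self._send(404, "not found")
            return
        self._send(200, "The /predict endpoint is up.")

    def do_POST(self):
        # Drain any request body so the connection closes cleanly.
        length = int(self.headers.get("Content-Length") or 0)
        if length:
            self.rfile.read(length)
        parts = urlsplit(self.path)
        if parts.path != "/predict":
            self._send(404, "not found")
            return
        # The features arrive in the query string, as in the example URL.
        params = parse_qs(parts.query)
        try:
            row = [float(params[name][0]) for name in FEATURES]
        except (KeyError, ValueError) as err:
            self._send(400, f"bad or missing feature: {err}")
            return
        self._send(200, json.dumps({"prediction": predict_mpg(row)}),
                   "application/json")
```

To serve it on the README's local address, run `HTTPServer(("127.0.0.1", 8080), PredictHandler).serve_forever()`. In Flask, query-string parameters are available through `request.args`, so the same features can be read there.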

Now that we have both a model and an API, we are ready to run and test them. First, we test the API directly in Python to make sure that everything is in place before moving on to Docker (Step 3) and finally to GCP (Step 4).

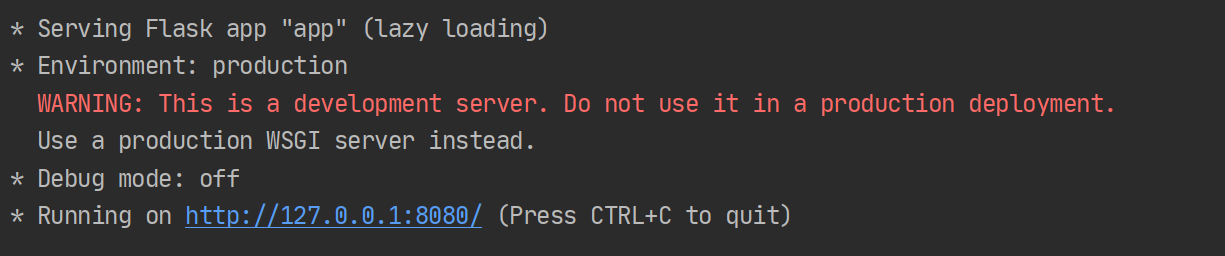

If you want to run the API application on your local machine using Python, navigate to the project folder and create a new Python venv. After that, you can follow these steps:

- Install the requirements: `pip install -r requirements.txt`

- Run the Python API app: `python app.py`

- To test the `/predict` entry point, open the URL (ending with `/predict`) in a web browser: http://127.0.0.1:8080/predict (this shows that the API can successfully respond to HTTP GET requests).

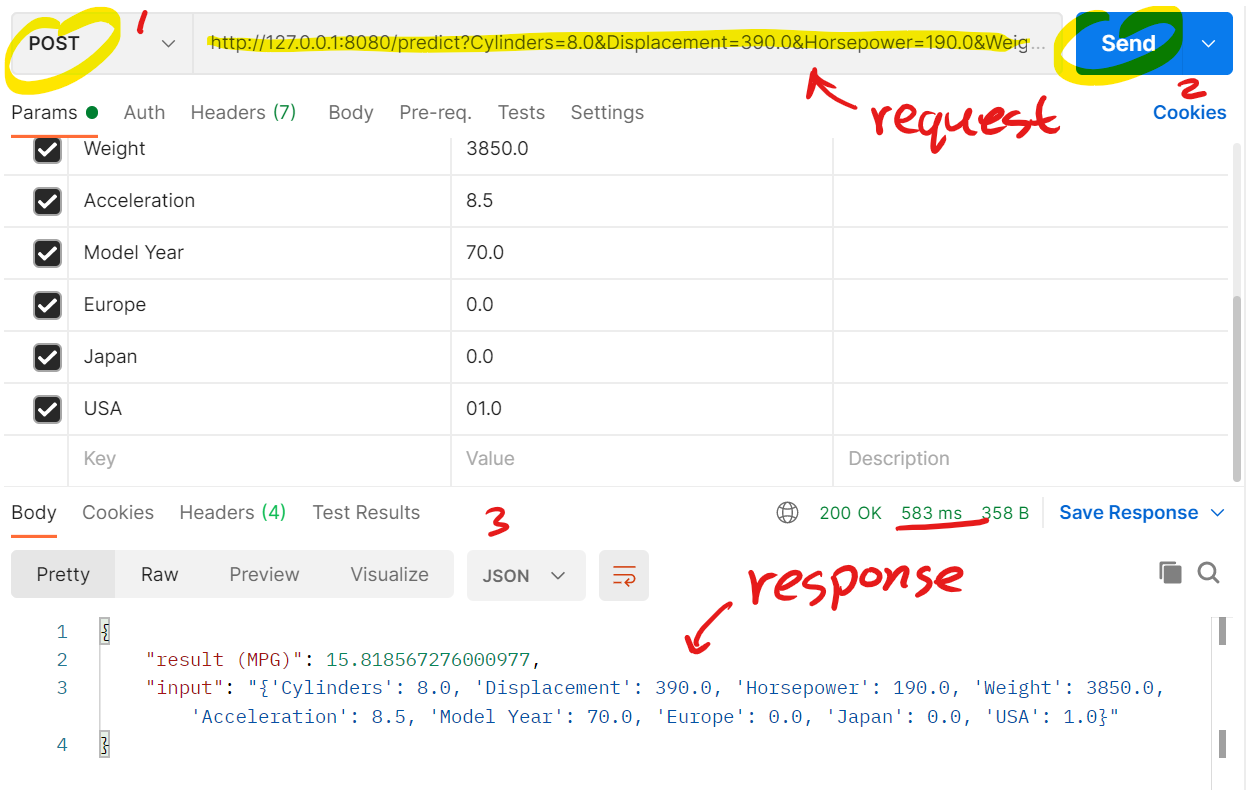

This API is meant to provide predictions in response to HTTP POST requests. The POST request should have the following format, as shown in this example:

- `http://127.0.0.1:8080/predict?Cylinders=8.0&Displacement=390.0&Horsepower=190.0&Weight=3850.0&Acceleration=8.5&Model Year=70.0&Europe=0.0&Japan=0.0&USA=1.0`

- To send a POST request, you can use Postman or the `requests` module in Python. The following image shows a POST request sent with Postman and the API response (it took about 583 ms for the API to respond):

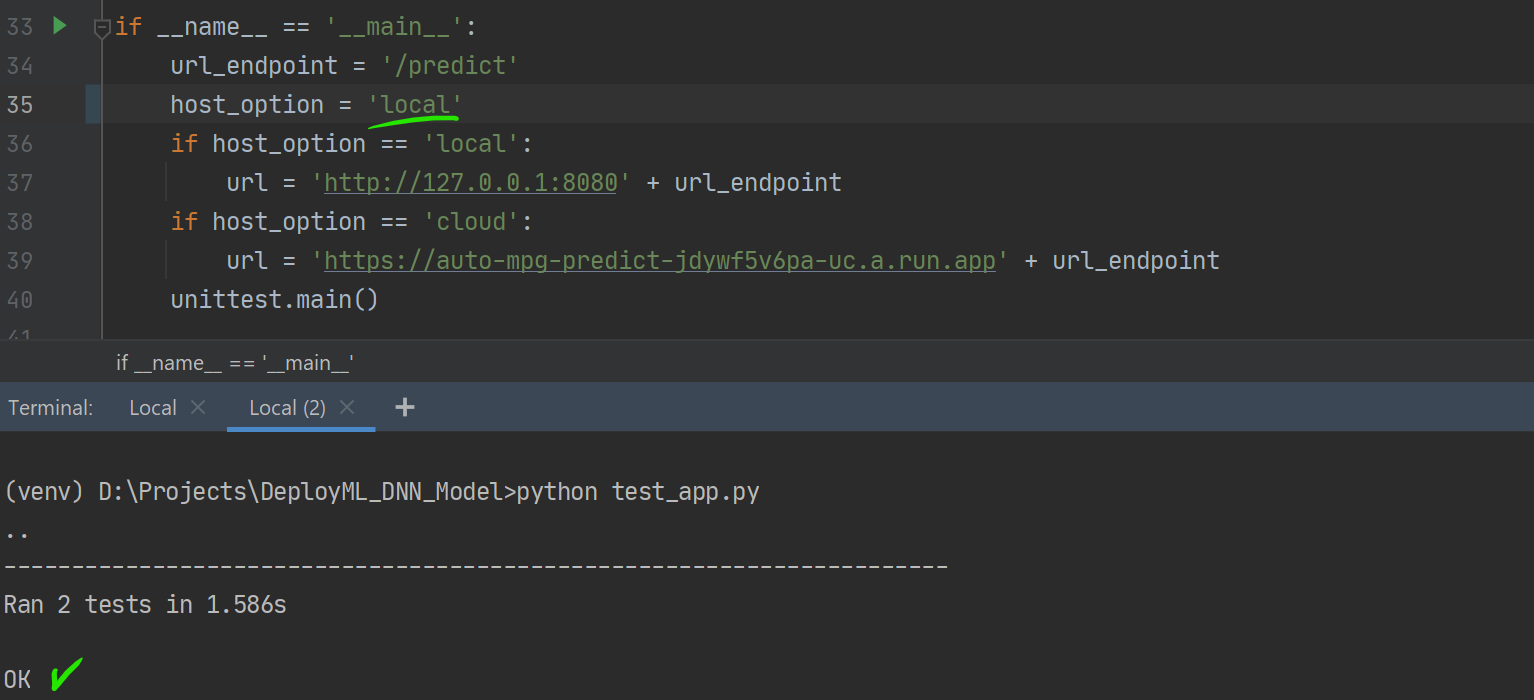
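The same POST request can also be sent from a Python script. This sketch uses the standard library's `urllib` instead of `requests` so that it has no dependencies (with `requests`, `requests.post(BASE_URL, params=EXAMPLE_CAR)` encodes the query in the same way). It also times the round trip, comparable to the response time Postman reports. The helper names are hypothetical, and `BASE_URL` assumes the local address used in this README.

```python
import time
import urllib.request
from urllib.parse import urlencode

BASE_URL = "http://127.0.0.1:8080/predict"  # local address used in this README

EXAMPLE_CAR = {
    "Cylinders": 8.0, "Displacement": 390.0, "Horsepower": 190.0,
    "Weight": 3850.0, "Acceleration": 8.5, "Model Year": 70.0,
    "Europe": 0.0, "Japan": 0.0, "USA": 1.0,
}


def build_predict_url(base_url: str, features: dict) -> str:
    """Return base_url with the features URL-encoded as the query string.

    urlencode turns the space in 'Model Year' into '+', which is decoded
    back to a space when the server parses the query string.
    """
    return f"{base_url}?{urlencode(features)}"


def post_prediction(base_url: str, features: dict, timeout: float = 10.0):
    """POST the features; return (response text, elapsed milliseconds)."""
    request = urllib.request.Request(
        build_predict_url(base_url, features), data=b"", method="POST")
    start = time.perf_counter()
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = response.read().decode("utf-8")
    elapsed_ms = (time.perf_counter() - start) * 1000
    return body, elapsed_ms
```

While `app.py` is running, `body, ms = post_prediction(BASE_URL, EXAMPLE_CAR)` returns the response text and the elapsed time in milliseconds.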

The unit tests for this API are based on the `requests` module in Python. To run the API unit test:

- Check `test_app.py` and make sure that `host_option = 'local'`.

- Run the test using `python test_app.py`.

- Make sure that you keep `app.py` running to be able to test it! (A passing test shows that the API responded as expected to HTTP GET and POST requests.)

- To see the unit testing program, read `test_app.py`.

It is important to replicate the API in a Docker container, which will allow us to deploy it in the cloud. To confirm that the Docker container reproduces the expected behaviour, it should pass the same test that we ran directly with Python. For this reason, `test_app.py` was designed to test both cases using `local` as the host option. Note that the container uses the same URL and port number as when you run the application directly with Python.

You can use the `Dockerfile` to build a Docker image with the `docker build` command. The following commands were used:

- `docker build -t auto-mpg-docker .`: to build the Docker image.

- `docker images` or `docker image ls -a`: to show the current Docker images.

- `docker rmi auto-mpg-docker` or `docker image rm auto-mpg-docker`: to remove a Docker image (you can use the first few characters of the image ID instead of the name).

- `docker run -p 8080:8080 --name predict -d auto-mpg-docker`: to run the Docker image as a container named `predict`, using the same port (8080) on the host and in the container.

- `docker ps -a` or `docker container ls -a`: to show all current containers.

- `docker stop predict` or `docker container stop predict`: to stop the container.

- `docker start predict` or `docker container start predict`: to start the container.

- `docker rm predict` or `docker container rm predict`: to remove the container.

After building the image and running the container under the name `predict`, we can run `test_app.py` with Python as we did before. Make sure that `host_option = 'local'` before you run the test. To confirm that the model runs as expected within the container, `python test_app.py` should also report an OK test result. Now that our container is ready, we can deploy it in the cloud.
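`docker run -d` returns as soon as the container starts, so the model may still be loading when the test begins. A small polling helper like the one below can wait for the `/predict` GET endpoint before `python test_app.py` is run. It is a hypothetical helper, and the default timeouts and polling interval are assumptions to tune.

```python
import http.client
import time
import urllib.request


def wait_until_ready(url: str, timeout: float = 60.0, interval: float = 1.0,
                     request_timeout: float = 5.0) -> bool:
    """Poll `url` with GET until it returns HTTP 200.

    Returns True as soon as the API answers, or False once `timeout`
    seconds have passed without a 200 response.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=request_timeout) as response:
                if response.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Not ready yet: connection refused or reset, timeout, or an HTTP
            # error (urllib.error.URLError and HTTPError subclass OSError).
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_until_ready("http://127.0.0.1:8080/predict")` returns `True` once the container answers, or `False` if it does not answer within about a minute.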

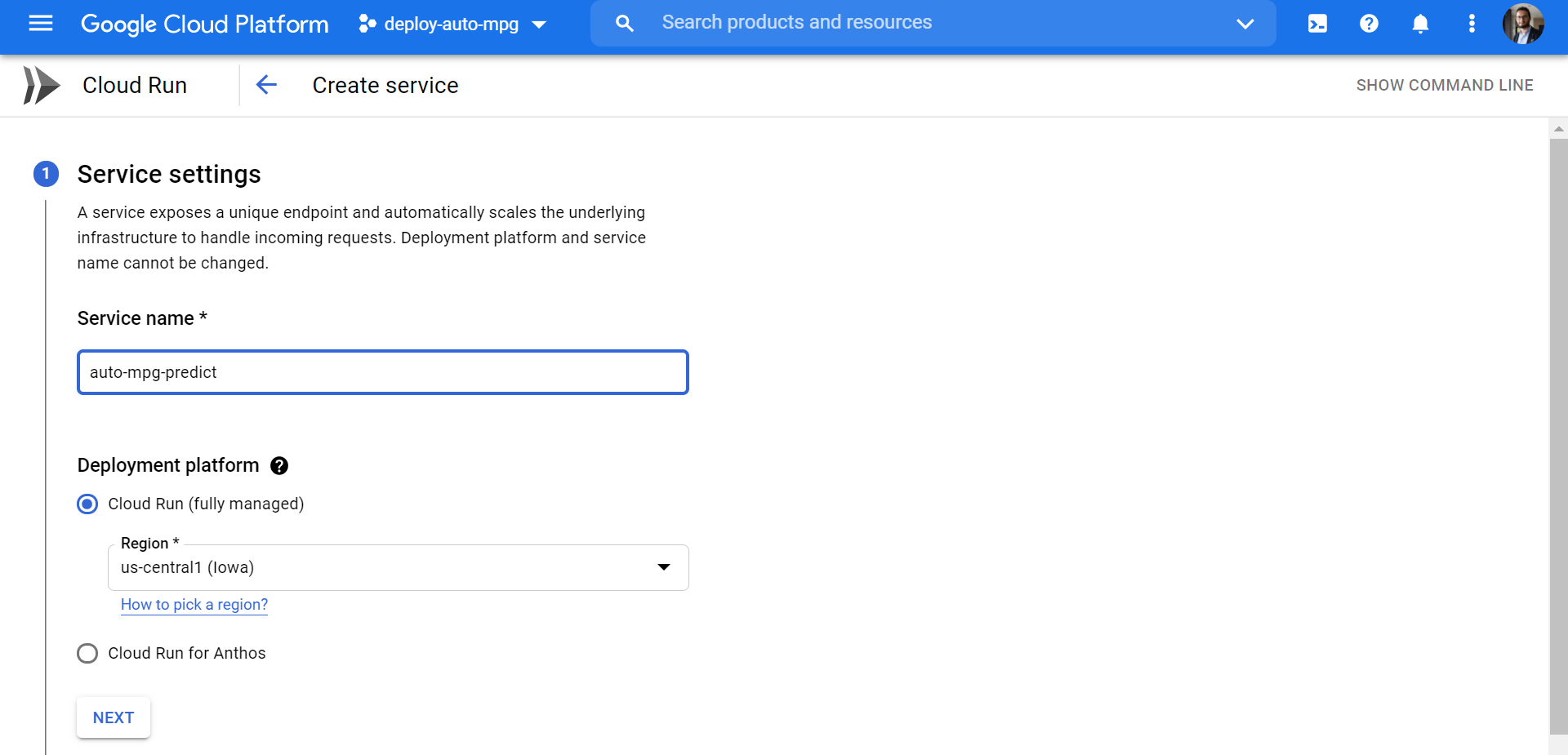

To use GCP, a Google account is needed to create a new project. The following 5 steps show the process from creating a new project to running the model in GCP and performing a unit test from a local machine.

- Creating a new project in GCP:

  - Open the GCP Console.

  - Create a new project with the following name: `deploy-auto-mpg`.

  - Enable API for the project in GCP.

- Google Cloud SDK setup:

  - Install the GCP SDK and then run the Google Cloud SDK Shell. Follow the instructions to authenticate your Google account and select your GCP project.

  - You can also add a Docker configuration based on the region (or the registry name).

  - This configuration is stored in `C:\Users\<UserName>\.docker\config.json` and can be configured with `gcloud auth configure-docker`.

  - It is recommended to include the registry name; otherwise all GCP registries will be configured for Docker.

  - To configure a single registry, pass its hostname, for example `gcloud auth configure-docker gcr.io` (the European registry is `eu.gcr.io`). The available registries appear in the `credHelpers` section of the file:

    `{ "credHelpers": { "gcr.io": "gcloud", "us.gcr.io": "gcloud", "eu.gcr.io": "gcloud", "asia.gcr.io": "gcloud", "staging-k8s.gcr.io": "gcloud", "marketplace.gcr.io": "gcloud" } }`
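To check which registries `gcloud auth configure-docker` has registered, the `credHelpers` section of `config.json` can be read directly. A minimal sketch, assuming the default location `~/.docker/config.json` (which on Windows resolves to `C:\Users\<UserName>\.docker\config.json`); `gcloud_registries` is a hypothetical helper.

```python
import json
from pathlib import Path

# Assumed default location of the Docker client configuration.
DEFAULT_CONFIG = Path.home() / ".docker" / "config.json"


def gcloud_registries(config_path: Path = DEFAULT_CONFIG) -> list:
    """Return the sorted registry hostnames that use the 'gcloud' helper.

    Returns an empty list if the config file does not exist yet.
    """
    if not config_path.exists():
        return []
    config = json.loads(config_path.read_text(encoding="utf-8"))
    helpers = config.get("credHelpers", {})
    return sorted(host for host, helper in helpers.items() if helper == "gcloud")
```

If `gcr.io` is missing from `gcloud_registries()`, running `gcloud auth configure-docker gcr.io` adds it.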

Uploading Docker Image to GCP:

- Use the following command in Google Cloud SDK Shell at the project directory were Dockerfile is located:

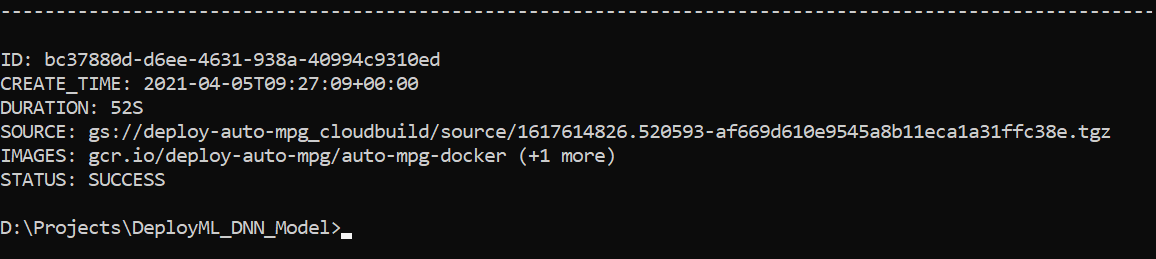

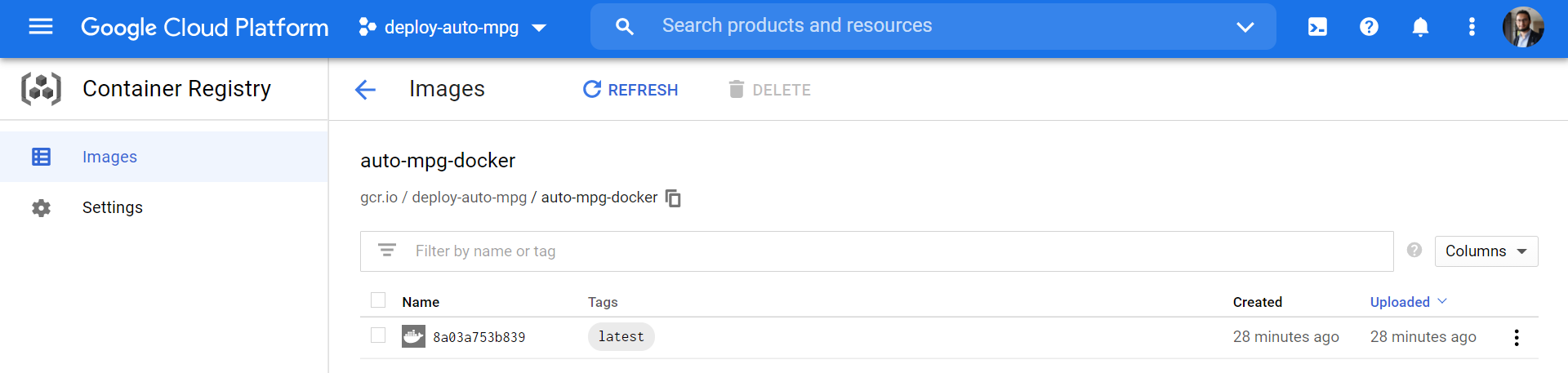

gcloud builds submit --tag gcr.io/deploy-auto-mpg/auto-mpg-docker. This command will build a docker Image and submit it togcr.ioregistry underdeploy-auto-mpgproject and using theauto-mpg-dockerdocker container. - After a successfully running the previous command, the following message should appear:

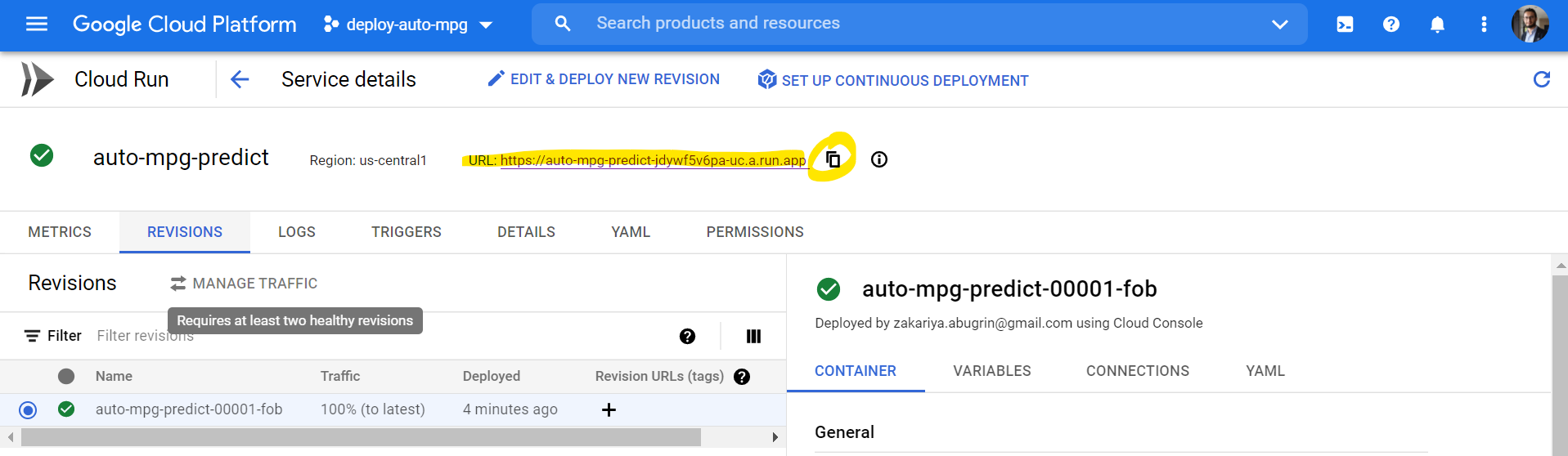

  - Go to the GCP Console to see the new Docker image under Container Registry:

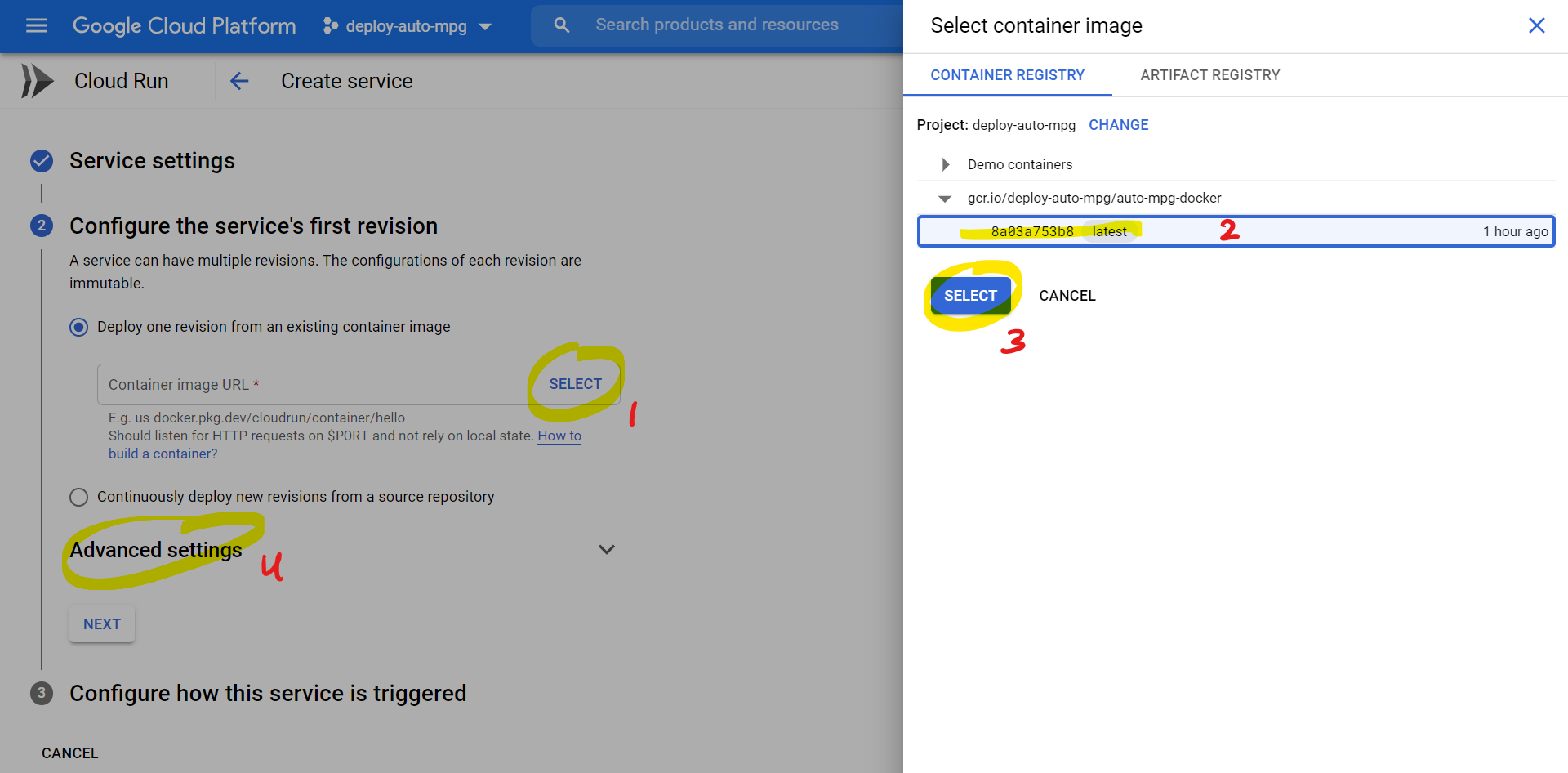
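The `--tag` value in the upload command follows the pattern `HOST/PROJECT-ID/IMAGE`. The middle part is the GCP project ID, which can differ from the project name (for example, when `deploy-auto-mpg` is already taken as an ID), so it is worth building the tag from the ID shown in the console. A sketch with hypothetical helpers; the name checks are simplified assumptions, not Google's full naming rules.

```python
import re

# Regional gcr.io hosts from the credHelpers example in this README.
REGISTRY_HOSTS = {"gcr.io", "us.gcr.io", "eu.gcr.io", "asia.gcr.io"}


def gcr_image_name(project_id: str, image: str, host: str = "gcr.io") -> str:
    """Return HOST/PROJECT-ID/IMAGE after some simplified sanity checks."""
    if host not in REGISTRY_HOSTS:
        raise ValueError(f"unexpected registry host: {host}")
    # Simplified check: lower-case letters and digits separated by hyphens.
    for part in (project_id, image):
        if not re.fullmatch(r"[a-z0-9]+(?:-[a-z0-9]+)*", part):
            raise ValueError(f"unexpected name component: {part!r}")
    return f"{host}/{project_id}/{image}"


def build_submit_command(project_id: str, image: str, host: str = "gcr.io") -> list:
    """Argument list for `gcloud builds submit --tag ...`, to be run from
    the directory that contains the Dockerfile."""
    return ["gcloud", "builds", "submit", "--tag",
            gcr_image_name(project_id, image, host)]
```

`print(" ".join(build_submit_command("deploy-auto-mpg", "auto-mpg-docker")))` prints the command used in this README, ready to paste into the Google Cloud SDK Shell.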

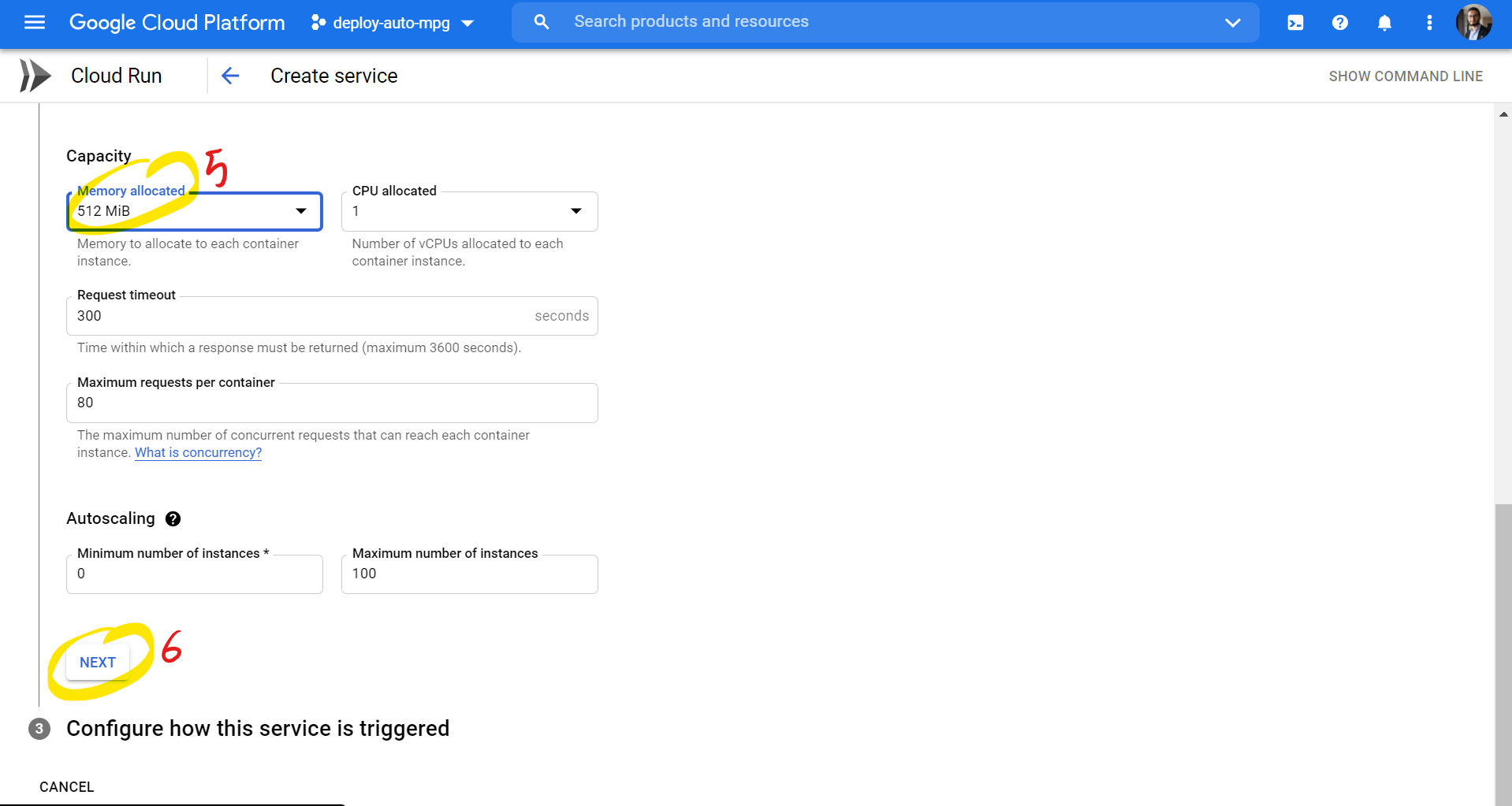

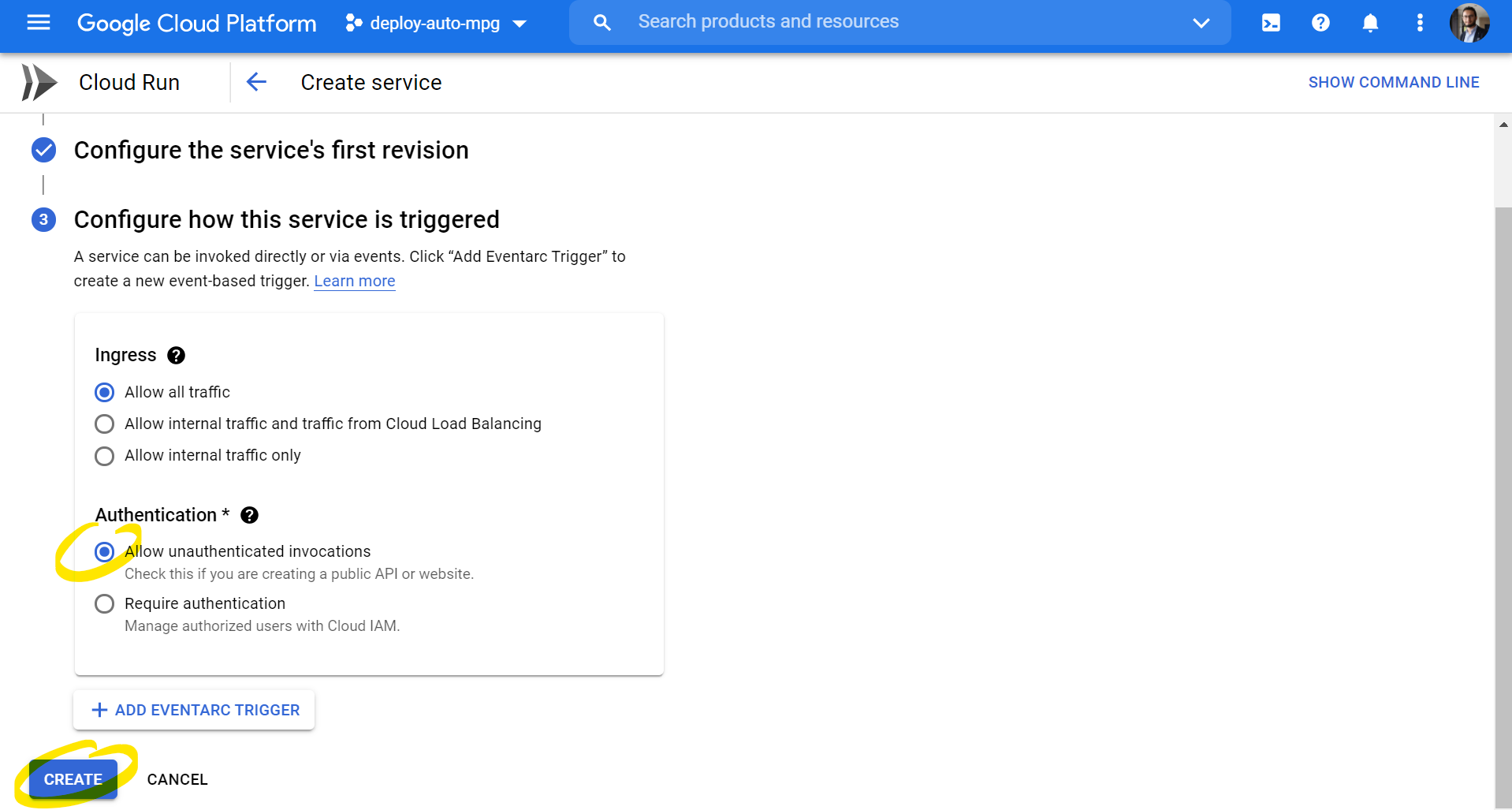

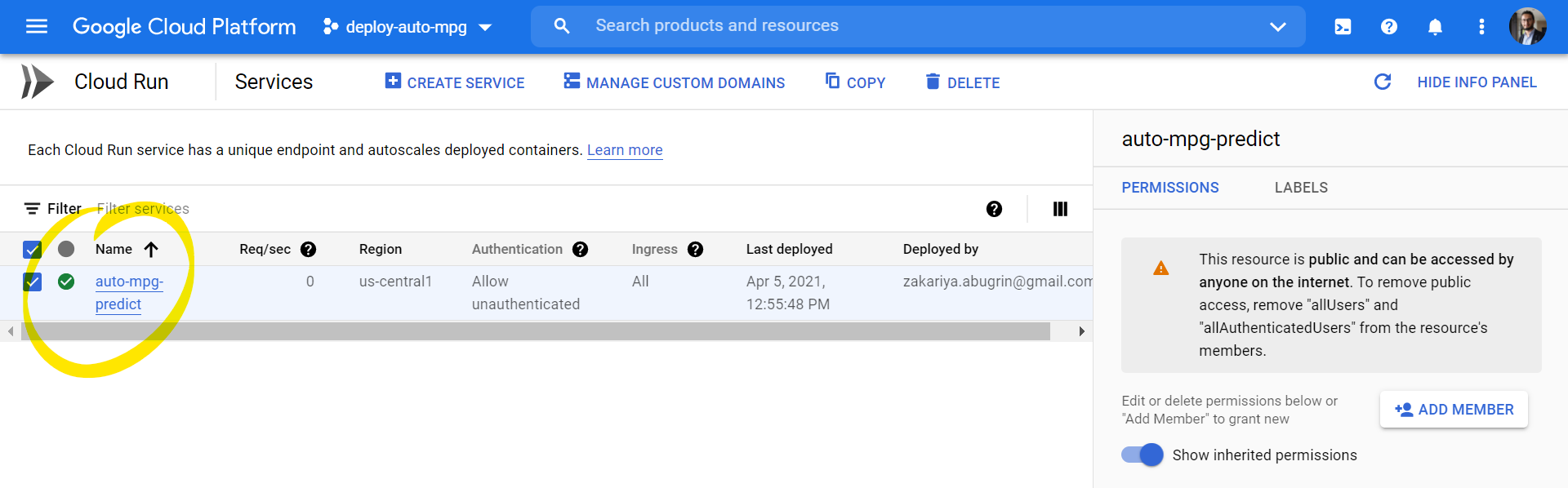

- Creating a service in GCP:

- Testing the model:

  - To test the GET request, copy the service URL and append `/predict` (a successful GET request confirms that the deployed API responds).

  - You can also use the unit test `test_app.py` to test the model, but you need to update `url` and set `host_option = 'cloud'` (a passing test confirms that the model works in GCP).

Planned additions:

- Add an AWS example.

- Add an Azure example.
