Official PyTorch implementation of "Robust Online Tracking with Meta-Updater" (IEEE TPAMI).

This work extends and improves our preliminary work published at CVPR 2020, "High-Performance Long-Term Tracking with Meta-Updater" (Oral, Best Paper Nomination). We also build on the sample-level optimization strategy from another of our works, published at ACM MM 2020, "Online Filtering Training Samples for Robust Visual Tracking".

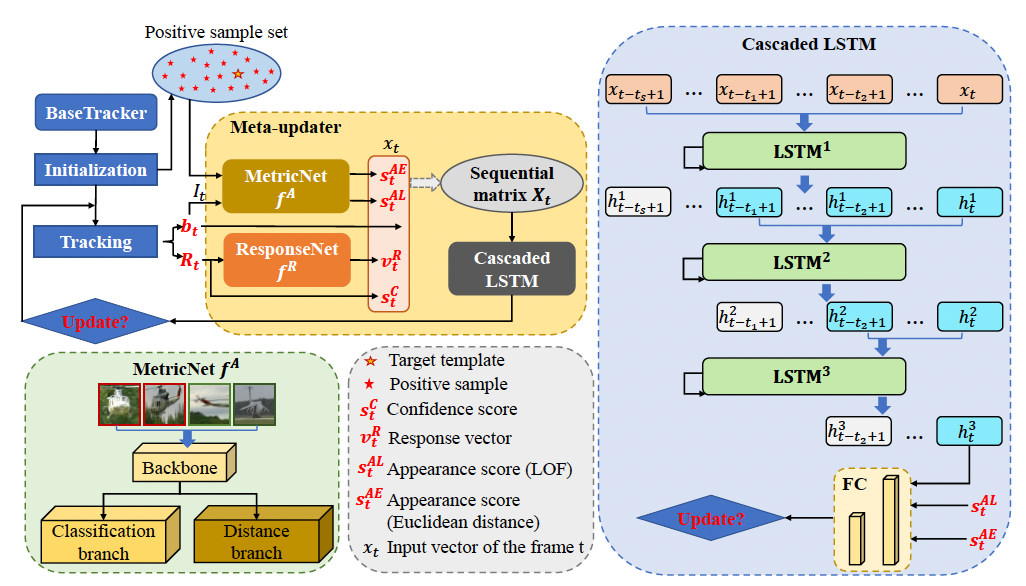

In this work, we propose an upgraded meta-updater (MU) that strengthens the appearance information in two ways. First, the local outlier factor is introduced to enrich the representation of appearance information. Second, we redesign the network to strengthen the role of appearance cues in guiding sample classification. Furthermore, sample optimization strategies are introduced for particle-based trackers (e.g., MDNet and RTMDNet) to further improve their performance.
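The local outlier factor (LOF) scores how isolated a sample is relative to its neighbours. The NumPy sketch below computes the LOF of a query vector against a set of reference vectors using the standard definition (k-distance, reachability distance, local reachability density). Which features the MU actually feeds into LOF is not specified here, so `history` (e.g. past target features) and `query` (the current one) are hypothetical inputs, and `k = 5` is an assumed default.

```python
import numpy as np


def lof_score(history: np.ndarray, query: np.ndarray, k: int = 5) -> float:
    """Local outlier factor of `query` (shape [d]) w.r.t. the rows of `history` (shape [n, d])."""
    if history.shape[0] <= k:
        raise ValueError("need more than k reference samples")
    # Pairwise distances among reference samples; inf on the diagonal excludes each point itself.
    d_ref = np.linalg.norm(history[:, None, :] - history[None, :, :], axis=-1)
    np.fill_diagonal(d_ref, np.inf)
    nn_ref = np.argsort(d_ref, axis=1)[:, :k]                            # k nearest neighbours of each reference
    k_dist = np.take_along_axis(d_ref, nn_ref[:, -1:], axis=1)[:, 0]     # k-distance of each reference
    # Local reachability density (lrd) of each reference sample.
    reach_ref = np.maximum(k_dist[nn_ref], np.take_along_axis(d_ref, nn_ref, axis=1))
    lrd_ref = 1.0 / (reach_ref.mean(axis=1) + 1e-12)
    # Same quantities for the query, using its k nearest reference samples.
    d_q = np.linalg.norm(history - query, axis=1)
    nn_q = np.argsort(d_q)[:k]
    reach_q = np.maximum(k_dist[nn_q], d_q[nn_q])
    lrd_q = 1.0 / (reach_q.mean() + 1e-12)
    # LOF = average lrd of the neighbours divided by the lrd of the query.
    return float(lrd_ref[nn_q].mean() / lrd_q)
```

An LOF near 1 means the query lies in a region about as dense as its neighbours; values clearly above 1 indicate that the query is an outlier with respect to the reference set.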

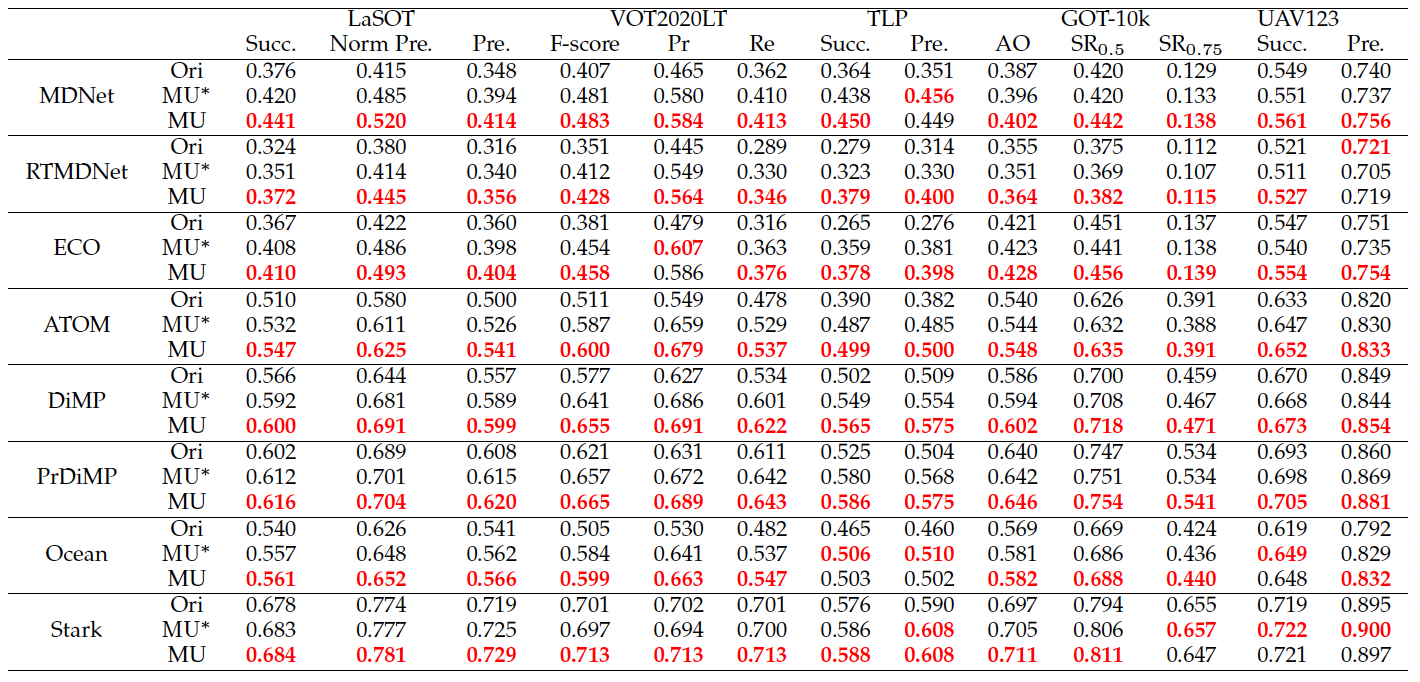

The proposed module can easily be embedded into other online-update trackers to make their online updates more accurate. To demonstrate its generalization ability, we integrate the upgraded meta-updater (MU) and its original version (MU*) into eight different short-term trackers with online updates: a correlation filter-based method (ECO), particle-based methods (MDNet and RTMDNet), representative discriminative methods (ATOM, DiMP, and PrDiMP), a Siamese-based method (Ocean), and a Transformer-based method (Stark). We evaluate these trackers on four long-term tracking benchmarks (VOT2020LT, OxUvALT, TLP, and LaSOT) and two short-term tracking benchmarks (GOT-10k and UAV123) to demonstrate the effectiveness of the proposed meta-updater.
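To illustrate what embedding the module into an online-update tracker means, here is a minimal sketch in which a meta-updater only decides whether the baseline tracker's own update runs. The `track`/`update` method names, the `(frame, box)` scoring signature, and the 0.5 threshold are hypothetical; the actual meta-updater consumes a temporal sequence of cues about the tracking state (including the appearance cues described above), and each tracker in this repository has its own interface.

```python
from typing import Any, Callable, Sequence

Box = Sequence[float]  # assumed [x, y, w, h]


class MetaUpdaterWrapper:
    """Gate a tracker's online update with a meta-updater score (illustrative sketch).

    `tracker` is any object with hypothetical `track(frame) -> box` and
    `update(frame, box)` methods; `meta_updater(frame, box)` returns a score
    in [0, 1] describing how safe it is to update on the current result.
    """

    def __init__(self, tracker: Any, meta_updater: Callable[[Any, Box], float],
                 threshold: float = 0.5) -> None:
        self.tracker = tracker
        self.meta_updater = meta_updater
        self.threshold = threshold

    def step(self, frame: Any) -> Box:
        box = self.tracker.track(frame)          # the baseline tracker runs unchanged
        score = self.meta_updater(frame, box)    # judge the reliability of this result
        if score >= self.threshold:
            self.tracker.update(frame, box)      # update only on trusted results
        return box
```

Keeping the decision outside the tracker is what makes such a module tracker-agnostic: the baseline's tracking and update code stay untouched, and only the call to its update step is gated.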

- Models:

Place the models in the corresponding locations of the project, following the folder structure in the link below.

[Baidu Yun] (extraction code: a1ca)

- Results:

[Baidu Yun] (extraction code: i1pw)

- Python 3.7

- CUDA 10.2

- Ubuntu 18.04

conda create -n MU python=3.7

source activate MU

cd path/to/XXX_MU  # e.g. DiMP_MU

pip install -r requirements.txt

(For other settings, refer to the official code of the corresponding baseline tracker.)
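Before running a tracker, it can help to confirm that the environment matches the tested setup (Python 3.7, CUDA 10.2). The script below is a minimal sketch, not part of the repository; the name `check_environment` is hypothetical. Note that it checks the CUDA version PyTorch was built with (`torch.version.cuda`), which is not necessarily the same as the system CUDA toolkit.

```python
import sys


def check_environment(python=(3, 7), cuda="10.2"):
    """Compare the running setup with the tested one; return a list of mismatches."""
    problems = []
    found = tuple(sys.version_info[:2])
    if found != tuple(python):
        problems.append(f"Python {found[0]}.{found[1]} found, {python[0]}.{python[1]} expected")
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
        return problems
    # torch.version.cuda is the CUDA version PyTorch was built with (None for CPU-only builds).
    if torch.version.cuda != cuda:
        problems.append(f"PyTorch built with CUDA {torch.version.cuda}, {cuda} expected")
    elif not torch.cuda.is_available():
        problems.append("PyTorch has CUDA support, but no GPU is visible")
    return problems


if __name__ == "__main__":
    for message in check_environment() or ["Environment matches the tested setup."]:
        print(message)
```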

- Edit the paths in "local_path.py" to point to your local directories.

- Place the models in the corresponding locations of the project, following the folder structure of the models link above.

- Test the tracker:

cd XXX_MU

python test_tracker.py

To run the original meta-updater (MU*), change the model built in the function decorated with @model_constructor in "ltr/models/tracking/tcNet.py":

model = tclstm_fusion()  # for the upgraded meta-updater (MU)

model = tclstm()  # for the original meta-updater (MU*)

- Evaluate results

python evaluate_results.py
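The exact metrics computed by evaluate_results.py are not described here. As a reference point, benchmarks such as LaSOT commonly report the success (AUC) score: the fraction of frames whose IoU with the ground truth exceeds a threshold, averaged over thresholds in [0, 1]. The NumPy sketch below assumes boxes in [x, y, w, h] format and the common 21-threshold grid; official benchmark toolkits may differ in details such as the threshold grid or the handling of frames where the target is absent.

```python
import numpy as np


def iou_xywh(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Per-frame IoU of boxes given as [x, y, w, h] rows."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / np.maximum(union, 1e-12)  # guard against zero-area boxes


def success_auc(pred: np.ndarray, gt: np.ndarray, num_thresholds: int = 21) -> float:
    """Area under the success curve: mean success rate over IoU thresholds in [0, 1]."""
    ious = iou_xywh(pred, gt)
    thresholds = np.linspace(0.0, 1.0, num_thresholds)
    success = [(ious > t).mean() for t in thresholds]
    return float(np.mean(success))
```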

- Run the baseline tracker on the LaSOT dataset and record all of its results (bounding boxes, response maps, ...) as training data for the meta-updater. You can modify test_tracker.py as follows:

p = p_config()

p.tracker = 'Dimp'

p.name = p.tracker

p.save_training_data = True

eval_tracking('lasot', p=p, mode='all')

- In "ltr/tcopt.py", set tcopts['lstm_train_dir'] and tcopts['train_data_dir'] as follows:

tcopts['lstm_train_dir'] = './models/Dimp_MU'  # save path for the trained models

tcopts['train_data_dir'] = '../results/Dimp/lasot/train_data'  # directory of the training data

- Run ltr/run_training.py to train the upgraded meta-updater.
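The two tcopts entries above are given for DiMP. For another baseline, the same layout presumably applies with the tracker name swapped in. The helper below is a hypothetical sketch (not part of the repository) that derives both paths from the tracker name, assuming the directory pattern of the DiMP example, and reports whether the recorded training data exists before training is launched.

```python
import os


def training_paths(tracker: str, dataset: str = "lasot") -> dict:
    """Hypothetical helper mirroring the DiMP example in ltr/tcopt.py."""
    return {
        # save path of the trained meta-updater models
        "lstm_train_dir": f"./models/{tracker}_MU",
        # baseline results recorded with p.save_training_data = True
        "train_data_dir": f"../results/{tracker}/{dataset}/train_data",
    }


def missing_dirs(paths: dict, keys=("train_data_dir",)) -> list:
    """Return the keys whose directories do not exist yet."""
    return [key for key in keys if not os.path.isdir(paths[key])]
```

For example, `training_paths('Dimp')` reproduces the two DiMP values shown above, and an empty result from `missing_dirs(...)` indicates that the baseline results have been recorded before ltr/run_training.py is started. If tcopts is a plain dict, the values could be applied with `tcopts.update(training_paths('Dimp'))`; this is an assumption about tcopt.py.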

If you have any questions about installing the environment or running the tracker, please contact me (zj982853200@mail.dlut.edu.cn).

- For Stark_MU, we set "p.update_interval" to 200 on long-term tracking benchmarks and to 50 on short-term tracking benchmarks.

- The upgraded meta-updater (MU) has a hyper-parameter, "p.lof_thresh", which controls model updates in the first 20 frames based on the local outlier factor (LOF) of the current bounding box. We find that the trackers usually perform well with p.lof_thresh = 2.5. Depending on the tracker and benchmark, values between 1.5 and 3.5 generally give good performance.
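The role of "p.lof_thresh" can be sketched as a simple update gate. The rule below is an assumption for illustration only: during the first 20 frames (0-indexed here), an update is skipped when the LOF of the current box exceeds the threshold, and afterwards the meta-updater's own decision is followed. The function name `allow_update` and its arguments are hypothetical; how the repository actually combines the two signals is not stated here.

```python
def allow_update(frame_idx: int, lof: float, mu_says_update: bool,
                 lof_thresh: float = 2.5, init_frames: int = 20) -> bool:
    """Hypothetical update gate combining an LOF rule with the meta-updater decision."""
    if frame_idx < init_frames:
        # Early frames: treat a box whose LOF exceeds the threshold as an outlier and skip the update.
        return lof <= lof_thresh
    # Later frames: follow the meta-updater.
    return mu_says_update
```

Raising the threshold toward 3.5 makes the early-frame gate more permissive (more updates accepted), while lowering it toward 1.5 rejects more candidate updates, which matches the tuning range given above.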

- This work is an extension of:

  - "High-Performance Long-Term Tracking with Meta-Updater". CVPR 2020 (Oral).

- Baseline trackers:

  - [ECO] "ECO: Efficient Convolution Operators for Tracking". CVPR 2017. [paper] [code]
  - [MDNet] "Learning Multi-Domain Convolutional Neural Networks for Visual Tracking". CVPR 2016. [paper] [code]
  - [ATOM] "ATOM: Accurate Tracking by Overlap Maximization". CVPR 2019. [paper] [code]
  - [DiMP] "Learning Discriminative Model Prediction for Tracking". ICCV 2019. [paper] [code]
  - [PrDiMP] "Probabilistic Regression for Visual Tracking". CVPR 2020. [paper] [code]
  - [Ocean] "Ocean: Object-aware Anchor-free Tracking". ECCV 2020. [paper] [code]
  - [Stark] "Learning Spatio-Temporal Transformer for Visual Tracking". ICCV 2021. [paper] [code]

- The training code is based on the PyTracking toolkit. We thank the PyTracking authors for providing a useful toolkit for single object tracking (SOT).