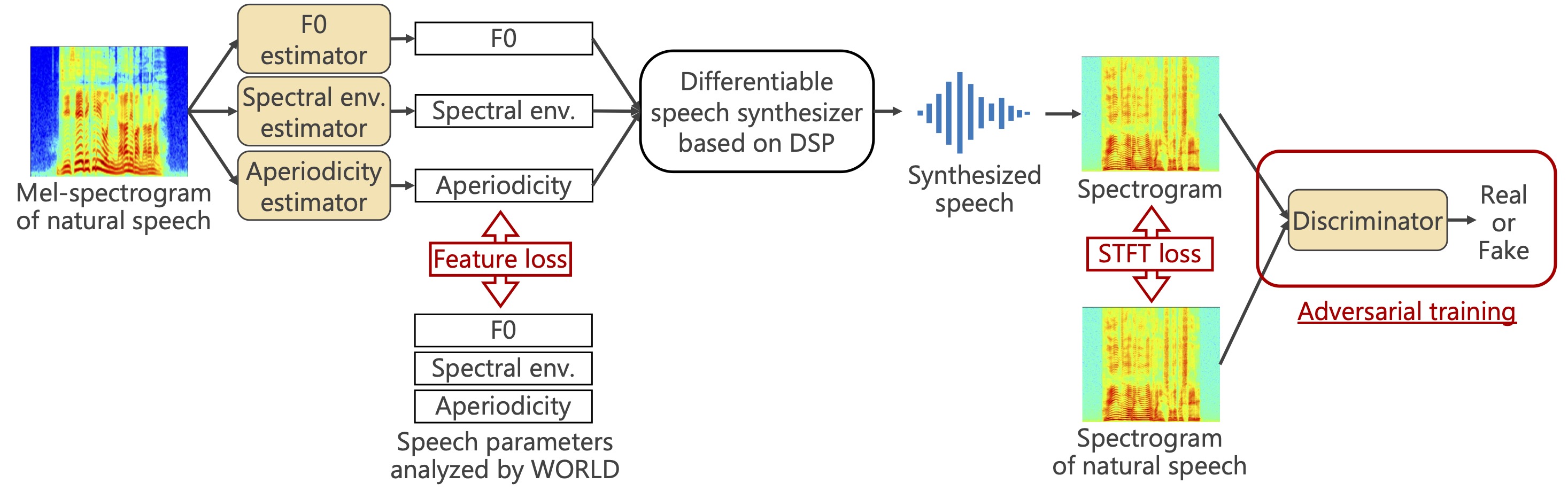

We implement a differentiable speech analysis-synthesis system (see figure below) consisting of a speech parameter estimator and a differentiable speech synthesizer. Because the synthesizer is differentiable, the speech parameter estimator (a deep neural network, DNN) can be trained so that speech re-synthesized from the estimated parameters gets closer to natural speech. This repository contains an implementation of the Differentiable Speech Synthesizer (DSS) and training code for a speech parameter estimation model that uses the synthesizer.
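The training idea described above can be sketched with a toy example. Everything in this block is an illustrative assumption rather than the repository's code: `toy_synthesizer` is a hypothetical stand-in for DSS (a single sinusoid), the estimator is a one-layer network that predicts only an amplitude, and the loss is a plain waveform mean squared error chosen for brevity. The point is only that gradients flow from the re-synthesized waveform back into the estimator.

```python
import math

import torch

SAMPLE_RATE = 16000  # Hz (toy value, not taken from the repository)
N_SAMPLES = 1600     # 0.1 s of audio


def toy_synthesizer(f0_hz: torch.Tensor, amplitude: torch.Tensor) -> torch.Tensor:
    """Hypothetical stand-in for DSS: a sinusoid built only from differentiable ops."""
    t = torch.arange(N_SAMPLES, dtype=torch.float32) / SAMPLE_RATE
    return amplitude * torch.sin(2.0 * math.pi * f0_hz * t)


# Stand-in for "natural speech": a 200 Hz tone with amplitude 0.8.
f0 = torch.tensor(200.0)
natural = toy_synthesizer(f0, torch.tensor(0.8))

# One-dimensional input feature for the estimator: the RMS of the target.
feature = natural.pow(2).mean().sqrt().reshape(1, 1)

# Toy "speech parameter estimator": predicts the amplitude from the feature.
estimator = torch.nn.Linear(1, 1)
torch.nn.init.zeros_(estimator.weight)  # deterministic start (amplitude = 0)
torch.nn.init.zeros_(estimator.bias)
optimizer = torch.optim.Adam(estimator.parameters(), lr=0.05)

losses = []
for step in range(200):
    amplitude = estimator(feature).squeeze()
    resynth = toy_synthesizer(f0, amplitude)     # differentiable synthesis
    loss = torch.mean((resynth - natural) ** 2)  # distance to natural speech
    optimizer.zero_grad()
    loss.backward()                              # gradients pass through the synthesizer
    optimizer.step()
    losses.append(loss.item())
```

In the actual system the estimator would predict F0, spectral envelope, and aperiodicity, and the synthesizer would be `DifferentiableSpeechSynthesizer`; the loop structure is what carries over.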


Recommended: Python 3.8.0.

Install python packages:

$ pip install -r requirements.txt

DSS is the synthesis part of a signal-processing vocoder, implemented in PyTorch.

The following sample code synthesizes a waveform with DSS from parameters estimated by WORLD analysis.

    import torch

    from dss import DifferentiableSpeechSynthesizer, audio

    # WORLD analysis parameters
    # `waveform` is assumed to be an audio tensor loaded beforehand.
    f0, vuv, sp, ap = audio.world_analysis(
        waveform=waveform,  # tensor
        fs=24000,  # Hz
        n_fft=1024,  # samples
        frame_period=5,  # msec
        harvest=True,
    )

    # DSS instance
    synthesizer = DifferentiableSpeechSynthesizer(
        device="cpu",
        sample_rate=24000,  # Hz
        n_fft=1024,  # samples
        hop_length=120,  # samples (5 msec)
        synth_hop_length=24,  # samples (1 msec)
    )

    # NOTE: Before synthesizing with DSS, set a lower limit on F0
    # or convert it to continuous F0. Synthesis does not work well if F0 = 0 Hz.
    f0 = torch.clamp(input=f0, min=71.0)

    # Synthesize with DSS
    dss_wav = synthesizer(f0_hz=f0, spectral_env=sp, aperiodicity=ap)

`test_anasyn.py` is a script that generates analysis-synthesis results. We recommend reading its source code, as it is an example of how to use DSS.

    $ python test_anasyn.py \
        --input_data_dir INPUT_WAV_DIR_PATH \
        --output_data_dir OUTPUT_WAV_DIR_PATH

- The analysis-synthesis results for the wav files in `INPUT_WAV_DIR_PATH` are written to `OUTPUT_WAV_DIR_PATH`.
- WORLD is used for speech analysis to estimate F0, spectral envelope, and aperiodicity indices.
- Two types of synthesis results are generated, one by WORLD and the other by DSS:
  - `*_world.wav`: synthesis result by WORLD (Harvest)
  - `*_dss.wav`: synthesis result by DSS
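The sample code above notes that F0 must either be floored or converted to continuous F0 before DSS synthesis, and that its hop lengths correspond to 5 msec and 1 msec at 24000 Hz. The sketch below illustrates both points. The helper names `frame_period_to_hop` and `to_continuous_f0` are hypothetical and not part of the `dss` package, NumPy is used instead of PyTorch for brevity, and linear interpolation is only one possible way to obtain a continuous F0 contour.

```python
import numpy as np


def frame_period_to_hop(sample_rate: int, frame_period_ms: float) -> int:
    """Convert a frame period in milliseconds to a hop length in samples."""
    return int(round(sample_rate * frame_period_ms / 1000.0))


def to_continuous_f0(f0: np.ndarray) -> np.ndarray:
    """Fill unvoiced frames (F0 == 0) by linear interpolation between voiced frames.

    Leading and trailing unvoiced frames take the nearest voiced value.
    """
    f0 = np.asarray(f0, dtype=np.float64)
    voiced = f0 > 0
    if not voiced.any():
        raise ValueError("no voiced frames to interpolate from")
    frames = np.arange(len(f0))
    return np.interp(frames, frames[voiced], f0[voiced])


# Toy F0 contour in Hz; zeros mark unvoiced frames.
f0 = np.array([0.0, 0.0, 100.0, 0.0, 0.0, 130.0, 0.0])

# Option 1: lower limit on F0 (NumPy counterpart of torch.clamp(f0, min=71.0)).
floored = np.maximum(f0, 71.0)

# Option 2: continuous F0 by interpolation across unvoiced frames.
continuous = to_continuous_f0(f0)

# Hop lengths matching the sample code: 5 msec and 1 msec at 24000 Hz.
hop_length = frame_period_to_hop(24000, 5)
synth_hop_length = frame_period_to_hop(24000, 1)
```

Flooring keeps unvoiced frames at a constant low pitch, while interpolation gives a smooth contour; which behaves better with DSS is not stated here and would need to be checked by listening to the output.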

Prepare ParallelWaveGAN:

    $ git clone https://github.com/kan-bayashi/ParallelWaveGAN.git
    $ cd ParallelWaveGAN
    $ pip install -e .

Download jvs_ver1.zip into ./download/ and unzip the file.

Edit conf/config.yaml and run the preprocessing script:

    $ python preprocess.py

Edit conf/config.yaml and run the training script:

    $ python train.py

- Apache 2.0 license (LICENSE)

- Yuta Matsunaga (The University of Tokyo)

- Ryo Terashima

    @inproceedings{Matsunaga:ASJ2022A-2,
        author = {松永 裕太 and 寺島 涼 and 橘 健太郎},
        title = {微分可能な信号処理に基づく音声合成器を用いた DNN 音声パラメータ推定の検討},
        booktitle = {日本音響学会第148回(2022年秋季)研究発表会},
        month = {Sep.},
        year = {2022},
    }

    @inproceedings{Matsunaga:ASJ2022A-2,
        author = {Yuta Matsunaga and Ryo Terashima and Kentaro Tachibana},
        title = {A study of DNN-based speech parameter estimation with speech synthesizer based on differentiable digital signal processing},
        booktitle = {Acoustical Society of Japan 2022 Autumn Meeting},
        month = {Sep.},
        year = {2022},
        note = {in Japanese},
    }