Spark Authorizer

Spark Authorizer provides you with SQL Standard Based Authorization for Apache Spark™ like SQL Standard Based Hive Authorization. While you are using Spark SQL or Dataset/DataFrame API to load data from tables embedded with Apache Hive™ metastore, this library provides row/column level fine-grained access controls with Apache Ranger™.

Security is one of fundamental features for enterprise adoption. Apache Ranger™ offers many security plugins for many Hadoop ecosystem components, such as HDFS, Hive, HBase, Solr and Sqoop2. However, Apache Spark™ is not counted in yet. When a secured HDFS cluster is used as a data warehouse accessed by various users and groups via different applications wrote by Spark and Hive, it is very difficult to guarantee data management in a consistent way. Apache Spark users visit data warehouse only with Storage based access controls offered by HDFS. This library shares Ranger Hive plugin with Hive to help Spark talking to Ranger Admin.

How to Use

With Spark Submit Arguments

Spark Authorizer has been contributed to spark-packages.org, see @ https://spark-packages.org/package/yaooqinn/spark-authorizer

Include this package in your Spark Applications using: spark-shell, pyspark, or spark-submit

For Spark 2.1.x

> $SPARK_HOME/bin/spark-shell --packages yaooqinn:spark-authorizer:1.1.1.spark2.1For Spark 2.2.x

> $SPARK_HOME/bin/spark-shell --packages yaooqinn:spark-authorizer:1.0.0.spark2.2For Spark 2.3.x

> $SPARK_HOME/bin/spark-shell --packages yaooqinn:spark-authorizer:1.0.0.spark2.3With Maven

In your pom.xml, add:

<dependencies>

<!-- list of dependencies -->

<dependency>

<groupId>yaooqinn</groupId>

<artifactId>spark-authorizer</artifactId>

<version>1.1.1.spark2.1</version>

</dependency>

</dependencies>

<repositories>

<!-- list of other repositories -->

<repository>

<id>SparkPackagesRepo</id>

<url>http://dl.bintray.com/spark-packages/maven</url>

</repository>

</repositories>

This may cause transitive dependency problem

Manually

An Alternative way to use this library is to build it of your own.

Specifying Spark Authorization for Apache Spark

| Branch | Latest Stable Version | Spark Version | Notes |

|---|---|---|---|

| master | N/A | master | periodically update to catch up |

| spark-2.3 | 1.0.0.spark2.3 | 2.3.0 | - |

| spark-2.2 | 1.0.0.spark2.2 | 2.2.x | - |

| spark-2.1 | 1.0.0.spark2.1 | 2.1.x | - |

Installing Spark Authorizer to Spark

cp spark-authorizer-<version>.jar $SPARK_HOME/jars(only required when manually build this)- install ranger-hive-plugin for spark

- configure you

hive-site.xmland ranger configuration file, you may find an sample in[./conf]

Interactive Spark Shell

The easiest way to start using Spark is through the Scala shell:

bin/spark-shell --master yarn --proxy-user hzyaoqinSecondly, implement the Authorizer Rule to Spark's extra Optimizations.

import org.apache.spark.sql.catalyst.optimizer.Authorizerspark.experimental.extraOptimizations ++= Seq(Authorizer)Check it out

scala> spark.experimental.extraOptimizations

res2: Seq[org.apache.spark.sql.catalyst.rules.Rule[org.apache.spark.sql.catalyst.plans.logical.LogicalPlan]] = List(org.apache.spark.sql.catalyst.optimizer.Authorizer$@1196537d)Note that extra optimizations are appended to the end of all the inner optimizing rules. It's good for us to do authorization after column pruning.

Your may notice that it only shut the door for men with a noble character but leave the door open for the scheming ones.

To avoid that, I suggest you modify ExperimentalMethods.scala#L47 and Bulid Spark of your own.

@volatile var extraOptimizations: Seq[Rule[LogicalPlan]] = Nilto

import scala.reflect.runtime.{universe => ru}

private val rule = "org.apache.spark.sql.catalyst.optimizer.Authorizer"

private val mirror = ru.runtimeMirror(Utils.getContextOrSparkClassLoader)

private val clazz = mirror.staticModule(rule)

private val module = mirror.reflectModule(clazz)

@volatile var extraOptimizations: Seq[Rule[LogicalPlan]] = Seq(

module.instance.asInstanceOf[Rule[LogicalPlan]])

Use Scala Reflection to inject it into Spark extra Optimizations in case of maven cyclic dependency error cause failing in comiple Spark source code.

Also, I suggest that make extraOptimizations to a val to avoid reassignment in which case you may have to fix some unit test too.

Without modifying, you either control the spark session such as supplying a Thrift/JDBC Sever or hope for "Manner maketh Man"

Suffer for the Authorization Pain

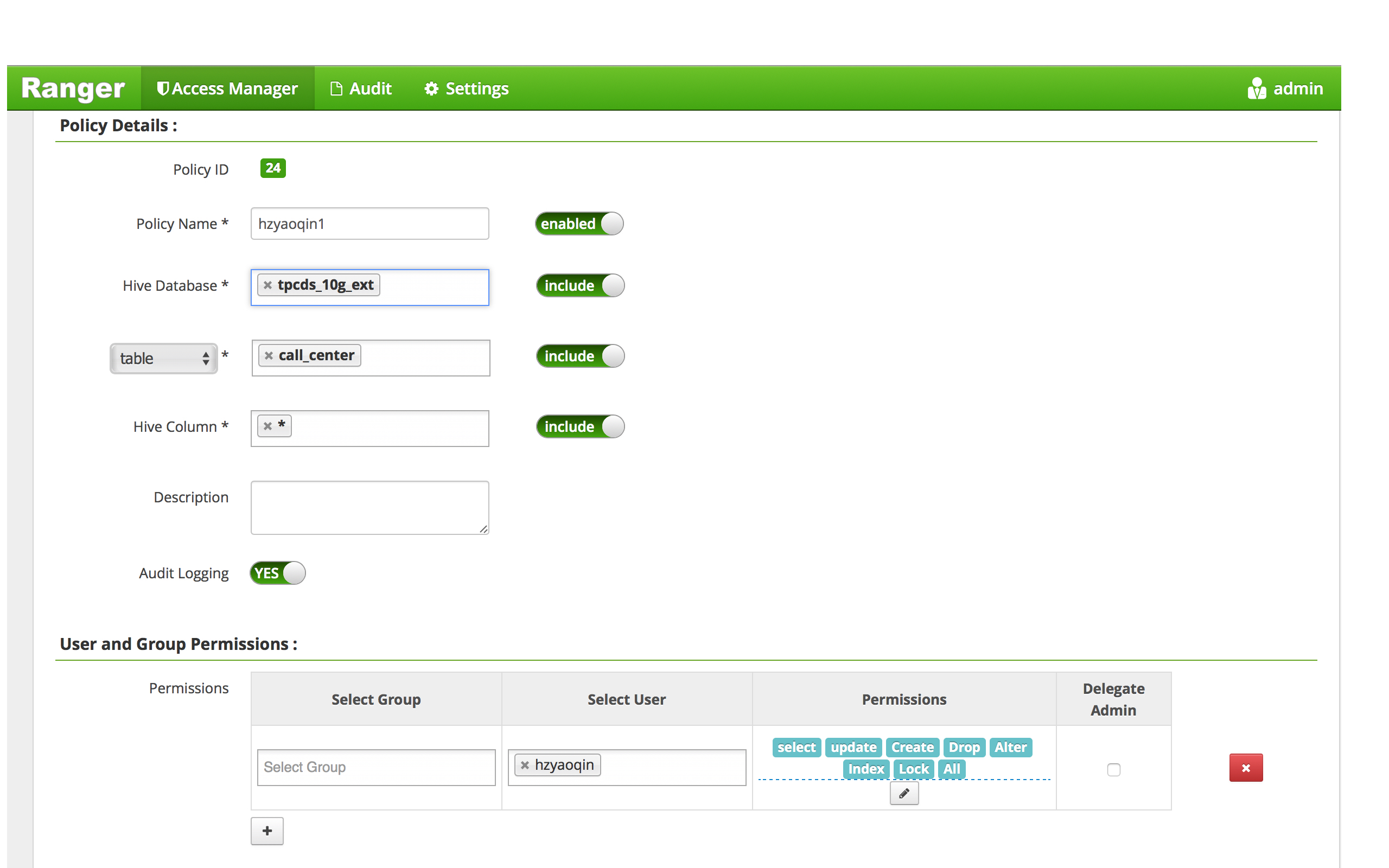

We create a ranger policy as below:

Check Privilage with some simple cases.

Show databases

Actually, user [hzyaoqin] should only see only one privileged database -- tpcds_10g_ext, this is not a bug, but a compromise not hacking Spark's source code

scala> spark.sql("show databases").show

+--------------+

| databaseName|

+--------------+

| default|

| spark_test_db|

| tpcds_10g_ext|

+--------------+Switch database

scala> spark.sql("use spark_test_db").show

17/12/08 17:06:17 ERROR optimizer.Authorizer:

+===============================+

|Spark SQL Authorization Failure|

|-------------------------------|

|Permission denied: user [hzyaoqin] does not have [USE] privilege on [spark_test_db]

|-------------------------------|

|Spark SQL Authorization Failure|

+===============================+Oops...

scala> spark.sql("use tpcds_10g_ext").show

++

||

++

++LOL...

Select

scala> spark.sql("select cp_type from catalog_page limit 1").show

17/12/08 17:09:58 ERROR optimizer.Authorizer:

+===============================+

|Spark SQL Authorization Failure|

|-------------------------------|

|Permission denied: user [hzyaoqin] does not have [SELECT] privilege on [tpcds_10g_ext/catalog_page/cp_type]

|-------------------------------|

|Spark SQL Authorization Failure|

+===============================+Oops...

scala> spark.sql("select * from call_center limit 1").show

+-----------------+-----------------+-----------------+---------------+-----------------+---------------+--------+--------+------------+--------+--------+-----------+---------+--------------------+--------------------+-----------------+-----------+----------------+----------+---------------+----------------+--------------+--------------+---------------+-------+-----------------+--------+------+-------------+-------------+-----------------+

|cc_call_center_sk|cc_call_center_id|cc_rec_start_date|cc_rec_end_date|cc_closed_date_sk|cc_open_date_sk| cc_name|cc_class|cc_employees|cc_sq_ft|cc_hours| cc_manager|cc_mkt_id| cc_mkt_class| cc_mkt_desc|cc_market_manager|cc_division|cc_division_name|cc_company|cc_company_name|cc_street_number|cc_street_name|cc_street_type|cc_suite_number|cc_city| cc_county|cc_state|cc_zip| cc_country|cc_gmt_offset|cc_tax_percentage|

+-----------------+-----------------+-----------------+---------------+-----------------+---------------+--------+--------+------------+--------+--------+-----------+---------+--------------------+--------------------+-----------------+-----------+----------------+----------+---------------+----------------+--------------+--------------+---------------+-------+-----------------+--------+------+-------------+-------------+-----------------+

| 1| AAAAAAAABAAAAAAA| 1998-01-01| null| null| 2450952|NY Metro| large| 2| 1138| 8AM-4PM|Bob Belcher| 6|More than other a...|Shared others cou...| Julius Tran| 3| pri| 6| cally| 730| Ash Hill| Boulevard| Suite 0| Midway|Williamson County| TN| 31904|United States| -5.00| 0.11|

+-----------------+-----------------+-----------------+---------------+-----------------+---------------+--------+--------+------------+--------+--------+-----------+---------+--------------------+--------------------+-----------------+-----------+----------------+----------+---------------+----------------+--------------+--------------+---------------+-------+-----------------+--------+------+-------------+-------------+-----------------+

LOL...

Dataset/DataFrame

scala> spark.read.table("catalog_page").limit(1).collect17/12/11 14:46:33 ERROR optimizer.Authorizer:

+===============================+

|Spark SQL Authorization Failure|

|-------------------------------|

|Permission denied: user [hzyaoqin] does not have [SELECT] privilege on [tpcds_10g_ext/catalog_page/cp_catalog_page_sk,cp_catalog_page_id,cp_promo_id,cp_start_date_sk,cp_end_date_sk,cp_department,cp_catalog_number,cp_catalog_page_number,cp_description,cp_type]

|-------------------------------|

|Spark SQL Authorization Failure|

+===============================+

Oops...

scala> spark.read.table("call_center").limit(1).collectres3: Array[org.apache.spark.sql.Row] = Array([1,AAAAAAAABAAAAAAA,1998-01-01,null,null,2450952,NY Metro,large,2,1138,8AM-4PM,Bob Belcher,6,More than other authori,Shared others could not count fully dollars. New members ca,Julius Tran,3,pri,6,cally,730,Ash Hill,Boulevard,Suite 0,Midway,Williamson County,TN,31904,United States,-5.00,0.11])

LOL...