Mahmoud Afifi, Marcus A. Brubaker, and Michael S. Brown

York University

Paper | Supplementary Materials | Video | Poster | PPT

Reference code for the paper HistoGAN: Controlling Colors of GAN-Generated and Real Images via Color Histograms. Mahmoud Afifi, Marcus A. Brubaker, and Michael S. Brown. In CVPR, 2021. If you use this code or our dataset, please cite our paper:

@inproceedings{afifi2021histogan,

title={HistoGAN: Controlling Colors of GAN-Generated and Real Images via Color Histograms},

author={Afifi, Mahmoud and Brubaker, Marcus A. and Brown, Michael S.},

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

year={2021}

}

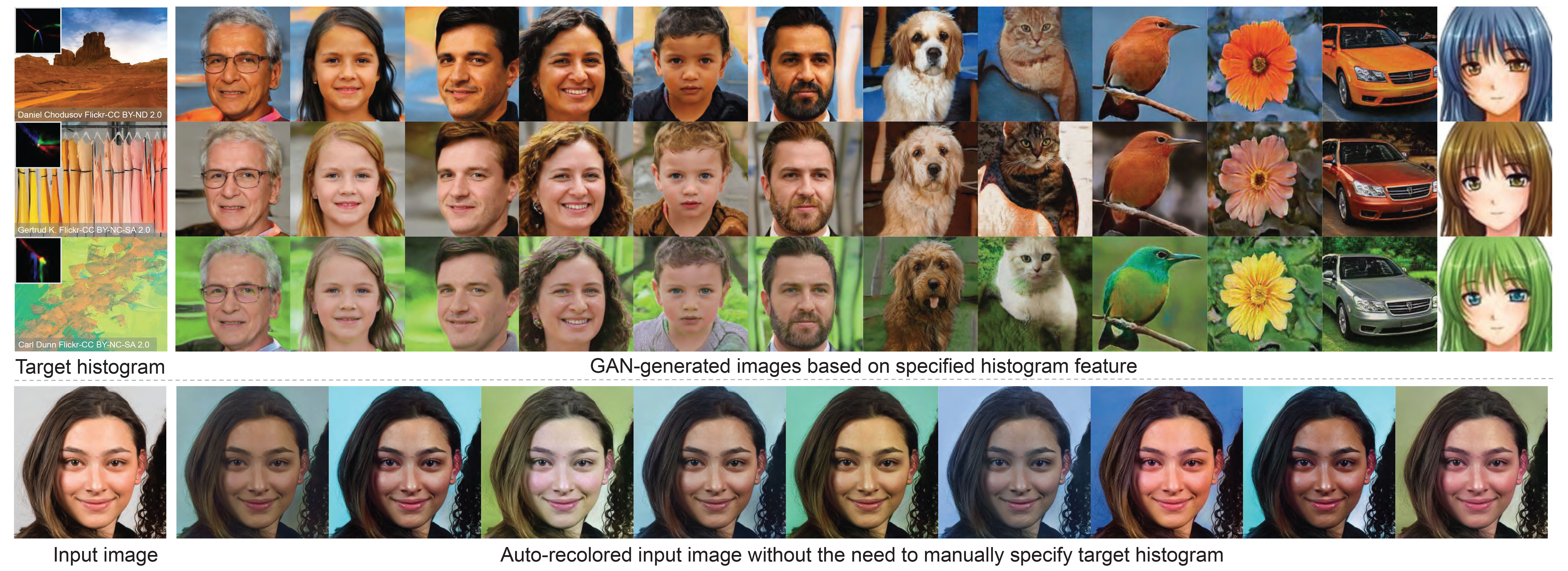

In this paper, we present HistoGAN, a color histogram-based method for controlling GAN-generated images' colors. We focus on color histograms as they provide an intuitive way to describe image color while remaining decoupled from domain-specific semantics. Specifically, we introduce an effective modification of the recent StyleGAN architecture to control the colors of GAN-generated images specified by a target color histogram feature. We then describe how to expand HistoGAN to recolor real images. For image recoloring, we jointly train an encoder network along with HistoGAN. The recoloring model, ReHistoGAN, is an unsupervised approach trained to encourage the network to keep the original image's content while changing the colors based on the given target histogram. We show that this histogram-based approach offers a better way to control GAN-generated and real images' colors while producing more compelling results compared to existing alternative strategies.

- PyTorch

- numpy

- tqdm

- pillow

- linear-attention-transformer (optional)

- vector-quantize-pytorch (optional)

- torch-optimizer

- retry

- dlib (optional)

Conda & pip commands:

conda create -n histoGAN python=3.6 numpy=1.13.3 scipy

conda activate histoGAN

conda install pytorch torchvision -c pytorch

conda install -c conda-forge tqdm

conda install -c anaconda pillow

pip install CMake

pip install dlib

pip install linear-attention-transformer

pip install vector-quantize-pytorch

pip install torch-optimizer

pip install retry

You may face some problems installing dlib on Windows via pip. It is required only for the face pre-processing option (see below for more details). To install dlib on Windows, please follow this link. If you cannot install dlib, you can comment out this line and avoid using the --face_extraction option for ReHistoGAN.

We provide a Colab notebook example code to compute our histogram loss. This histogram loss is differentiable and can be easily integrated into any deep learning optimization.

In the Colab tutorial, we provide different versions of the histogram class to compute the histogram loss for different color spaces: RGB-uv, rg-chroma, and CIE Lab. For CIE Lab, input images are expected to already be in the CIE Lab space before the loss is computed. The code of these histogram classes is also provided in ./histogram_classes. In HistoGAN and ReHistoGAN, we trained using RGB-uv histogram features. To use rg-chroma or CIE Lab, simply replace the import from histogram_classes.RGBuvHistBlock import RGBuvHistBlock with from histogram_classes.X import X as RGBuvHistBlock, where X is the name of the histogram class. This change should be applied to all source code files that use the histogram feature. Note that for CIE Lab histograms, you first need to convert the loaded images into the CIE Lab space in the Dataset class in both the histoGAN and ReHistoGAN code. This also requires converting the generated images back to the sRGB space before saving them.
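To make the idea of a histogram loss concrete, here is a minimal numeric sketch. It assumes the loss is a Hellinger distance between two normalized histograms (the formulation described in the paper, not restated in this README). The function name hellinger_distance is hypothetical, and the sketch works on plain Python numbers, whereas the released histogram classes operate on PyTorch tensors so that gradients can flow through the loss.

```python
import math


def hellinger_distance(hist_a, hist_b):
    """Hellinger distance between two histograms given as flat sequences.

    Both inputs must hold non-negative values; they are normalized to sum
    to 1 before comparison. The result lies in [0, 1].
    """
    if len(hist_a) != len(hist_b):
        raise ValueError("histograms must have the same number of bins")

    def normalize(hist):
        total = float(sum(hist))
        if total <= 0.0:
            raise ValueError("histogram must have positive mass")
        return [value / total for value in hist]

    p = normalize(hist_a)
    q = normalize(hist_b)
    # Sum of squared differences between the square roots of the bins.
    squared = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q))
    return math.sqrt(squared) / math.sqrt(2.0)


if __name__ == "__main__":
    target = [4, 3, 2, 1]
    print(hellinger_distance(target, target))
    print(hellinger_distance([1, 0, 0, 0], [0, 0, 0, 1]))
```

Identical histograms give a distance of zero and histograms with no overlapping bins give the maximum distance, which is why a quantity of this kind can serve as a color-matching penalty during training.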

If you face memory issues, please check this issue for potential solutions.

To train or test a histoGAN model, use histoGAN.py. Trained models should be located in the models directory, with each trained model stored in a subdirectory named after the model. For example, to test a model named test_histoGAN, the file models/test_histoGAN/model_X.pt should exist (where X refers to the epoch number).
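The directory layout described above can be checked before launching a test run. The following is a small sketch, not part of the released code: latest_checkpoint is a hypothetical helper that looks for files named model_X.pt inside models/<name> and returns the one with the highest X.

```python
import re
from pathlib import Path


def latest_checkpoint(models_dir, name):
    """Return (number, path) of the highest-numbered model_X.pt, or None.

    Hypothetical helper: it only inspects file names and never loads
    the checkpoint itself.
    """
    model_dir = Path(models_dir) / name
    pattern = re.compile(r"^model_(\d+)\.pt$")
    found = []
    if model_dir.is_dir():
        for entry in model_dir.iterdir():
            match = pattern.match(entry.name)
            if match and entry.is_file():
                found.append((int(match.group(1)), entry))
    # Compare by the numeric suffix so that model_10.pt beats model_2.pt.
    return max(found, key=lambda item: item[0]) if found else None


if __name__ == "__main__":
    result = latest_checkpoint("models", "test_histoGAN")
    if result is None:
        print("No checkpoint found under models/test_histoGAN")
    else:
        print("Latest checkpoint:", result[1])
```

Sorting by the parsed integer rather than by file name avoids the lexicographic trap where model_9.pt would rank above model_10.pt.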

To train a histoGAN model on a dataset located at ./datasets/faces/ for example:

python histoGAN.py --name histoGAN_model --data ./datasets/faces/ --num_train_steps XX --gpu 0

XX should be replaced with the number of iterations.

During training, you can watch example samples generated by the generator network in the results directory (specified by --results_dir). Each column in the generated sample images shares the same training histogram feature. Shown below is the training progress of a HistoGAN trained on the FFHQ dataset using --network_capacity 16 and --image_size 256.

There is no clear criterion to stop training, so watching generated samples will help to detect when the generator network starts diverging. Reporting the FID score after each checkpoint may also help.

You may need to increase the number of training steps (specified by --num_train_steps) if the generator has not diverged by the end of training. If the network starts generating degraded results after a short period of training, you may want to either reduce the network capacity (specified by --network_capacity) or apply data augmentation by setting --aug_prob to a value higher than 0.

Shown below is the training progress of HistoGAN when trained on portrait images with and without augmentation applied. As shown, without augmentation the generator starts to generate degraded images after a short training period, while it keeps generating reasonable results when augmentation is applied.

Here is an example of how to generate new samples from a trained histoGAN model named Faces_histoGAN:

python histoGAN.py --name Faces_histoGAN --generate True --target_hist ./target_images/1.jpg --gpu 0

The figure below illustrates what this command does. First, we generate a histogram feature of this input image. Then, this feature is fed into our HistoGAN to generate face image samples.

Generated samples will be located in ./results_HistoGAN/Faces_histoGAN.

Another example is given below, where we use a fixed input noise and style vectors for the first blocks of the generator network, while we change the input histograms. In this example, we first use --save_noise_latent = True to save the noise and latent data for the first blocks. Then, we load the saved noise and latent files, using --target_noise_file and --target_latent_file, to generate the same samples but with different color histograms.

python histoGAN.py --name Faces_histoGAN --generate True --target_hist ./target_images/1.jpg --save_noise_latent True --gpu 0

python histoGAN.py --name Faces_histoGAN --generate True --target_hist ./target_images/ --target_noise_file ./temp/Faces_histoGAN/noise.npy --target_latent_file ./temp/Faces_histoGAN/latents.npy --gpu 0

Additional useful parameters are given below.

- --name: Model name.

- --models_dir: Models directory (to save or load models).

- --data: Dataset directory (for training).

- --new: Set to True to train a new model. If --new = False, it will start training/evaluation from the last saved model.

- --image_size: Output image size (should be a power of 2).

- --batch_size and --gradient_accumulate_every: To control the size of mini-batch and the accumulation in computing the gradient.

- --network_capacity: To control network capacity.

- --attn_layers: To add a self-attention to the designated layer(s) of the discriminator (and the corresponding layer(s) of the generator). For example, if you would like to add a self-attention layer after the output of the 1st and 2nd layers, use --attn_layers 1,2. In our training, we did not use any attention layers, but it could improve the results if added.

- --results_dir: Results directory (for testing and evaluation during training).

- --target_hist: Target histogram (image, npy file of target histogram, or directory of either images or histogram files). To generate a histogram of images, check create_hist_sample.py.

- --generate: Set to True for testing.

- --save_noise_latent: To save the noise and latent of current generated samples in the temp directory (for testing).

- --target_noise_file: To load noise from a saved file (for testing).

- --target_latent_file: To load latent from a saved file (for testing).

- --num_image_tiles: Number of image tiles to generate.

- --gpu: CUDA device ID.

- --aug_types: Options include: translation, cutout, and color. Example: --aug_types translation cutout.

- --dataset_aug_prob: Probability of dataset augmentation: applies random cropping.

- --aug_prob: Probability of discriminator augmentation. It applies operations specified in --aug_types. Note that if you use --aug_prob > 0.0 to train the model, you should use --aug_prob > 0.0 in testing as well to work properly.

- --hist_bin: Number of bins in the histogram feature.

- --hist_insz: Maximum size of the image before computing the histogram feature.

- --hist_method: "Counting" method used to construct histograms. Options include: inverse-quadratic kernel, RBF kernel, or thresholding.

- --hist_resizing: If --hist_insz doesn't match the input image size, the image is resized based on the resizing method. Resizing options are: interpolation or sampling.

- --hist_sigma: If one of the kernel methods is used to compute the histogram feature (specified in --hist_method), this is the kernel sigma parameter.

- --alpha: Histogram loss scale factor (for training).
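A few of the constraints in the parameter list can be checked before starting a long run. The sketch below is illustrative only: is_power_of_two, parse_attn_layers, and check_settings are hypothetical helpers, not functions from histoGAN.py, and the "effective batch size" assumes the usual meaning of gradient accumulation (mini-batch size multiplied by the number of accumulation steps).

```python
def is_power_of_two(value):
    """True for 1, 2, 4, 8, ... and False for everything else."""
    return value > 0 and (value & (value - 1)) == 0


def parse_attn_layers(text):
    """Turn a comma-separated string such as '1,2' into [1, 2]."""
    return [int(part) for part in text.split(",") if part.strip()]


def check_settings(image_size, batch_size, gradient_accumulate_every,
                   attn_layers=""):
    """Validate a few settings and return a small summary dictionary."""
    if not is_power_of_two(image_size):
        raise ValueError("image_size should be a power of 2")
    if batch_size < 1 or gradient_accumulate_every < 1:
        raise ValueError("batch settings must be positive integers")
    return {
        "image_size": image_size,
        "attn_layers": parse_attn_layers(attn_layers),
        # Assumption: gradients are accumulated over this many samples
        # before each optimizer step.
        "effective_batch_size": batch_size * gradient_accumulate_every,
    }


if __name__ == "__main__":
    print(check_settings(256, 2, 8, attn_layers="1,2"))
```

Under that assumption, lowering --batch_size while raising --gradient_accumulate_every keeps the number of samples per optimizer step constant and reduces peak memory use.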


As mentioned in the paper, we trained HistoGAN on several datasets. Our pre-trained models were trained using --network_capacity = 16 and --image_size = 256 due to hardware limitations. Better results can be achieved by increasing the network capacity and using attention layers (--attn_layers). Here are examples of our trained models (note: these models include both generator and discriminator nets):

- Faces | Google Drive mirror

- Faces_20

- Cars

- Flowers

- Anime

- Landscape

- PortraitFaces

- PortraitFaces_aug

- PortraitFaces_20_aug

Note that for model names that include _20, use --network_capacity 20 in testing. If the model name includes _aug, make sure to set --aug_prob to any value higher than zero. Below are examples of generated samples from each model. Each shown group of generated images shares the same histogram feature.
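The naming convention above can be turned into command-line flags mechanically. This is a sketch under stated assumptions: generation_flags is a hypothetical helper, the capacity of 16 for names without _20 follows the setting stated for the pre-trained models, and the aug_prob value of 0.5 is an arbitrary placeholder for "any value higher than zero".

```python
def generation_flags(model_name, aug_prob=0.5):
    """Build histoGAN.py test flags from a pre-trained model name.

    Hypothetical helper: it encodes only the two naming rules stated in
    the text (_20 and _aug) and nothing else about the models.
    """
    if aug_prob <= 0:
        raise ValueError("aug_prob must be higher than zero")
    suffixes = model_name.split("_")[1:]
    capacity = 20 if "20" in suffixes else 16
    flags = [
        "--name", model_name,
        "--generate", "True",
        "--network_capacity", str(capacity),
    ]
    if "aug" in suffixes:
        # Any positive value is acceptable; 0.5 is only a placeholder.
        flags += ["--aug_prob", str(aug_prob)]
    return flags


if __name__ == "__main__":
    for name in ("Faces", "Faces_20", "PortraitFaces_20_aug"):
        print("python histoGAN.py " + " ".join(generation_flags(name)))
```

The printed commands still need a --target_hist argument and a --gpu device before they can be run.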


As is the case with most GAN methods, our ReHistoGAN targets a specific object domain to achieve the image recoloring task. This restriction may hinder the generalization of our method to images taken from arbitrary domains. To deal with that, we collected images from a different domain, aiming to represent the "universal" object domain (see the supplemental materials for more details). To train our ReHistoGAN on this "universal" object domain, we used --network_capacity 18 without any further changes in the original architecture.

- Faces model-0

- Faces model-1

- Faces model-2

- Faces model-3

- Universal model-0

- Universal model-1

- Universal model-2

The ReHistoGAN code will be available very soon.

Our collected set of 4K landscape images is available here.

We have extracted face images from the WikiArt dataset. This set includes ~7,000 portrait face images. You can download our processed portrait set from here. If you use this dataset, please cite our paper in addition to the WikiArt dataset. This set is provided only for non-commercial research purposes. The images in the WikiArt dataset were obtained from WikiArt.org. By using this set, you agree to obey the terms and conditions of WikiArt.org.

A significant part of this code was built on top of the PyTorch implementation of StyleGAN by Phil Wang.