Direct-a-Video: Customized Video Generation with User-Directed Camera Movement and Object Motion.

Shiyuan Yang, Liang Hou, Haibin Huang, Chongyang Ma, Pengfei Wan, Di Zhang, Xiaodong Chen, Jing Liao

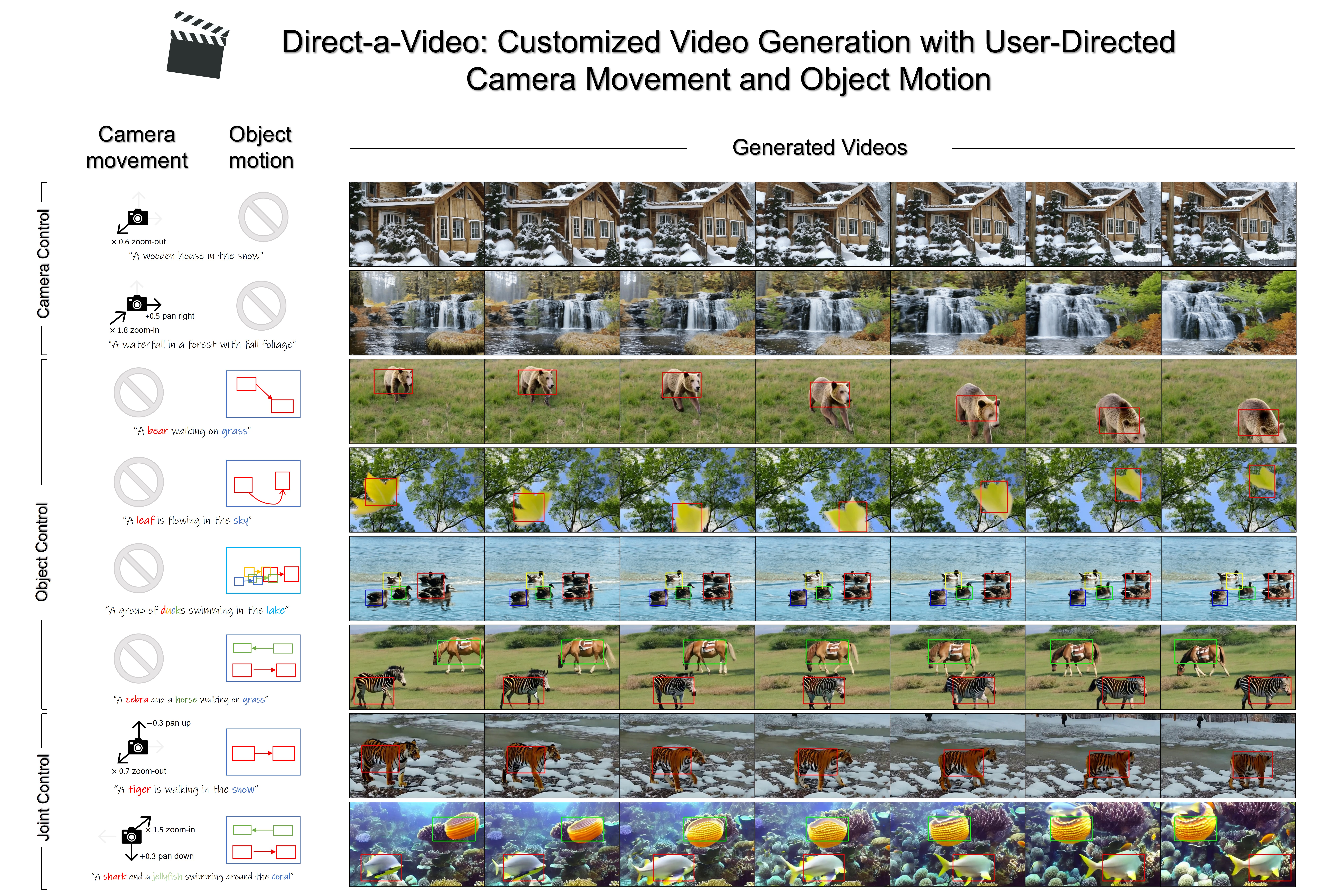

TL;DR: Direct-a-Video is a text-to-video generation framework that allows users to individually or jointly control the camera movement and/or object motion.

You may create a new environment:

conda create --name dav python=3.8

conda activate dav

The required Python packages are listed in requirements.txt. You can install them by running:

pip install -r requirements.txt

We use Zeroscope_v2_576w as our base model. You can cache it locally by running the following Python code:

import torch

from diffusers import DiffusionPipeline

pipe = DiffusionPipeline.from_pretrained("cerspense/zeroscope_v2_576w", torch_dtype=torch.float16)

Building on the text-to-video base model, we additionally trained a camera module that enables camera motion control. The camera module is available on OneDrive or GoogleDrive. Please download it and save it to the ./ckpt directory.

We provide two ways to run inference: a Python notebook or our PyQt5-based UI. See the instructions below:

Open inference.ipynb and follow the step-by-step instructions and comments inside.

We also designed a PyQt5-based UI for interactive use. Here are the instructions:

1. Run the UI launch script ./ui/main_ui.py. Make sure your machine supports graphical display if you are running on a remote server.

   python ui/main_ui.py

   The interface will then appear.

2. Input your prompt in Section A. Instructions on the prompt:

   - Use * to mark the object word(s) and, optionally, the background word by appending * right after the word. For example, "a tiger* and a bear* walking in snow*".

   - If an object consists of more than one word, wrap the words in ( ). E.g., "a (white tiger) walking in (green grassland)".

   - The marks * and ( ) can be used together, e.g., "a tiger* and a (bear) walking in (green grassland)".

   - The marked background word (if any) should always be the last marked word, as in the examples above.

3. [optional] Camera motion: set the camera movement parameters in Section B. Remember to check the enable box first!

4. [optional] Object motion: draw object motion boxes in Section C:

   - Check the enable box at the top to enable this function.

   - On the blank canvas, left-click and drag the mouse to draw a starting box, release the mouse, then left-click and drag again to draw an ending box.

   - When you release the mouse, a straight path is automatically generated between the starting box and the ending box. You can right-click somewhere to adjust this path.

   - Click the "add object" button to add another box pair.

5. [optional] You can change the random seed in Section D. We do not recommend changing the video resolution.

6. In Section E, click "initialize the model" to initialize the models (this only needs to be done once, before generation).

7. After initialization is done, click the "Generate video" button and wait for a while; the output results will then be displayed. You can go back to step 3, 4, or 5 to adjust the inputs and hyperparameters, then generate again.

Some tips:

- If the model generates tightly packed, box-shaped objects, try increasing the attention amplification timestep in Section C to a higher value such as 0.98. You can also decrease the amplification weight to a lower value such as 10.

- The initial noise strongly affects the output. Try different seeds if you do not get the desired result.
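The prompt marks from step 2 and the straight box path from step 4 can be illustrated with a small Python sketch. This is not the repository's code: `parse_marked_prompt` and `interpolate_boxes` are hypothetical helpers, the handling of a `*` placed directly after a closing parenthesis is an assumption, and boxes are assumed to be `(x0, y0, x1, y1)` tuples that move linearly with no right-click path adjustment.

```python
import re
from typing import List, Tuple

# Hypothetical helpers for illustration only; the UI's real parsing and
# path logic may differ.

# Matches "(multi word phrase)" (optionally followed by "*") or "word*".
_MARK = re.compile(r"\(([^()]+)\)\*?|(\w+)\*")


def parse_marked_prompt(prompt: str) -> Tuple[str, List[str]]:
    """Return the prompt with marks removed and the marked phrases in order.

    Per the instructions above, the last marked phrase may be the background.
    """
    marked = [m.group(1) or m.group(2) for m in _MARK.finditer(prompt)]
    clean = _MARK.sub(lambda m: m.group(1) or m.group(2), prompt)
    return clean, marked


Box = Tuple[float, float, float, float]  # assumed layout: (x0, y0, x1, y1)


def interpolate_boxes(start: Box, end: Box, n_frames: int) -> List[Box]:
    """Linearly interpolate a box from `start` to `end` over `n_frames` frames."""
    if n_frames < 2:
        return [start]
    return [
        tuple(s + (e - s) * i / (n_frames - 1) for s, e in zip(start, end))
        for i in range(n_frames)
    ]


if __name__ == "__main__":
    print(parse_marked_prompt("a tiger* and a (bear) walking in (green grassland)"))
    print(interpolate_boxes((0.1, 0.1, 0.3, 0.3), (0.6, 0.6, 0.8, 0.8), 4))
```

Returning the marked phrases in order of appearance keeps the "background is the last marked word" convention easy to apply downstream, since the caller can treat the final entry as the background when one is intended.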

We use a static-shot subset of Movieshot to train the camera motion. We first download the dataset, then use BLIP-2 to generate a caption for each video. Finally, we store the training data in CSV format; see ./data/example_train_data.csv for an example.

The main training script for camera motion is train_cam.py. You may want to go through it before running. We prepared a bash file train_cam_launcher.sh, where you can set the arguments for launching the training script using Accelerator. We list some useful arguments:

- --output_dir: the directory to save training outputs, including validation samples, and checkpoints.

- --train_data_csv: csv file containing training data, see './data/example_train_data.csv' for example.

- --val_data_csv: csv file containing validation data, see './data/example_val_data.csv' for example.

- --n_sample_frames: number of video frames

- --h: video height

- --w: video width

- --validation_interval: number of iterations between validation runs

- --checkpointing_interval: number of iterations between checkpoint saves

- --mixed_precision: one of 'no' (i.e., fp32), 'fp16', or 'bf16' (only on certain GPUs)

- --gradient_checkpointing: enable this to save memory
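As a rough guide to how these arguments fit together, here is a minimal `argparse` sketch that mirrors the flags listed above. It is not copied from train_cam.py: the function name `build_parser`, the argument types, the `required` setting, and the `'no'` default for `--mixed_precision` are all assumptions made for illustration, so check the actual script for the real definitions and defaults.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the documented flags (types are assumed)."""
    p = argparse.ArgumentParser(description="Sketch of camera-training arguments")
    p.add_argument("--output_dir", type=str, required=True,
                   help="directory for validation samples and checkpoints")
    p.add_argument("--train_data_csv", type=str, help="CSV with training data")
    p.add_argument("--val_data_csv", type=str, help="CSV with validation data")
    p.add_argument("--n_sample_frames", type=int, help="number of video frames")
    p.add_argument("--h", type=int, help="video height")
    p.add_argument("--w", type=int, help="video width")
    p.add_argument("--validation_interval", type=int,
                   help="iterations between validation runs")
    p.add_argument("--checkpointing_interval", type=int,
                   help="iterations between checkpoint saves")
    # Default of 'no' is an assumption, not taken from the repository.
    p.add_argument("--mixed_precision", choices=["no", "fp16", "bf16"], default="no")
    p.add_argument("--gradient_checkpointing", action="store_true",
                   help="trade compute for lower memory use")
    return p


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(vars(args))
```

Restricting `--mixed_precision` to the three documented choices makes a mistyped value fail at launch rather than partway through training.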

After setting up, run the bash script to launch the training:

bash train_cam_launcher.sh

@inproceedings{dav24,

author = {Shiyuan Yang and Liang Hou and Haibin Huang and Chongyang Ma and Pengfei Wan and Di Zhang and Xiaodong Chen and Jing Liao},

title = {Direct-a-Video: Customized Video Generation with User-Directed Camera Movement and Object Motion},

booktitle = {Special Interest Group on Computer Graphics and Interactive Techniques Conference Conference Papers '24 (SIGGRAPH Conference Papers '24)},

year = {2024},

location = {Denver, CO, USA},

date = {July 27--August 01, 2024},

publisher = {ACM},

address = {New York, NY, USA},

pages = {12},

doi = {10.1145/3641519.3657481},

}

This repo is mainly built on the Text-to-video diffusers pipeline. Some code snippets in the repo were borrowed from the GLGEN diffusers repo and the DenseDiff repo.