Deep Koalarization

Deep Koalarization: Image Colorization using CNNs and Inception-ResNet-v2 (2017).

Federico Baldassarre*, Diego Gonzalez Morin* and Lucas Rodés Guirao* (* Authors contributed equally)

This project was developed as part of the DD2424 Deep Learning in Data Science course at KTH Royal Institute of Technology, spring 2017.

The code is built using Keras and TensorFlow.

Consider starring this project if you found it useful ⭐!

Citation

If you find Deep Koalarization useful in your research, please consider citing our paper as:

@article{deepkoal2017,
  author = {Federico Baldassarre and Diego Gonzalez-Morin and Lucas Rodes-Guirao},
  title = {Deep-Koalarization: Image Colorization using CNNs and Inception-ResNet-v2},
  journal = {ArXiv:1712.03400},
  url = {https://arxiv.org/abs/1712.03400},
  year = 2017,
  month = dec
}

Abstract

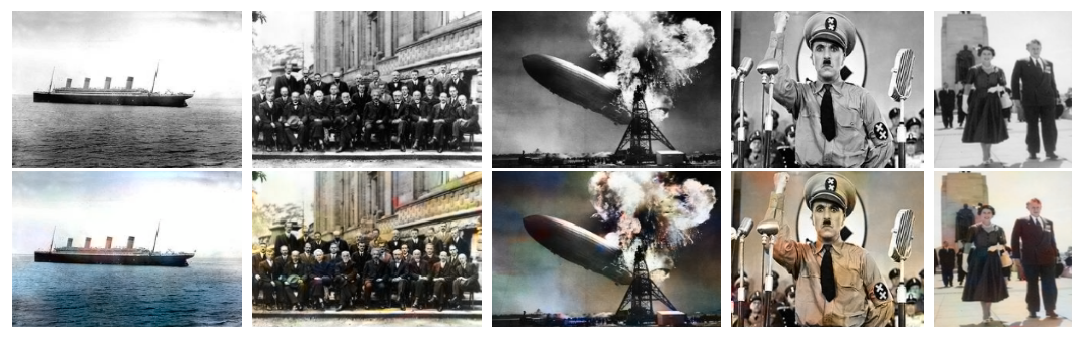

We review some of the most recent approaches to colorize gray-scale images using deep learning methods. Inspired by these, we propose a model which combines a deep Convolutional Neural Network trained from scratch with high-level features extracted from the Inception-ResNet-v2 pre-trained model. Thanks to its fully convolutional architecture, our encoder-decoder model can process images of any size and aspect ratio. Other than presenting the training results, we assess the "public acceptance" of the generated images by means of a user study. Finally, we present a carousel of applications on different types of images, such as historical photographs.

Overview

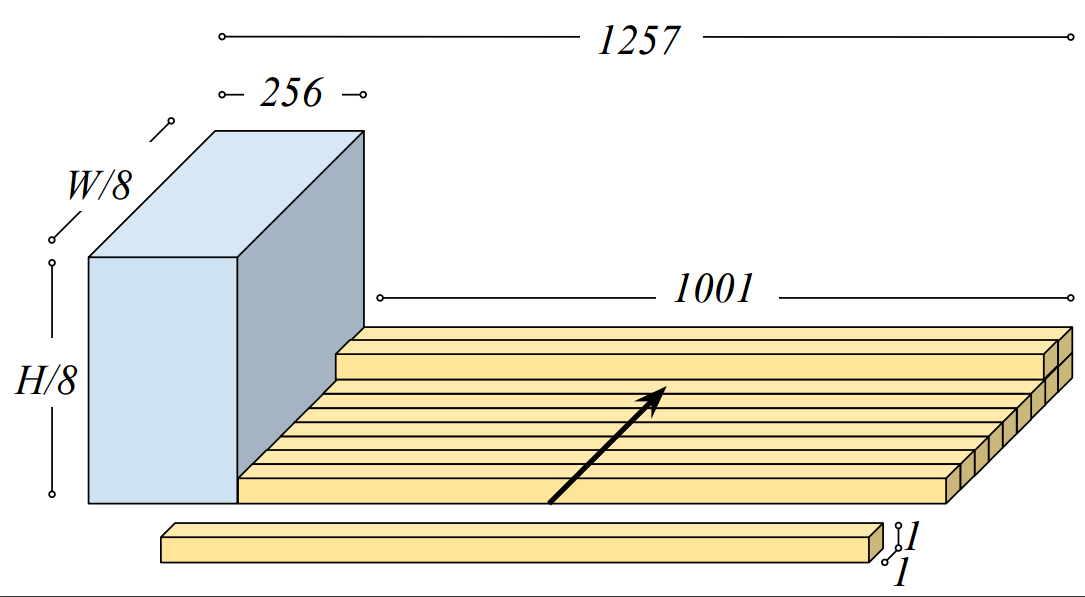

Inspired by Iizuka and Simo-Serra et al. (2016), we combine a deep CNN architecture with Inception-ResNet-v2 pre-trained on the ImageNet dataset, which assists the overall colorization process by extracting high-level features. In particular, Inception-ResNet-v2

The fusion between the fixed-size embedding and the intermediary result of the convolutions is performed by means of replication and stacking as described in Iizuka and Simo-Serra et al. (2016).
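
The replicate-and-stack idea can be illustrated with a small NumPy sketch. This is not the code from this repo: the function name `fuse` and the toy shapes are assumptions chosen only for illustration. The fixed-size embedding is copied to every spatial position of the feature map and then concatenated along the channel axis.

```python
import numpy as np


def fuse(features, embedding):
    """Stack a replicated 1-D embedding onto a feature map.

    features:  array of shape (height, width, channels)
    embedding: array of shape (embedding_size,)
    returns:   array of shape (height, width, channels + embedding_size)
    """
    height, width, _ = features.shape
    # Replicate the embedding at every spatial location (a view, no copy).
    tiled = np.broadcast_to(embedding, (height, width, embedding.shape[0]))
    # Stack the replicated embedding behind the convolutional features.
    return np.concatenate([features, tiled], axis=-1)


# Toy shapes, hypothetical and much smaller than a real network's.
features = np.zeros((4, 6, 8), dtype=np.float32)
embedding = np.arange(3, dtype=np.float32)
fused = fuse(features, embedding)
print(fused.shape)  # (4, 6, 11)
```

Because the embedding is replicated per position rather than reshaped, the same operation works for any spatial size of the feature map.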

We have used the MSE loss as the objective function.
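
As a reminder of what this objective computes, here is a minimal NumPy sketch of the mean squared error between a predicted and a target array. The function name `mse_loss` and the toy values are assumptions for illustration; which tensors the repo actually compares is not stated here.

```python
import numpy as np


def mse_loss(predicted, target):
    """Mean of the squared element-wise differences."""
    return float(np.mean((predicted - target) ** 2))


# Toy values, hypothetical: squared errors are 0.25, 0.25, 0.0 and 1.0.
predicted = np.array([[0.5, -0.5], [0.0, 1.0]])
target = np.zeros((2, 2))
loss = mse_loss(predicted, target)
print(loss)  # 0.375
```

In Keras, the same objective is normally selected by passing `loss='mse'` to `model.compile`.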

The training data for this experiment could come from any source. We decided to use ImageNet, which is nowadays considered the de facto reference for image tasks. This makes it easier for others to replicate our experiments.

Installation

Refer to INSTRUCTIONS to set up and use the code in this repo.

Results

ImageNet

Historical pictures

Thanks to the people who noticed our work!

We are proud when our work gets noticed and helps or inspires other people on their path to knowledge. Here is a list of references we are aware of; some of the authors contacted us, and others we just happened to find online:

- François Chollet tweeted about this project (thank you for Keras)

- Emil Wallnér on FloydHub Blog and freecodecamp

- Amir Kalron on Logz.io Blog

- sparkexpert on CSDN