Official implementation of

- Frequency Dynamic Convolution: Frequency-Adaptive Pattern Recognition for Sound Event Detection (Accepted to INTERSPEECH 2022)

by Hyeonuk Nam, Seong-Hu Kim, Byeong-Yun Ko, Yong-Hwa Park

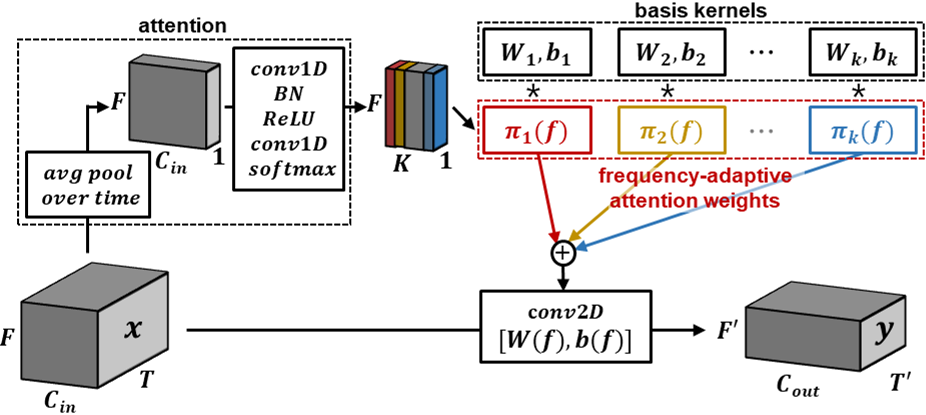

Frequency dynamic convolution applies kernels that adapt to each frequency bin of the input, in order to remove the translation equivariance of 2D convolution along the frequency axis.

- Traditional 2D convolution enforces translation equivariance on time-frequency domain audio data along both the time and frequency axes.

- However, sound events exhibit time-frequency patterns that are translation equivariant along the time axis but not along the frequency axis.

- Thus, frequency dynamic convolution is proposed to remove this physical inconsistency of traditional 2D convolution in sound event detection.
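The idea in the list above can be sketched in PyTorch as follows. This is a minimal illustration, not the code shipped in this repository: the class name `FreqDynamicConv2d`, the method `freq_attention`, the hidden width of the attention branch, and the default values of `n_basis` and `temperature` are all assumptions made for the example. Several basis kernels are applied to the input, and their outputs are mixed with softmax weights that are computed separately for each frequency bin, so the effective kernel differs across frequency while staying shared across time.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class FreqDynamicConv2d(nn.Module):
    """Illustrative sketch: basis kernels mixed with per-frequency-bin weights."""

    def __init__(self, in_ch, out_ch, kernel_size=3, n_basis=4, temperature=1.0):
        super().__init__()
        self.n_basis = n_basis
        self.out_ch = out_ch
        self.temperature = temperature
        # All basis kernels are applied at once by a single ordinary 2D convolution.
        self.basis = nn.Conv2d(in_ch, out_ch * n_basis, kernel_size,
                               padding=kernel_size // 2, bias=False)
        # Attention branch: 1D convolutions along the frequency axis (assumed layout).
        hidden = max(in_ch // 4, 4)
        self.att = nn.Sequential(
            nn.Conv1d(in_ch, hidden, kernel_size, padding=kernel_size // 2),
            nn.BatchNorm1d(hidden),
            nn.ReLU(),
            nn.Conv1d(hidden, n_basis, 1),
        )

    def freq_attention(self, x):
        # x: (batch, channels, time, freq); average over time, keep frequency.
        pooled = x.mean(dim=2)                    # (batch, channels, freq)
        logits = self.att(pooled)                 # (batch, n_basis, freq)
        # Softmax over the basis-kernel dimension, independently per frequency bin.
        return F.softmax(logits / self.temperature, dim=1)

    def forward(self, x):
        b, _, t, f = x.shape
        weights = self.freq_attention(x).view(b, self.n_basis, 1, 1, f)
        y = self.basis(x).view(b, self.n_basis, self.out_ch, t, f)
        # Weighted sum of basis outputs; weights vary with frequency, not with time.
        return (weights * y).sum(dim=1)           # (batch, out_ch, time, freq)


x = torch.randn(2, 8, 10, 12)                     # (batch, channels, time, freq)
layer = FreqDynamicConv2d(in_ch=8, out_ch=16)
print(layer(x).shape)                             # torch.Size([2, 16, 10, 12])
```

Because the mixing weights depend on the frequency index, shifting the input along frequency no longer simply shifts the output, whereas a shift along time still does (up to boundary effects and the time-averaged attention).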

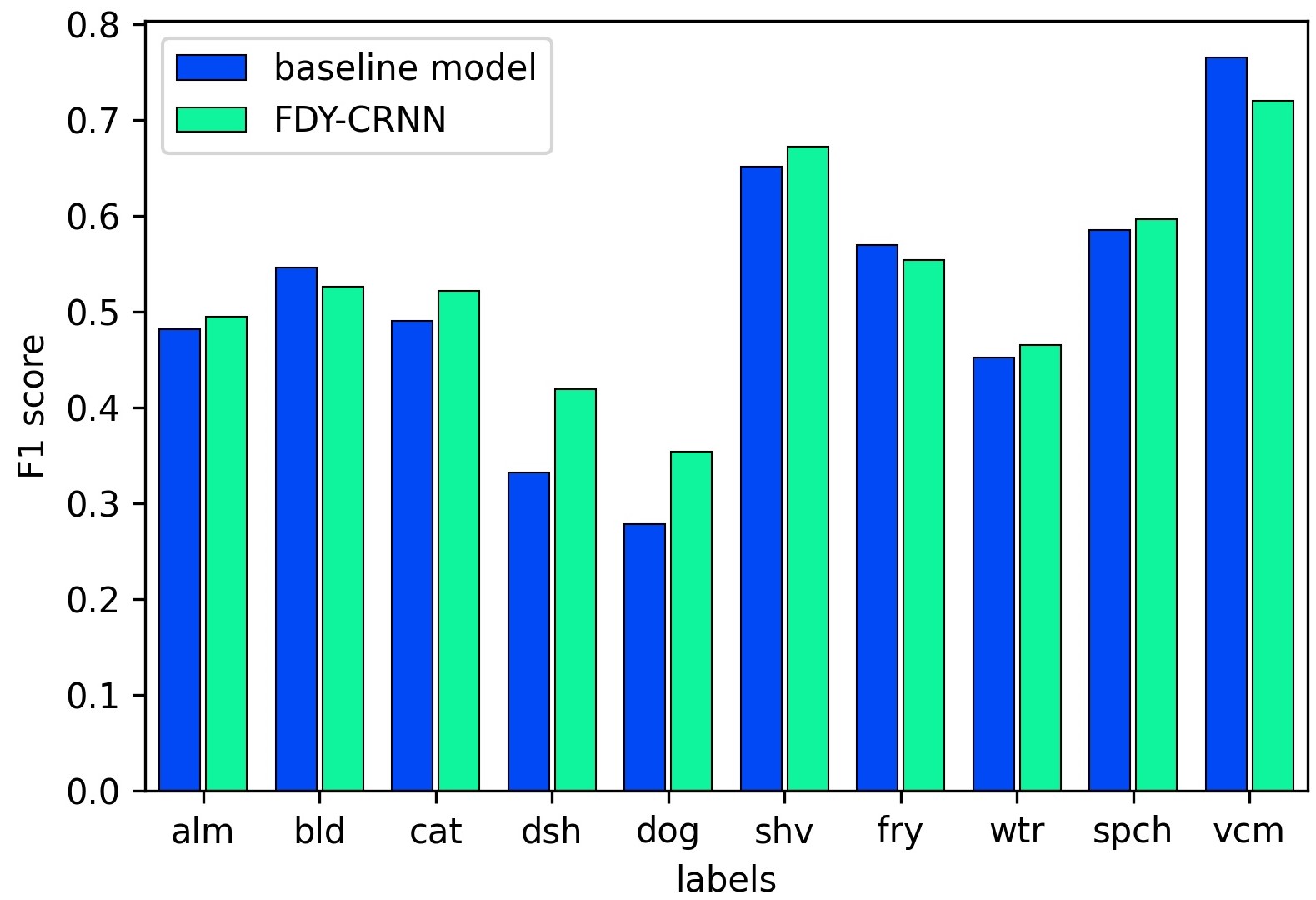

The bar chart above compares the class-wise event-based F1 scores of the CRNN model with and without frequency dynamic convolution. It can be observed that:

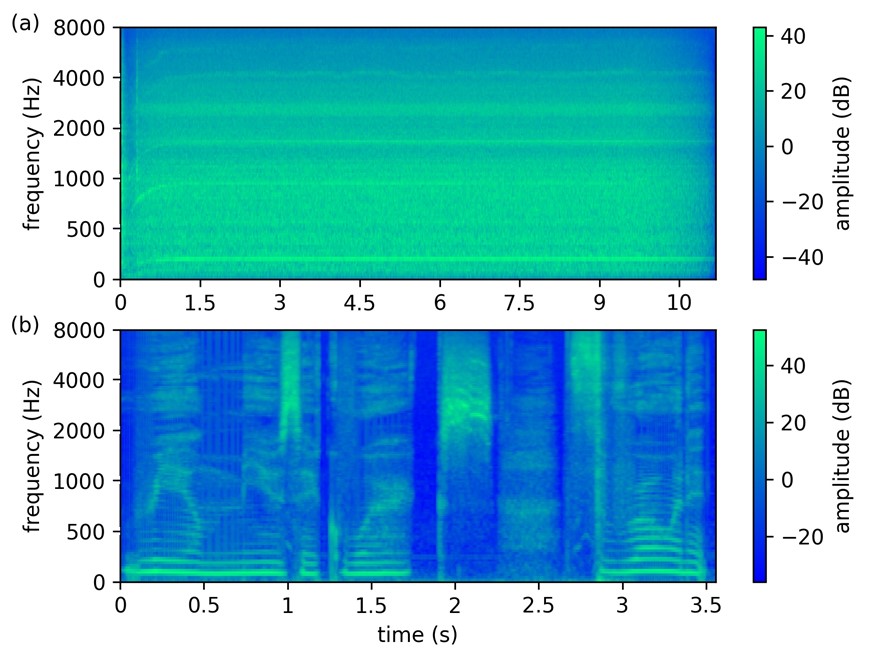

- Quasi-stationary sound events such as blender, frying and vacuum cleaner exhibit simple time-frequency patterns (see example (a), a log mel spectrogram of a vacuum cleaner sound). Thus, frequency dynamic convolution is less effective on these sound events.

- Non-stationary sound events such as alarm/bell ringing, cat, dishes, dog, electric shaver/toothbrush, running water and speech exhibit intricate time-frequency patterns (see example (b), a log mel spectrogram of a speech sound). Thus, frequency dynamic convolution is especially effective on these sound events.

For more detailed explanations, refer to the paper mentioned above, or contact the author of this repo below.

Python 3.7.10 is used with the following libraries:

- pytorch==1.8.0

- pytorch-lightning==1.2.4

- torchaudio==0.8.0

- scipy==1.4.1

- pandas==1.1.3

- numpy==1.19.2

Other requirements are listed in requirements.txt.

You can download the datasets by referring to the DCASE 2021 Task 4 description page or the DCASE 2021 Task 4 baseline. Then, set the dataset directories in the config yaml files accordingly. You need the DESED real datasets (weak/unlabeled in domain/validation/public eval) and the DESED synthetic datasets (train/validation).

You can train a model and save it in the exps folder by running:

python main.py

| Model | PSDS1 | PSDS2 | Collar-based F1 | Intersection-based F1 |
|---|---|---|---|---|
| w/o Dynamic Convolution | 0.416 | 0.640 | 51.8% | 74.4% |
| DY-CRNN | 0.441 | 0.663 | 52.6% | 75.0% |
| TDY-CRNN | 0.415 | 0.652 | 51.2% | 75.1% |
| FDY-CRNN | 0.452 | 0.670 | 53.3% | 75.3% |

- These results are the maximum values of each metric over 16 separate runs for each setting (refer to the paper for details).
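Taking the maximum of each metric separately means that the values in one table row need not come from the same run. The sketch below illustrates that aggregation; the helper `best_per_metric` is hypothetical and the scores are placeholders chosen for the example, not results from the paper or this repository.

```python
from typing import Dict, List


def best_per_metric(runs: List[Dict[str, float]]) -> Dict[str, float]:
    """Return, for every metric, the maximum value observed across runs."""
    metrics = runs[0].keys()
    return {m: max(run[m] for run in runs) for m in metrics}


# Placeholder scores for three hypothetical runs (NOT results from the paper).
runs = [
    {"PSDS1": 0.40, "PSDS2": 0.62},
    {"PSDS1": 0.42, "PSDS2": 0.60},
    {"PSDS1": 0.41, "PSDS2": 0.61},
]
print(best_per_metric(runs))  # {'PSDS1': 0.42, 'PSDS2': 0.62}
```

In this toy case the best PSDS1 comes from the second run and the best PSDS2 from the first, which is why a single trained model can score below the per-metric maxima.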

The trained model is located at exps/new_exp_gpu=0.

You can test the trained model by setting "test_only" to True under "training" in the configuration file and then running main.py. Results for the trained model:

| Model | PSDS1 | PSDS2 | Collar-based F1 | Intersection-based F1 |
|---|---|---|---|---|
| trained FDY-CRNN (student) | 0.447 | 0.667 | 51.5% | 74.4% |
| trained FDY-CRNN (teacher) | 0.442 | 0.657 | 52.1% | 74.0% |

- DCASE 2021 Task 4 baseline

- Sound event detection with FilterAugment

- Temporal Dynamic CNN for text-independent speaker verification

If this repository helped your work, please cite the papers below. The second paper describes FilterAugment, a data augmentation method that is applied in this work.

@article{nam2022freqdynamicconv,
  title={Frequency Dynamic Convolution: Frequency-Adaptive Pattern Recognition for Sound Event Detection},
  author={Hyeonuk Nam and Seong-Hu Kim and Byeong-Yun Ko and Yong-Hwa Park},
  journal={arXiv preprint arXiv:2203.15296},
  year={2022},
}

@INPROCEEDINGS{nam2021filteraugment,
  author={Nam, Hyeonuk and Kim, Seong-Hu and Park, Yong-Hwa},
  booktitle={ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  title={Filteraugment: An Acoustic Environmental Data Augmentation Method},
  year={2022},
  pages={4308-4312},
  doi={10.1109/ICASSP43922.2022.9747680}
}

Please contact Hyeonuk Nam at frednam@kaist.ac.kr for any query.