A PyTorch implementation of Dynamical Isometry and a Mean Field Theory of CNNs: How to Train 10,000-Layer Vanilla Convolutional Neural Networks

-

Install PyTorch

-

Start training (default: Cifar-10)

python train.py- Visualize the learning curve

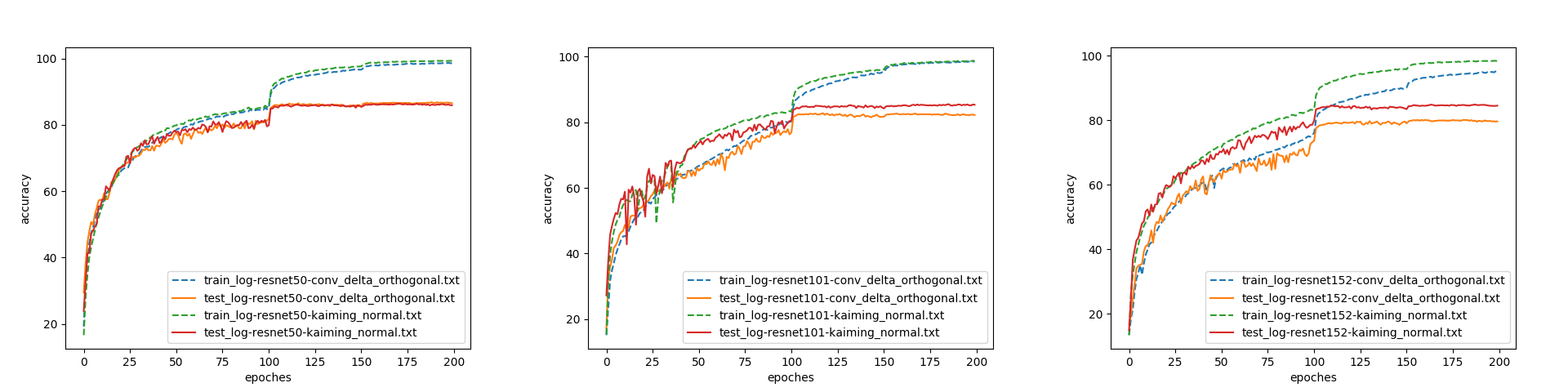

python tools/plot.py log-xxx.txt log-yyy.txtWe conducted experiments on Resnet-50, 101, 152.

To accommodate the initializer, we change the ResNet bottleneck block expansion from 4 to 1, so that `out_channels` is never smaller than `in_channels`.
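The README does not show the initializer itself. Below is a minimal sketch, assuming it refers to the delta-orthogonal initialization described in the paper: every kernel tap of a convolution is zero except the spatial centre, which holds a random matrix with orthonormal columns. The helper name `delta_orthogonal_` is hypothetical and not taken from this repo. The sketch also shows why `out_channels >= in_channels` matters: an `out_channels x in_channels` matrix can only have orthonormal columns when it has at least as many rows as columns.

```python
import torch
import torch.nn as nn


def delta_orthogonal_(weight: torch.Tensor, gain: float = 1.0) -> torch.Tensor:
    """Hypothetical helper: delta-orthogonal init of a Conv2d weight, in place.

    `weight` has shape (out_channels, in_channels, kH, kW). Every kernel tap is
    zeroed except the spatial centre, which gets a matrix with orthonormal columns.
    """
    out_c, in_c, kh, kw = weight.shape
    if out_c < in_c:
        # W^T W = I (norm preservation) is impossible with fewer rows than columns.
        raise ValueError(f"need out_channels >= in_channels, got {out_c} < {in_c}")
    with torch.no_grad():
        weight.zero_()
        centre = torch.empty(out_c, in_c)
        nn.init.orthogonal_(centre, gain=gain)  # orthonormal columns when out_c >= in_c
        weight[:, :, kh // 2, kw // 2] = centre
    return weight


# Usage: a 3x3 conv that widens 16 -> 32 channels.
conv = nn.Conv2d(16, 32, kernel_size=3, padding=1, bias=False)
delta_orthogonal_(conv.weight)

x = torch.randn(2, 16, 8, 8)
y = conv(x)
# Only the centre tap is non-zero, so the conv applies the same orthonormal-column
# matrix at every pixel and preserves the input norm (up to float error).
print(x.norm().item(), y.norm().item())
```

In a torchvision-style bottleneck with expansion 4, the first 1x1 convolution of each non-initial block maps `4 * planes` channels down to `planes`, so this check would reject it; with expansion 1 that convolution maps `planes` to `planes`.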

The learning rate starts at 0.1 and is divided by 10 at epochs 100 and 150; training stops at epoch 200.
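This step schedule can be sketched with PyTorch's built-in `MultiStepLR` scheduler. The optimizer (plain SGD) and the placeholder model are assumptions: the README does not state momentum or weight decay, so none is set here. `lr_trace` is a hypothetical helper that only records the learning rate used in each epoch.

```python
import torch
import torch.nn as nn


def lr_trace(epochs: int = 200) -> list[float]:
    """Return the learning rate used in each epoch under the step schedule."""
    model = nn.Linear(4, 2)  # placeholder standing in for the ResNet
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)  # momentum/weight decay unspecified
    # Multiply the LR by 0.1 (i.e. divide by 10) once epochs 100 and 150 are reached.
    scheduler = torch.optim.lr_scheduler.MultiStepLR(
        optimizer, milestones=[100, 150], gamma=0.1
    )

    lrs = []
    for _ in range(epochs):
        lrs.append(optimizer.param_groups[0]["lr"])
        # ... one epoch of training would go here ...
        optimizer.step()  # no gradients, so a no-op; keeps the call order PyTorch expects
        scheduler.step()  # advance the schedule once per epoch
    return lrs


lrs = lr_trace()
print(lrs[0], lrs[100], lrs[150], len(lrs))
```

Because `MultiStepLR` counts `scheduler.step()` calls, it should be stepped once per epoch rather than once per batch for the milestones to mean epochs.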

More discussion can be found on Reddit. Currently, the theory seems to apply only to vanilla CNNs with tanh activations; extending it to networks with skip connections and ReLU activations, or to other modern architectures, remains an open problem.