Unofficial PyTorch implementation of Masked AutoEncoder

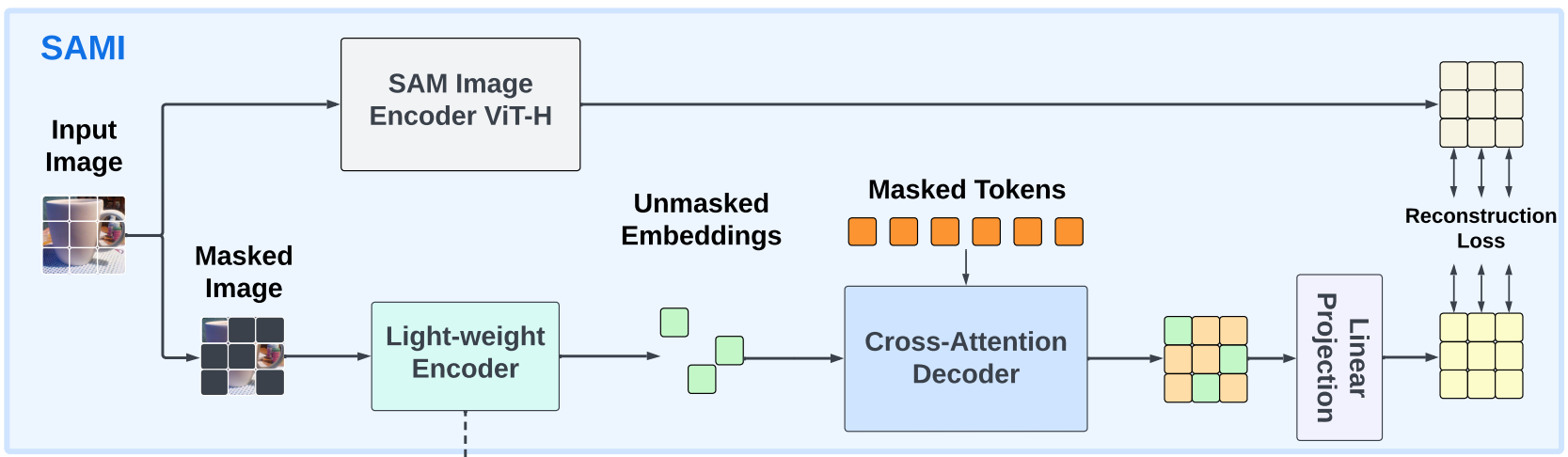

Based on my understanding of the SAMI framework from EfficientSAM and the technical details given in the paper, I have tried to implement SAMI pretraining, which uses SAM's ViT as a teacher to improve the performance of small-scale ViT models such as ViT-Tiny and ViT-Small.
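SAMI follows the MAE recipe of feeding the student encoder only a random subset of image patches. Below is a minimal sketch of MAE-style per-sample random masking in PyTorch, assuming patch tokens of shape `(B, N, D)` and an illustrative 75% mask ratio. The helper name `random_masking` is hypothetical and may not match the code in this repository.

```python
import torch


def random_masking(x: torch.Tensor, mask_ratio: float = 0.75):
    """Sketch of MAE-style per-sample random masking (hypothetical helper).

    x: patch tokens of shape (B, N, D).
    Returns the kept tokens (B, N_keep, D), a mask (B, N) where 1 marks a
    masked (dropped) patch, and indices that restore the original patch order.
    """
    B, N, D = x.shape
    n_keep = int(N * (1 - mask_ratio))

    # One random score per patch; sorting the scores gives a random permutation.
    noise = torch.rand(B, N, device=x.device)
    ids_shuffle = torch.argsort(noise, dim=1)
    ids_restore = torch.argsort(ids_shuffle, dim=1)

    # Keep the first n_keep patches of the permutation.
    ids_keep = ids_shuffle[:, :n_keep]
    x_kept = torch.gather(x, 1, ids_keep.unsqueeze(-1).expand(-1, -1, D))

    # Build the mask in shuffled order, then unshuffle it to the original order.
    mask = torch.ones(B, N, device=x.device)
    mask[:, :n_keep] = 0
    mask = torch.gather(mask, 1, ids_restore)
    return x_kept, mask, ids_restore


tokens = torch.randn(2, 196, 192)  # assumed: 14x14 patches, ViT-Tiny width 192
kept, mask, ids_restore = random_masking(tokens, mask_ratio=0.75)
print(kept.shape, int(mask[0].sum()))  # torch.Size([2, 49, 192]) 147
```

Sorting random noise gives every sample its own permutation in one vectorized call, and `ids_restore` lets a decoder put mask tokens back at their original positions.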

Unfortunately, I currently lack the computing resources to verify whether this implementation reproduces the SAMI results reported in the EfficientSAM paper.

First, follow the instructions in this README file to prepare SAM's ViT checkpoint. It serves as the teacher model that supervises the small-scale ViT during SAMI pretraining.
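In SAMI, the student does not reconstruct pixels as MAE does. It is trained to reconstruct the features produced by the frozen SAM image encoder. The sketch below assumes the student's output tokens (visible tokens plus decoder-filled masked tokens) are already assembled and that the teacher features have been resized to the same token grid. `FeatureReconstructionHead` and `sami_style_loss` are hypothetical names, and the plain per-token MSE is an assumed choice of loss, so check the EfficientSAM paper and the training code for the exact formulation.

```python
import torch
import torch.nn as nn


class FeatureReconstructionHead(nn.Module):
    """Hypothetical linear head mapping student tokens to the teacher's channel width."""

    def __init__(self, student_dim: int, teacher_dim: int):
        super().__init__()
        self.proj = nn.Linear(student_dim, teacher_dim)

    def forward(self, student_tokens: torch.Tensor) -> torch.Tensor:
        return self.proj(student_tokens)


def sami_style_loss(pred, teacher_feats, mask=None):
    """Assumed loss: per-token MSE against frozen teacher features.

    pred, teacher_feats: (B, N, C). mask: optional (B, N), 1 = masked patch.
    """
    # The teacher is frozen, so its features must not receive gradients.
    per_token = (pred - teacher_feats.detach()).pow(2).mean(dim=-1)  # (B, N)
    if mask is None:
        return per_token.mean()
    # Average only over masked tokens, as MAE does for its pixel loss.
    return (per_token * mask).sum() / mask.sum()


# Assumed sizes: ViT-Tiny width 192; SAM's image encoder outputs 256 channels.
student_dim, teacher_dim = 192, 256
head = FeatureReconstructionHead(student_dim, teacher_dim)
student_tokens = torch.randn(2, 196, student_dim, requires_grad=True)
teacher_feats = torch.randn(2, 196, teacher_dim)  # stand-in for SAM ViT-H features
loss = sami_style_loss(head(student_tokens), teacher_feats)
loss.backward()
```

Only the student and the projection head receive gradients; the teacher checkpoint is used purely as a fixed target.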

We provide the bash script `train_pretrain.sh` for pretraining. You can modify its hyperparameters to suit your needs.

- Single GPU

```bash
# bash train_pretrain.sh <model> <teacher model> <batch size> <data> <data path> <world size> <resume>
bash train_pretrain.sh vit_t vit_h 256 imagenet_1k /path/to/imagenet_1k/ 1 None
```

- Multi GPUs

```bash
# bash train_pretrain.sh <model> <teacher model> <batch size> <data> <data path> <world size> <resume>
bash train_pretrain.sh vit_t vit_h 256 imagenet_1k /path/to/imagenet_1k/ 8 None
```

We provide the bash script `train_finetune.sh` for finetuning. You can modify its hyperparameters to suit your needs.

- Single GPU

```bash
# bash train_finetune.sh <model> <batch size> <data> <data path> <world size> <resume>
bash train_finetune.sh vit_t 256 imagenet_1k /path/to/imagenet_1k/ 1 None
```

- Multi GPUs

```bash
# bash train_finetune.sh <model> <batch size> <data> <data path> <world size> <resume>
bash train_finetune.sh vit_t 256 imagenet_1k /path/to/imagenet_1k/ 8 None
```

- Evaluate the top-1 & top-5 accuracy of ViT-Tiny on the CIFAR-10 dataset:

```bash
python train_finetune.py --dataset cifar10 -m vit_t --batch_size 256 --img_size 32 --patch_size 2 --eval --resume path/to/checkpoint
```

- Evaluate the top-1 & top-5 accuracy of ViT-Tiny on the ImageNet-1K dataset:

```bash
python train_finetune.py --dataset imagenet_1k --root /path/to/imagenet_1k -m vit_t --batch_size 256 --img_size 224 --patch_size 16 --eval --resume path/to/checkpoint
```

- We use SAM's ViT-H as the teacher to supervise the small-scale ViT.
- We use the `AttentionPoolingClassifier` as the classifier.
- We finetune the models for 100 epochs on ImageNet-1K.
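The notes above name `AttentionPoolingClassifier` as the classification head; its exact definition lives in this repository's code. As an illustration only, here is a minimal sketch of a common attention-pooling design, assuming a single learnable query that cross-attends over the encoder's patch tokens before a linear layer. The class name `AttentionPoolingHead`, the head count, and the LayerNorm placement are assumptions.

```python
import torch
import torch.nn as nn


class AttentionPoolingHead(nn.Module):
    """Hypothetical attention-pooling classifier: a learnable query attends over
    all patch tokens, and the pooled vector is fed to a linear layer."""

    def __init__(self, dim: int, num_classes: int, num_heads: int = 4):
        super().__init__()
        self.query = nn.Parameter(torch.zeros(1, 1, dim))
        nn.init.trunc_normal_(self.query, std=0.02)
        self.attn = nn.MultiheadAttention(dim, num_heads, batch_first=True)
        self.norm = nn.LayerNorm(dim)
        self.fc = nn.Linear(dim, num_classes)

    def forward(self, tokens: torch.Tensor) -> torch.Tensor:
        # tokens: (B, N, dim) patch tokens from the ViT encoder
        q = self.query.expand(tokens.shape[0], -1, -1)  # (B, 1, dim)
        pooled, _ = self.attn(q, tokens, tokens)         # (B, 1, dim)
        return self.fc(self.norm(pooled.squeeze(1)))     # (B, num_classes)


head = AttentionPoolingHead(dim=192, num_classes=1000)  # assumed ViT-Tiny width
logits = head(torch.randn(2, 196, 192))
print(logits.shape)  # torch.Size([2, 1000])
```

Compared with average pooling or a class token, a learned query lets the head weight the patch tokens it finds most informative.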

| Method | Model | Teacher | Epoch | Top 1 | Weight | MAE weight |
|---|---|---|---|---|---|---|
| MAE | ViT-T | - | 100 | | | |
| MAE | ViT-S | - | 100 | | | |
| SAMI | ViT-T | SAM ViT-H | 100 | | | |
| SAMI | ViT-S | SAM ViT-H | 100 | | | |
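The evaluation commands above report top-1 and top-5 accuracy. As a reference for how such numbers are usually computed, here is a minimal sketch; `topk_accuracy` is a hypothetical helper and not necessarily the code in `train_finetune.py`.

```python
import torch


def topk_accuracy(logits: torch.Tensor, targets: torch.Tensor, ks=(1, 5)):
    """Return top-k accuracy (in percent) for each k in ks."""
    maxk = max(ks)
    # Indices of the maxk highest-scoring classes per sample: (B, maxk)
    _, pred = logits.topk(maxk, dim=1)
    # correct[i, j] is True if the j-th ranked prediction of sample i is the target
    correct = pred.eq(targets.unsqueeze(1))
    return [correct[:, :k].any(dim=1).float().mean().item() * 100.0 for k in ks]


logits = torch.tensor([[0.1, 0.9, 0.0, 0.0, 0.0, 0.0],
                       [0.8, 0.1, 0.05, 0.03, 0.01, 0.01]])
targets = torch.tensor([1, 2])
print(topk_accuracy(logits, targets))  # [50.0, 100.0]
```

The second sample's target (class 2) is ranked third, so it counts toward top-5 but not top-1.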

- We use the small ViT pretrained with SAMI as the backbone of ViTDet.

| Method | Model | Backbone | Epoch | Top 1 | Weight | MAE weight |
|---|---|---|---|---|---|---|
| SAMI | ViTDet | ViT-T | 100 | | | |
| SAMI | ViTDet | ViT-S | 100 | | | |
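ViTDet keeps the ViT backbone plain and single-scale, and builds a multi-scale pyramid only from the backbone's last (stride-16) feature map. Below is a simplified sketch of that "simple feature pyramid", assuming a ViT-Tiny width of 192 and a 224x224 input. It omits the normalization layers and 3x3 convolutions of the original design, and the class name `SimpleFeaturePyramid` is illustrative rather than taken from this repository.

```python
import torch
import torch.nn as nn


class SimpleFeaturePyramid(nn.Module):
    """Simplified sketch: build stride-4/8/16/32 maps from one stride-16 ViT map."""

    def __init__(self, in_dim: int, out_dim: int = 256):
        super().__init__()
        self.scales = nn.ModuleList([
            # stride 4: two 2x upsampling deconvolutions
            nn.Sequential(
                nn.ConvTranspose2d(in_dim, in_dim // 2, 2, stride=2),
                nn.GELU(),
                nn.ConvTranspose2d(in_dim // 2, in_dim // 4, 2, stride=2),
            ),
            # stride 8: one 2x upsampling deconvolution
            nn.ConvTranspose2d(in_dim, in_dim // 2, 2, stride=2),
            # stride 16: keep the ViT map as is
            nn.Identity(),
            # stride 32: 2x downsampling by max pooling
            nn.MaxPool2d(kernel_size=2, stride=2),
        ])
        dims = [in_dim // 4, in_dim // 2, in_dim, in_dim]
        # 1x1 projection of every level to a common width
        self.proj = nn.ModuleList([nn.Conv2d(d, out_dim, 1) for d in dims])

    def forward(self, x: torch.Tensor):
        # x: (B, in_dim, H/16, W/16) feature map reshaped from ViT tokens
        return [proj(scale(x)) for scale, proj in zip(self.scales, self.proj)]


fpn = SimpleFeaturePyramid(in_dim=192)
tokens = torch.randn(2, 14 * 14, 192)  # assumed: 224x224 input, patch size 16
x = tokens.transpose(1, 2).reshape(2, 192, 14, 14)
feats = fpn(x)
print([tuple(f.shape) for f in feats])
# [(2, 256, 56, 56), (2, 256, 28, 28), (2, 256, 14, 14), (2, 256, 7, 7)]
```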

Thanks to Kaiming He for the inspiring work on MAE and for the official MAE source code.