This repository contains the PyTorch implementation of my paper:

Y. Liu, X. Yang, D. Xie, X. Wang, L. Shen, H. Huang, N. Balasubramanian, "Adaptive Activation Network and Functional Regularization for Efficient and Flexible Deep Multi-Task Learning." in Proceedings of the 34th AAAI Conference on Artificial Intelligence (AAAI-20), 2020. [citation]

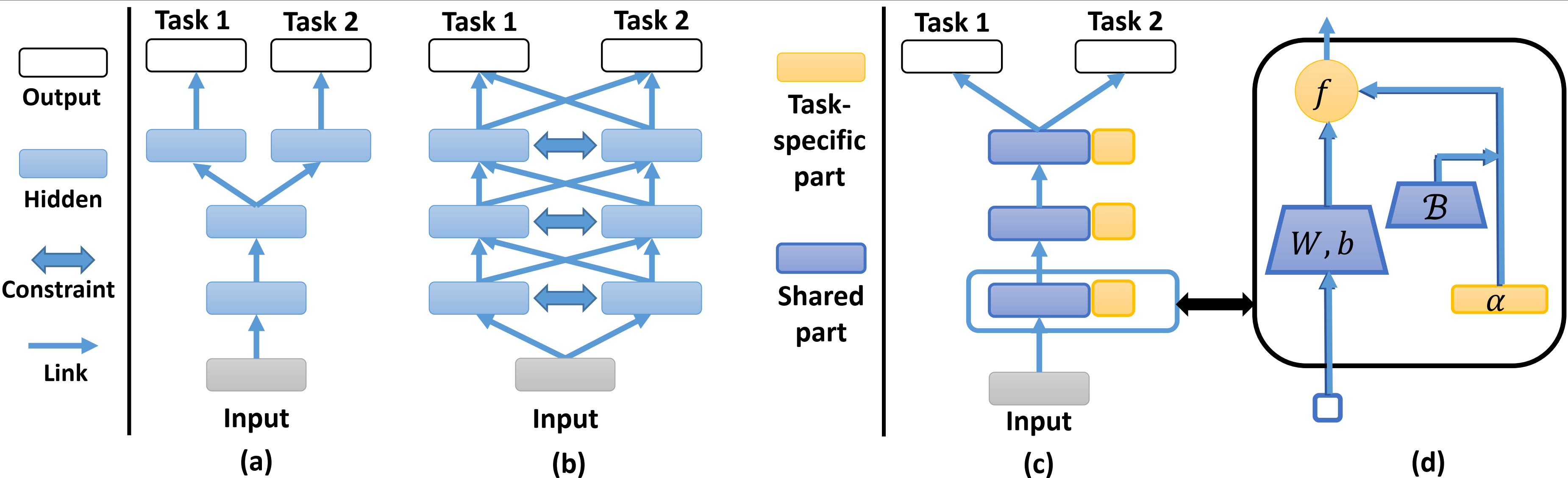

In this paper, we automatically learn the optimal splitting of the network architecture for deep Multi-Task Learning in a scalable way.

- PyTorch == 1.1.0

- PyTorch-Ignite == 0.2.0

- h5py == 2.9.0

In this repository, we conduct comprehensive experiments on two datasets, Youtube8M and Omniglot.

We also implement several recent deep Multi-Task Learning methods, including:

- Multilinear Relationship Network (MRN): a soft-sharing method that models task relationships with a tensor Gaussian distribution. [citation]

- Deep Multi-Task Representation Learning (DMTRL): a soft-sharing method based on tensor decomposition. [citation]

- Soft Layer Ordering (Soft-Order): computes a task-specific ordering of a shared set of hidden layers. [citation]

- Cross-Stitch: a soft-sharing method that computes features as a linear combination of the task-specific hidden layers. [citation]

- Multi-gate Mixture-of-Experts (MMoE): computes the last hidden feature as a gated combination of a set of neural networks (Experts). [citation]

The implementations can be found in the path Youtube8M/models.py.
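As a rough illustration of the gated combination described for MMoE, here is a minimal sketch. It is not the code in Youtube8M/models.py: the class name `TinyMMoE`, the single linear layer per expert, and all sizes are assumptions chosen only to keep the example small.

```python
import torch
import torch.nn as nn


class TinyMMoE(nn.Module):
    """Illustrative MMoE block; the name and sizes are hypothetical."""

    def __init__(self, in_dim, hidden_dim, num_experts, num_tasks):
        super().__init__()
        # Experts are shared by all tasks.
        self.experts = nn.ModuleList(
            [nn.Linear(in_dim, hidden_dim) for _ in range(num_experts)]
        )
        # One gate per task, producing one weight per expert.
        self.gates = nn.ModuleList(
            [nn.Linear(in_dim, num_experts) for _ in range(num_tasks)]
        )

    def forward(self, x):
        # expert_out has shape (batch, num_experts, hidden_dim).
        expert_out = torch.stack([torch.relu(e(x)) for e in self.experts], dim=1)
        features = []
        for gate in self.gates:
            # weights has shape (batch, num_experts, 1) and sums to 1 over experts.
            weights = torch.softmax(gate(x), dim=1).unsqueeze(-1)
            features.append((weights * expert_out).sum(dim=1))
        return features  # one (batch, hidden_dim) tensor per task


model = TinyMMoE(in_dim=8, hidden_dim=4, num_experts=3, num_tasks=2)
task_features = model(torch.randn(5, 8))
```

Each task gets its own feature vector built from the same experts, so the sharing pattern is decided by the learned gate weights rather than fixed in advance.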

In the path Layers, we have implemented the layers of MRN, DMTRL and TAL used in our model. You can use them directly, in the same way as the general PyTorch layers nn.Linear and nn.Conv2d. The only differences are that these layers take some extra parameters to set up the regularization of their respective methods, and that they have an additional member function self.regularization(c), which computes the corresponding regularization term with respect to the Lagrangian coefficient c. The details are given in Layers/README.md.
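The usage pattern can be sketched as follows. Only the method name regularization(c) comes from the description above; the class `L2RegularizedLinear` is a hypothetical stand-in, and its squared-L2 penalty is a placeholder rather than the MRN, DMTRL or TAL regularizer. The real layers take extra constructor parameters, which are documented in Layers/README.md.

```python
import torch
import torch.nn as nn


class L2RegularizedLinear(nn.Linear):
    """Hypothetical stand-in for a layer that exposes regularization(c)."""

    def regularization(self, c):
        # Placeholder penalty: c times the squared L2 norm of the weights.
        return c * self.weight.pow(2).sum()


layer = L2RegularizedLinear(8, 3)
optimizer = torch.optim.SGD(layer.parameters(), lr=0.1)

# Random data, used only to make the sketch runnable.
x = torch.randn(16, 8)
y = torch.randint(0, 3, (16,))

optimizer.zero_grad()
task_loss = nn.functional.cross_entropy(layer(x), y)
reg_loss = layer.regularization(c=1e-3)
# The regularization term is added to the task loss before backpropagation.
total_loss = task_loss + reg_loss
total_loss.backward()
optimizer.step()
```

With several such layers in a model, one would presumably sum the regularization terms of all of them before adding the result to the task loss.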

For detailed instructions on reproducing our experiments, please refer to Youtube8M/README.md and Omniglot/README.md.