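I hope it inspires you in several ways: technical learning, official-account crawling, and monetizing your work as a developer.

- The source code has dozens of modules with a clear structure, and the paid community has detailed configuration docs

- The project introduction is thorough and summarizes the main features and tech stack

- It comes with a carefully edited introduction video and animated GIFs. If you are struggling with official-account data collection at your company, share it with your tech lead; it should save your team time

If, like me, you are an engineer with kids, you surely face multiple challenges and pressures too. I have left a small easter egg at the end of this article, hoping it gives you a bit more confidence in parenting. Raising kids can actually be easy...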

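weixin_crawler is a WeChat official account article crawler built with Scrapy, Flask, Echarts, Elasticsearch, and more. It can collect the complete article history of any official account, including reading data. It ships with an analysis report (sample report) and full-text search; searches across several million documents return almost instantly. weixin_crawler was designed to crawl as many historical posts from WeChat official accounts as possible, as fast as possible.

weixin_crawler is still being maintained and the approach works, so feel free to explore it.

If you want to check whether weixin_crawler is interesting or powerful enough, this introduction video (under 3 minutes) will save you time: https://www.youtube.com/watch?v=CbfLRCV7oeU&t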

- Written in Python3

- Uses Scrapy as the crawling framework and exercises many of its features, which makes it a good open-source project for studying Scrapy in depth

- Flask, Flask-socketio, and Vue power a highly usable UI. It is practical and feature-rich, a handy data assistant for roles such as new-media operations

- Thanks to Scrapy, MongoDB, and Elasticsearch, crawling, storage, and indexing are all simple and efficient; weixin_crawler is not only a crawler but also a search engine

- Can crawl the complete article history of any WeChat official account

- Can crawl article statistics such as reads, likes, rewards, and comments

- Ships with a data analysis report for a single official account

- Full-text search built on Elasticsearch, with multiple search modes and sort modes, plus trend charts for the search results

- Official accounts can be grouped, and a group can be used to limit the search scope

- An original phone-automation method: with the help of adb, weixin_crawler operates an Android phone automatically, so it can run without human supervision

- Multiple WeChat apps can collect at the same time; in theory, crawling speed scales linearly

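| Layer | Component | Technology |
|---|---|---|
| Language | | Python3.6 |
| Frontend | Web framework | Flask / Flask-socketio / gevent |
| Frontend | JS/CSS libraries | Vue / Jquery / W3css / Echarts / Font-awesome |
| Backend | Crawler | Scrapy |
| Backend | Storage | Mongodb / Redis |
| Backend | Index | Elasticsearch |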

weixin_crawler has run successfully on Windows, macOS, and Linux; trying it on Windows first is recommended.

Download mongodb / redis / elasticsearch from their official sites and install them.

Run all three at the same time with their default configuration. In that case mongodb is at localhost:27017 and redis is at localhost:6379 (otherwise you have to configure them in weixin_crawler/project/configs/auth.py).
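Before going further, it can help to confirm that the three services are actually listening. The following is a minimal sketch, not part of weixin_crawler: it only tries to open a TCP connection to each port. The mongodb and redis ports come from the text above; 9200 is Elasticsearch's stock HTTP port and is assumed here, so adjust it if your setup differs.

```python
import socket

# 27017 and 6379 are the defaults named above; 9200 is Elasticsearch's
# stock HTTP port and is an assumption about your installation.
DEFAULT_SERVICES = {
    "mongodb": ("localhost", 27017),
    "redis": ("localhost", 6379),
    "elasticsearch": ("localhost", 9200),
}


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_services(services=DEFAULT_SERVICES):
    """Map each service name to True/False depending on reachability."""
    return {
        name: is_listening(host, port)
        for name, (host, port) in services.items()
    }
```

Calling `print(check_services())` shows which services are reachable. A `False` entry only tells you that nothing accepted the connection; it does not tell you why.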

In order to tokenize Chinese text, elasticsearch-analysis-ik has to be installed for Elasticsearch.
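To show where the IK plugin comes into play, here is a rough sketch of an Elasticsearch query body that asks for the `ik_smart` analyzer (one of the two analyzers the plugin provides, the other being `ik_max_word`). The field names `content` and `read_num` are hypothetical and are not taken from weixin_crawler's actual index mapping.

```python
def build_search_body(keywords, phrase=False, sort_field=None, size=10):
    """Build an Elasticsearch query body for a Chinese full-text search.

    `content` is a hypothetical text field; replace it with the field
    your own index uses.
    """
    # "match" finds documents containing any of the analyzed terms,
    # "match_phrase" requires the terms to appear together in order.
    kind = "match_phrase" if phrase else "match"
    body = {
        "query": {kind: {"content": {"query": keywords, "analyzer": "ik_smart"}}},
        "size": size,
    }
    if sort_field is not None:
        # Sort by a numeric field (for example a read count), highest first.
        body["sort"] = [{sort_field: {"order": "desc"}}]
    return body
```

The returned dictionary can be serialized with `json.dumps` and sent to the `_search` endpoint of an index. Without `sort_field`, Elasticsearch orders hits by relevance score.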

Install nodejs, then run npm install anyproxy redis in weixin_crawler/proxy.

cd to weixin_crawler/proxy and run node proxy.js.

Install the anyproxy HTTPS CA certificate on both the computer and the phone.

If you are not sure how to use anyproxy, see its documentation.

NOTE: you may not be able to install every package simply by running pip install -r requirements.txt; twisted, which scrapy requires, is one that can fail. When a Python package (twisted, for instance) fails to install, there is always a fallback: download the right version of the package to your drive and run $ pip install package_name

I am not sure whether your Python environment will raise other package-not-found errors; if it does, just install whichever package is reported missing.
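One way to find out which packages need the manual treatment is to install the requirements one at a time and collect the failures. This is only a sketch under simple assumptions: it skips comment lines and pip option lines (those starting with `-`), and the `runner` parameter is a hypothetical hook added so the function can be exercised without really calling pip.

```python
import subprocess
import sys


def parse_requirements(text):
    """Return requirement specifiers, skipping blanks, comments and options."""
    requirements = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or line.startswith("-"):
            continue
        # Drop a trailing inline comment such as "Twisted  # note".
        line = line.split(" #", 1)[0].strip()
        requirements.append(line)
    return requirements


def install_each(requirements, runner=subprocess.run):
    """Install requirements one by one and return the ones that failed."""
    failed = []
    for requirement in requirements:
        # Use the current interpreter's pip so the right environment is hit.
        result = runner([sys.executable, "-m", "pip", "install", requirement])
        if result.returncode != 0:
            failed.append(requirement)
    return failed
```

For example, `install_each(parse_requirements(open("requirements.txt").read()))` returns the list of packages that still need to be downloaded and installed by hand.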

scrapy: `Python36\Lib\site-packages\scrapy\http\request\__init__.py` --> `weixin_crawler\source_code\request\__init__.py`

scrapy: `Python36\Lib\site-packages\scrapy\http\response\__init__.py` --> `weixin_crawler\source_code\response\__init__.py`

pyecharts: `Python36\Lib\site-packages\pyecharts\base.py` --> `weixin_crawler\source_code\base.py`. In this case the function get_echarts_options is added at line 106.
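If the arrows above are read as "replace the installed file with the copy shipped in weixin_crawler\source_code" (an assumption; the text does not spell out the direction), the swap could be scripted roughly as follows. The helper name `replace_with_backup` is hypothetical. It keeps a `.bak` copy of the original file so the change can be undone.

```python
import shutil
from pathlib import Path


def replace_with_backup(installed, patched):
    """Overwrite `installed` with `patched`, keeping a one-time .bak copy."""
    installed = Path(installed)
    patched = Path(patched)
    if not patched.is_file():
        raise FileNotFoundError(patched)
    backup = installed.with_name(installed.name + ".bak")
    # Back up only once, so a second run does not overwrite the
    # pristine original with an already patched file.
    if installed.exists() and not backup.exists():
        shutil.copy2(installed, backup)
    shutil.copy2(patched, installed)
    return backup
```

The installed path can be located without hard-coding `Python36`, for example with `Path(scrapy.__file__).parent / "http" / "request" / "__init__.py"` after `import scrapy`. Because the patched files target specific library versions, replacing files from a different version may break the library.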

If you want weixin_crawler to work automatically, the following steps are necessary; otherwise you have to operate the phone manually to generate the request data that anyproxy will capture.

Install adb and add it to your path (on Windows, for example).

Install an Android emulator (NOX suggested) or plug in your phone, and make sure you can operate it with adb from the command line.

If multiple phones are connected to your computer, you have to find out their adb ports, which will be used when adding a crawler.

adb does not support Chinese input, which is bad news for searching WeChat official accounts. In order to input Chinese, adb keyboard has to be installed on your Android phone and set as the default input method; more is here.
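The two adb chores above, finding connected devices and typing Chinese, can be sketched as follows. This is an illustration rather than the project's code. It assumes the "adb keyboard" mentioned above is the ADBKeyBoard app, which accepts text through an `ADB_INPUT_TEXT` broadcast; the function names are made up for this example.

```python
import shlex


def parse_adb_devices(output):
    """Extract the serials of ready devices from `adb devices` output."""
    serials = []
    for line in output.splitlines():
        parts = line.split()
        # Device lines look like "<serial>\tdevice"; the header line and
        # devices in other states (offline, unauthorized) are skipped.
        if len(parts) == 2 and parts[1] == "device":
            serials.append(parts[0])
    return serials


def chinese_input_cmd(serial, text):
    """Build the adb command that sends `text` via the ADBKeyBoard app."""
    # adb hands the arguments to a shell on the device, so the text is
    # quoted to survive spaces and special characters.
    return [
        "adb", "-s", serial, "shell", "am", "broadcast",
        "-a", "ADB_INPUT_TEXT", "--es", "msg", shlex.quote(text),
    ]
```

The output of `subprocess.run(["adb", "devices"], capture_output=True, text=True).stdout` can be passed to `parse_adb_devices`, and each command list can be executed with `subprocess.run(cmd, check=True)`.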

Why can weixin_crawler work automatically? Here are the reasons:

- WeChat version 7.03 or lower is required

- To crawl a WeChat official account, you have to search for the account on your phone and tap its "全部消息" (All Messages) entry, which opens a message list; scrolling down loads more of the list. Tap any message in the list if you want to crawl the account's reading data

- Given the nickname of a WeChat official account, weixin_crawler operates the WeChat app installed on a phone while anyproxy listens in the background. In this way weixin_crawler gets all the request data sent by the WeChat app, and then it is showtime for scrapy

- As you might expect, for weixin_crawler to operate the WeChat app we have to tell adb where to tap, swipe, and type; most of these locations are defined in weixin_crawler/project/phone_operate/config.py. By the way, phone_operate operates WeChat the way a human would: its eyes are the Baidu OCR API and predefined tap areas, and its fingers are adb
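The "predefined tap areas plus adb" idea in the last bullet can be pictured with a small sketch. Everything here is hypothetical: the area names, the coordinates, and the step order are made up for illustration and do not reproduce weixin_crawler/project/phone_operate/config.py. Only the `adb shell input tap` and `adb shell input swipe` commands themselves are standard.

```python
# Hypothetical tap targets (x, y) in pixels for one screen resolution.
TAP_AREAS = {
    "search_box": (360, 90),
    "first_result": (360, 300),
    "all_messages": (360, 1100),
}


def tap(serial, area):
    """Build the adb command that taps a named area."""
    x, y = TAP_AREAS[area]
    return ["adb", "-s", serial, "shell", "input", "tap", str(x), str(y)]


def swipe_up(serial, duration_ms=300):
    """Build the adb command that swipes up to load more messages."""
    return [
        "adb", "-s", serial, "shell", "input", "swipe",
        "360", "1000", "360", "300", str(duration_ms),
    ]


def plan_account_visit(serial, scrolls=3):
    """List the commands that open an account's message list and scroll it."""
    steps = [
        tap(serial, "search_box"),
        # Typing the account nickname would happen here (not shown).
        tap(serial, "first_result"),
        tap(serial, "all_messages"),
    ]
    steps += [swipe_up(serial) for _ in range(scrolls)]
    return steps
```

Each step is a plain argument list, so the plan can be printed and reviewed first and then executed with `subprocess.run(step, check=True)`. Fixed coordinates only work for one screen layout, which is presumably why the project pairs them with OCR.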

$ cd weixin_crawler/project/

$ python(3) ./main.py

Now open your browser; everything you need is at localhost:5000.

You may get stuck somewhere in this long list of steps. If so, join our community for help, and tell us what you have done and what kind of error you ran into.

Let's explore the world at localhost:5000 together.

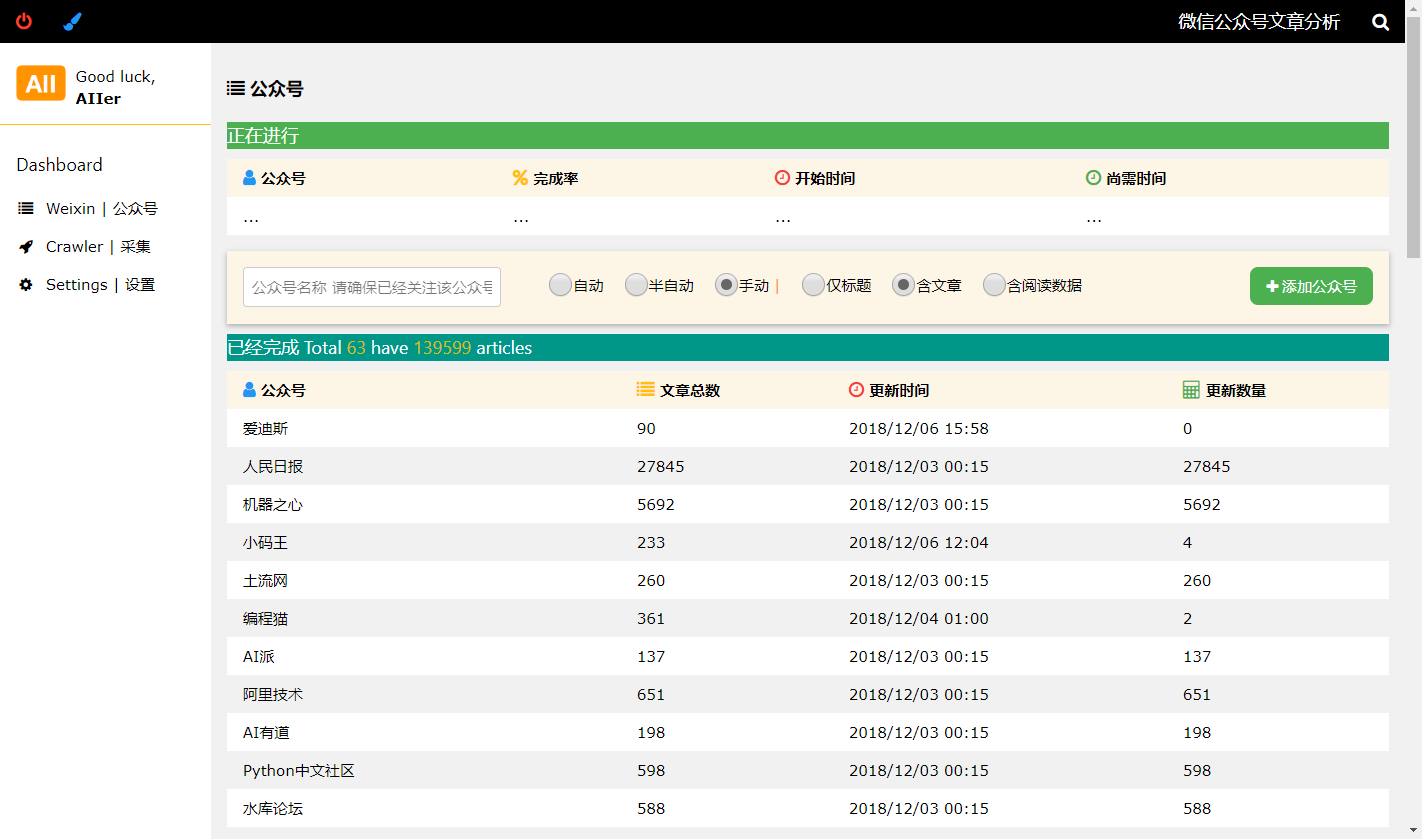

Main UI

Adding a crawl task for an official account, and the list of official accounts already crawled

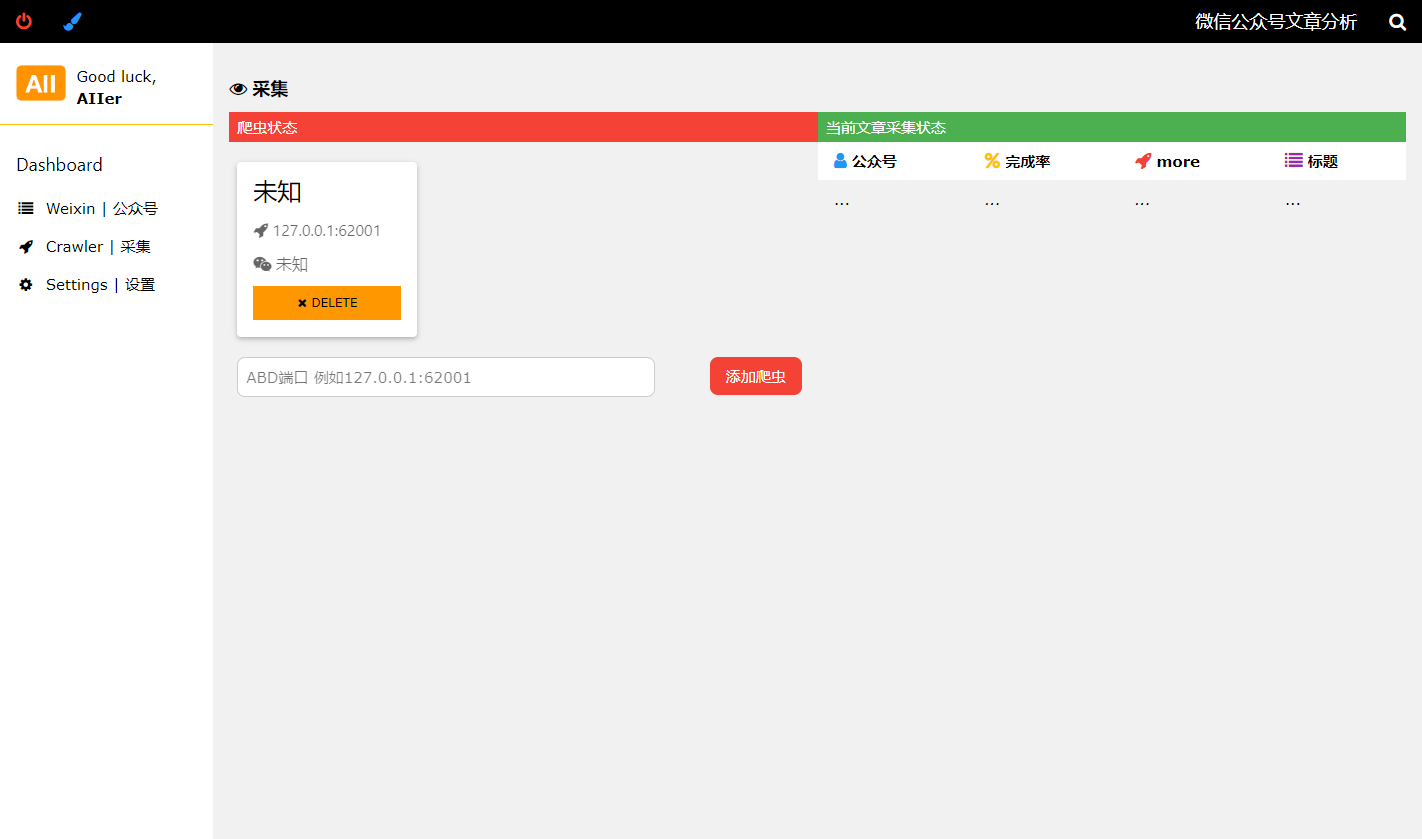

Crawler page

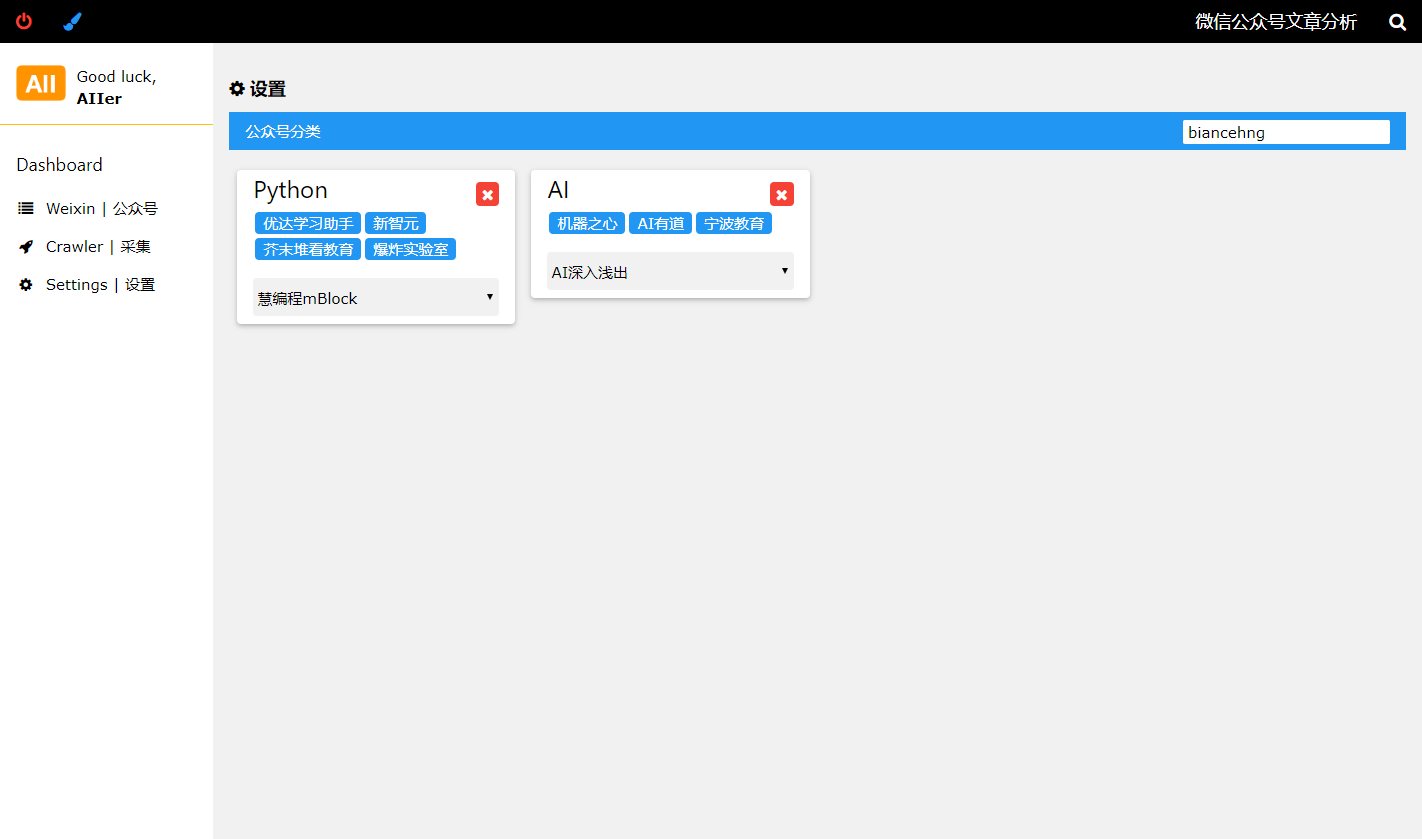

Settings page

Historical article list of an official account

Report

Search

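I run a paid community alongside weixin_crawler. It contains detailed configuration docs and the history of other developers' questions and discussions, and it will save you a lot of time.

If you have more ideas about crawling WeChat official accounts, you are welcome to discuss them with me in depth in the community.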

| Add the author on WeChat | Join the paid community |
|---|---|
| | |

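Making money in business depends on information gaps, and raising and educating children has its own information asymmetry.

There is a lot of content, but you don't need to set aside dedicated time to study it. Just listen casually while cooking, walking, riding the bus or subway, driving, or doing chores; a single sentence might change your child's life.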