To appear in NeurIPS 2021.

[paper][Poster & Video][arXiv][code] [reviews]

Yahui Liu1,3, Enver Sangineto1, Wei Bi2, Nicu Sebe1, Bruno Lepri3, Marco De Nadai3

1University of Trento, Italy, 2Tencent AI Lab, China, 3Bruno Kessler Foundation, Italy.

| Dataset | Download Link |
|---|---|
| ImageNet | train, val |
| CIFAR-10 | all |
| CIFAR-100 | all |
| SVHN | train, test, extra |
| Oxford-Flower102 | images, labels, splits |
| Clipart | images, train_list, test_list |
| Infograph | images, train_list, test_list |
| Painting | images, train_list, test_list |
| Quickdraw | images, train_list, test_list |
| Real | images, train_list, test_list |
| Sketch | images, train_list, test_list |

- Download the datasets and pre-process some of them (i.e., ImageNet and DomainNet) using the code in the `scripts` folder.
- The datasets are expected to have the following structure (except CIFAR-10/100 and SVHN):

dataset_name
  |__train
  |    |__category1
  |    |    |__xxx.jpg
  |    |    |__...
  |    |__category2
  |    |    |__xxx.jpg
  |    |    |__...
  |    |__...
  |__val
       |__category1
       |    |__xxx.jpg
       |    |__...
       |__category2
       |    |__xxx.jpg
       |    |__...
       |__...
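Before launching a long training run, it can help to confirm that a prepared dataset matches the layout above. The following is a minimal sketch using only the Python standard library; it is not part of this repository. The helper names `summarize_split` and `check_layout` and the accepted image extensions are assumptions made for illustration.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your data.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def summarize_split(root, split):
    """Return {category: image_count} for root/split/<category>/<image>."""
    split_dir = Path(root) / split
    if not split_dir.is_dir():
        raise FileNotFoundError(f"missing split directory: {split_dir}")
    counts = {}
    for category_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        counts[category_dir.name] = sum(
            1
            for f in category_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


def check_layout(root):
    """Return a list of problems found in the train/val layout (empty if none)."""
    train = summarize_split(root, "train")
    val = summarize_split(root, "val")
    problems = []
    # Both splits should contain the same category folders.
    if set(train) != set(val):
        problems.append(f"category mismatch: {sorted(set(train) ^ set(val))}")
    # Every category folder should contain at least one image.
    for split, counts in (("train", train), ("val", val)):
        empty = [name for name, n in counts.items() if n == 0]
        if empty:
            problems.append(f"empty categories in {split}: {empty}")
    return problems
```

Calling `check_layout("dataset_name")` returns an empty list when both splits exist, share the same category folders, and every category holds at least one image.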

After preparing the datasets, we can start the training on 8 NVIDIA V100 GPUs:

sh train.sh

We can also load the pre-trained model and test the performance:

sh eval.sh

For fast evaluation, we present the results of Swin-T trained for 100 epochs on various datasets as an example. Note that we save the model every 5 epochs during training, so the attached best models may differ slightly from the reported performance.

| Datasets | Baseline | Ours |
|---|---|---|
| CIFAR-10 | 59.47 | 83.89 |
| CIFAR-100 | 53.28 | 66.23 |
| SVHN | 71.60 | 94.23 |
| Flowers102 | 34.51 | 39.37 |
| Clipart | 38.05 | 47.47 |
| Infograph | 8.20 | 10.16 |
| Painting | 35.92 | 41.86 |
| Quickdraw | 24.08 | 69.41 |
| Real | 73.47 | 75.59 |
| Sketch | 11.97 | 38.55 |
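To see at a glance where the method helps most, the absolute gain per dataset can be computed from the table above. This is a small illustrative sketch, not part of the repository. The numbers are copied verbatim from the table, and the names `RESULTS`, `gains`, and `rank_by_gain` are assumptions introduced here.

```python
# (baseline, ours) pairs copied from the results table above.
RESULTS = {
    "CIFAR-10": (59.47, 83.89),
    "CIFAR-100": (53.28, 66.23),
    "SVHN": (71.60, 94.23),
    "Flowers102": (34.51, 39.37),
    "Clipart": (38.05, 47.47),
    "Infograph": (8.20, 10.16),
    "Painting": (35.92, 41.86),
    "Quickdraw": (24.08, 69.41),
    "Real": (73.47, 75.59),
    "Sketch": (11.97, 38.55),
}


def gains(results):
    """Absolute difference (ours - baseline) per dataset, rounded to 2 decimals."""
    return {name: round(ours - base, 2) for name, (base, ours) in results.items()}


def rank_by_gain(results):
    """Datasets sorted from largest to smallest absolute gain."""
    return sorted(gains(results).items(), key=lambda kv: kv[1], reverse=True)


for name, gain in rank_by_gain(RESULTS):
    print(f"{name:<12} +{gain:.2f}")
```

On these numbers the largest gain is on Quickdraw and the smallest on Infograph.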

We provide a demo to download the pretrained models from Google Drive directly:

python3 ./scripts/collect_models.py

This code is heavily based on Swin-Transformer. Thanks to the contributors of that project.

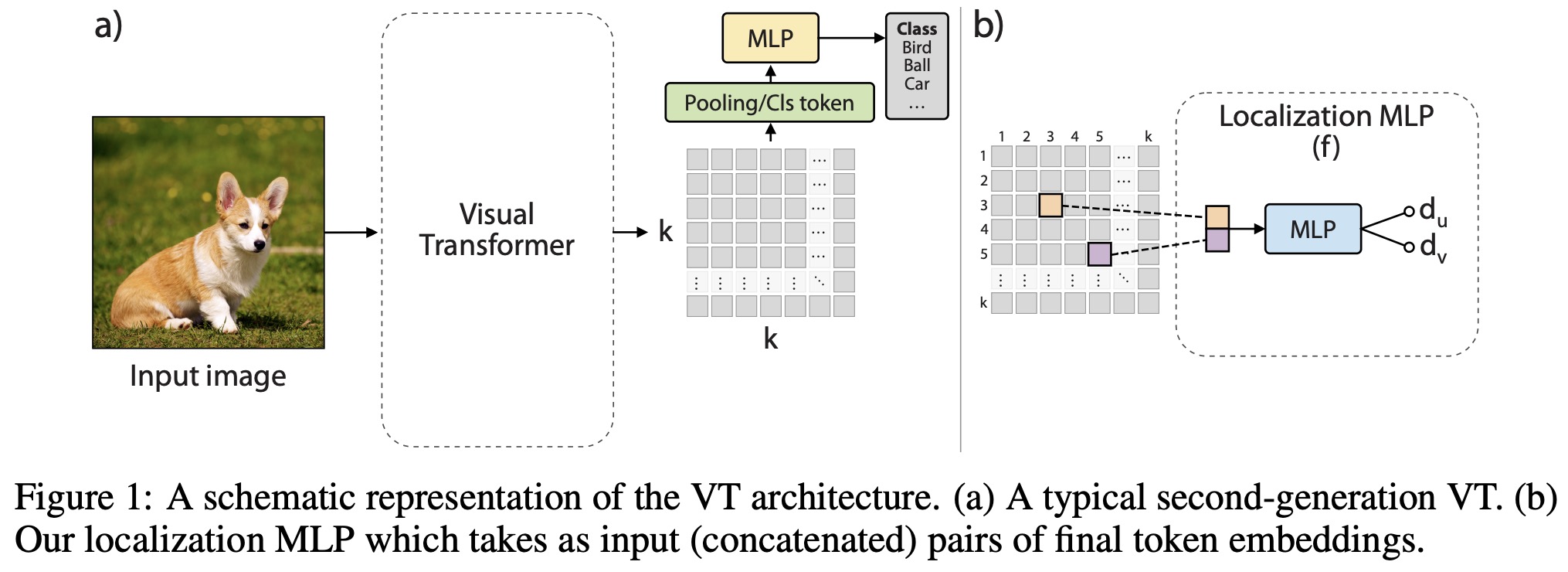

@InProceedings{liu2021efficient,
  author    = {Liu, Yahui and Sangineto, Enver and Bi, Wei and Sebe, Nicu and Lepri, Bruno and De Nadai, Marco},
  title     = {Efficient Training of Visual Transformers with Small Datasets},
  booktitle = {Conference on Neural Information Processing Systems (NeurIPS)},
  year      = {2021}
}

If you have any questions, please contact me without hesitation (yahui.cvrs AT gmail.com).