Materials for paper "Are large language models temporally grounded?" (pdf)

Create the conda environment from the provided llama-hf_environment.yml and activate it:

conda env create -f llama-hf_environment.yml

conda activate llama-hf

Our experiments require inference with the following OpenAI models:

davinci, text-davinci-002, text-davinci-003.

You will need your own OpenAI API key, with access to these models, set up in advance.
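Before launching the GPT scripts, it can help to confirm that a key is visible to your shell. The following is a minimal sketch, not part of this repository. It assumes the key is exported as OPENAI_API_KEY, which is the variable the official openai Python client reads by default; whether the run-*-gpt.sh scripts read the same variable is an assumption, so check the scripts themselves. `has_openai_key` is a hypothetical helper name.

```python
import os


def has_openai_key(env=None):
    """Return True if a non-empty OPENAI_API_KEY is present (assumed variable name)."""
    env = os.environ if env is None else env
    return bool(env.get("OPENAI_API_KEY", "").strip())


if __name__ == "__main__":
    if has_openai_key():
        print("OPENAI_API_KEY found")
    else:
        print("OPENAI_API_KEY is not set; export it before running the GPT scripts")
```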

Our experiments also require inference with the following LLaMA models:

LLaMA-7B, LLaMA-13B, LLaMA-33B, LLaMA-65B, LLaMA-2-7B, LLaMA-2-13B, LLaMA-2-70B, LLaMA-2-7B-chat, LLaMA-2-13B-chat, LLaMA-2-70B-chat

We recommend downloading all model files from the Hugging Face Hub to a local path; see here for LLaMA and here for LLaMA-2.
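One way to fetch a full model repository into a local folder is `snapshot_download` from the huggingface_hub package. This is a sketch under assumptions, not the authors' procedure: the repo id `meta-llama/Llama-2-7b-hf` and the `models` root folder are illustrative choices, and `local_model_dir` and `download_model` are hypothetical helper names. Use the repositories linked in this README and the path your run scripts expect.

```python
from pathlib import Path


def local_model_dir(root, repo_id):
    """Map a Hub repo id such as 'meta-llama/Llama-2-7b-hf' to a folder under root."""
    return Path(root) / repo_id.split("/")[-1]


def download_model(repo_id, root="models"):
    """Download every file of a Hub repo into a local folder and return its path."""
    # Imported lazily so the path helper works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    target = local_model_dir(root, repo_id)
    # Gated repos (such as LLaMA-2) need an approved account and a prior login.
    snapshot_download(repo_id=repo_id, local_dir=str(target))
    return target


# Example (needs network access and access rights to the repo):
# download_model("meta-llama/Llama-2-7b-hf", root="models")
```

Keeping one folder per model under a common root makes it easy to point the LLaMA run scripts at a local path instead of downloading at inference time.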

Use the following scripts to test GPT and LLaMA models with zero/few-shot prompting:

sh run-mctaco-gpt.sh

sh run-mctaco-llama.sh

Our evaluation script is based on the original McTACO evaluation, so we recommend familiarizing yourself with the original repository first. Run it with:

sh eval-mctaco.sh

Use the following scripts to test GPT and LLaMA models with few-shot prompting:

sh run-caters-gpt.sh

sh run-caters-llama.sh

Our evaluation script strictly follows the evaluation of the temporal-bart model. Again, we recommend familiarizing yourself with its repository.

To run the evaluation, run:

python3 eval-caters.py $OUTPUT_PATH $MODEL_NAME

Taking Llama-2-70b-chat-hf as an example,

python3 eval-caters.py llama-output/caters/caters-fs-pt1-output-icl3/ Llama-2-70b-chat-hf

Use the following script for inference with GPT:

sh run-tempeval-gpt.sh

Use the following script for LLaMA models. It covers zero/few-shot prompting with likelihood-based or decoding-based evaluation, as well as the chain-of-thought experiments:

sh run-tempeval-llama.sh

To run the bi-directional evaluation, which checks the consistency of the model's reasoning, run:

python3 eval-tempeval-bi.py $OUTPUT_PATH $MODEL_NAME

Taking Llama-2-70b-chat-hf as an example,

python3 eval-tempeval-bi.py llama-output/tempeval-qa-bi/fs-bi-pt1-icl3-output-likelihood/ Llama-2-70b-chat-hf

To evaluate the reasoning performance of LLaMA with chain-of-thought prompting, run:

python3 eval-tempeval-bi-cot.py $OUTPUT_PATH $MODEL_NAME

Taking Llama-2-70b-chat-hf as an example,

python3 eval-tempeval-bi-cot.py llama-output/tempeval-qa-bi/fs-bi-pt1-icl3-cot-output-likelihood/ Llama-2-70b-chat-hf
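The three evaluation scripts above share the same calling convention (output path, then model name), so they can be driven from one small wrapper. This is a hedged sketch rather than a script shipped with the repository: `build_eval_command`, `run_eval`, and `run_all` are hypothetical helpers, and the paths are the Llama-2-70b-chat-hf examples given in this README. The folder layout for other models is not assumed here.

```python
import subprocess
import sys


def build_eval_command(script, output_path, model_name):
    """Build the argument list for one run: interpreter, script, output dir, model name."""
    return [sys.executable, script, output_path, model_name]


def run_eval(script, output_path, model_name):
    """Run one evaluation script and raise if it exits with a non-zero status."""
    subprocess.run(build_eval_command(script, output_path, model_name), check=True)


# The three example invocations from this README.
RUNS = [
    ("eval-caters.py", "llama-output/caters/caters-fs-pt1-output-icl3/"),
    ("eval-tempeval-bi.py", "llama-output/tempeval-qa-bi/fs-bi-pt1-icl3-output-likelihood/"),
    ("eval-tempeval-bi-cot.py", "llama-output/tempeval-qa-bi/fs-bi-pt1-icl3-cot-output-likelihood/"),
]


def run_all(model_name="Llama-2-70b-chat-hf"):
    """Run every evaluation in RUNS for one model, stopping at the first failure."""
    for script, output_path in RUNS:
        run_eval(script, output_path, model_name)


# Call run_all() from the repository root, inside the llama-hf environment.
```

Using `sys.executable` keeps the evaluation in the same interpreter (and conda environment) that launched the wrapper.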

We provide all model outputs for all datasets in gpt-output and llama-output so that the results reported in our paper can be reproduced.

@misc{qiu2023large,

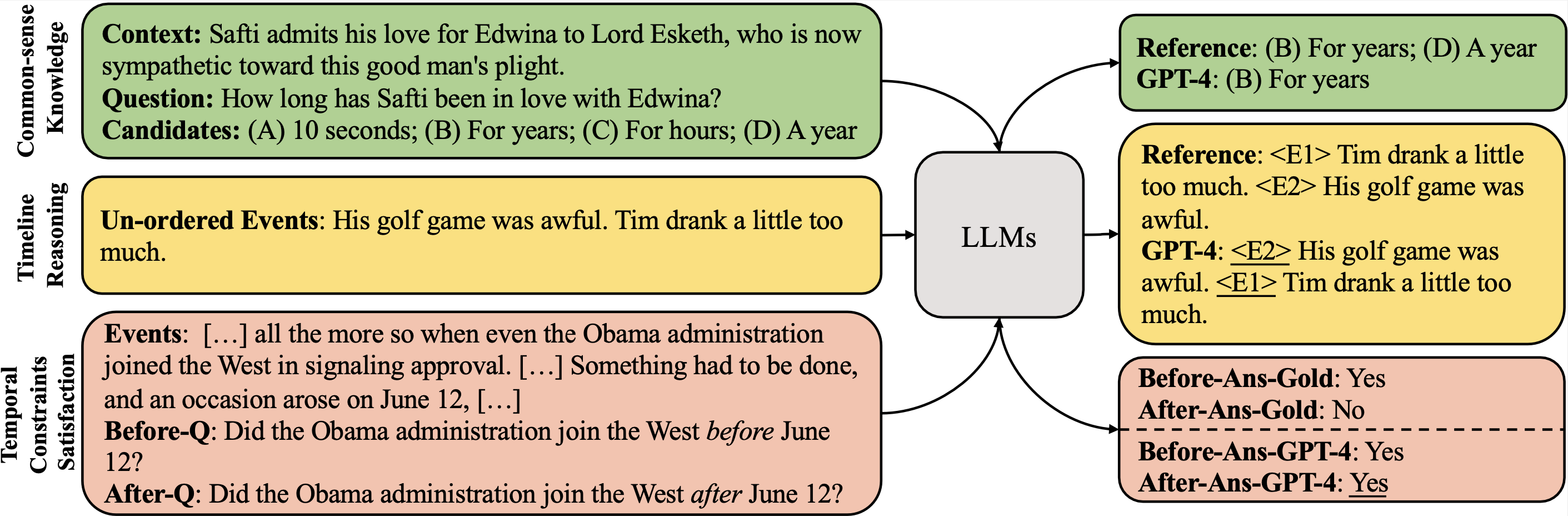

title={Are Large Language Models Temporally Grounded?},

author={Yifu Qiu and Zheng Zhao and Yftah Ziser and Anna Korhonen and Edoardo M. Ponti and Shay B. Cohen},

year={2023},

eprint={2311.08398},

archivePrefix={arXiv},

primaryClass={cs.CL}

}