This repository contains the code and data for the paper *Benchmarking Large Language Models for Optimization Modeling and Enhancing Reasoning via Reverse Socratic Synthesis*. In this work, we propose a new benchmark, E-OPT, for evaluating the performance of large language models (LLMs) on optimization modeling tasks. Furthermore, to alleviate the scarcity of data for optimization problems and to bridge the gap between small-scale open-source LLMs (e.g., Llama-2-7B and Llama-3-8B) and closed-source LLMs (e.g., GPT-4), we propose a novel data synthesis method named ReSocratic. Experimental results show that ReSocratic significantly improves performance on optimization problems.

- 🔥 [2024.6] We released the code of ReSocratic along with its corresponding synthetic data.

- 🔥 [2024.5] Building on the earlier competition track, we contributed E-OPT, a more challenging and diverse benchmark with a wider range of question types.

- 🔥 [2024.4] We launched the competition track on Automated Optimization Problem-Solving with Code at the AI for Math Workshop and Challenges, ICML 2024.

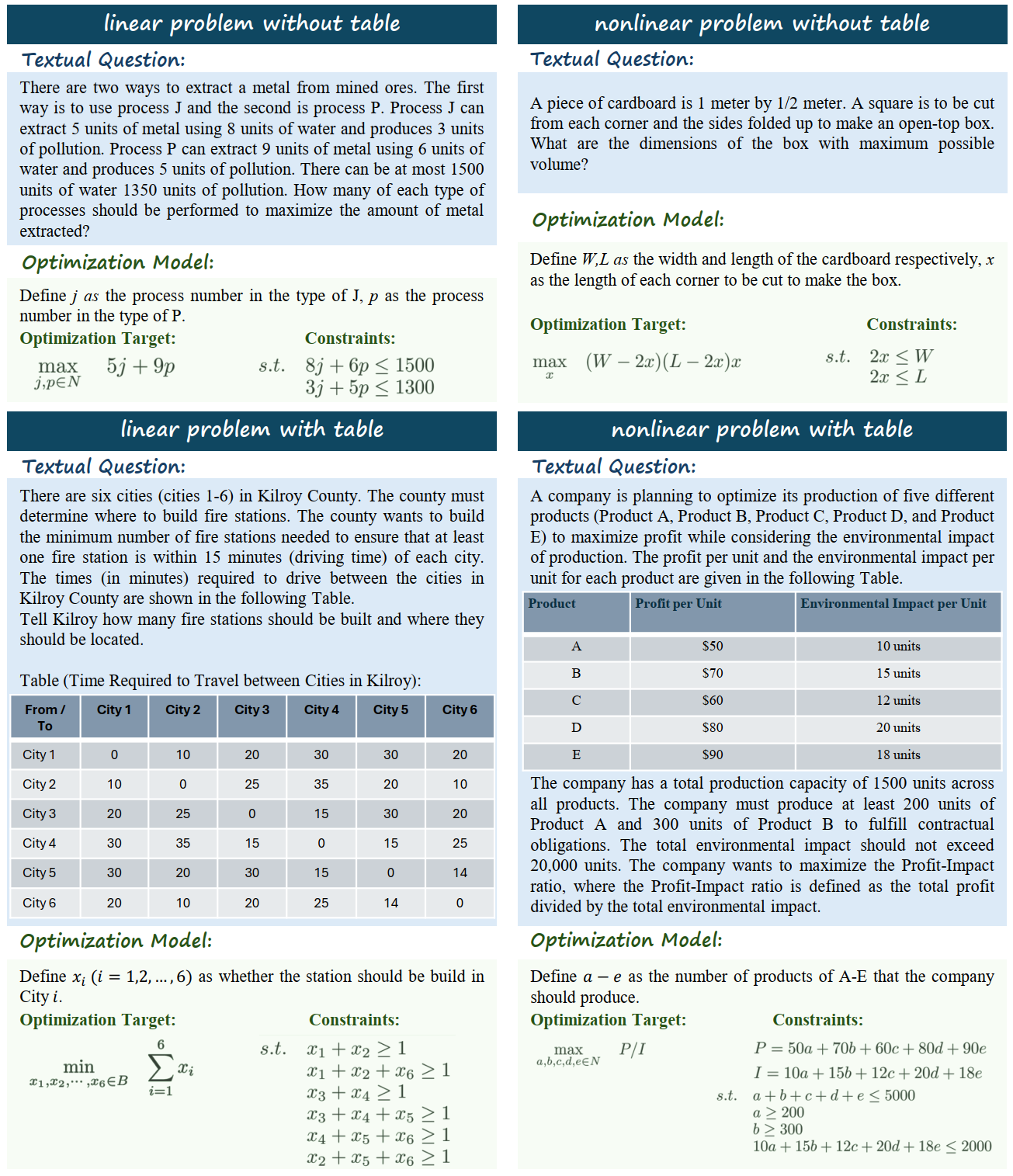

We propose E-OPT, a high-quality benchmark of complex optimization problems in multiple forms. To the best of our knowledge, it is the first large-scale benchmark that measures a model's end-to-end ability to solve optimization problems, including nonlinear problems and problems with tabular data. E-OPT covers linear programming (linear), nonlinear optimization (nonlinear), and problems whose data is given as tables, as in industrial use (table), making it a comprehensive and versatile benchmark for LLM optimization problem-solving.

An initial version of this benchmark serves as a competition track of the ICML 2024 AI4MATH Workshop.

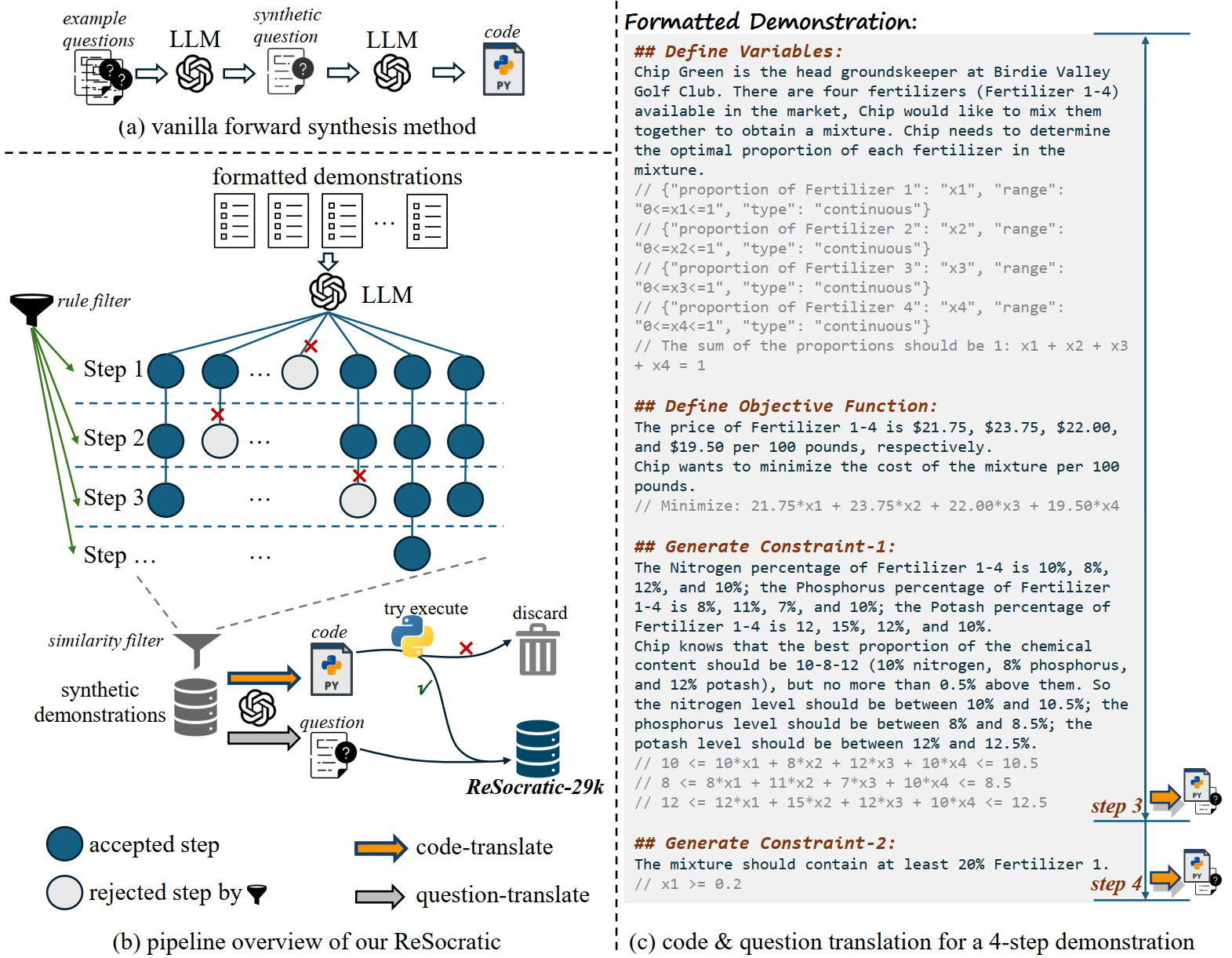

We introduce ReSocratic, a novel data synthesis method for eliciting diverse and reliable data. The main idea of ReSocratic is to synthesize an optimization problem step by step in reverse, starting from carefully designed scenarios and working back to questions.

(a) The forward data synthesis method synthesizes the question first and then lets the LLM generate the answer to the synthetic question. (b) In contrast, ReSocratic, the reverse data synthesis method we propose, first synthesizes carefully designed, formatted scenarios and then transforms them into code (answers) and questions. (c) Our scenarios are structured step by step, with each step containing a natural-language description together with the corresponding formalized mathematical content. Starting from the third step of the synthetic scenario, each subsequent step is transformed into a question-code pair.
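To make the step-to-pair expansion concrete, here is a minimal Python sketch. It assumes one reading of the caption: the pair produced at step k (k ≥ 3) is built from all scenario steps up to and including step k, so each new step extends the problem defined so far. The `Step` class, the `scenario_prefixes` and `render_prefix` helpers, and the bakery scenario are hypothetical illustrations, not code from this repository; the actual translation into questions and code is performed by the repository's synthesis scripts.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    """One scenario step: a natural-language description plus formal content."""
    description: str
    formal: str


def scenario_prefixes(steps: List[Step], start: int = 3) -> List[List[Step]]:
    """Return the step prefixes that would each become one question-code pair.

    Assumption: the pair for step k (1-indexed, k >= start) is built from
    steps 1..k, so every later step extends the problem defined so far.
    """
    return [steps[:k] for k in range(start, len(steps) + 1)]


def render_prefix(prefix: List[Step]) -> str:
    """Hypothetical stand-in for the text handed to the question/code translators."""
    return "\n".join(f"{s.description} :: {s.formal}" for s in prefix)


if __name__ == "__main__":
    # Hypothetical scenario: variables, objective, then one constraint per step.
    steps = [
        Step("A bakery makes x cakes and y pies.", "x, y >= 0, integer"),
        Step("Each cake earns $5 and each pie earns $4.", "maximize 5x + 4y"),
        Step("Cakes need 2 oven-hours, pies 1; 40 hours are available.", "2x + y <= 40"),
        Step("Each item uses 1 kg of flour; 30 kg are available.", "x + y <= 30"),
        Step("At least 5 pies must be made.", "y >= 5"),
    ]
    for prefix in scenario_prefixes(steps):
        print(f"--- pair from steps 1..{len(prefix)} ---")
        print(render_prefix(prefix))
```

Under this reading, a five-step scenario yields three question-code pairs (from steps 1..3, 1..4, and 1..5), each a slightly harder variant of the previous one.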

Using ReSocratic, we synthesize the ReSocratic-29k dataset, which contains 29k samples. The synthetic data is available in the `synthetic_data` folder.

We evaluate different LLMs on our E-OPT benchmark and report the results in the table below. Code Pass is the percentage of generated programs that execute successfully.

| Model | Linear w/ Table | Linear w/o Table | Nonlinear w/ Table | Nonlinear w/o Table | All | Code Pass |
|---|---|---|---|---|---|---|
| *Zero-shot Prompt* | | | | | | |
| Llama-3-8B-Instruct | 0.29% | 0.0% | 0.0% | 0.0% | 0.17% | 8.8% |
| GPT-3.5-Turbo | 37.5% | 68.1% | 16.0% | 19.5% | 49.1% | 85.0% |
| Llama-3-70B-Instruct | 50.0% | 76.9% | 32.0% | 30.8% | 59.5% | 86.8% |
| DeepSeek-V2 | 27.5% | 40.4% | 18.0% | 29.3% | 34.4% | 74.0% |
| GPT-4 | 62.5% | 75.4% | 32.0% | 42.1% | 62.8% | 88.8% |
| *Few-shot Prompt* | | | | | | |
| Llama-3-8B-Instruct | 2.5% | 17.8% | 8.0% | 11.3% | 13.6% | 26.9% |
| GPT-3.5-Turbo | 40.0% | 75.4% | 26.0% | 28.6% | 56.4% | 93.2% |
| Llama-3-70B-Instruct | 57.5% | 79.2% | 32.0% | 33.8% | 62.5% | 91.2% |
| DeepSeek-V2 | 56.3% | 79.5% | 32.0% | 27.1% | 61.0% | 85.5% |
| GPT-4 | 71.3% | 80.7% | 34.0% | 34.6% | 65.5% | 88.3% |
| *SFT with Synthetic Data* | | | | | | |
| Llama-2-7B-Chat | 11.3% | 40.6% | 32.0% | 15.8% | 30.6% | 93.7% |
| Llama-3-8B-Instruct | 32.5% | 63.5% | 44.0% | 33.0% | 51.1% | 96.3% |
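The table mixes two kinds of numbers: accuracy per problem category and the Code Pass rate. The All column is not the plain mean of the four category columns (for zero-shot GPT-4 that mean would be 53.0%, versus 62.8% reported), which is consistent with pooling all samples. The sketch below computes the metrics that way, under stated assumptions: the `Result` record, the category labels, and the tolerance-based answer check are hypothetical and are not taken from the repository's evaluation code.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Result:
    """Hypothetical record for one benchmark sample."""
    category: str               # e.g. "linear_table", "nonlinear_no_table"
    executed: bool              # did the generated code run without error?
    predicted: Optional[float]  # numeric answer printed by the code, if any
    reference: float            # ground-truth optimal value


def is_correct(r: Result, rel_tol: float = 1e-4) -> bool:
    # Assumption: solved = code ran and its answer matches within a tolerance.
    return (
        r.executed
        and r.predicted is not None
        and math.isclose(r.predicted, r.reference, rel_tol=rel_tol, abs_tol=1e-6)
    )


def summarize(results: List[Result]) -> Dict[str, float]:
    """Per-category accuracy, pooled accuracy ("all"), and code pass rate, in %."""
    buckets: Dict[str, List[Result]] = defaultdict(list)
    for r in results:
        buckets[r.category].append(r)
    summary = {
        cat: 100 * sum(is_correct(r) for r in rs) / len(rs)
        for cat, rs in buckets.items()
    }
    # Pooled over all samples, so larger categories weigh more.
    summary["all"] = 100 * sum(is_correct(r) for r in results) / len(results)
    summary["code_pass"] = 100 * sum(r.executed for r in results) / len(results)
    return summary
```

A program that runs but prints a wrong value counts toward Code Pass but not toward accuracy, which is why Code Pass is always at least as high as All.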

Clone our repository and install the required packages.

```bash
git-lfs clone https://github.com/yangzhch6/ReSocratic.git
cd ReSocratic
pip install -r requirements.txt
```

Eval GPT

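```bash
# --model_name: "gpt-4" or "gpt-3.5-turbo"
# --prompt_path: "prompt/solve/scip_zeroshot.txt" (zero-shot) or "prompt/solve/scip_fewshot.txt" (few-shot)
python gpt_baseline.py \
    --model_name "gpt-4" \
    --prompt_path "prompt/solve/scip_zeroshot.txt"
```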

Eval Llama

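```bash
# Use llama2_baseline.py for Llama-2 models or llama3_baseline.py for Llama-3 models.
# --prompt_path: "prompt/solve/scip_zeroshot.txt" or "prompt/solve/scip_fewshot.txt"
# --tensor_parallel_size should match the number of GPUs listed in CUDA_VISIBLE_DEVICES.
CUDA_VISIBLE_DEVICES=0,1,2,3 python llama3_baseline.py \
    --prompt_path "prompt/solve/scip_zeroshot.txt" \
    --model_name_or_path "model_path" \
    --output_path "results.json" \
    --tensor_parallel_size 4 \
    --batch_size 8
```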
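Both baselines produce programs that must be executed to obtain an answer, and the Code Pass column counts how many of them run. The following is a minimal sketch of such an execution step, assuming each generated solution is a standalone Python script that prints its result. The functions `run_generated_code` and `extract_last_number`, and the rule of taking the last printed number as the answer, are hypothetical and not the repository's implementation.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Optional, Tuple


def run_generated_code(code: str, timeout: float = 60.0) -> Tuple[bool, str]:
    """Execute one model-generated program in a fresh Python process.

    Returns (executed_ok, stdout). A non-zero exit status or a timeout
    counts as a failed execution.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "solution.py"
        script.write_text(code)
        try:
            proc = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                text=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return False, ""
        return proc.returncode == 0, proc.stdout


def extract_last_number(stdout: str) -> Optional[float]:
    """Hypothetical answer extraction: the last float-like token printed."""
    for token in reversed(stdout.split()):
        try:
            return float(token.rstrip(".,"))
        except ValueError:
            continue
    return None
```

Running each program in its own process with a timeout keeps a crashing or non-terminating solution from stopping the whole evaluation loop.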

Synthesize scenarios

```bash
# --pool_path: "prompt/synthesis/pool/linear.json" or "prompt/synthesis/pool/nonlinear.json"
python resocratic_synthesize.py \
    --pool_path "prompt/synthesis/pool/linear.json"
```
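The `--pool_path` option points to a pool of scenarios for linear or nonlinear problems. One plausible use of such a pool, sketched below purely as an assumption, is to sample a few existing scenarios as in-context demonstrations when prompting for a new one, and to add accepted new scenarios back to the pool. The JSON layout (a list of scenario strings) and the helpers `load_pool`, `build_synthesis_prompt`, and `add_to_pool` are hypothetical; `resocratic_synthesize.py` may work differently.

```python
import json
import random
from pathlib import Path
from typing import List, Optional


def load_pool(pool_path: str) -> List[str]:
    """Read a scenario pool. Assumption: the file is a JSON list of strings."""
    return json.loads(Path(pool_path).read_text(encoding="utf-8"))


def build_synthesis_prompt(pool: List[str], k: int = 3, seed: Optional[int] = None) -> str:
    """Sample up to k pool scenarios as demonstrations and leave a slot for a new one."""
    rng = random.Random(seed)
    demos = rng.sample(pool, k=min(k, len(pool)))
    parts = [f"Scenario {i + 1}:\n{demo}" for i, demo in enumerate(demos)]
    parts.append(f"Scenario {len(demos) + 1}:\n")  # the model continues from here
    return "\n\n".join(parts)


def add_to_pool(pool: List[str], new_scenario: str) -> None:
    """Hypothetical growth step: keep non-empty scenarios that are not exact duplicates."""
    if new_scenario.strip() and new_scenario not in pool:
        pool.append(new_scenario)
```

Sampling different demonstrations on each call is one simple way to push generations toward diversity; passing a fixed `seed` makes a prompt reproducible for debugging.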

Translate scenarios to questions

```bash
python synthesize_question.py \
    --data_path "synthetic scenarios path" \
    --output_path "output file path"
```

Translate scenarios to code

```bash
python synthesize_code.py \
    --data_path "synthetic scenarios path" \
    --output_path "output file path"
```
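The two translation scripts produce questions and code derived from the same scenarios. Before fine-tuning, they have to be paired into training examples; the sketch below shows one way to do that, assuming the two outputs are aligned by index. The helper names, the field names `instruction` and `output`, and the JSONL layout are hypothetical and may not match the files in `synthetic_data`.

```python
import json
from typing import Dict, Iterable, List


def make_sft_records(questions: List[str], codes: List[str]) -> List[Dict[str, str]]:
    """Pair each synthesized question with the code synthesized from the same
    scenario step. Assumption: both lists are aligned one-to-one by index."""
    if len(questions) != len(codes):
        raise ValueError("questions and codes must be aligned one-to-one")
    return [
        {"instruction": q.strip(), "output": c.strip()}
        for q, c in zip(questions, codes)
        if q.strip() and c.strip()  # drop pairs where either generation is empty
    ]


def to_jsonl(records: Iterable[Dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```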

SFT Llama-2-7B-Chat

```bash
bash scripts/train_llama2.sh
```

SFT Llama-3-8B-Instruct

```bash
bash scripts/train_llama3.sh
```
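A common convention in supervised fine-tuning, which we have not confirmed is what `scripts/train_llama2.sh` and `scripts/train_llama3.sh` do, is to compute the loss only on the answer (here, the code) and not on the question. The sketch below builds such labels from token IDs. `IGNORE_INDEX = -100` matches the default `ignore_index` of PyTorch's `CrossEntropyLoss`; `build_labels` is a hypothetical helper.

```python
from typing import List, Tuple

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def build_labels(
    prompt_ids: List[int], answer_ids: List[int], max_len: int = 2048
) -> Tuple[List[int], List[int]]:
    """Concatenate prompt and answer tokens; mask the prompt part in the labels.

    Positions labeled IGNORE_INDEX contribute nothing to the loss, so the model
    is trained only to produce the answer given the question.
    """
    input_ids = (prompt_ids + answer_ids)[:max_len]
    labels = ([IGNORE_INDEX] * len(prompt_ids) + answer_ids)[:max_len]
    return input_ids, labels
```

For example, `build_labels([101, 102, 103], [7, 8])` returns `([101, 102, 103, 7, 8], [-100, -100, -100, 7, 8])`.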