by Xiaofeng Yang, Cheng Chen, Xulei Yang, Fayao Liu, Guosheng Lin.

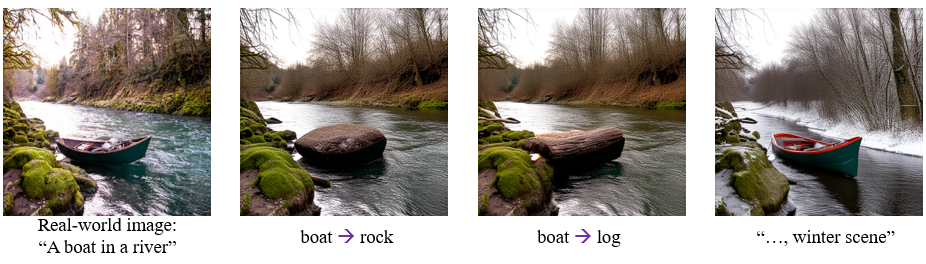

Large-scale diffusion models have achieved remarkable performance in generative tasks. Beyond the applications they were originally trained for, these models have proven able to function as versatile plug-and-play priors. For instance, 2D diffusion models can serve as loss functions to optimize 3D implicit models. Rectified flow, a novel class of generative models, enforces a linear progression from the source to the target distribution and has demonstrated superior performance across various domains. Compared to diffusion-based methods, rectified flow approaches offer better generation quality and efficiency, requiring fewer inference steps. In this work, we present theoretical and experimental evidence that rectified flow-based methods offer similar functionality to diffusion models: they can also serve as effective priors. Beyond the generative capabilities shared with diffusion priors, a variant of our method, motivated by the unique time-symmetry properties of rectified flow models, can additionally perform image inversion. Experimentally, our rectified flow-based priors outperform their diffusion counterparts, the SDS and VSD losses, in text-to-3D generation. Our method also displays competitive performance in image inversion and editing.
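To make the "linear progression" and "time-symmetry" ideas concrete, here is a minimal sketch of the straight-line path between one source sample and one target sample. It is not taken from this repository: the function names (`interpolate`, `target_velocity`, `euler_step`) are hypothetical, the time convention (t = 0 at the source, t = 1 at the target) is an assumption that may differ from the one used in the paper or in SD3, and the exact velocity is used where a real model would supply a learned approximation.

```python
import random


def interpolate(x0, x1, t):
    """Point at time t in [0, 1] on the straight line from x0 (source) to x1 (target)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def target_velocity(x0, x1):
    """Constant velocity of the straight path; the quantity a rectified flow model regresses."""
    return [b - a for a, b in zip(x0, x1)]


def euler_step(x, v, dt):
    """Move x along velocity v for a (possibly negative) time increment dt."""
    return [xi + dt * vi for xi, vi in zip(x, v)]


random.seed(0)
x0 = [random.gauss(0.0, 1.0) for _ in range(8)]  # source sample, e.g. Gaussian noise
x1 = [random.gauss(0.0, 1.0) for _ in range(8)]  # stand-in for a target (data) sample

t = 0.3
xt = interpolate(x0, x1, t)
v = target_velocity(x0, x1)

# Forward: with the exact velocity, one Euler step covers the rest of the path.
x1_from_xt = euler_step(xt, v, 1.0 - t)

# Backward: the same velocity, stepped in reverse, recovers the source sample.
x0_from_xt = euler_step(xt, v, -t)
```

Because the path is a straight line, the same velocity serves both directions, which is the intuition behind using a rectified flow model for inversion as well as generation. A trained model only approximates this velocity, so in practice neither direction is exact.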

- 2024/06/05: Code release.

- 2024/06/21: Add support for Stable Diffusion 3 (June, Medium version).

- Code release. The base text-to-image model is based on InstaFlow.

- Add support for Stable Diffusion 3 after the model is released.

- Stability AI will release "a much improved version" of SD3 soon (refer to here). We'll add support for the new version ASAP.

Our code is based on the ThreeStudio implementation. Please follow the instructions in ThreeStudio to install the dependencies.

To use SD3: please follow the instructions here to log in to Hugging Face and update diffusers. When you run our code, the models will be downloaded automatically.

Using Stable Diffusion 3 as the base rectified-flow model.

# run RFDS in 2D space for image generation

python 2dplayground_RFDS_sd3.py

# run RFDS-Rev in 2D space for image generation

python 2dplayground_RFDS_Rev_sd3.py

# run iRFDS in 2D space for image editing (requires 20 GB of GPU memory)

python 2dplayground_iRFDS_sd3.py

python launch.py --config configs/rfds_sd3.yaml --train --gpu 0 system.prompt_processor.prompt="A DSLR photo of a hamburger"

python launch.py --config configs/rfds-rev_sd3.yaml --train --gpu 0 system.prompt_processor.prompt="A DSLR photo of a hamburger"

python launch.py --config configs/rfds-rev_sd3_low_memory.yaml --train --gpu 0 system.prompt_processor.prompt="A DSLR photo of a hamburger"

Caption: A DSLR image of a hamburger

Example results for RFDS and RFDS-Rev. Prompts shown: "A DSLR image of a hamburger" and "A 3d model of an adorable cottage with a thatched roof".

- With SD3, the RFDS baseline already delivers great results. If your GPU memory is limited, we recommend using the RFDS baseline version.

- SD3 is not trained with reflow (see the InstaFlow paper for more on that), so we found image inversion with iRFDS a bit tougher on SD3. Additionally, the transformer backbone makes it difficult to replace objects with text control without using prompt-to-prompt.

Using InstaFlow as the base rectified-flow model (uses less GPU memory).

# run RFDS in 2D space for image generation

python 2dplayground_RFDS.py

# run RFDS-Rev in 2D space for image generation

python 2dplayground_RFDS_Rev.py

# run iRFDS in 2D space for image editing

python 2dplayground_iRFDS.py

python launch.py --config configs/rfds.yaml --train --gpu 0 system.prompt_processor.prompt="A DSLR photo of a hamburger"

python launch.py --config configs/rfds-rev.yaml --train --gpu 0 system.prompt_processor.prompt="A DSLR photo of a hamburger"
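When trying several prompts, it can be convenient to generate these `launch.py` invocations from a script. The sketch below only assembles the same arguments shown in the commands above; the helper name `build_launch_command` is hypothetical and is not part of this repository or of ThreeStudio.

```python
import shlex


def build_launch_command(config, prompt, gpu=0):
    """Return the argument list for one launch.py training run."""
    return [
        "python", "launch.py",
        "--config", config,
        "--train",
        "--gpu", str(gpu),
        f"system.prompt_processor.prompt={prompt}",
    ]


prompts = [
    "A DSLR photo of a hamburger",
    "An intricate ceramic vase with peonies painted on it",
]
commands = [build_launch_command("configs/rfds.yaml", p) for p in prompts]

for cmd in commands:
    # shlex.join quotes the prompt so the printed line can be pasted into a shell.
    print(shlex.join(cmd))
    # To launch directly from the repository root instead of printing:
    # import subprocess; subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids manual shell quoting of prompts that contain spaces. Swapping in `configs/rfds-rev.yaml` or one of the SD3 configs listed earlier works the same way.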

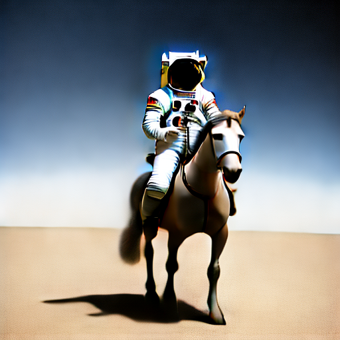

Caption: an astronaut is riding a horse

Example results for RFDS and RFDS-Rev. Prompts shown: "A DSLR image of a hamburger" and "An intricate ceramic vase with peonies painted on it".

RFDS is built on the following open-source projects:

- ThreeStudio: main framework

- InstaFlow: large-scale text-to-image rectified flow model

@article{yang2024rfds,
  title={Text-to-Image Rectified Flow as Plug-and-Play Priors},
  author={Xiaofeng Yang and Cheng Chen and Xulei Yang and Fayao Liu and Guosheng Lin},
  journal={arXiv preprint arXiv:2406.03293},
  year={2024}
}