Wang, Yi, Ying-Cong Chen, Xin Tao, and Jiaya Jia. "Vcnet: A robust approach to blind image inpainting." In European Conference on Computer Vision, pp. 752-768. Springer, Cham, 2020.

Unofficial implementation of VCNet.

Clone this repo:

git clone https://github.com/xyfJASON/VCNet-pytorch.git

cd VCNet-pytorch

Create and activate a conda environment:

conda create -n vcnet python=3.9

conda activate vcnet

Install dependencies:

pip install -r requirements.txt

The code uses a pretrained VGG16, which can be downloaded with:

wget https://download.pytorch.org/models/vgg16-397923af.pth -O ~/.cache/torch/hub/checkpoints/vgg16-397923af.pth

Following the paper, I use the CelebA-HQ, FFHQ, ImageNet and Places datasets for inpainting. All images are resized or cropped to 256x256. When training on FFHQ, CelebA-HQ and ImageNet serve as the noise sources. When training on ImageNet or Places, each serves as the noise source for the other.
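To illustrate what a "noise source" is: in the blind inpainting setup of the VCNet paper, a corrupted input is formed by blending a clean image with content from another image under a soft mask, I = O ⊙ (1 − M) + N ⊙ M. The minimal sketch below applies that formula to flat lists of pixel values in [0, 1]. The function name `blend` and the toy values are hypothetical and are not taken from this repo.

```python
def blend(image, noise, mask):
    """Blend per pixel: out = image * (1 - mask) + noise * mask.

    All three arguments are flat lists of floats in [0, 1] of equal length.
    A mask value of 1 means the pixel is fully replaced by the noise source.
    """
    if not (len(image) == len(noise) == len(mask)):
        raise ValueError("image, noise and mask must have the same length")
    return [o * (1.0 - m) + n * m for o, n, m in zip(image, noise, mask)]


# Toy example (hypothetical values): four pixels, soft mask in the third one.
clean = [0.2, 0.4, 0.6, 0.8]
noise = [1.0, 1.0, 0.0, 0.0]
mask = [0.0, 1.0, 0.5, 0.0]
corrupted = blend(clean, noise, mask)
```

Pixels with a mask value of 0 keep the clean content, pixels with a mask value of 1 take the noise content, and intermediate values (as produced by smoothing the mask) mix the two.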

I've trained and tested the model on two kinds of masks:

- Generated brushstroke-like masks + iterative Gaussian smoothing as proposed by GMCNN. This is the closest setup to the VCNet paper, though the brushstroke generation algorithm and its parameters may still differ.

- Irregular Mask Dataset provided by NVIDIA + direct Gaussian smoothing. The masks are evenly split by area ratio, which makes it possible to test the model's performance with respect to mask size.

Please see notes on masks for more information.
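As an illustration of the area-ratio split, the sketch below computes the masked fraction of a binary mask and looks up which half-open interval it falls into. The interval boundaries match the ratio ranges reported in the results tables; the function names and the toy mask are hypothetical, and this is not necessarily how the repo or the NVIDIA dataset assigns masks to bins.

```python
# Ratio intervals (lo, hi], as used in the quantitative results tables.
BINS = [(0.01, 0.1), (0.1, 0.2), (0.2, 0.3), (0.3, 0.4), (0.4, 0.5), (0.5, 0.6)]


def area_ratio(mask):
    """Fraction of masked pixels in a 2D binary mask (1 = masked, 0 = kept)."""
    total = sum(len(row) for row in mask)
    masked = sum(sum(row) for row in mask)
    return masked / total


def ratio_bin(ratio, bins=BINS):
    """Return the interval (lo, hi] that contains ratio, or None if none does."""
    for lo, hi in bins:
        if lo < ratio <= hi:
            return (lo, hi)
    return None


# Toy 4x4 mask with 5 masked pixels out of 16.
mask = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
ratio = area_ratio(mask)
interval = ratio_bin(ratio)
```

Here `ratio` is 5/16 = 0.3125, so the mask belongs to the (0.3, 0.4] group.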

The training procedure consists of two stages. First, MPN and RIN are trained separately; then, after both networks have converged, they are jointly optimized.

# First stage (separately train MPN and RIN)

accelerate-launch train_separate.py [-c CONFIG] [-e EXP_DIR] [--xxx.yyy zzz ...]

# Second stage (jointly train MPN and RIN)

accelerate-launch train_joint.py [-c CONFIG] [-e EXP_DIR] --train.pretrained PRETRAINED [--xxx.yyy zzz ...]

- This repo uses the 🤗 Accelerate library for multi-GPU and fp16 support. Please read its documentation on how to launch the scripts on different platforms.

- Results (logs, checkpoints, tensorboard, etc.) of each run will be saved to `EXP_DIR`. If `EXP_DIR` is not specified, they will be saved to `runs/exp-{current time}/`.

- To modify some configuration items without creating a new configuration file, you can pass `--key value` pairs to the script. For example, as shown above, when training in the second stage, you can load the model pretrained in the first stage with `--train.pretrained /path/to/pretrained/model.pt`.
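To make the dotted `--key value` convention concrete, here is a minimal sketch of how such overrides could be merged into a nested configuration dictionary. It is an illustration only, not the repo's actual parsing code: the helper `apply_overrides` and the `batch_size` entry are hypothetical.

```python
def apply_overrides(config, overrides):
    """Set nested entries in-place from dotted keys such as 'train.pretrained'.

    Missing intermediate sections are created as empty dicts.
    """
    for dotted_key, value in overrides.items():
        *parents, leaf = dotted_key.split(".")
        node = config
        for name in parents:
            node = node.setdefault(name, {})
        node[leaf] = value
    return config


# Hypothetical config, as it might look after loading a YAML file.
config = {"train": {"batch_size": 4, "pretrained": None}}

# Equivalent of passing: --train.pretrained /path/to/pretrained/model.pt
apply_overrides(config, {"train.pretrained": "/path/to/pretrained/model.pt"})
```

Only the addressed entry changes; other items in the same section keep the values from the configuration file.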

For example, to train the model on FFHQ:

# First stage

accelerate-launch train_separate.py -c ./configs/separate_ffhq_brush_realnoise.yaml

# Second stage

accelerate-launch train_joint.py -c ./configs/joint_ffhq_brush_realnoise.yaml --train.pretrained ./runs/exp-xxxx/ckpt/step079999/model.pt

To evaluate a trained model:

accelerate-launch evaluate.py -c CONFIG \
    --model_path MODEL_PATH \
    [--n_eval N_EVAL] \
    [--micro_batch MICRO_BATCH]

- This repo uses the 🤗 Accelerate library for multi-GPU and fp16 support. Please read its documentation on how to launch the scripts on different platforms.

- You can adjust the batch size on each device with `--micro_batch MICRO_BATCH`. The evaluation speed depends on your system, and a larger batch size doesn't necessarily result in faster evaluation.

- The metrics include BCE, PSNR, SSIM and LPIPS.
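For reference, PSNR is derived from the mean squared error between the restored image and the ground truth: PSNR = 10 · log10(MAX² / MSE). The sketch below computes it for flat lists of pixel values, assuming a data range of [0, 1]. It is a plain-Python illustration; the repo's evaluation script may rely on a library implementation and a different data range.

```python
import math


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and of equal length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: every pixel is off by 0.1, so MSE = 0.01 and PSNR is about 20 dB.
score = psnr([0.5, 0.5], [0.4, 0.6])
```

Higher PSNR and SSIM are better, while lower BCE and LPIPS are better.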

To sample from a trained model:

accelerate-launch sample.py -c CONFIG \
    --model_path MODEL_PATH \
    --n_samples N_SAMPLES \
    --save_dir SAVE_DIR \
    [--micro_batch MICRO_BATCH]

- This repo uses the 🤗 Accelerate library for multi-GPU and fp16 support. Please read its documentation on how to launch the scripts on different platforms.

- You can adjust the batch size on each device with `--micro_batch MICRO_BATCH`. The sampling speed depends on your system, and a larger batch size doesn't necessarily result in faster sampling.

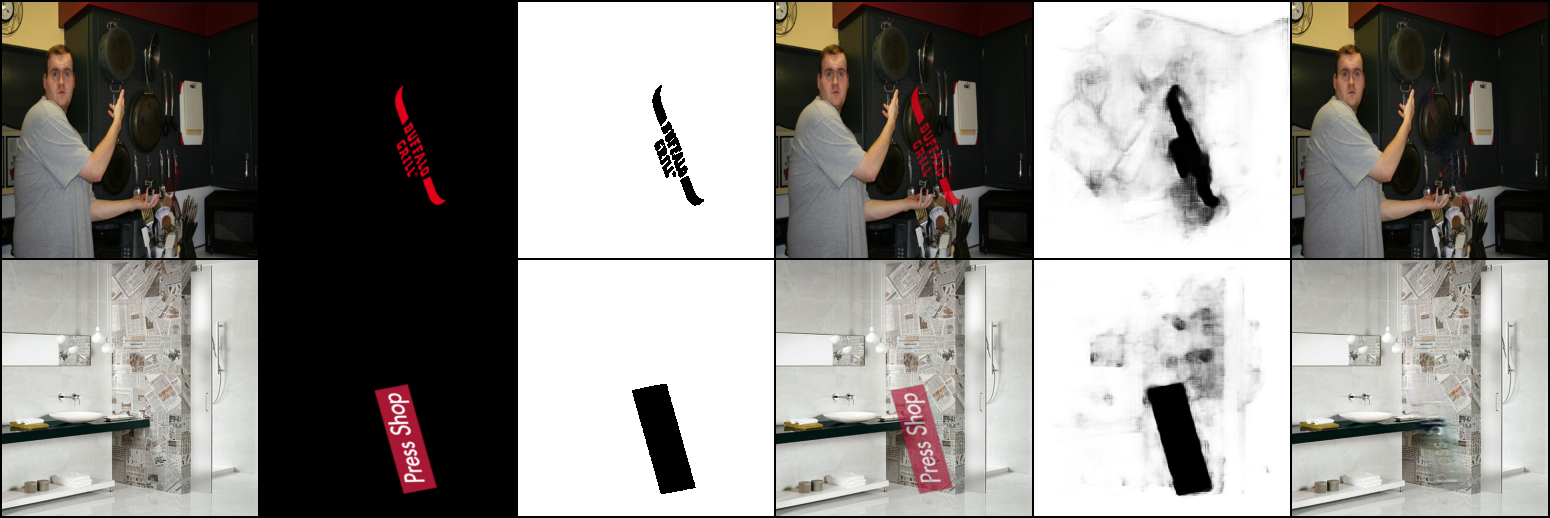

The pretrained model can be adapted to downstream image restoration tasks as long as the corrupted images, ground-truth images and ground-truth masks are provided in the training set. Specifically, this code supports finetuning and testing on the LOGO dataset (watermark removal) and the ISTD dataset (shadow removal).

All metrics are evaluated on 10K images.

Quantitative results:

| Mask type and ratio | BCE | PSNR | SSIM | LPIPS |
|---|---|---|---|---|
| irregular (0.01, 0.1] | 0.1172 | 31.4808 | 0.9488 | 0.0286 |
| irregular (0.1, 0.2] | 0.1436 | 27.8591 | 0.9119 | 0.0545 |
| irregular (0.2, 0.3] | 0.1385 | 25.3436 | 0.8671 | 0.0856 |
| irregular (0.3, 0.4] | 0.1282 | 23.2898 | 0.8189 | 0.1199 |
| irregular (0.4, 0.5] | 0.1276 | 21.6085 | 0.7659 | 0.1589 |
| irregular (0.5, 0.6] | 0.1270 | 18.9777 | 0.6808 | 0.2279 |
| brushstroke | 0.0793 | 23.6723 | 0.8139 | 0.1302 |

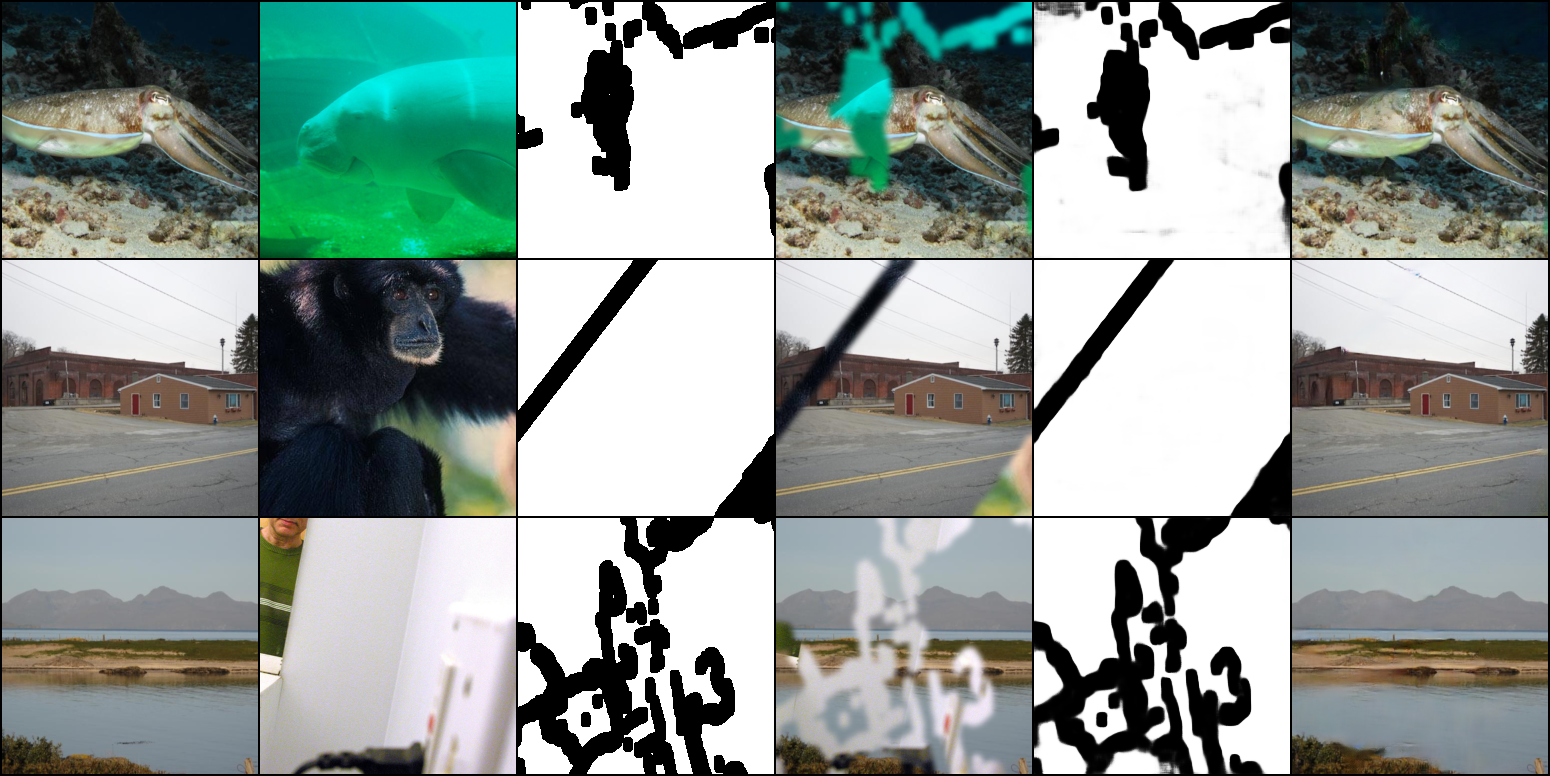

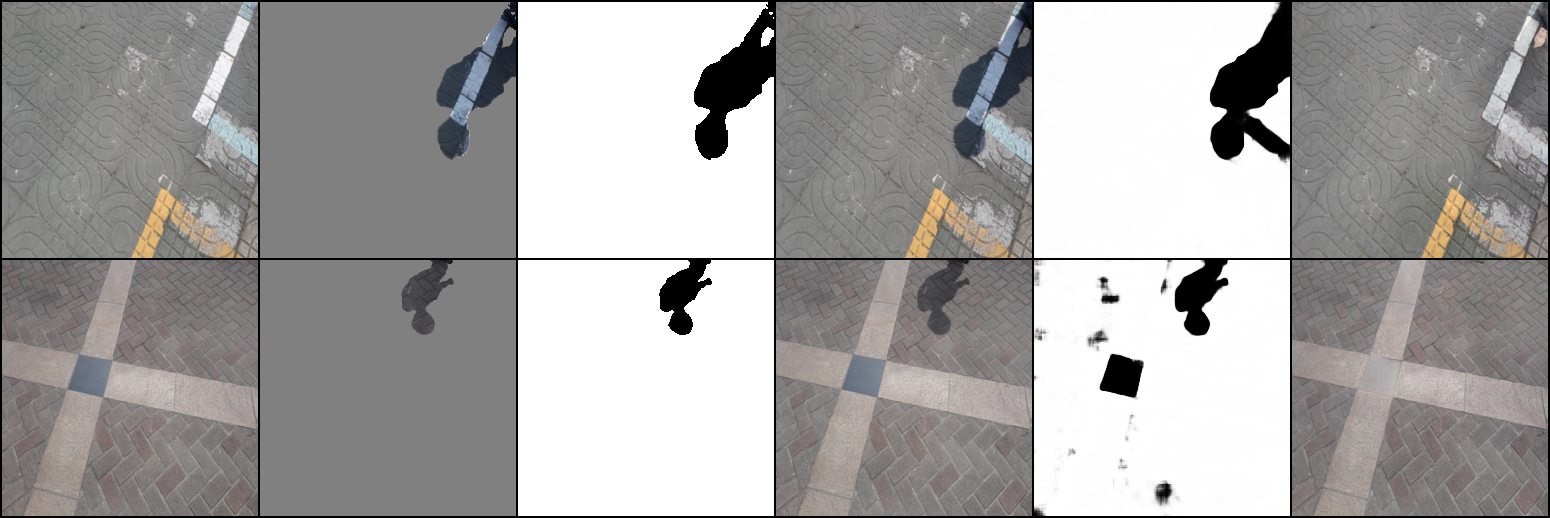

Selected samples:

Quantitative results:

| Mask type and ratio | BCE | PSNR | SSIM | LPIPS |
|---|---|---|---|---|
| irregular (0.01, 0.1] | 0.0759 | 31.7148 | 0.9615 | 0.0291 |
| irregular (0.1, 0.2] | 0.1000 | 27.2678 | 0.9181 | 0.0658 |
| irregular (0.2, 0.3] | 0.0984 | 24.3350 | 0.8626 | 0.1119 |
| irregular (0.3, 0.4] | 0.0875 | 22.2100 | 0.8028 | 0.1602 |
| irregular (0.4, 0.5] | 0.0828 | 20.5598 | 0.7396 | 0.2104 |
| irregular (0.5, 0.6] | 0.0655 | 18.0231 | 0.6402 | 0.2918 |
| brushstroke | 0.0550 | 22.6913 | 0.7880 | 0.1687 |

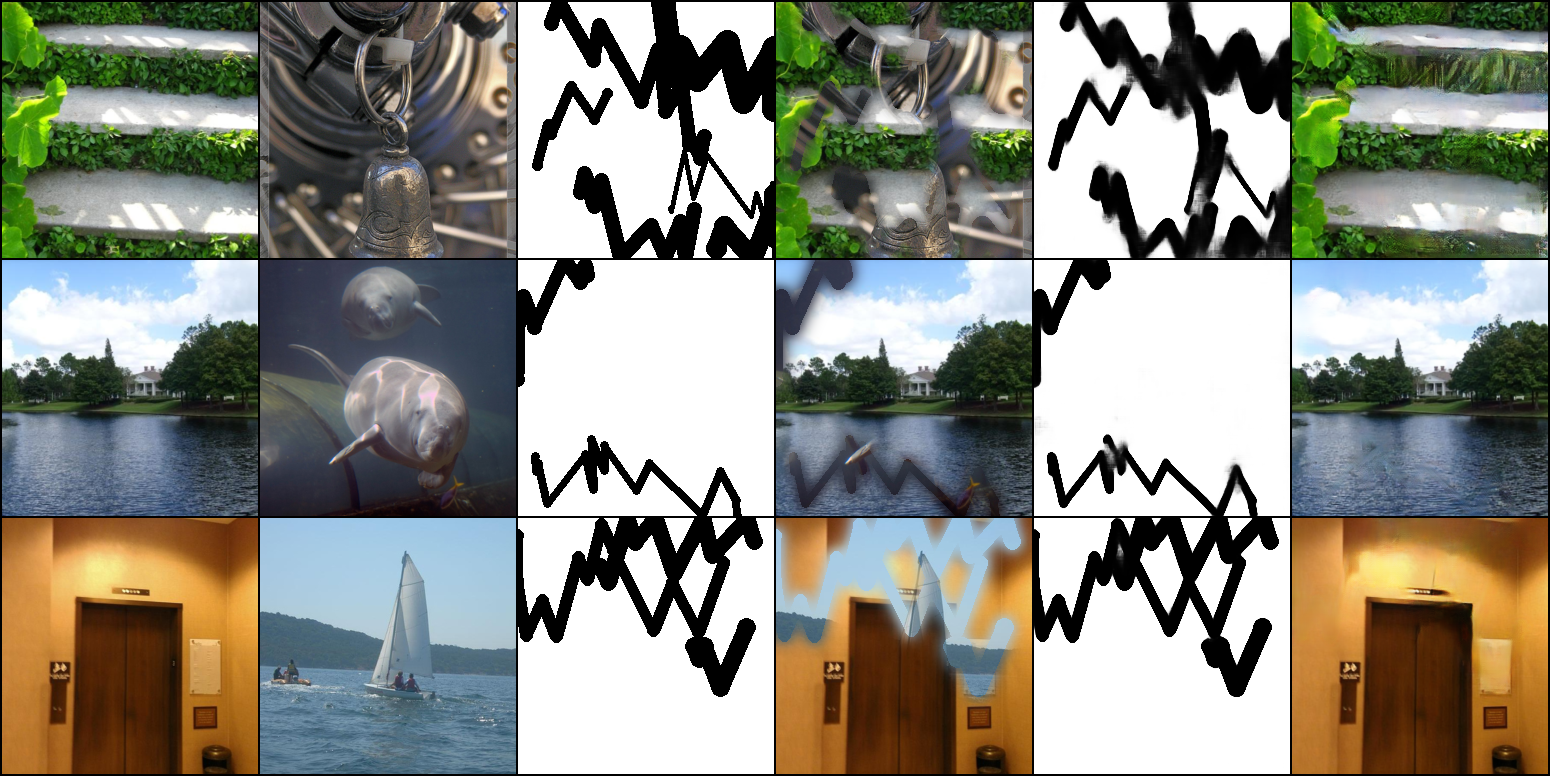

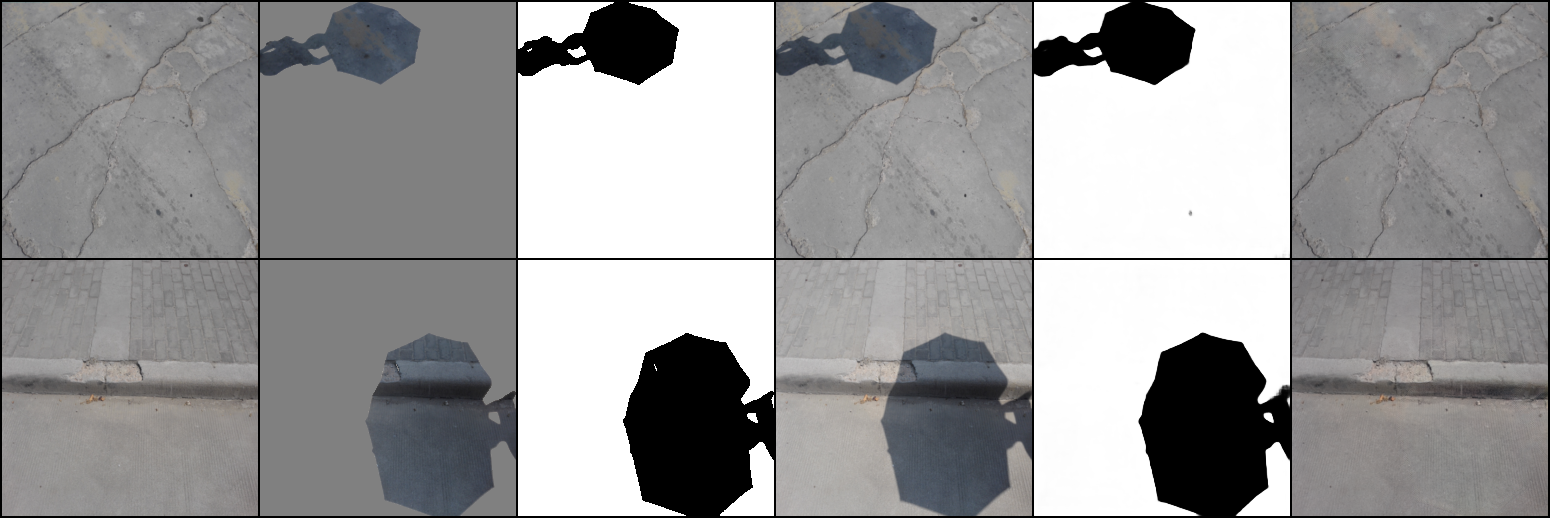

Selected samples:

Quantitative results:

| Mask type and ratio | BCE | PSNR | SSIM | LPIPS |
|---|---|---|---|---|
| irregular (0.01, 0.1] | 0.0813 | 31.1359 | 0.9497 | 0.0306 |
| irregular (0.1, 0.2] | 0.1021 | 27.1368 | 0.9063 | 0.0673 |
| irregular (0.2, 0.3] | 0.0982 | 24.3580 | 0.8510 | 0.1145 |
| irregular (0.3, 0.4] | 0.0853 | 22.3020 | 0.7922 | 0.1644 |
| irregular (0.4, 0.5] | 0.0789 | 20.6609 | 0.7292 | 0.2182 |
| irregular (0.5, 0.6] | 0.0579 | 18.1530 | 0.6329 | 0.3091 |
| brushstroke | 0.0528 | 22.8164 | 0.7815 | 0.1717 |

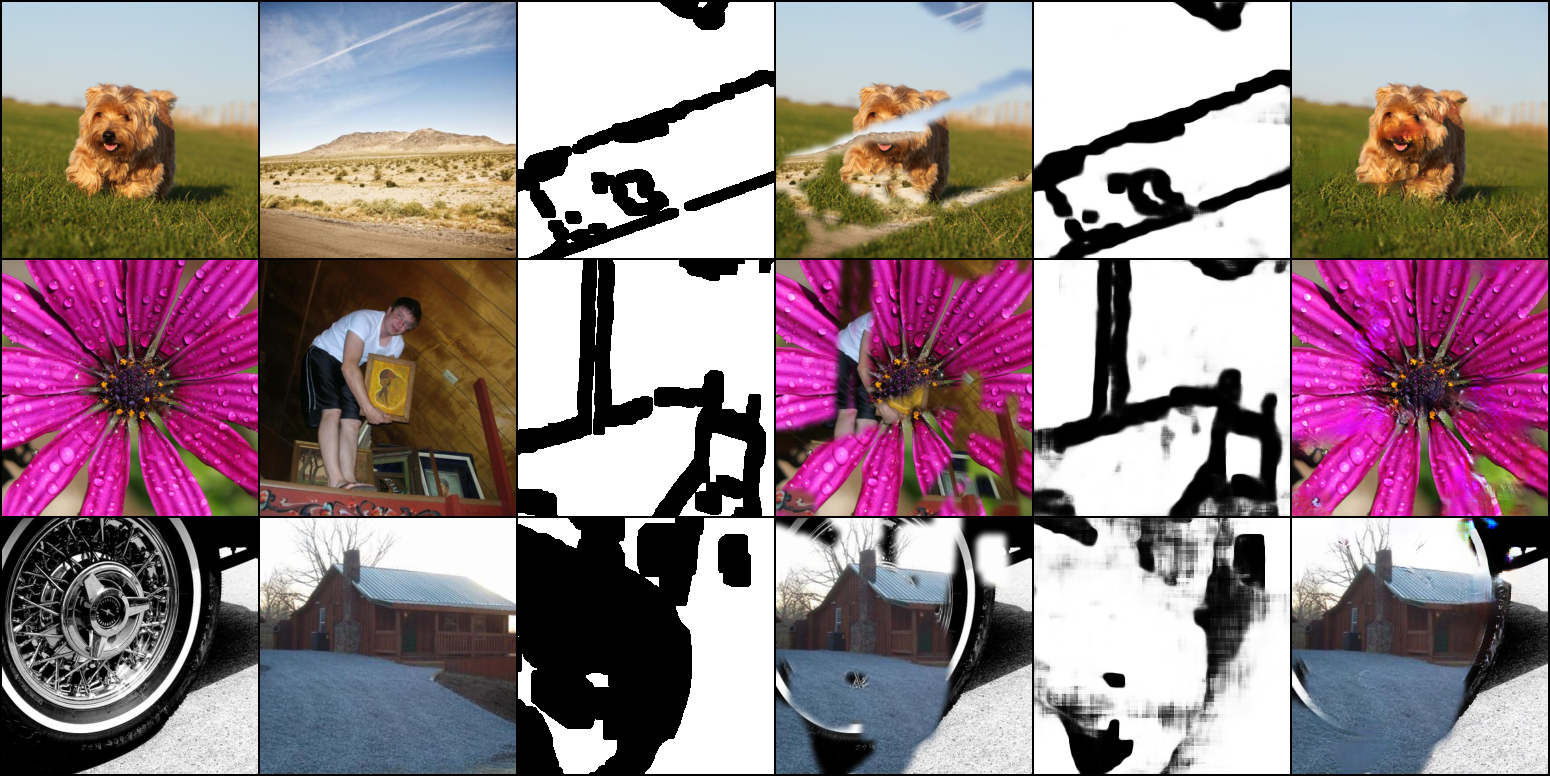

Selected samples: