Pathak, Deepak, Philipp Krahenbuhl, Jeff Donahue, Trevor Darrell, and Alexei A. Efros. "Context encoders: Feature learning by inpainting." In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2536-2544. 2016.

Unofficial implementation of Context-Encoder.

Clone this repo:

git clone https://github.com/xyfJASON/Context-Encoder-pytorch.git

cd Context-Encoder-pytorch

Create and activate a conda environment:

conda create -n context-encoder python=3.9

conda activate context-encoder

Install dependencies:

pip install -r requirements.txt

Training:

accelerate-launch train.py [-c CONFIG] [-e EXP_DIR] [--xxx.yyy zzz ...]

- This repo uses the 🤗 Accelerate library for multi-GPU and fp16 support. Please read its documentation on how to launch the scripts on different platforms.
- Results (logs, checkpoints, tensorboard, etc.) of each run are saved to `EXP_DIR`. If `EXP_DIR` is not specified, they are saved to `runs/exp-{current time}/`.
- To modify some configuration items without creating a new configuration file, pass `--key value` pairs to the script.
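As an illustration of the `--key value` mechanism, here is a minimal sketch of how dotted keys such as `--train.coef_adv` could be merged into a nested configuration. It is not taken from this repo's code: the helper names `parse_value` and `apply_overrides` are hypothetical, and the values in `base` are placeholders.

```python
import ast
from copy import deepcopy


def parse_value(text):
    """Interpret a CLI string as a Python literal when possible (e.g. '0.0' -> 0.0)."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError):
        return text  # keep plain strings such as file paths unchanged


def apply_overrides(config, argv):
    """Return a copy of `config` with `--a.b value` pairs from `argv` merged in.

    Hypothetical helper for illustration; the repo may handle overrides differently.
    """
    if len(argv) % 2 != 0:
        raise ValueError("overrides must come in '--key value' pairs")
    merged = deepcopy(config)
    for flag, raw in zip(argv[0::2], argv[1::2]):
        if not flag.startswith("--"):
            raise ValueError(f"expected a '--key' flag, got {flag!r}")
        *parents, leaf = flag[2:].split(".")
        node = merged
        for name in parents:
            # Walk (or create) the nested dictionaries named by the dotted key.
            node = node.setdefault(name, {})
        node[leaf] = parse_value(raw)
    return merged


# Placeholder values, not the repo's defaults.
base = {"train": {"coef_adv": 0.001, "batch_size": 64}}
cfg = apply_overrides(base, ["--train.coef_adv", "0.0"])
print(cfg["train"]["coef_adv"])
```

The original dictionary is left untouched, so the same base configuration can be reused across several ablation runs.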

For example, to train the model on CelebA-HQ:

accelerate-launch train.py -c ./configs/celebahq.yaml

To run an ablation study on the effect of the adversarial loss:

accelerate-launch train.py -c ./configs/celebahq.yaml --train.coef_adv 0.0

Evaluation:

accelerate-launch evaluate.py -c CONFIG \
    --model_path MODEL_PATH \
    [--n_eval N_EVAL] \
    [--micro_batch MICRO_BATCH]

- This repo uses the 🤗 Accelerate library for multi-GPU and fp16 support. Please read its documentation on how to launch the scripts on different platforms.
- You can adjust the batch size on each device with `--micro_batch MICRO_BATCH`.
- The metrics include L1 Error, PSNR and SSIM, all of which are evaluated only in the 64x64 central area.
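To make "evaluated only in the 64x64 central area" concrete, the sketch below computes L1 error and PSNR on the central crop of two images. It is a simplified illustration, not this repo's evaluation code: it assumes single-channel 128x128 images stored as nested lists with values in [0, 1], the function names are hypothetical, and SSIM is omitted for brevity.

```python
import math


def center_crop(img, size=64):
    """Return the central size x size window of a 2-D list of pixel values."""
    height, width = len(img), len(img[0])
    top, left = (height - size) // 2, (width - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


def l1_and_psnr(pred, target, size=64, max_val=1.0):
    """Mean absolute error and PSNR (dB), restricted to the central crop.

    Assumes pixel values lie in [0, max_val]; this range is an assumption.
    """
    p, t = center_crop(pred, size), center_crop(target, size)
    diffs = [a - b for row_p, row_t in zip(p, t) for a, b in zip(row_p, row_t)]
    l1 = sum(abs(d) for d in diffs) / len(diffs)
    mse = sum(d * d for d in diffs) / len(diffs)
    psnr = float("inf") if mse == 0 else 10 * math.log10(max_val ** 2 / mse)
    return l1, psnr


# Toy 128x128 images: a constant offset of about 0.1 everywhere.
target = [[0.5] * 128 for _ in range(128)]
pred = [[0.6] * 128 for _ in range(128)]
l1, psnr = l1_and_psnr(pred, target)

# Errors outside the central 64x64 window do not affect the metrics.
pred[0][0] = 1.0
l1_border, psnr_border = l1_and_psnr(pred, target)
```

Restricting the metrics to the masked region keeps the unmasked context, which the model sees as input, from inflating the scores.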

Sampling:

accelerate-launch sample.py -c CONFIG \
    --model_path MODEL_PATH \
    --n_samples N_SAMPLES \
    --save_dir SAVE_DIR \
    [--micro_batch MICRO_BATCH]

- This repo uses the 🤗 Accelerate library for multi-GPU and fp16 support. Please read its documentation on how to launch the scripts on different platforms.
- You can adjust the batch size on each device with `--micro_batch MICRO_BATCH`. The sampling speed depends on your system, and a larger batch size doesn't necessarily result in faster sampling.
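One way to picture how `N_SAMPLES` and `MICRO_BATCH` interact is the sketch below, which splits the requested samples across processes and then into per-device batches. The even split across processes and the function names are assumptions made for illustration; the repo's actual sharding logic may differ.

```python
def split_evenly(total, parts):
    """Split `total` items into `parts` shares that differ by at most one."""
    base, extra = divmod(total, parts)
    return [base + (1 if i < extra else 0) for i in range(parts)]


def micro_batch_sizes(n_items, micro_batch):
    """Batch sizes one process runs: full micro-batches, then any remainder."""
    if micro_batch <= 0:
        raise ValueError("micro_batch must be positive")
    full, rest = divmod(n_items, micro_batch)
    return [micro_batch] * full + ([rest] if rest else [])


def plan(n_samples, num_processes, micro_batch):
    """Per-process list of batch sizes (hypothetical scheduling scheme)."""
    return [micro_batch_sizes(share, micro_batch)
            for share in split_evenly(n_samples, num_processes)]


# 100 samples on 2 processes with a micro-batch of 32:
# each process handles 50 samples as one batch of 32 and one of 18.
schedule = plan(n_samples=100, num_processes=2, micro_batch=32)
print(schedule)
```

A larger micro-batch reduces the number of forward passes per process but increases memory use per pass, which is one reason throughput does not always improve with batch size.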

Quantitative results:

| Loss | L1 Error | PSNR | SSIM |
|---|---|---|---|
| L2 + Adv | 0.0720 | 20.3027 | 0.5915 |
| L2 only | 0.0694 | 20.5573 | 0.6153 |

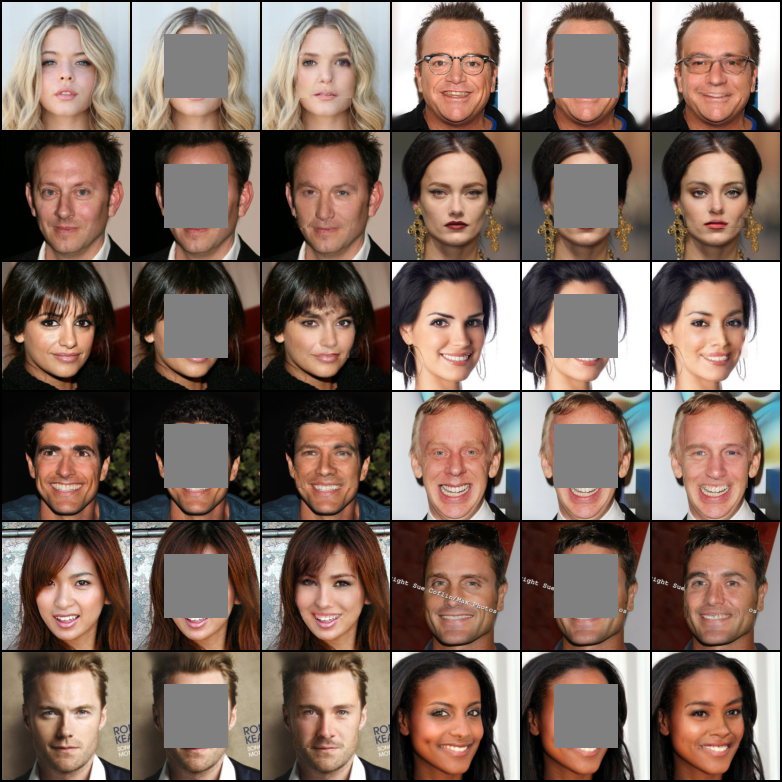

Selected samples:

| L2 + Adv | L2 only |
|---|---|
| | |