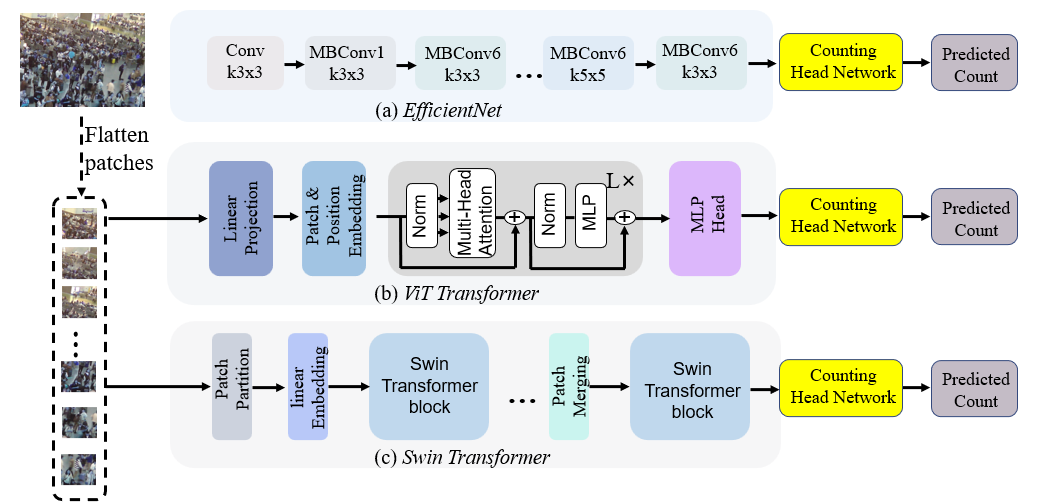


Weakly-supervised crowd counting with token attention and fusion: A Simple and Effective Baseline (ICASSP 2024)


An official implementation of WSCC_TAF: weakly-supervised crowd counting with token attention and fusion. Our work presents a simple and effective crowd counting method that uses only image-level count annotations, i.e., the number of people in an image (weak supervision). We first investigate the transfer learning capacity of three backbone networks in the weakly supervised crowd counting problem. We then propose an effective network composed of a Transformer backbone and a token channel attention module (T-CAM) in the counting head, where attention over the channels of tokens compensates for the self-attention between tokens in the Transformer. Finally, a simple token fusion is proposed to obtain global information.
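To make the counting-head idea concrete, here is a minimal, framework-free sketch. It is not the code in this repository: it assumes that channel attention takes a squeeze-and-excitation-like form (a per-channel gate computed from the token average), that token fusion is a plain mean over tokens, and that training uses an L1 loss against the image-level count. All function names are hypothetical, and a real implementation would use PyTorch tensors with learned layers.

```python
import math

def channel_gate(tokens):
    """Rescale each channel of every token by a gate in (0, 1).

    tokens: list of N token vectors, each a list of C floats.
    Assumption: the gate is a sigmoid of the per-channel token mean;
    a learned module would insert trainable layers before the sigmoid.
    """
    n, c = len(tokens), len(tokens[0])
    # Squeeze: average each channel across all tokens.
    channel_mean = [sum(t[j] for t in tokens) / n for j in range(c)]
    # Excite: map each channel descriptor to a gate value.
    gate = [1.0 / (1.0 + math.exp(-m)) for m in channel_mean]
    return [[t[j] * gate[j] for j in range(c)] for t in tokens]

def fuse_tokens(tokens):
    """Fuse all tokens into one global vector (assumed: mean over tokens)."""
    n, c = len(tokens), len(tokens[0])
    return [sum(t[j] for t in tokens) / n for j in range(c)]

def predict_count(tokens, weights, bias=0.0):
    """Regress a single scalar count from gated, fused tokens."""
    fused = fuse_tokens(channel_gate(tokens))
    return sum(w * f for w, f in zip(weights, fused)) + bias

def count_loss(pred_count, gt_count):
    """Weak supervision: only the image-level count is compared (L1)."""
    return abs(pred_count - gt_count)

# Toy usage: 3 tokens with 2 channels each, hypothetical regression weights.
toy_tokens = [[1.0, -0.5], [0.5, 0.0], [1.5, 0.5]]
pred = predict_count(toy_tokens, weights=[2.0, 1.0])
print(pred, count_loss(pred, gt_count=2))
```

The point of the sketch is the data flow: the supervision signal touches only the final scalar, so no point or density-map annotations are needed.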


Paper Link

| Backbone | MAE | MSE |
|---|---|---|
| EfficientNet-B7 | 76.4 | 115.0 |
| ViT-B-384 | 72.6 | 123.4 |
| Swin_B-384 | 67.0 | 108.5 |
| Mamba | 71.7 | 122.8 |
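For readers unfamiliar with the two columns, the sketch below shows how such metrics are typically computed from per-image predicted and ground-truth counts. In crowd counting papers the column labeled MSE usually reports the square root of the mean squared error; that this table follows the same convention is an assumption, and the function names are illustrative rather than taken from this repository.

```python
import math

def mae(pred_counts, gt_counts):
    """Mean absolute error between predicted and true counts."""
    errors = [abs(p - g) for p, g in zip(pred_counts, gt_counts)]
    return sum(errors) / len(errors)

def root_mse(pred_counts, gt_counts):
    """Root of the mean squared error (often labeled 'MSE' in this field)."""
    squared = [(p - g) ** 2 for p, g in zip(pred_counts, gt_counts)]
    return math.sqrt(sum(squared) / len(squared))

# Toy usage with made-up counts for three test images.
preds = [98.0, 210.0, 55.0]
gts = [100, 200, 50]
print(mae(preds, gts), root_mse(preds, gts))
```

Because the squared term penalizes large misses more heavily, a backbone can rank better on MAE and worse on MSE, as ViT-B-384 and EfficientNet-B7 do in the table.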

- Code refers to here

python >=3.6

pytorch >=1.5

opencv-python >=4.0

scipy >=1.4.0

h5py >=2.10

pillow >=7.0.0

imageio >=1.18

timm==0.1.30

- Download the ShanghaiTech dataset from Baidu-Disk, password: cjnx; or Google-Drive

- Download the UCF-QNRF dataset from here

- Download the JHU-CROWD++ dataset from here

- Download the NWPU-CROWD dataset from Baidu-Disk, password: 3awa; or Google-Drive

cd data

run python predataset_xx.py

Here “xx” is the dataset name: sh, jhu, qnrf, or nwpu. Change the dataset path to point to your local copy.

Generate image file list:

run python make_npydata.py

Training example:

python train.py --dataset ShanghaiA --save_path ./save_file/ShanghaiA --batch_size 24 --model_type 'token'

python train.py --dataset ShanghaiA --save_path ./save_file/ShanghaiA --batch_size 24 --model_type 'gap'

python train.py --dataset ShanghaiA --save_path ./save_file/ShanghaiA --batch_size 24 --model_type 'swin'

python train.py --dataset ShanghaiA --save_path ./save_file/ShanghaiA --batch_size 24 --model_type 'mamba'

Please use a single GPU with 24 GB of memory, or multiple GPUs, for training. Alternatively, you can reduce the batch size.
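The training commands above pass four options. As a rough illustration of how such a command line can be parsed, here is a small `argparse` sketch. It is hypothetical and not the repository's actual `train.py`: the defaults and the restriction of `--model_type` to the four values shown above are assumptions made for the example.

```python
import argparse

def build_parser():
    """Build a parser for the four options used in the training examples."""
    parser = argparse.ArgumentParser(description="Hypothetical training CLI sketch")
    parser.add_argument("--dataset", type=str, default="ShanghaiA",
                        help="dataset name")
    parser.add_argument("--save_path", type=str, default="./save_file/ShanghaiA",
                        help="directory for checkpoints and logs")
    parser.add_argument("--batch_size", type=int, default=24,
                        help="reduce this if GPU memory is limited")
    parser.add_argument("--model_type", type=str, default="token",
                        choices=["token", "gap", "swin", "mamba"],
                        help="which model variant to train")
    return parser

# Parse an explicit argument list instead of sys.argv so the sketch runs anywhere.
args = build_parser().parse_args(
    ["--dataset", "ShanghaiA", "--batch_size", "24", "--model_type", "swin"]
)
print(args.dataset, args.batch_size, args.model_type)
```

With a parser like this, every option needs its leading `--`; a bare `batch_size 24` would be rejected as unrecognized arguments.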

Test example:

Download the pretrained model from Baidu-Disk, password: 8a8n

python test.py --dataset ShanghaiA --pre model_best.pth --model_type 'gap'

...

If you find this project useful for your research, please cite:

@inproceedings{wang2024weakly,
  title={Weakly-Supervised Crowd Counting with Token Attention and Fusion: A Simple and Effective Baseline},
  author={Wang, Yi and Hu, Qiongyang and Chau, Lap-Pui},
  booktitle={ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  pages={13456--13460},
  year={2024},
  organization={IEEE}
}