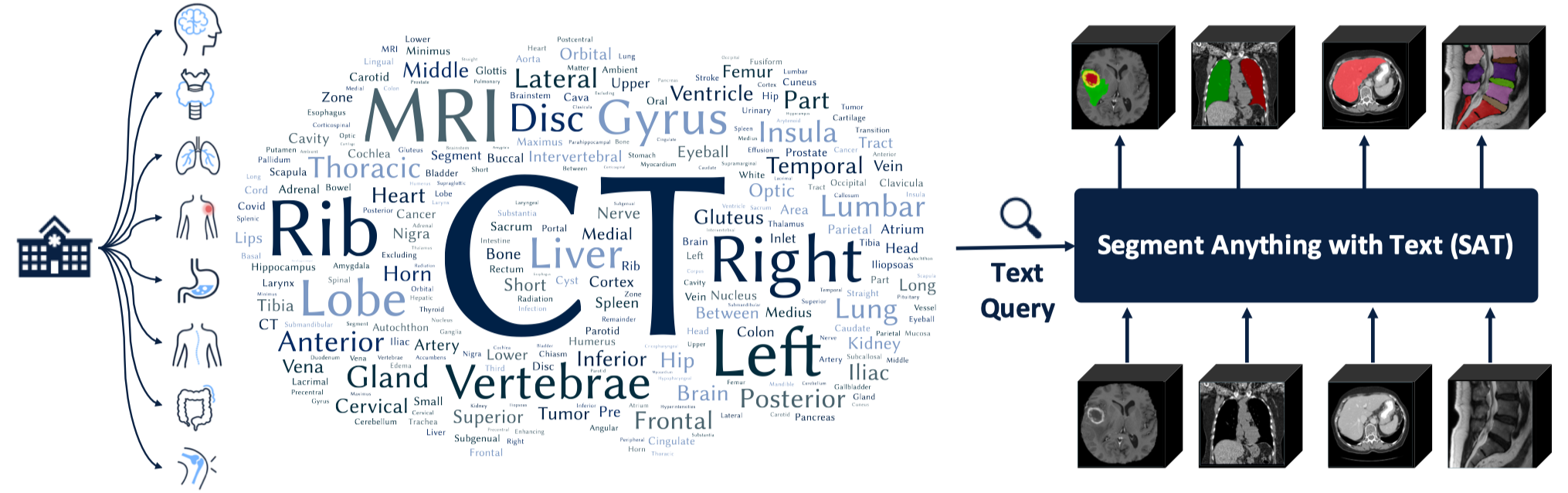

This is the official repository for the paper "One Model to Rule them All: Towards Universal Segmentation for Medical Images with Text Prompts".

Due to potential copyright issues in our knowledge data, we have temporarily withdrawn our paper from arXiv and are currently preparing a revised version of the model and the paper.

- S1. Build the environment following the requirements listed below.

- S2. Download the checkpoints of SAT and the text encoder from Baidu Netdisk or Google Drive.

- S3. Prepare the data for inference in a jsonl file. A demo can be found in `data/inference_demo/demo.jsonl`. Make sure the image path, label names, and modality of each sample are filled in correctly. For the label names and modalities, please refer to Table 8 of the paper. Download links of the datasets involved in training SAT-Nano can be found below.

- S4. Start the inference with the following command:

  ```shell
  torchrun \
  --nproc_per_node=1 \
  --master_port 1234 \
  inference.py \
  --data_jsonl 'data/inference_demo/demo.jsonl' \
  --checkpoint 'path to SAT-Nano checkpoint' \
  --text_encoder_checkpoint 'path to Text encoder checkpoint'
  ```

  Note that you may adjust `--max_queries` and `--batchsize` to accelerate the inference, depending on the computational resources you have.

- S5. Check the path where you store the images. For each image, a folder with the same name will be created. Inside each folder, you will find the predictions for each label (named after the label), the aggregated results for all labels (`prediction.nii.gz`), and the input image (`image.nii.gz`). You can visualize them with ITK-SNAP.
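When there are more than a handful of images, the jsonl file from step S3 can be generated with a short script. The sketch below is a minimal example assuming one JSON object per line with the keys `image`, `label` and `modality`. These key names, the output file name `my_inference.jsonl`, the example image path, and the modality string `"ct"` are hypothetical, so compare against `data/inference_demo/demo.jsonl` and Table 8 of the paper before relying on it.

```python
import json
from pathlib import Path


def write_inference_jsonl(samples, out_path):
    """Write one JSON object per line (jsonl format), one line per image."""
    out_path = Path(out_path)
    out_path.parent.mkdir(parents=True, exist_ok=True)
    with out_path.open("w", encoding="utf-8") as f:
        for sample in samples:
            # Hypothetical key names; check them against data/inference_demo/demo.jsonl.
            record = {
                "image": str(sample["image"]),
                "label": list(sample["label"]),
                "modality": sample["modality"],
            }
            f.write(json.dumps(record) + "\n")
    return out_path


def read_inference_jsonl(path):
    """Read a jsonl file back into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    samples = [
        # Hypothetical image path; label names and modality should follow Table 8 of the paper.
        {"image": "/data/ct/case_0001.nii.gz", "label": ["liver", "spleen"], "modality": "ct"},
    ]
    path = write_inference_jsonl(samples, "my_inference.jsonl")
    for row in read_inference_jsonl(path):
        print(row)
```

The resulting file can then be passed to `--data_jsonl` in step S4. Reading the file back right after writing it is a cheap way to catch malformed lines before launching `torchrun`.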

The implementation of U-Net relies on a customized version of `dynamic-network-architectures`. To install it:

```shell
cd model
pip install -e dynamic-network-architectures-main
```

Some other key requirements:

```text
torch>=1.10.0
numpy==1.21.5
monai==1.1.0
transformers==4.21.3
nibabel==4.0.2
einops==0.6.1
positional_encodings==6.0.1
```
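As a rough sanity check, the snippet below compares the installed package versions against the requirement pins listed above, using only the Python standard library. It is a sketch, not part of this repository: the version parser is deliberately simplified (numeric components only, so local tags such as `+cu113` are ignored), and the import name `dynamic_network_architectures` for the customized U-Net package is an assumption.

```python
from importlib import metadata, util

# Pins copied from the requirement list above.
REQUIREMENTS = [
    "torch>=1.10.0",
    "numpy==1.21.5",
    "monai==1.1.0",
    "transformers==4.21.3",
    "nibabel==4.0.2",
    "einops==0.6.1",
    "positional_encodings==6.0.1",
]


def parse_requirement(req):
    """Split 'name>=x.y.z' or 'name==x.y.z' into (name, operator, version)."""
    for op in (">=", "=="):
        if op in req:
            name, version = req.split(op, 1)
            return name.strip(), op, version.strip()
    raise ValueError(f"unsupported requirement: {req!r}")


def version_tuple(version):
    """Simplified parser: '1.10.0+cu113' -> (1, 10, 0); stops at the first non-numeric part."""
    parts = []
    for piece in version.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def satisfies(installed, op, wanted):
    """Compare versions after zero-padding, so that '1.1' equals '1.1.0'."""
    a, b = version_tuple(installed), version_tuple(wanted)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b if op == ">=" else a == b


def installed_version(name):
    """Return the installed distribution version, or None if it is absent."""
    for candidate in (name, name.replace("_", "-")):
        try:
            return metadata.version(candidate)
        except metadata.PackageNotFoundError:
            continue
    return None


def check_environment(requirements=REQUIREMENTS):
    report = {}
    for req in requirements:
        name, op, wanted = parse_requirement(req)
        found = installed_version(name)
        if found is None:
            report[name] = "missing"
        elif satisfies(found, op, wanted):
            report[name] = f"ok ({found})"
        else:
            report[name] = f"expected {op}{wanted}, found {found}"
    # Assumption: the customized package is importable as `dynamic_network_architectures`.
    has_dna = util.find_spec("dynamic_network_architectures") is not None
    report["dynamic_network_architectures"] = "ok" if has_dna else "missing"
    return report


if __name__ == "__main__":
    for name, status in check_environment().items():
        print(f"{name:32s} {status}")
```

Running it prints one status line per package. A `missing` entry for `dynamic_network_architectures` most likely means the `pip install -e` step above has not been run in the active environment.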

To-do list:

- Release the inference code of SAT-Nano

- Release the model of SAT-Nano

- Release SAT-Ultra

If you use this code for your research or project, please cite:

```bibtex
@article{zhao2023model,
  title={One Model to Rule them All: Towards Universal Segmentation for Medical Images with Text Prompts},
  author={Ziheng Zhao and Yao Zhang and Chaoyi Wu and Xiaoman Zhang and Ya Zhang and Yanfeng Wang and Weidi Xie},
  year={2023},
  journal={arXiv preprint arXiv:2312.17183},
}
```