This is an implementation of the paper: Li, J., et al., "A Frequency Domain Neural Network for Fast Image Super-resolution," International Joint Conference on Neural Networks (IJCNN), 2018.

- OS: CentOS 7 Linux kernel 3.10.0-514.el7.x86_64

- CPU: Intel Xeon(R) CPU E5-2667 v4 @ 3.20GHz x 32

- Memory: 251.4 GB

- GPU: NVIDIA Tesla P4, 8 GB

- CUDA 8.0 (with cuDNN installed)

- Caffe (pycaffe and matcaffe interfaces required)

- Python 2.7.5

- Matlab 2017b

These datasets are the same as those provided by other papers. Readers can use them directly or download them separately:

BSDS100, BSDS200, General-100, Set5, Set14, T91, Train_291, Urban100, and DIV2K.

- Copy the 'train' directory to '.../Caffe_ROOT/examples/' and rename it to 'FNNSR'.

- Place the datasets in your own directory.

- (optional) Run 'data_aug.m' in Matlab for data augmentation, e.g., data_aug('data/BSDS200'), which generates a new directory 'BSDS-200-aug'.

- Run 'generate_train.m' and 'generate_test.m' in Matlab to generate 'train_FNNSR_x[2,3,4].h5' and 'test_FNNSR_x[2,3,4].h5' (modify the data paths in the *.m files first).

- (optional) Modify the parameters in 'create_FNNSR_x[2,3,4].py'.

- Run 'python create_FNNSR_[2,3,4].py' from the command line. It regenerates 'train_x[2,3,4].prototxt' and 'test_x[2,3,4].prototxt'.

- (optional) Modify the parameters in 'solver_x[2,3,4].prototxt'.

- From the 'Caffe_ROOT' directory, run './examples/FNNSR/run_train_x[2,3,4].sh' on the command line.

- Wait for the training procedure to complete.
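The MATLAB preprocessing scripts are not reproduced here, but the kind of work they do can be sketched in Python with NumPy. This is a minimal sketch under several assumptions: the eight rotation/flip copies in `augment` stand in for whatever 'data_aug.m' actually does, block averaging stands in for the bicubic downsampling SR scripts usually use, and the network is assumed to map an LR patch to the corresponding HR patch. All function names are hypothetical. The arrays come out as float32 in the N x C x H x W layout that Caffe's HDF5Data layer reads.

```python
import numpy as np


def augment(img):
    """Hypothetical stand-in for data_aug.m: the 8 rotated/flipped copies of img."""
    variants = []
    for k in range(4):                       # rotations by 0, 90, 180, 270 degrees
        rotated = np.rot90(img, k)
        variants.append(rotated)
        variants.append(np.fliplr(rotated))  # plus the mirrored copy
    return variants


def modcrop(img, scale):
    """Crop height and width down to multiples of `scale` so LR/HR sizes align."""
    h, w = img.shape[:2]
    return img[: h - h % scale, : w - w % scale]


def downsample_box(img, scale):
    """Average each scale x scale block (assumed stand-in for bicubic resizing)."""
    h, w = img.shape
    return img.reshape(h // scale, scale, w // scale, scale).mean(axis=(1, 3))


def extract_pairs(hr_img, scale, lr_patch, stride):
    """Cut aligned (LR, HR) patch pairs from one grayscale image.

    Returns float32 arrays of shape (N, 1, lr_patch, lr_patch) and
    (N, 1, lr_patch * scale, lr_patch * scale).
    """
    hr = modcrop(np.asarray(hr_img, dtype=np.float32), scale)
    lr = downsample_box(hr, scale)
    hp = lr_patch * scale                    # HR patch size
    data, label = [], []
    for y in range(0, lr.shape[0] - lr_patch + 1, stride):
        for x in range(0, lr.shape[1] - lr_patch + 1, stride):
            data.append(lr[y:y + lr_patch, x:x + lr_patch])
            label.append(hr[y * scale:y * scale + hp, x * scale:x * scale + hp])
    # (N, H, W) -> (N, 1, H, W): add the single-channel axis Caffe expects
    return np.stack(data)[:, None], np.stack(label)[:, None]
```

The resulting arrays could then be written to an '.h5' file with a library such as h5py, using dataset names that match the 'top' names of the HDF5Data layer in the generated prototxt (check the actual file rather than assuming 'data' and 'label').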

Example solver parameters for x2 ('solver_x2.prototxt'):

- net: "examples/FNNSR/train_x2.prototxt"

- test_iter: 1000

- test_interval: 100

- base_lr: 1e-3

- lr_policy: "step"

- gamma: 0.5

- stepsize: 5000

- momentum: 0.9

- weight_decay: 1e-04

- display: 100

- max_iter: 100000

- snapshot: 5000

- snapshot_prefix: 'examples/FNNSR/model/x2/x2_d_c_k_'

- solver_mode: GPU

- type: "SGD"
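With lr_policy "step", Caffe multiplies the learning rate by gamma every stepsize iterations, i.e. lr = base_lr * gamma ^ floor(iter / stepsize). The short Python sketch below (the function name `step_lr` is ours, not Caffe's) evaluates that formula with the solver parameters above, which makes it easy to see how fast the rate decays before changing stepsize or gamma.

```python
def step_lr(iteration, base_lr=1e-3, gamma=0.5, stepsize=5000):
    """Learning rate under Caffe's "step" policy:
    base_lr * gamma ** floor(iteration / stepsize)."""
    return base_lr * gamma ** (iteration // stepsize)


max_iter, snapshot = 100000, 5000

for it in (0, 4999, 5000, 10000, 50000, max_iter - 1):
    print("iter {:>6}: lr = {:.3e}".format(it, step_lr(it)))

# One snapshot every `snapshot` iterations over the whole run
print("snapshots: {}".format(max_iter // snapshot))
```

With these settings the rate is halved 19 times by iteration 99,999 (ending near 1.9e-9) and is already below 1e-6 after 50,000 iterations; snapshots are written at iterations 5,000, 10,000, ..., 100,000, i.e. 20 in total.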

- Place the test datasets in the 'data' directory.

- Copy 'test_x[2,3,4].prototxt' from the training directory to the 'test' directory.

- Copy the '*.caffemodel' files from the training directory to the 'test/model' directory.

- Modify the paths in 'test_FNNSR_main.m' if necessary.

- Run 'test_FNNSR_main.m' in Matlab.

- Metrics will be printed, and the reconstructed images will be saved in the 'result' directory.
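The README does not list which metrics 'test_FNNSR_main.m' prints. Assuming PSNR is among them (it is the standard metric for super-resolution), the sketch below shows how it is commonly computed: on the luminance (Y) channel, using the coefficients of MATLAB's rgb2ycbcr, with a border of `shave` pixels (often set to the scale factor) ignored. The border width and the Y-channel convention are assumptions, so the values may differ slightly from those printed by the MATLAB script; the function names are hypothetical.

```python
import numpy as np


def rgb_to_y(img):
    """Luminance of an RGB image with values in [0, 255] (H x W x 3), using the
    BT.601 coefficients MATLAB's rgb2ycbcr applies; Y lies in [16, 235]."""
    img = np.asarray(img, dtype=np.float64)
    return 16.0 + (65.481 * img[..., 0] + 128.553 * img[..., 1]
                   + 24.966 * img[..., 2]) / 255.0


def psnr(reference, estimate, shave=0, peak=255.0):
    """PSNR in dB between two equally sized images, ignoring `shave` border pixels."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    if shave > 0:
        ref = ref[shave:-shave, shave:-shave]
        est = est[shave:-shave, shave:-shave]
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)


# Usage for x3, given HR and reconstructed RGB arrays hr_rgb and sr_rgb:
#   psnr(rgb_to_y(hr_rgb), rgb_to_y(sr_rgb), shave=3)
```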

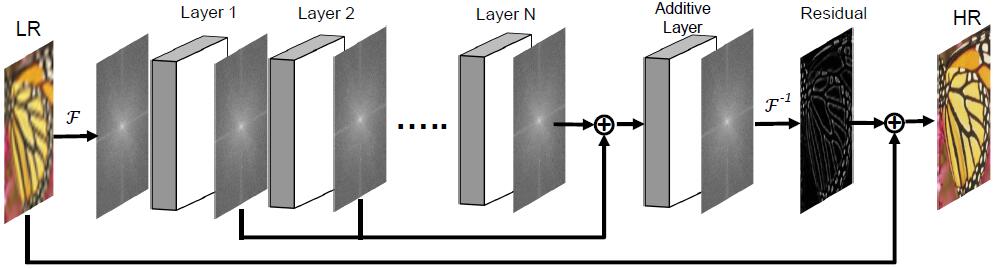

Readers can use Netscope to visualize the network architecture.

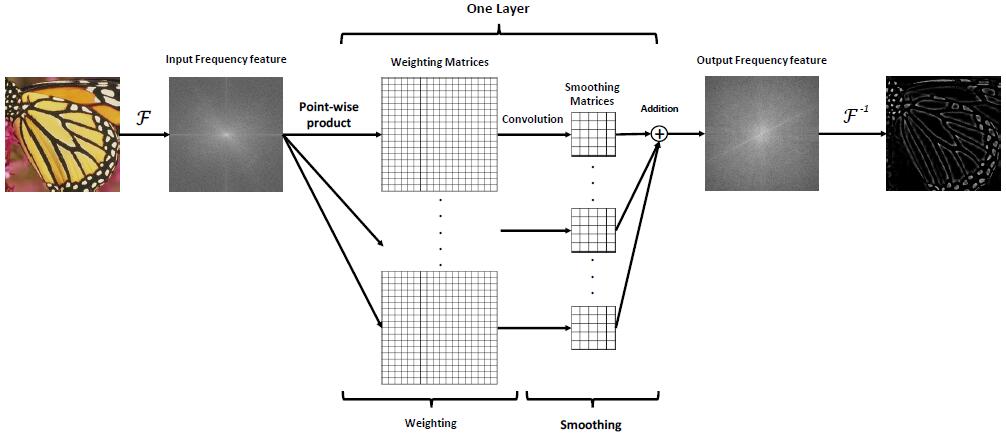

We define a new layer named "ElementWiseProduct" in Caffe, which computes the element-wise product of the input data and a weight matrix. The layer consists of the following files:

- /caffe/include/caffe/layers/elementwise_product_layer.hpp

- /caffe/src/caffe/layers/elementwise_product_layer.cpp

- /caffe/src/caffe/layers/elementwise_product_layer.cu

Copy the three files above to the corresponding locations in your own Caffe directory. Do not forget to add a few definitions to the '.../caffe/src/caffe/proto/caffe.proto' file; detailed steps are provided in 'warning do NOT replace.txt', which is located in our directory along with 'caffe.proto'.
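The ElementWiseProduct layer multiplies its input element-wise by a weight array. By the convolution theorem, an element-wise product of two spectra corresponds to a (circular) convolution in the spatial domain, which is presumably why a frequency-domain network can use such a layer in place of convolution. The NumPy sketch below shows the forward pass and the gradients a layer like this needs for back-propagation, plus a finite-difference check. It is not the Caffe implementation: the class name, the one-sample weight shape (C x H x W) and the broadcasting over the batch are assumptions for illustration.

```python
import numpy as np


class ElementWiseProductSketch:
    """Hypothetical NumPy model of an element-wise product layer.

    Forward:  top = bottom * W   (W has the shape of one sample and is
                                  broadcast over the batch axis N)
    Backward: d_bottom = d_top * W
              d_W      = sum over N of (d_top * bottom)
    """

    def __init__(self, weight):
        self.weight = np.asarray(weight, dtype=np.float64)
        self.bottom = None

    def forward(self, bottom):
        self.bottom = np.asarray(bottom, dtype=np.float64)  # cached for backward
        return self.bottom * self.weight

    def backward(self, top_diff):
        bottom_diff = top_diff * self.weight
        # Each weight is shared by every sample, so its gradient is summed over N
        weight_diff = (top_diff * self.bottom).sum(axis=0)
        return bottom_diff, weight_diff


# Numerical check with the loss L = sum(top * g), whose gradient w.r.t. top is g
rng = np.random.default_rng(0)
x = rng.standard_normal((2, 3, 4, 4))           # N x C x H x W input
g = rng.standard_normal((2, 3, 4, 4))           # upstream gradient dL/dtop
layer = ElementWiseProductSketch(rng.standard_normal((3, 4, 4)))
layer.forward(x)
dx, dw = layer.backward(g)

eps = 1e-6
w_plus = layer.weight.copy()
w_plus[1, 2, 3] += eps
numeric = (np.sum(x * w_plus * g) - np.sum(x * layer.weight * g)) / eps
print(abs(numeric - dw[1, 2, 3]))               # should be close to zero
```

Summing the weight gradient over the batch is the design point to keep in mind when porting this to Caffe: the same weight multiplies every sample, so its gradients accumulate across N.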

If you have any suggestion or question, please do not hesitate to contact me.

Ph.D. candidate, Shengke Xue

College of Information Science and Electronic Engineering

Zhejiang University, Hangzhou, P.R. China