https://arxiv.org/abs/2205.07680

Bo Li, Kai-Tao Xue, Bin Liu, Yu-Kun Lai

conda env create -f environment.yml

conda activate BBDM

For datasets that have paired image data, the path should be formatted as:

your_dataset_path/train/A  # training reference
your_dataset_path/train/B  # training ground truth
your_dataset_path/val/A    # validation reference
your_dataset_path/val/B    # validation ground truth
your_dataset_path/test/A   # testing reference
your_dataset_path/test/B   # testing ground truth

After that, the dataset configuration should be specified in the config file as:

dataset_name: 'your_dataset_name'
dataset_type: 'custom_aligned'
dataset_config:
  dataset_path: 'your_dataset_path'

For colorization and inpainting tasks, the reference images may be generated from the ground truth, so the path should be formatted as:

your_dataset_path/train  # training ground truth
your_dataset_path/val    # validation ground truth
your_dataset_path/test   # testing ground truth

For generality, both the grayscale reference and the ground truth are stored in RGB format in the colorization task. You can use our dataset type or implement your own.
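Because both images are RGB, a grayscale reference derived from the ground truth is typically kept with three identical channels. The following is a minimal NumPy sketch of that idea, assuming uint8 images and ITU-R BT.601 luma weights; `make_gray_reference` is a hypothetical helper, and the repository's `custom_colorization` dataset may perform the conversion differently.

```python
import numpy as np

# Assumption: ITU-R BT.601 luma weights. The repository's own dataset
# class may convert differently (for example with PIL's Image.convert("L")).
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def make_gray_reference(rgb: np.ndarray) -> np.ndarray:
    """Hypothetical helper: (H, W, 3) uint8 RGB ground truth -> (H, W, 3) gray reference."""
    if rgb.ndim != 3 or rgb.shape[-1] != 3:
        raise ValueError(f"expected an (H, W, 3) array, got shape {rgb.shape}")
    luma = rgb.astype(np.float64) @ LUMA_WEIGHTS  # (H, W) luminance
    gray = np.clip(np.rint(luma), 0, 255).astype(np.uint8)
    # Repeat the single channel so the reference is RGB-shaped like the ground truth.
    return np.repeat(gray[..., None], 3, axis=-1)


gt = np.zeros((2, 2, 3), dtype=np.uint8)
gt[0, 0] = [255, 0, 0]  # one pure-red pixel
ref = make_gray_reference(gt)
print(ref.shape, ref[0, 0])  # (2, 2, 3) [76 76 76]
```

Keeping three channels means the reference and ground truth can go through the same RGB VQGAN encoder without special-casing the input shape.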

dataset_name: 'your_dataset_name'
dataset_type: 'custom_colorization or implement_your_dataset_type'
dataset_config:
  dataset_path: 'your_dataset_path'

For the inpainting task, we randomly mask 25%-50% of the ground truth. You can use our dataset type or implement your own.
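The README does not say what shape the mask takes. The following NumPy sketch assumes a single axis-aligned rectangle whose area is guaranteed to fall between 25% and 50% of the image, with hidden pixels filled with 0. Both `random_box_mask` and `apply_mask` are hypothetical helpers, and the mask shape and fill value are assumptions rather than the behaviour of `custom_inpainting`.

```python
import numpy as np


def random_box_mask(height, width, rng, min_frac=0.25, max_frac=0.5):
    """Hypothetical: (H, W) bool mask, True where pixels are hidden.

    Assumes one rectangle covering between min_frac and max_frac of the image.
    """
    total = height * width
    lo = -(-int(min_frac * total * 1000) // 1000)  # ceil(min_frac * total) for these fractions
    hi = int(max_frac * total)                      # floor(max_frac * total)
    # Heights h for which some width w <= width gives lo <= h * w <= hi.
    heights = [h for h in range(1, height + 1)
               if -(-lo // h) <= min(width, hi // h)]
    if not heights:
        raise ValueError("image too small for the requested mask fraction")
    h = int(rng.choice(heights))
    w = int(rng.integers(-(-lo // h), min(width, hi // h) + 1))
    top = int(rng.integers(0, height - h + 1))
    left = int(rng.integers(0, width - w + 1))
    mask = np.zeros((height, width), dtype=bool)
    mask[top:top + h, left:left + w] = True
    return mask


def apply_mask(gt, mask, fill=0):
    """Build the masked reference from an (H, W, 3) ground truth (fill value assumed)."""
    ref = gt.copy()
    ref[mask] = fill
    return ref


rng = np.random.default_rng(0)
gt = np.full((256, 256, 3), 200, dtype=np.uint8)
mask = random_box_mask(256, 256, rng)
ref = apply_mask(gt, mask)
print(0.25 <= mask.mean() <= 0.5)  # True
```

Sampling the box height first and then a compatible width keeps the masked area inside the stated range exactly, rather than drawing a fraction and rounding it.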

dataset_name: 'your_dataset_name'
dataset_type: 'custom_inpainting or implement_your_dataset_type'
dataset_config:
  dataset_path: 'your_dataset_path'

Modify the configuration file based on our templates in configs/Template-*.yaml. Don't forget to specify your VQGAN checkpoint path and dataset path.
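Before training, it may help to confirm that `dataset_path` matches the directory layout expected by the chosen `dataset_type`. The following is a minimal standard-library sketch; `expected_dirs` and `missing_dirs` are hypothetical helpers, not part of the repository, and the mapping from dataset type to layout is taken from the descriptions above.

```python
import tempfile
from pathlib import Path

SPLITS = ("train", "val", "test")


def expected_dirs(dataset_type: str) -> list[str]:
    """Hypothetical helper: sub-directories each layout described in this README needs."""
    if dataset_type == "custom_aligned":
        # Paired data: A holds references, B holds ground truth.
        return [f"{split}/{side}" for split in SPLITS for side in ("A", "B")]
    if dataset_type in ("custom_colorization", "custom_inpainting"):
        # Only ground truth is stored; references are generated from it.
        return list(SPLITS)
    raise ValueError(f"no known layout for dataset_type={dataset_type!r}")


def missing_dirs(dataset_path: str, dataset_type: str) -> list[str]:
    """Return the expected sub-directories that do not exist under dataset_path."""
    root = Path(dataset_path)
    return [d for d in expected_dirs(dataset_type) if not (root / d).is_dir()]


with tempfile.TemporaryDirectory() as tmp:
    for d in ("train/A", "train/B", "val/A", "val/B", "test/A"):
        (Path(tmp) / d).mkdir(parents=True)
    print(missing_dirs(tmp, "custom_aligned"))  # ['test/B']
```

Custom dataset types would need their own entry in `expected_dirs`; unknown types raise rather than silently passing.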

Specify your shell script based on our template in configs/Template-shell.sh.

To train from scratch:

python3 main.py --config configs/Template_LBBDM_f4.yaml --train --sample_at_start --save_top --gpu_ids 0

To resume training, specify the model checkpoint path and the optimizer checkpoint path, either in the training section of the configuration file or on the command line:

python3 main.py --config configs/Template_LBBDM_f4.yaml --train --sample_at_start --save_top --gpu_ids 0 --resume_model path/to/model_ckpt --resume_optim path/to/optim_ckpt

To sample the whole test set and evaluate metrics:

python3 main.py --config configs/Template_LBBDM_f4.yaml --sample_to_eval --gpu_ids 0 --resume_model path/to/model_ckpt

Note that the optimizer checkpoint is not needed for testing, and a checkpoint path given on the command line takes priority over one given in the configuration file.
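The precedence rule can be pictured with a small `argparse` sketch. It only mirrors the flags used in the commands in this README; the real `main.py` may parse them differently, and the config keys `model_ckpt` and `optim_ckpt` are hypothetical stand-ins for wherever the template actually stores checkpoint paths.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Flags as they appear in the commands above; main.py may define more.
    p = argparse.ArgumentParser()
    p.add_argument("--config", required=True)
    p.add_argument("--train", action="store_true")
    p.add_argument("--sample_to_eval", action="store_true")
    p.add_argument("--sample_at_start", action="store_true")
    p.add_argument("--save_top", action="store_true")
    p.add_argument("--gpu_ids", default="0")
    p.add_argument("--resume_model", default=None)
    p.add_argument("--resume_optim", default=None)
    return p


def resolve_checkpoints(args, cfg):
    """Command-line paths win; otherwise fall back to the (hypothetical) config keys."""
    model_ckpt = args.resume_model or cfg.get("model_ckpt")
    # The optimizer state only matters when continuing training.
    optim_ckpt = (args.resume_optim or cfg.get("optim_ckpt")) if args.train else None
    return model_ckpt, optim_ckpt


cfg = {"model_ckpt": "from/config/model.pth", "optim_ckpt": "from/config/optim.pth"}
args = build_parser().parse_args([
    "--config", "configs/Template_LBBDM_f4.yaml", "--sample_to_eval",
    "--gpu_ids", "0", "--resume_model", "path/to/model_ckpt",
])
print(resolve_checkpoints(args, cfg))  # ('path/to/model_ckpt', None)
```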

Finally, run your shell script:

sh shell/your_shell.sh

For simplicity, we re-trained all of the models on top of the same VQGAN model from LDM. The pre-trained VQGAN models provided by LDM can be used directly for all tasks:

https://github.com/CompVis/latent-diffusion#bibtex

All of our models can be found here: https://pan.baidu.com/s/1xmuAHrBt9rhj7vMu5HIhvA?pwd=hubb

Our code is implemented on top of Latent Diffusion Model (LDM) and VQGAN.

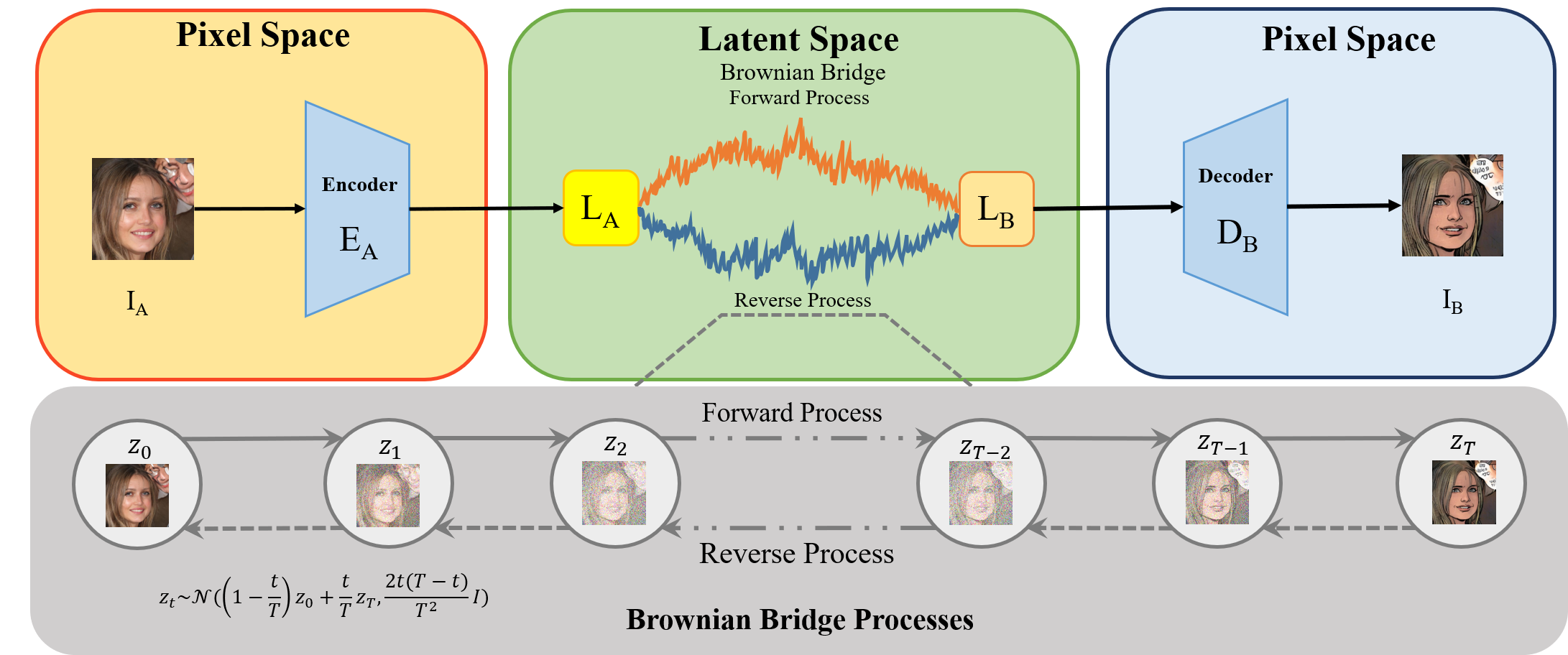

@inproceedings{li2023bbdm,
  title={BBDM: Image-to-image translation with Brownian bridge diffusion models},
  author={Li, Bo and Xue, Kaitao and Liu, Bin and Lai, Yu-Kun},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={1952--1961},
  year={2023}
}