The goal of this project is to upscale low-resolution images (currently only x2 scaling). To achieve this, we use the Residual Dense Network (RDN), a convolutional neural network described in Residual Dense Network for Image Super-Resolution (Zhang et al. 2018).

We wrote a Keras implementation of the network and set up a Docker image to carry out training and testing. You can train either locally or in the cloud with AWS and nvidia-docker, using only a few commands.

We welcome any kind of contribution. If you wish to contribute, please see the Contribute section.

- Sample Results

- Getting Started

- Predict

- Train

- Unit Testing

- Additional Information

- Contribute

- Maintainers

- License

Below we show the original low-resolution image (left), the super-resolved output of the network (centre) and the result of the baseline scaling obtained with GIMP bicubic scaling (right).
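To reproduce a comparable bicubic baseline without GIMP, a minimal sketch with Pillow could look like the following. It assumes Pillow is installed; its bicubic filter is not guaranteed to match GIMP's output pixel for pixel, and the function name bicubic_upscale is hypothetical, not part of this repository.

```python
from PIL import Image


def bicubic_upscale(img: Image.Image, scale: int = 2) -> Image.Image:
    """Upscale an image by an integer factor with bicubic interpolation (baseline only)."""
    width, height = img.size
    return img.resize((width * scale, height * scale), Image.BICUBIC)


# Example with an in-memory 48x32 image; use Image.open(...) for a real file.
lr = Image.new("RGB", (48, 32), color=(120, 60, 30))
sr_baseline = bicubic_upscale(lr)
print(sr_baseline.size)  # (96, 64)
```

The same x2 factor as the network keeps the baseline and the network output the same size, so they can be compared side by side.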

- Install Docker

- Build the Docker image for local usage:

docker build -t isr . -f Dockerfile.cpu

To train remotely on AWS EC2 with a GPU:

- Install Docker Machine

- Install the AWS Command Line Interface

- Set up an EC2 instance for training with GPU support. You can follow our nvidia-docker-keras project to get started.

Place your images (PNG or JPG) under data/input; the results will be saved under data/output.

Check config.json for more information on parameters and default folders.

NOTE: make sure that your images have only 3 channels (RGB). The PNG format allows for 4 (RGBA, with an alpha channel).
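One way to enforce the 3-channel requirement is to convert the input images with Pillow before running prediction. The sketch below assumes Pillow is installed; ensure_rgb and convert_folder_to_rgb are hypothetical helpers, not part of this repository. Note that convert("RGB") simply discards the alpha channel, so fully transparent pixels keep whatever RGB values they hold rather than being composited onto a background.

```python
from pathlib import Path

from PIL import Image

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def ensure_rgb(img: Image.Image) -> Image.Image:
    """Return a 3-channel RGB version of img, discarding any alpha channel."""
    return img if img.mode == "RGB" else img.convert("RGB")


def convert_folder_to_rgb(folder: str) -> list:
    """Overwrite every non-RGB PNG/JPG in folder with an RGB copy; return the converted paths."""
    converted = []
    for path in sorted(Path(folder).iterdir()):
        if path.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        with Image.open(path) as img:
            if img.mode == "RGB":
                continue
            rgb = ensure_rgb(img)  # convert() loads the pixels, so the file can be closed
        rgb.save(path)
        converted.append(path)
    return converted
```

For example, calling convert_folder_to_rgb("data/input") from the main folder before the prediction command would rewrite any RGBA or palette PNGs in place.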

To run prediction locally, from the main folder run

docker run -v $(pwd)/data/:/home/isr/data isr test pre-trained

To run prediction on the remote machine (using our DockerHub image), run

sudo nvidia-docker run -v $(pwd)/isr/data/:/home/isr/data idealo/image-super-resolution-gpu test pre-trained

Train either locally with (or without) Docker, or on the cloud with nvidia-docker and AWS.

Place your training and validation datasets under data/custom with the following structure:

- Training low resolution images under data/custom/lr/train

- Training high resolution images under data/custom/hr/train

- Validation low resolution images under data/custom/lr/validation

- Validation high resolution images under data/custom/hr/validation
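The folder layout for a custom dataset can be created and sanity-checked with a few lines of standard-library Python. This is a minimal sketch: create_layout and count_images are hypothetical helpers, and the expectation that LR and HR counts match for each split is an assumption (every LR image presumably needs an HR counterpart), not a rule stated by the training code.

```python
from pathlib import Path

# Layout under data/custom: {lr,hr}/{train,validation}
RESOLUTIONS = ("lr", "hr")
SPLITS = ("train", "validation")
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def create_layout(root: str = "data/custom") -> list:
    """Create the four dataset folders if they are missing and return their paths."""
    paths = []
    for res in RESOLUTIONS:
        for split in SPLITS:
            path = Path(root) / res / split
            path.mkdir(parents=True, exist_ok=True)
            paths.append(path)
    return paths


def count_images(root: str = "data/custom") -> dict:
    """Count PNG/JPG files in each folder, keyed like 'lr/train'."""
    counts = {}
    for res in RESOLUTIONS:
        for split in SPLITS:
            folder = Path(root) / res / split
            files = folder.iterdir() if folder.is_dir() else []
            counts[f"{res}/{split}"] = sum(
                1 for p in files if p.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts
```

After copying your images, comparing count_images()["lr/train"] with count_images()["hr/train"] (and likewise for validation) is a quick way to spot missing files before starting a long training run.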

To train on this dataset, replace <dataset-flag> with custom-data in the setup and train commands.

We trained our model on the DIV2K dataset. If you want to train on this dataset too, you can download it by running python scripts/getDIV2K.py from the main folder. This will place the dataset under its default folders (check config.json for more details). Use the div2k flag for the train command to train on this dataset.

- From the main folder run

bash scripts/setup.sh <name-of-ec2-instance> build install update <dataset-flag>

The available dataset flags are div2k and custom-data. Make sure you have downloaded the dataset first.

- ssh into the machine

docker-machine ssh <name-of-ec2-instance>

- Run training with

sudo nvidia-docker run -v $(pwd)/isr/data/:/home/isr/data -v $(pwd)/isr/logs/:/home/isr/logs -it isr train <dataset-flag>

The logs folder is mounted in the Docker container. To monitor training with TensorBoard, open another terminal on the EC2 instance and run

tensorboard --logdir /home/ubuntu/isr/logs

and, on your local machine, forward port 6006 with

docker-machine ssh <name-of-ec2-instance> -N -L 6006:localhost:6006

A few helpful details:

- DO NOT include a TensorFlow version in requirements.txt, as it would interfere with the version installed in the TensorFlow Docker image.

- DO NOT use the Ubuntu Server 18.04 LTS AMI. Use the Ubuntu Server 16.04 LTS AMI instead.

To train locally with Docker, from the main project folder run

docker run -v $(pwd)/data/:/home/isr/data -v $(pwd)/logs/:/home/isr/logs -it isr train <dataset-flag>

To run the unit tests, install Anaconda and create the conda environment with

conda env create -f src/environment.yml

From the ./src folder run

python -m pytest -vs --disable-pytest-warnings tests

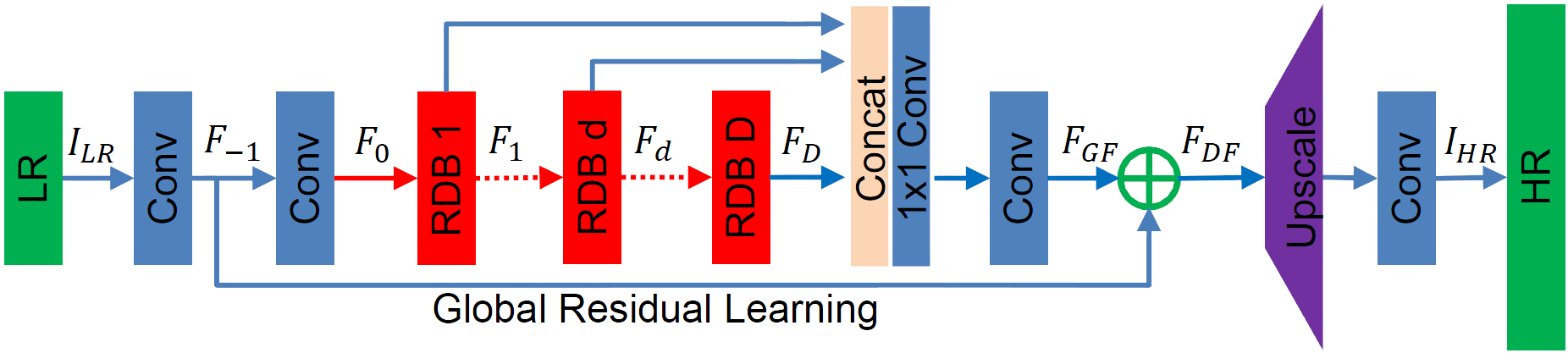

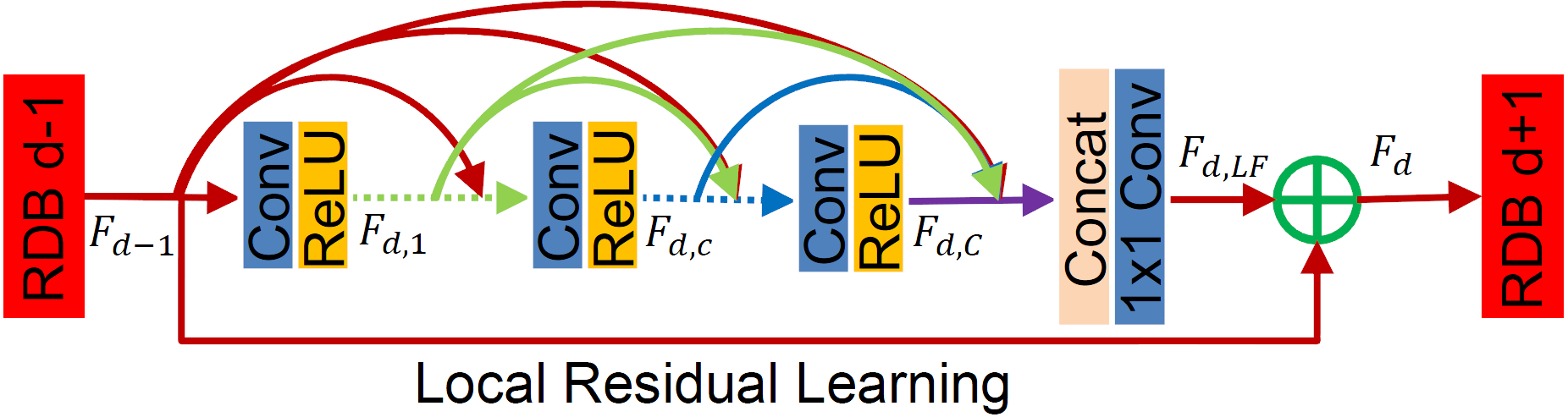

The main parameters of the architecture are:

- D - number of Residual Dense Blocks (RDB)

- C - number of convolutional layers stacked inside an RDB

- G - number of feature maps of each convolutional layer inside the RDBs

- G0 - number of feature maps for convolutions outside of RDBs and of each RDB output
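To see how D, C, G and G0 interact, the sketch below counts the trainable parameters of the Residual Dense Blocks, following the block structure in Zhang et al. (2018): the c-th 3x3 convolution sees the block input (G0 maps) concatenated with the outputs of the c - 1 previous layers (G maps each), and a 1x1 "local feature fusion" convolution maps the G0 + C*G concatenated maps back to G0 so the result can be added to the block input. It assumes every convolution has a bias; the helper names are hypothetical, and this repository's Keras layers may differ in such details.

```python
def conv_params(in_channels: int, out_channels: int, kernel: int) -> int:
    """Weights plus biases of a 2D convolution with a square kernel."""
    return kernel * kernel * in_channels * out_channels + out_channels


def rdb_params(C: int, G: int, G0: int) -> int:
    """Trainable parameters of one Residual Dense Block."""
    # Densely connected 3x3 convolutions: input grows by G maps per layer.
    dense = sum(conv_params(G0 + (c - 1) * G, G, 3) for c in range(1, C + 1))
    # 1x1 local feature fusion back to G0 maps (the residual add has no weights).
    fusion = conv_params(G0 + C * G, G0, 1)
    return dense + fusion


def all_rdbs_params(D: int, C: int, G: int, G0: int) -> int:
    """Parameters of the D stacked RDBs only (no shallow, global fusion or upsampling layers)."""
    return D * rdb_params(C, G, G0)


print(rdb_params(C=6, G=64, G0=64))  # 803264
print(all_rdbs_params(D=20, C=6, G=64, G0=64))  # 16065280
```

With the settings used for the pre-trained weights (D=20, G=64, C=6) and an assumed G0=64, the RDBs alone hold roughly 16.1 million parameters. Because each layer's input grows linearly with C, the cost of a block grows roughly quadratically with C, while it grows only linearly with D.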

source: Residual Dense Network for Image Super-Resolution

Model weights pre-trained on the DIV2K dataset are available under weights/sample_weights.

The model was trained with the parameters --D=20 --G=64 --C=6 (see the architecture parameters for details) for 86 epochs, each of 1000 batches of 8 augmented 32x32 patches taken from the LR images.
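Training on patches requires cropping the same region from the LR image and from its x2 HR counterpart. The NumPy sketch below shows one way to do this; the random horizontal flip and 90-degree rotation are assumed augmentations for illustration, not necessarily the ones this repository applies, and random_patch_pair and random_batch are hypothetical names.

```python
import numpy as np


def random_patch_pair(lr, hr, patch_size=32, scale=2, rng=None, augment=True):
    """Crop an aligned (LR, HR) patch pair; lr is (h, w, 3), hr is (h*scale, w*scale, 3)."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = lr.shape[:2]
    if hr.shape[:2] != (h * scale, w * scale):
        raise ValueError("HR image must be exactly `scale` times the LR size")
    # Top-left corner of the LR patch; the HR corner is the same point times scale.
    y = int(rng.integers(0, h - patch_size + 1))
    x = int(rng.integers(0, w - patch_size + 1))
    lr_patch = lr[y:y + patch_size, x:x + patch_size]
    hr_patch = hr[y * scale:(y + patch_size) * scale, x * scale:(x + patch_size) * scale]
    if augment:
        # Apply the same random flip and rotation to both patches to keep them aligned.
        if rng.random() < 0.5:
            lr_patch, hr_patch = lr_patch[:, ::-1], hr_patch[:, ::-1]
        k = int(rng.integers(0, 4))
        lr_patch, hr_patch = np.rot90(lr_patch, k), np.rot90(hr_patch, k)
    return lr_patch, hr_patch


def random_batch(lr, hr, batch_size=8, **kwargs):
    """Stack batch_size patch pairs: LR (batch, p, p, 3) and HR (batch, p*scale, p*scale, 3)."""
    pairs = [random_patch_pair(lr, hr, **kwargs) for _ in range(batch_size)]
    return np.stack([p[0] for p in pairs]), np.stack([p[1] for p in pairs])
```

With 32x32 LR patches at x2 scaling, each target is a 64x64 HR patch. Over the whole run, 86 epochs x 1000 batches x 8 patches amounts to 688,000 LR patches seen by the network.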

We welcome all kinds of contributions: models trained on different datasets, new model architectures and/or hyperparameter combinations that improve the performance of the currently published model.

We will publish the performance of new models in this repository.

- Francesco Cardinale, github: cfrancesco

- Zubin John, github: valiantone

See LICENSE for details.