This HCI (Human-Computer Interaction) application in Python (3.6) lets you control your mouse cursor with your facial movements, using just a regular webcam. It's hands-free: no wearable hardware or sensors are needed. The goal is for it to become an open gesture control platform, with user-definable functions that run when the user performs supported gestures or gesture combinations.

Issues and feature requests welcome!

Delete data/bounds.json and run the program to enter the calibration routine. This walks you through a series of facial movements to calibrate the thresholds and scales needed to recognise your particular forms of the following facial expressions:

- Looking around the screen, pointing with your nose. (Bound to cursor movement)

- Squinting your eyes. (Bound to fine mouse control i.e. slowing your cursor)

- Widening your eyes. (Bound to fast cursor movement)

- Blinking.

- Winking either eye. (Bound to left and right click)

- Raising your eyebrows.

- Making an o shape with your mouth. (Bound to scrolling mode, combined with head movement)

- Opening your mouth wide.

- Making an O shape with your mouth aka pog. (Bound to entering calibration mode)

- Smiling.

- Frowning.

- Raising either hand.

- Looking around the screen with your eyes.
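Calibration turns a few seconds of samples of each expression into thresholds. The following is a minimal sketch of one way this could work. It assumes a metric (such as the eye aspect ratio) is sampled while you hold a neutral face and again while you hold the expression. The helper names and the midpoint rule are illustrative assumptions, not necessarily what calibration.py does:

```python
from statistics import mean
from typing import Sequence


def midpoint_threshold(neutral: Sequence[float], expression: Sequence[float]) -> float:
    """Hypothetical helper: place a decision threshold halfway between the
    average metric value for a neutral face and for the held expression."""
    if not neutral or not expression:
        raise ValueError("need at least one sample of each state")
    return (mean(neutral) + mean(expression)) / 2.0


def is_expression(value: float, threshold: float, expression_is_lower: bool) -> bool:
    """Classify one frame's metric against a calibrated threshold.

    Squinting lowers the eye aspect ratio, so use expression_is_lower=True;
    widening the eyes raises it, so use expression_is_lower=False.
    """
    return value < threshold if expression_is_lower else value > threshold
```

For example, neutral samples averaging about 0.30 and squint samples averaging about 0.20 give a threshold of about 0.25. A midpoint is the simplest choice. A real routine might instead weight the threshold by each state's spread, so that noisy expressions get more margin.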

This project adapts and builds upon the code written by Akshay Chandra Lagandula [8]; the original repo is here.

Special thanks to Adrian Rosebrock for his amazing blog posts [2] [3], code snippets and his imutils library [7] that played an important role in making this idea a reality.

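Note that the current functionality differs from the original, with smoothed mouse movement and scrolling.

The required package versions are:

| Package       | Version  |
| ------------- | -------- |
| dlib          | 19.22.1  |
| imutils       | 0.5.4    |
| numpy         | 1.21.3   |
| opencv-python | 4.5.3.56 |
| PyAutoGUI     | 0.9.53   |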
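The exact smoothing used here is not spelled out. A common way to get smooth cursor motion is an exponential moving average (EMA) over the tracked nose position, with the output scaled down while squinting and up while the eyes are widened (matching the bindings listed earlier). The following is a minimal sketch of that technique, not necessarily this project's approach. The class and function names, the `alpha` value and the speed factors are illustrative assumptions:

```python
from typing import Optional, Tuple


class SmoothedPointer:
    """Hypothetical helper: exponential moving average over a tracked
    (x, y) point, e.g. the nose tip."""

    def __init__(self, alpha: float = 0.3) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        # Weight of the newest sample: lower is smoother but laggier.
        self.alpha = alpha
        self._state: Optional[Tuple[float, float]] = None

    def update(self, x: float, y: float) -> Tuple[float, float]:
        """Feed one raw position and return the smoothed position."""
        if self._state is None:
            self._state = (x, y)  # first sample initialises the average
        else:
            sx, sy = self._state
            a = self.alpha
            self._state = (sx + a * (x - sx), sy + a * (y - sy))
        return self._state


def cursor_delta(offset: Tuple[float, float], squinting: bool, eyes_wide: bool,
                 base_speed: float = 1.0, slow: float = 0.3,
                 fast: float = 2.5) -> Tuple[float, float]:
    """Scale an offset from the neutral head position into a cursor delta.
    Squinting takes priority over wide eyes if both are reported."""
    speed = base_speed
    if squinting:
        speed *= slow
    elif eyes_wide:
        speed *= fast
    return (offset[0] * speed, offset[1] * speed)
```

In a frame loop, the smoothed nose position minus the calibrated neutral position would be passed to `cursor_delta`. The result could then be handed to PyAutoGUI's `pyautogui.moveRel`. The same EMA can smooth head movement in scrolling mode.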

Order of execution:

- Follow the installation guides for NumPy, OpenCV, PyAutoGUI, Dlib and Imutils, and install the versions of the libraries listed above.

- Make sure you have downloaded the model. Read the README.txt file inside the model folder for the link.

- Run the program: `python mouse-cursor-control.py`

Please raise an issue in case of any errors.

The control logic is defined in control.py; define the actions to perform for each gesture there. If you build anything cool, please open a PR.
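The structure of control.py is not reproduced here. The following hypothetical sketch shows one way gesture-to-action bindings could be organised: a dispatcher that maps sets of simultaneously active gestures to callables. The gesture names, `GestureDispatcher`, the "largest matching combination wins" rule and the left-wink/left-click pairing are all assumptions. Actions receive a `mouse` object, which in practice could be the `pyautogui` module (its `click(button=...)` and `scroll(clicks)` functions exist). It is injected so the logic can run without a display:

```python
from typing import Callable, Dict, FrozenSet, Iterable, Optional


class GestureDispatcher:
    """Hypothetical registry mapping gesture combinations to actions."""

    def __init__(self) -> None:
        self._bindings: Dict[FrozenSet[str], Callable[[], None]] = {}

    def bind(self, gestures: Iterable[str], action: Callable[[], None]) -> None:
        self._bindings[frozenset(gestures)] = action

    def dispatch(self, active: Iterable[str]) -> Optional[FrozenSet[str]]:
        """Run the action whose gesture set is the largest subset of the
        currently active gestures, so combinations win over single gestures.
        Ties go to the binding registered first. Returns the matched set."""
        active_set = frozenset(active)
        matches = [g for g in self._bindings if g <= active_set]
        if not matches:
            return None
        best = max(matches, key=len)
        self._bindings[best]()
        return best


def default_bindings(mouse) -> GestureDispatcher:
    """Example bindings loosely mirroring the gesture list at the top of
    this README; `mouse` is assumed to expose pyautogui-style click/scroll."""
    d = GestureDispatcher()
    d.bind({"wink_left"}, lambda: mouse.click(button="left"))
    d.bind({"wink_right"}, lambda: mouse.click(button="right"))
    # Scrolling mode: o-shaped mouth combined with head movement.
    d.bind({"mouth_o", "head_up"}, lambda: mouse.scroll(1))
    d.bind({"mouth_o", "head_down"}, lambda: mouse.scroll(-1))
    return d
```

Calling `default_bindings(pyautogui)` once and then `dispatch(...)` with each frame's detected gestures would trigger the matching action.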

For the names and descriptions of the facial metrics see the definition of the Face class in facedetector.py.

For the definitions, names and descriptions of the threshold values and scale factors, see the definition of Bounds in calibration.py.
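The real fields of Bounds live in calibration.py and are not listed here. The following minimal sketch shows the load-or-calibrate behaviour described at the top, where deleting data/bounds.json triggers calibration. It assumes Bounds is stored as a flat JSON object. The field names and the `calibrate` callback are hypothetical:

```python
import json
import os
from typing import Callable, NamedTuple


class Bounds(NamedTuple):
    """Hypothetical subset of thresholds and scale factors."""
    squint_threshold: float
    wide_eye_threshold: float
    wink_threshold: float
    cursor_scale: float


def load_or_calibrate(path: str, calibrate: Callable[[], Bounds]) -> Bounds:
    """Load saved bounds if the file exists; otherwise run the calibration
    callback and save its result so later runs skip calibration."""
    if os.path.exists(path):
        with open(path) as f:
            return Bounds(**json.load(f))
    bounds = calibrate()
    directory = os.path.dirname(path)
    if directory:
        os.makedirs(directory, exist_ok=True)
    with open(path, "w") as f:
        json.dump(bounds._asdict(), f, indent=2)
    return bounds
```

Storing named fields rather than a positional list keeps the file readable and lets you hand-tune a single threshold without re-running calibration.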

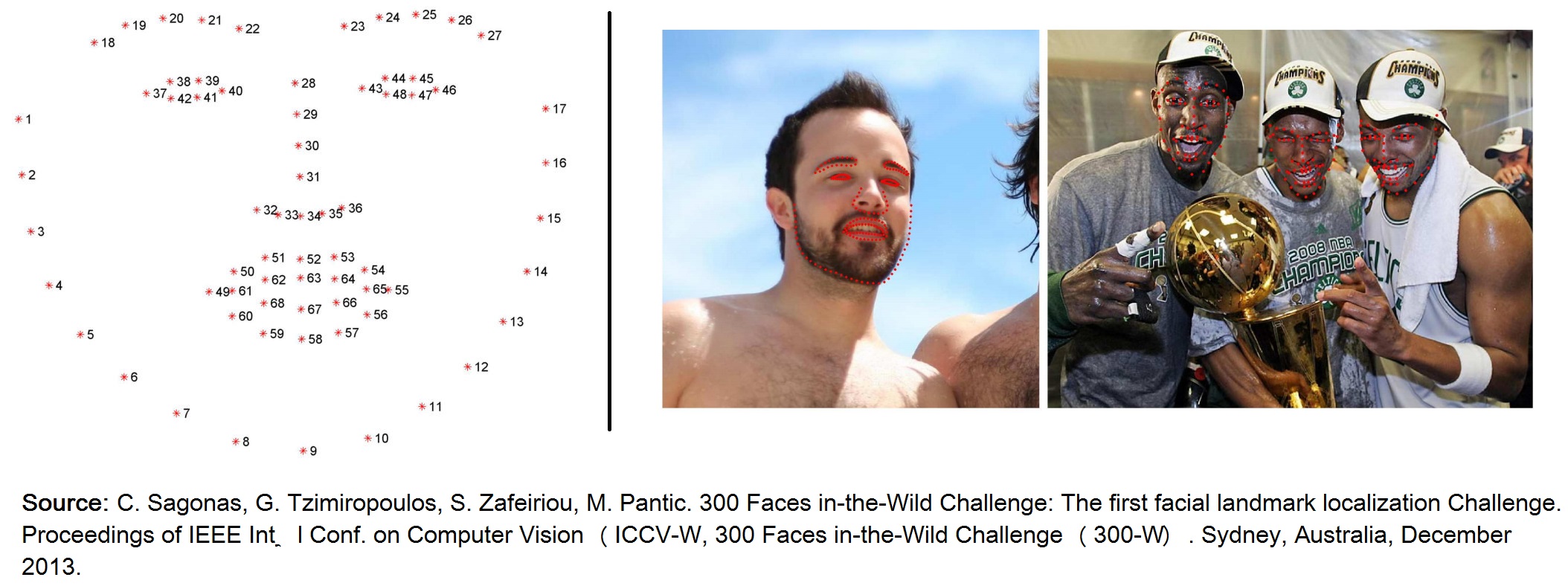

This project is centered on predicting the landmarks of a given face. These landmarks make a lot possible, from detecting eye blinks [3] in a video to predicting the emotions of the subject, and their applications are wide-ranging.

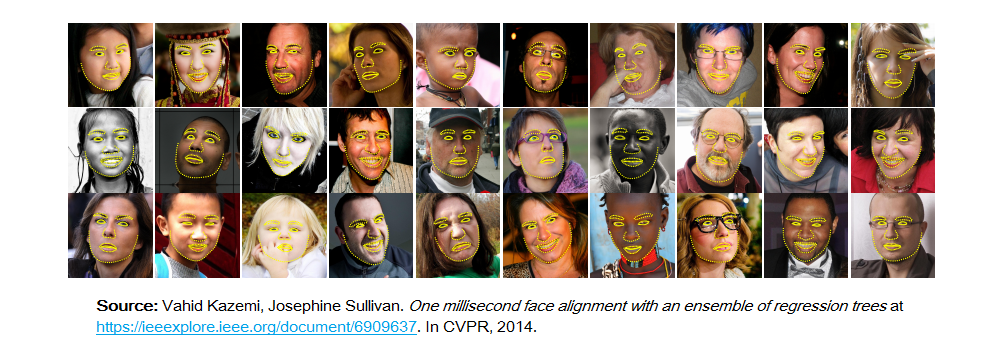

Dlib's prebuilt model, which is essentially an implementation of [4], not only performs fast face detection but also accurately predicts 68 2D facial landmarks. Very handy.
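The 68 landmarks are grouped into facial regions. The index ranges below mirror imutils' `face_utils.FACIAL_LANDMARKS_68_IDXS` (0-based, end-exclusive, region names as in imutils). The `region` helper and the `NOSE_TIP` constant are illustrative additions, not part of this project's code:

```python
from typing import Dict, List, Sequence, Tuple

# Region -> (start, end) slice into the 68 landmarks, 0-based and
# end-exclusive, mirroring imutils.face_utils.FACIAL_LANDMARKS_68_IDXS.
LANDMARK_REGIONS: Dict[str, Tuple[int, int]] = {
    "mouth": (48, 68),
    "inner_mouth": (60, 68),  # overlaps "mouth"
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "nose": (27, 36),
    "jaw": (0, 17),
}

# 0-based index of the nose tip, a natural point for nose-pointing control.
NOSE_TIP = 30

Point = Tuple[int, int]


def region(landmarks: Sequence[Point], name: str) -> List[Point]:
    """Return the landmarks belonging to one facial region."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks, got %d" % len(landmarks))
    start, end = LANDMARK_REGIONS[name]
    return list(landmarks[start:end])
```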

Using these predicted landmarks, we can build features that let us detect certain actions: for example, the eye aspect ratio (EAR) [1] to detect a blink or a wink, or the mouth aspect ratio to detect a yawn or even a pout. In this project, these actions are programmed as triggers that control the mouse cursor through the PyAutoGUI library.
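The eye aspect ratio from [1] is EAR = (||p2 - p6|| + ||p3 - p5||) / (2 ||p1 - p4||). Here p1 to p6 are the six landmarks of one eye: p1 and p4 are the corners, p2 and p3 lie on the upper lid, and p5 and p6 lie on the lower lid. EAR stays roughly constant while the eye is open and drops towards zero as it closes. The following is a minimal NumPy sketch. The wink/blink rule in `classify_eyes` is a hypothetical illustration, and its threshold and margin are placeholders that calibration would normally supply:

```python
import numpy as np


def eye_aspect_ratio(eye) -> float:
    """EAR of Soukupova and Cech for six (x, y) eye landmarks ordered
    p1..p6 as in the 68-point scheme."""
    p = np.asarray(eye, dtype=float)
    if p.shape != (6, 2):
        raise ValueError("expected 6 (x, y) points")
    vertical = np.linalg.norm(p[1] - p[5]) + np.linalg.norm(p[2] - p[4])
    horizontal = np.linalg.norm(p[0] - p[3])
    return float(vertical / (2.0 * horizontal))


def classify_eyes(left_ear: float, right_ear: float,
                  closed_threshold: float = 0.2, margin: float = 0.05) -> str:
    """Hypothetical rule: 'blink' if both eyes are closed; a wink if only one
    is closed and the other is open by at least `margin` above the threshold.
    Ambiguous cases fall back to 'open'."""
    left_closed = left_ear < closed_threshold
    right_closed = right_ear < closed_threshold
    if left_closed and right_closed:
        return "blink"
    if left_closed and right_ear >= closed_threshold + margin:
        return "wink_left"
    if right_closed and left_ear >= closed_threshold + margin:
        return "wink_right"
    return "open"
```

The margin stops a half-closed (squinting) open eye from turning a blink into a wink. In practice a gesture is usually also required to persist for a few consecutive frames before it triggers a click.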

The model offers two important functions: a detector that finds the face and a predictor that locates its landmarks. The face detector uses the classic Histogram of Oriented Gradients (HOG) feature combined with a linear classifier, an image pyramid, and a sliding window detection scheme.
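The two pieces chain together per frame. The following minimal sketch shows per-frame landmark extraction. `dlib.get_frontal_face_detector`, `dlib.shape_predictor` and the `part(i).x` / `part(i).y` accessors are dlib's Python API. The function names and the model path (which assumes the decompressed file sits in the model folder) are illustrative. The detector and predictor are passed in as arguments, so the conversion logic can run without a camera or the model file:

```python
import numpy as np


def shape_to_array(shape, num_points: int = 68) -> np.ndarray:
    """Convert a dlib full_object_detection into a (num_points, 2) int array,
    similar in spirit to imutils.face_utils.shape_to_np."""
    coords = np.zeros((num_points, 2), dtype=int)
    for i in range(num_points):
        part = shape.part(i)
        coords[i] = (part.x, part.y)
    return coords


def detect_landmarks(gray, detector, predictor, upsample: int = 0):
    """Run the HOG face detector on a grayscale frame, then the landmark
    predictor on every detected face rectangle."""
    rects = detector(gray, upsample)
    return [shape_to_array(predictor(gray, rect)) for rect in rects]


def main(model_path: str = "model/shape_predictor_68_face_landmarks.dat") -> None:
    """Grab one webcam frame and print how many faces were found.
    Needs opencv-python, dlib, a webcam and the model file."""
    import cv2
    import dlib

    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(model_path)
    cap = cv2.VideoCapture(0)
    try:
        ok, frame = cap.read()
        if not ok:
            raise RuntimeError("could not read a frame from the webcam")
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        faces = detect_landmarks(gray, detector, predictor)
        print("faces found:", len(faces))
    finally:
        cap.release()
```

Calling `main()` on a machine with a webcam runs the full pipeline once. Upsampling (`upsample=1`) helps find smaller faces at the cost of speed.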

The facial landmark estimator was created using dlib's implementation of the paper One Millisecond Face Alignment with an Ensemble of Regression Trees by Vahid Kazemi and Josephine Sullivan, CVPR 2014. It was trained on the iBUG 300-W face landmark dataset: C. Sagonas, E. Antonakos, G. Tzimiropoulos, S. Zafeiriou, M. Pantic. 300 Faces In-the-Wild Challenge: Database and results. Image and Vision Computing (IMAVIS), Special Issue on Facial Landmark Localisation "In-The-Wild". 2016.

You can get the trained model file from http://dlib.net/files: download shape_predictor_68_face_landmarks.dat.bz2 and decompress it. The resulting .dat file has to be in the model folder.
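The download and decompression step can be scripted with the standard library alone. The following is a minimal sketch. The URL is the dlib.net file named above, while the function names and the default `model` output folder are illustrative assumptions. `download_model` is not run automatically because the archive is large:

```python
import bz2
import os
import shutil
import urllib.request

MODEL_URL = "http://dlib.net/files/shape_predictor_68_face_landmarks.dat.bz2"


def decompress_model(bz2_path: str, out_dir: str = "model") -> str:
    """Decompress a .dat.bz2 archive into out_dir and return the .dat path."""
    os.makedirs(out_dir, exist_ok=True)
    name = os.path.basename(bz2_path)
    if name.endswith(".bz2"):
        name = name[: -len(".bz2")]
    out_path = os.path.join(out_dir, name)
    # Stream the data so the whole model never has to sit in memory.
    with bz2.open(bz2_path, "rb") as src, open(out_path, "wb") as dst:
        shutil.copyfileobj(src, dst)
    return out_path


def download_model(out_dir: str = "model") -> str:
    """Download the archive from dlib.net into out_dir, then decompress it."""
    os.makedirs(out_dir, exist_ok=True)
    archive = os.path.join(out_dir, os.path.basename(MODEL_URL))
    urllib.request.urlretrieve(MODEL_URL, archive)
    return decompress_model(archive, out_dir)
```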

Note: The license for the iBUG 300-W dataset excludes commercial use, so you should contact Imperial College London to find out whether it's OK for you to use this model file in a commercial product.

- [1] Tereza Soukupová and Jan Čech. Real-Time Eye Blink Detection using Facial Landmarks. In 21st Computer Vision Winter Workshop, February 2016.

- [2] Adrian Rosebrock. Detect eyes, nose, lips, and jaw with dlib, OpenCV, and Python.

- [3] Adrian Rosebrock. Eye blink detection with OpenCV, Python, and dlib.

- [4] Vahid Kazemi, Josephine Sullivan. One millisecond face alignment with an ensemble of regression trees. In CVPR, 2014.

- [5] S. Zafeiriou, G. Tzimiropoulos, and M. Pantic. The 300 videos in the wild (300-VW) facial landmark tracking in-the-wild challenge. In ICCV Workshop, 2015.

- [6] C. Sagonas, G. Tzimiropoulos, S. Zafeiriou, M. Pantic. 300 Faces in-the-Wild Challenge: The first facial landmark localization Challenge. Proceedings of IEEE Int'l Conf. on Computer Vision (ICCV-W), 300 Faces in-the-Wild Challenge (300-W). Sydney, Australia, December 2013.

- [7] Adrian Rosebrock. Imutils. https://github.com/jrosebr1/imutils.

- [8] Akshay Chandra Lagandula. Mouse Cursor Control Using Facial Movements. https://towardsdatascience.com/c16b0494a971.