
This is a collection of basic, documented utility shell scripts that help automate part of the process of fuzzing a target executable using AFL++, AFL on steroids. If you're a beginner, there aren't many easy-to-digest resources on how to fuzz with afl/afl++. I hope this can give you a good starting point. Note that the scripts here are pieced together in a somewhat hacky way, and there are a lot of potential improvements (contributions welcome!). Also note that this only covers the most basic functionality of afl++; beyond that, you're on your own. There are some pointers on what can be improved over at the issues tab.

Make sure that you understand how fuzzing (with afl++) works before treading further. The afl++ README can serve as a good starting point.

To use this, you'll have to adapt the CMakeLists.txt provided here and merge it into the one you already have, as well as modify the exclusion pattern inside /scripts/_cov.sh. After you get the building/instrumentation to work, you can start adding stuff in tests/ and inputs/.

A more experienced Linux user will get suspicious of --security-opt seccomp=unconfined. See this, this and the setup.sh script residing in aflplusplus/scripts/. Same goes for afl-system-config.

1. `cd aflplusplus-util/statsd`
2. `docker-compose up -d` (starts Prometheus, `statsd-exporter` and Grafana in the same network)
3. Browse `localhost:3000` (Grafana login page)
4. Log in with username: `admin`, password: `admin`
5. Set up a password for `admin`
6. Go to `Create` -> `Import` -> `Upload JSON file` and select `statsd/grafana-afl++.json`
7. In a new terminal/pane: `sudo docker pull aflplusplus/aflplusplus`
8. `curl -LJO https://raw.githubusercontent.com/AFLplusplus/AFLplusplus/stable/afl-system-config`
9. `chmod +x afl-system-config`
10. `sudo ./afl-system-config` (re-run after system has rebooted between sessions)
11. `sudo docker run -ti --security-opt seccomp=unconfined -v $PWD/aflplusplus-util:/aflplusplus-util aflplusplus/aflplusplus` (run from the parent dir of `aflplusplus-util`, or change the `$PWD/aflplusplus-util` part)
12. `cd ../aflplusplus-util`
13. `. ./scripts/setup.sh`
14. `./scripts/instrument.sh`
15. `./scripts/build-cov.sh`
16. `./scripts/launch-screen.sh`
17. `./scripts/tmin.sh`
18. `./scripts/fuzz.sh`
19. `./scripts/cov.sh`
20. `./scripts/stop-fuzz.sh`
21. `./scripts/cmin.sh`
22. `./scripts/retmin.sh`
23. `./scripts/refuzz.sh`
24. Repeat 19-23 (`cov` - `refuzz`)
25. `./scripts/triage.sh`
26. `./scripts/quit-screen.sh`
27. `docker-compose down` when you're done with StatsD/Prometheus/Grafana

A tldr alias is defined through setup.sh so that you can get a quick overview of the script execution order.

Check your (favorite) package repository. It is very likely that AFL++ is already packaged for your distribution, if you want to go down that road.

As per the AFLplusplus README, using Docker to grab AFL++ is highly recommended. Not only is it easy to get up and running, but, more importantly, it ensures you have recent GCC and Clang versions. It is recommended to use gcc, clang and llvm-dev versions as bleeding-edge as your distro offers. Note that AFL++ comes with many different modes and glitter, so, provided you want to be fancy, using Docker also saves you from going out in the wild and gathering the dependencies needed. Going on a tangent, check this for an example. You can use afl-clang-lto for instrumentation purposes, which we do here. If you click on the link, you'll understand that using afl-clang-lto is vastly better than any other option for a shitton of reasons. Well, it requires llvm 11+.

In the official afl++ repository, using Docker used to be recommended, but this recommendation has since been removed. In fact, they now recommend compiling and building everything you need on your own. I still think that it will be easier for the people working on the nanosatellite codebase to just grab the big image and be done with it. Imagine having to gather all these bleeding-edge dependencies on Ubuntu 😛

That being said, the Dockerfile could see some improvements (mainly to reduce size). Plus, there's stuff in the final image we don't need. I plan on using Docker for a lot of AFL++-related things. So, TL;DR, when I do this, I'll roll my own image fit for our own usecase and it should be noticeably smaller (though probably still not small). The Dockerfile will reside in CI Tool Recipes, meaning that it will be built from a standard, traceable base, with the same base dependencies everywhere, will be published under the spacedot namespace on Docker Hub and be kept up to date (dependency-wise) along with the rest of the toolbase.

sudo pacman -Syu docker && sudo systemctl start docker && sudo systemctl enable docker

For distros such as:

- ubuntu

- debian

- raspbian

- centos

- rhel

- sles

You can use the get-docker.sh shellscript, provided and maintained by Docker:

curl -fsSL https://get.docker.com -o get-docker.sh

sh get-docker.sh

# To verify that the install script works across the supported OSes:

make shellcheck

If you prefer to build the image locally, you can find their Dockerfile here.

You might have to run docker with root privileges. If you want to run it as a non-root user, you usually have to add your user to the docker group. Beware: adding a user to the docker group is equivalent to giving them root access, since they will be able to start containers with root privileges with docker run --privileged. From the Docker entry on the Arch Linux wiki:

If you want to be able to run the docker CLI command as a non-root user, add your user to the docker user group, re-login, and restart docker.service.

Warning: Anyone added to the docker group is root equivalent because they can use the docker run --privileged command to start containers with root privileges. For more information see 3 and 4.
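As a small sketch of the group check described above, the helper below tests whether a group name appears in a space-separated group list such as the output of `id -nG`. The function name `group_listed` is hypothetical and not part of this repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if group $1 appears as a whole word in the
# space-separated list $2 (for example the output of `id -nG`).
group_listed() {
    case " $2 " in
        *" $1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example usage (commented out because it changes system state):
# group_listed docker "$(id -nG)" || sudo usermod -aG docker "$USER"
```

After adding yourself to the group you still need to log in again for the change to take effect, as the quote above notes.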

Note that to set up the StatsD, Prometheus and Grafana infrastructure using the docker-compose.yml configuration provided in statsd/, you need the docker-compose binary. If you didn't use the get-docker.sh script to install Docker, you might have to install the binary separately, using your distribution's package manager (e.g., in arch it's sudo pacman -S docker-compose). Alternatively, you can run docker compose up instead of docker-compose up -d, as seen in the Docker documentation page.

If you get an unknown option --dport iptables error, that might mean you have to install iptables-nft instead of iptables (legacy). Make sure to reboot afterwards.

After getting docker up and running, you can just pull the AFL++ image:

sudo docker pull aflplusplus/aflplusplus

You can then start the AFL++ container. It would be convenient if you could transfer (mount) the project files somewhere on the container, so that you don't have to copy the files over, or git clone again from inside the container. You can do that with docker volumes. For example, if you were in the parent directory of aflplusplus-util (otherwise, change the $PWD/aflplusplus-util part), you would run:

sudo docker run -ti --security-opt seccomp=unconfined -v $PWD/aflplusplus-util:/aflplusplus-util aflplusplus/aflplusplus

and you can access the project structure with cd ../aflplusplus-util. Note that we use $PWD because docker wants you to use an absolute path when passing a host directory.
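To illustrate the absolute-path requirement, here is a minimal sketch of a helper that resolves a project directory to an absolute path and prints the resulting `docker run` command. The function name `afl_docker_cmd` is hypothetical and not part of this repository; it only prints the command so you can inspect it before running it yourself.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of aflplusplus-util): print the docker run
# command for a project directory, resolving it to an absolute path first.
afl_docker_cmd() {
    local dir abs
    dir="${1:-aflplusplus-util}"
    # cd + pwd turns a relative path into an absolute one; fail early if
    # the directory does not exist.
    abs="$(cd "$dir" 2>/dev/null && pwd)" || {
        echo "error: no such directory: $dir" >&2
        return 1
    }
    printf 'sudo docker run -ti --security-opt seccomp=unconfined -v %s:/aflplusplus-util aflplusplus/aflplusplus\n' "$abs"
}
```

Calling `afl_docker_cmd ./aflplusplus-util` from any location prints a command whose `-v` argument starts with `/`, which is what docker expects for a host directory.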

Just for the sake of completeness, the full process to grab the repository and play around with afl would be something like:

git clone https://github.com/xlxs4/aflplusplus-util.git

sudo docker run -ti --security-opt seccomp=unconfined -v $PWD/aflplusplus-util:/aflplusplus-util aflplusplus/aflplusplus

cd ../aflplusplus-util

Fun fact: Since you mounted the volume, any changes you make in aflplusplus-util while inside the container will persist in the host directory even after closing the container. This is bidirectional: you can keep updating the aflplusplus-util directory from outside, and the changes will be immediately reflected inside the container.

Fun fact #2: you can work inside the container, and sign your commits with git commit -S out of the box!

If you want to be able to work with the aflplusplus-util repository with git from inside the running aflplusplus mounted container, first run git config --global --add safe.directory /aflplusplus-util (as prompted).

If you want to build what you need yourself, you have to gather any dependencies you might want (e.g. flex, bison, llvm) beforehand. After you have everything at your disposal, you can follow the standard building routine:

git clone https://github.com/AFLplusplus/AFLplusplus

cd AFLplusplus

make distrib

sudo make install

Note that the distrib build target will get you AFL++ with all batteries included. For other build targets and build options you can refer to the README.

Before going on, spend some time to read on what can go wrong.


Fuzzing, or fuzz testing, is a testing approach where the input is generated by the tester and passed into the program, with the aim of triggering a crash, a hang, a memory leak, and so on. The key strength of a good fuzzer is its ability to adapt the generated input, based on how the codebase being fuzzed responds to all previous input. The input passed must be valid input, i.e. accepted by the parser. Fuzzing does not only serve as a tool to explore potentially faulty/insecure areas of the codebase, but also ends up providing the user with a good testing corpus on which to base other, more traditional, testing approaches like unit testing.

Fuzzing happens in cycles. Repeat after me: cycles are the bread and butter of fuzzing. The user need only provide the initial testing corpus; then, the machine takes over. For some quick visual understanding of the fuzzing process (implemented here) at a high level, you can refer to the flowgraph below (TODO).

The fuzzer used is AFL++, a community-led supercharged, batteries-included version of afl.

First, the user must specify the initial input. This is an important step, since you want the initial input to be both as concise and as complete as possible. By the input being complete, I mean including a variety of inputs, each of which propagates to a different part of the codebase and, ideally, evokes a different response; the goal is to cover the whole codebase. By the input being concise, I mean that the existence of every single input entry must be justified. Each must be different from the rest, bringing something different and important (i.e. one or more different execution branches) to the table. The initial input can be found inside the inputs/ directory.

Since fuzzing is based on cycling, it is an iterative process at heart. This constant iteration allows for the input to also improve, based on the fuzzing results. There are two main utilities that come into play here, cmin and tmin. afl-cmin is used to minimize the input corpus while maintaining the same fuzzing results (i.e. making the input more concise). This script essentially goes over each identical result and selects the shortest input that led to this result. afl-tmin does something different: It doesn't select the shortest entries; instead, it shortens the entries you feed to it. The gist is the following: For each entry, it gradually removes data while ensuring that the initially observed behavior remains the same. cmin and tmin can be, and should be, run more than once. The process thus constitutes a neverending back and forth of fuzzing, collecting the observed execution behavior, minimizing the input cases generated by the fuzzer(s) with cmin, then trimming them further with tmin, then passing the shortened and improved input as initial input and starting the fuzzing process anew.
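The minimization half of that loop can be sketched as follows. This is an illustration under stated assumptions, not the code of the repository's scripts: the directory arguments and `./target` are placeholders, `@@` assumes the target takes the input file path as an argument, and the `DRY_RUN` switch is a knob added here so the commands can be printed instead of executed.

```shell
#!/usr/bin/env bash
# Sketch of one corpus-minimization pass. With DRY_RUN=1 (the default in
# this sketch) every command is printed instead of executed.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

minimize_corpus() {
    local in_dir="$1" cmin_dir="$2" tmin_dir="$3" target="$4" f
    # Step 1: afl-cmin selects a smaller set of files with the same coverage.
    run afl-cmin -i "$in_dir" -o "$cmin_dir" -- "$target" @@
    run mkdir -p "$tmin_dir"
    # Step 2: afl-tmin shrinks each selected file individually.
    for f in "$cmin_dir"/*; do
        [ -f "$f" ] || continue
        run afl-tmin -i "$f" -o "$tmin_dir/$(basename "$f")" -- "$target" @@
    done
}
```

In a dry run the second step only lists files that already exist in the `afl-cmin` output directory, since `afl-cmin` itself is not executed.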

For more info, go over the docs/ in AFL++'s repository, read the rest of the README, and the documentation in each script in the aflplusplus folder.

For even more info, I suggest first going over the original AFL whitepaper, found here, and then the AFL++ publication, found here. 1

Assuming you can use afl-clang-lto and the like, and that you are inside aflplusplus/, you can simply:

1. `. ./scripts/setup.sh`

   Note that this script was made to be run inside the `aflplusplus/aflplusplus` image Docker container, as demonstrated above. For other distros and/or environments, you might have to inspect the script and tailor all steps to your own box accordingly. It can still serve as a good guideline on what you need to set up.

   After executing this script it would be a good idea to either `source ~/.bashrc` or restart your terminal, so that the env changes caused by the script execution are reflected in your terminal session. Even better, you can just `source ./scripts/setup.sh` or, for a shortcut, `. ./scripts/setup.sh`. This ensures every env change is automatically passed to your terminal session.

   This makes sure you can run `screen`, `rsync`, `gdb` and `go`. `screen` is used to start detached sessions to run time-consuming commands that should not be aborted midway. `rsync` is used to copy files instead of `cp` to allow for overwrites. `gdb` is used to take advantage of the `exploitable` GDB plugin. `go` is needed to use `crashwalk`. `python2` is needed to use afl-cov.

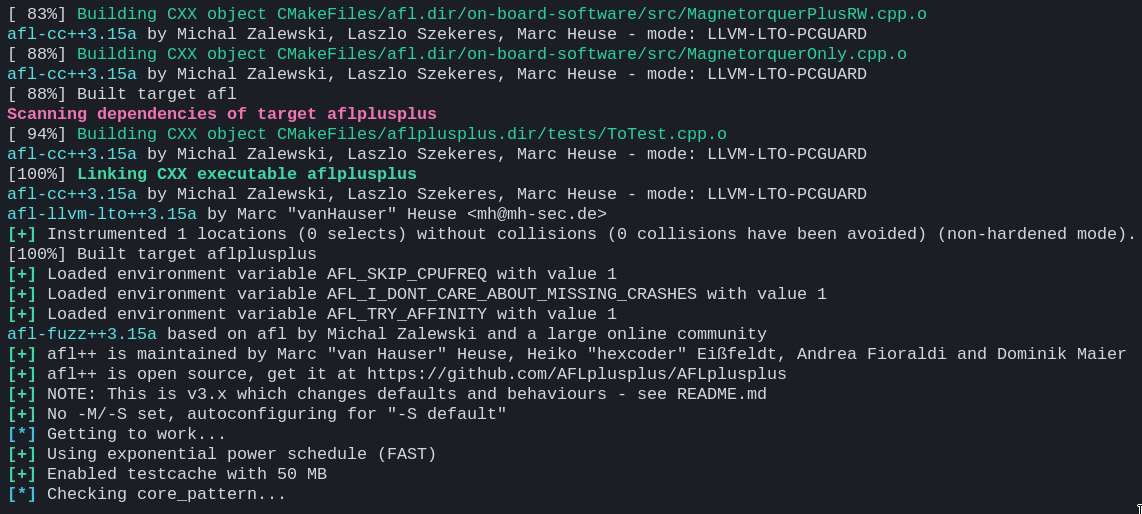

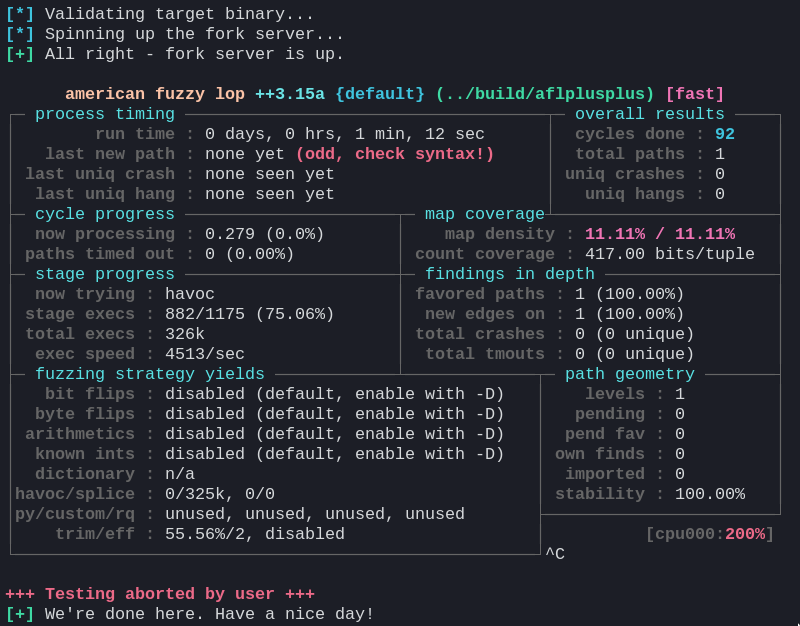

2. `./scripts/instrument.sh`

   The script sets various environment variables to configure AFL++, for example mode, instrumentation strategy, sanitizer (optional). Then, it instruments the code and builds the instrumented executable. You can edit it to directly affect how AFL++ is configured.

3. `./scripts/build-cov.sh`

   This creates a spare copy of the project sources, and compiles the copy with gcov profiling support, as per the GitHub README.

4. `./scripts/launch-screen.sh`

   This starts `screen` sessions in detached mode, meaning it starts the sessions without attaching to them. `screen` is key for this pipeline to work. Using `screen`, we can spawn the `afl-fuzz` fuzzing instances inside each session, have them run there without throttling/blocking the terminal, be sure that there won't be any premature termination of the fuzzing due to common accidents, be able to hop back and forth between the fuzzer instances to inspect them as we like, etc. We also use it to run `afl-cmin`. We can use it to run `afl-tmin` in the background where it spawns many processes to speed up the testcase minimization. `screen` is awesome. At any point in time, you can run `screen -ls` to list all running sessions, if any. You can use this to manually verify that the sessions have started/stopped. Use `screen -xr fuzzer1` to attach to `fuzzer1` (or `screen -xr fuzzer2` for `fuzzer2`), and do the same for the other sessions, respectively. To detach from a session, press `CTRL+A`, then `D`. If a session was not detached and you want to re-attach to it, use `screen -dr` followed by the session name.

5. `./scripts/tmin.sh`

   This uses `afl-tmin` to minimize each of the initial testcases to the bare minimum required to express the same code paths as the original testcase. It runs `afl-tmin` in parallel, by spawning different processes. It determines how many by probing the available CPU cores with `nproc`. Feel free to change this as you see fit. This is run in the `tmin` `screen` session.

   NOTE: `afl-tmin` and `afl-cmin` run in a detached screen session. There are no scripts to stop these sessions like `stop-fuzz.sh`, because, unlike `afl-fuzz`, both `afl-tmin` and `afl-cmin` terminate on their own, and do not need to be aborted by the user. Make sure that the respective session command has terminated before running the next script. The scripts must be run in the order specified here. If not, you will break things.

6. `./scripts/fuzz.sh`

   This uses `screen` to tell both `screen` sessions to start fuzzing with `afl-fuzz`. Specifically, it tells the session named `fuzzer1` to spawn a `Master` fuzzer instance which uses deterministic fuzzing strategies, and the session `fuzzer2` to spawn a `Slave` fuzzer instance which uses stochastic fuzzing strategies. These instances directly cooperate. The directory `inputs/` is read for the initial testcases, and `afl-fuzz` outputs to `findings/`.

7. `./scripts/cov.sh`

   This uses `screen` to run `afl-cov`. `afl-cov` is a helper script to integrate `afl-fuzz` with `gcov` and `lcov` to produce coverage results depending on the fuzzing process. After an initial sanity check, `afl-cov` patiently waits until it detects that the fuzzer instances have stopped; it then processes the data in each `queue/` dir. `afl-cov` looks at the sync dir specified in the `afl-fuzz` script, namely `findings/`. While there is no script to stop this session, there's no reason to; and you can continue on with the next fuzzing cycle without having to wait for `afl-cov` to finish what it's doing. You will need to re-start it before the next fuzzing iteration stops, though. To examine the final coverage report, wait for `afl-cov` to finish, then simply point your browser to `aflplusplus-util/findings/cov/web/index.html`. A weaker alternative for quick coverage would be to use `afl-showmap`.

8. `./scripts/stop-fuzz.sh`

   This sends a `CTRL+C` to both the `fuzzer1` and `fuzzer2` running `screen` sessions. This gracefully terminates the `afl-fuzz` instances. It is required to stop the instances after a while, to minimize the testing corpus with `afl-cmin`. You should let the fuzzer instances run for quite a while before stopping (and minimizing the corpus). It is highly advisable that you let them complete at least 1 cycle prior to terminating.

9. `./scripts/cmin.sh`

   This gathers the `afl-fuzz` output of both `fuzzer1` and `fuzzer2`, uses `afl-cmin` to generate a minimized corpus, and passes the minimized corpus to both fuzzers. Note that `afl-cmin` finds the testcases that most efficiently express unique paths according to previous runs and is thus different from `afl-tmin`. `rsync` is used here instead of `cp`, because `cp` doesn't want to overwrite the files, and it's very likely that some findings of `fuzzer1` will also have been discovered by `fuzzer2`. This is run in the `cmin` `screen` session.

10. `./scripts/retmin.sh`

    This works like `tmin.sh`. The difference is that we now `afl-tmin` each testcase in the corpus that has been produced by the fuzzer instances and minimized with `afl-cmin`. This is run in the `tmin` `screen` session.

11. `./scripts/refuzz.sh`

    Similar to `fuzz.sh`, this re-runs `afl-fuzz`. There are two important differences. First, there's no need to configure AFL++, instrument, etc. Second, the parameter `-i inputs` from `fuzz.sh` has now been changed to `-i-`. This is necessary, since it tells the fuzzer instances to use the minimized corpus instead of looking at the `inputs/` initial testcases directory.

12. Repeat 7-11 (`cov` - `refuzz`) as you see fit. Note that fuzzing is an iterative process, and you will definitely want to repeat this process (7-11) at least once. Also, note that you should let all `afl-fuzz` instances complete at least one full cycle before killing them with `stop-fuzz.sh`.

13. `./scripts/triage.sh`

    This uses `cwtriage` to give you a database containing results from triaging the fuzzer-found crashes, and `cwdump` to summarize said results. Both `cwtriage` and `cwdump` are run in the `crashwalk` `screen` session.

14. `./scripts/quit-screen.sh`

    This gracefully kills all `screen` sessions.
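The way the steps above drive `afl-fuzz` through detached `screen` sessions can be sketched roughly as follows. This is not the content of the repository's scripts: `./target` and `@@` are placeholders, the helper names are hypothetical, and the `SCREEN` override is a knob added here so that the `screen` invocations can be inspected without starting anything.

```shell
#!/usr/bin/env bash
# Sketch only. SCREEN can be overridden to substitute another command for
# screen (an assumption of this sketch, not a repository feature).
SCREEN="${SCREEN:-screen}"

# Start a detached session with the given name.
start_session() { "$SCREEN" -dmS "$1"; }

# Type a command line into a session, followed by Enter.
send_line() { "$SCREEN" -S "$1" -X stuff "$2"$'\n'; }

# Send CTRL+C (ASCII 0x03) to whatever is running in the session.
send_ctrl_c() { "$SCREEN" -S "$1" -X stuff $'\003'; }

# Launch one main (-M) and one secondary (-S) afl-fuzz instance that share
# the findings/ sync directory.
launch_fuzzers() {
    local target="${1:-./target}"
    start_session fuzzer1
    start_session fuzzer2
    send_line fuzzer1 "afl-fuzz -i inputs -o findings -M fuzzer1 -- $target @@"
    send_line fuzzer2 "afl-fuzz -i inputs -o findings -S fuzzer2 -- $target @@"
}

# Stop both instances the same way a user pressing CTRL+C would.
stop_fuzzers() {
    send_ctrl_c fuzzer1
    send_ctrl_c fuzzer2
}
```

Sending keystrokes with `-X stuff` keeps the fuzzer in the foreground of its session, so attaching with `screen -xr fuzzer1` shows the usual `afl-fuzz` status screen.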

All scripts were statically analyzed with and guided by shellcheck. For more info on a script, go through the actual file; there is further documentation in the form of comments.
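For the parallel testcase minimization that `tmin.sh` is described as doing, one possible shape is sketched below. It assumes `xargs -P` as the parallelization mechanism, which may differ from what the actual script does; `./target`, `@@` and the `TMIN` override (used to substitute another command for `afl-tmin`) are likewise assumptions of this sketch.

```shell
#!/usr/bin/env bash
# Sketch only: run one afl-tmin process per testcase, with as many
# processes in flight as there are CPU cores.
parallel_tmin() {
    local in_dir="$1" out_dir="$2" target="$3" jobs
    # One worker per available core; fall back to 1 if nproc is missing.
    jobs="$(nproc 2>/dev/null || echo 1)"
    mkdir -p "$out_dir"
    # Each testcase becomes one invocation; xargs -P caps the concurrency.
    find "$in_dir" -maxdepth 1 -type f -print0 |
        xargs -0 -P "$jobs" -I{} sh -c \
            '"$1" -i "$2" -o "$3/$(basename "$2")" -- "$4" @@' \
            _ "${TMIN:-afl-tmin}" {} "$out_dir" "$target"
}
```

Something like `parallel_tmin inputs inputs-min ./target` would write the minimized testcases into a separate directory, leaving the originals untouched.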

Footnotes

1. Fioraldi, A., Maier, D., Eißfeldt, H., & Heuse, M. (2020). AFL++: Combining Incremental Steps of Fuzzing Research. In 14th USENIX Workshop on Offensive Technologies (WOOT 20).