Ozeu is a toolkit for automatically labeling datasets for instance segmentation. It generates datasets labeled with segmentation masks and bounding boxes from recorded video files.

- ffmpeg

- torch

- mmcv-full

pip install torch==1.8.1+cu111 torchvision==0.9.1+cu111 torchaudio==0.8.1 -f https://download.pytorch.org/whl/torch_stable.html

pip install mmcv-full==1.3.5 -f https://download.openmmlab.com/mmcv/dist/cu102/torch1.8.0/index.html

pip install git+https://github.com/open-mmlab/mmdetection.git@v2.13.0

git clone git@github.com:xiong-jie-y/ozeu.git

cd ozeu

pip install -e .
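Before recording, it can help to confirm that the prerequisites above are visible from Python. The following is a minimal sketch, not part of Ozeu; it assumes mmcv-full and mmdetection are importable under their usual module names `mmcv` and `mmdet`, and the function name `check_prerequisites` is hypothetical.

```python
"""Quick sanity check of the prerequisites (a sketch, not part of Ozeu)."""
import importlib.util
import shutil


def check_prerequisites() -> dict:
    """Return a mapping of prerequisite name -> True if it appears to be installed."""
    # ffmpeg is an external binary, so look for it on PATH.
    status = {"ffmpeg": shutil.which("ffmpeg") is not None}
    # Assumption: mmcv-full and mmdetection import as `mmcv` and `mmdet`.
    for module in ("torch", "mmcv", "mmdet"):
        status[module] = importlib.util.find_spec(module) is not None
    return status


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name:8s} {'ok' if ok else 'MISSING'}")
    # If torch is present, also report whether it can see a CUDA device.
    if importlib.util.find_spec("torch") is not None:
        import torch

        print("torch", torch.__version__, "CUDA available:", torch.cuda.is_available())
```

Using `find_spec` checks that a module can be found without importing it, so a missing package is reported instead of raising an `ImportError`.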

I recommend recording the videos with the camera on which you want to run the detector. For a webcam, you can use a command like this:

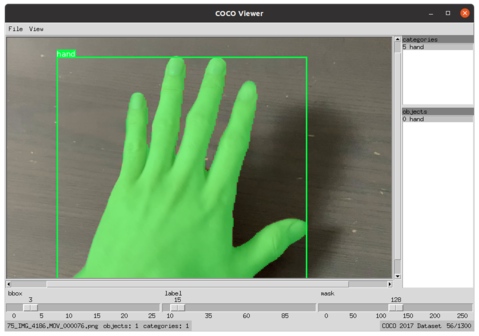

ffmpeg -f v4l2 -framerate 60 -video_size 1280x720 -i /dev/video0 output_file.mkvI recommend to place the object to record in a desk or somewhere on simple texture. That will reduce error rate. You can hold the object by your hand, because the dataset generator can recognize and remove hand like this.
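If you record one clip per object, the webcam command can be wrapped in Python. This sketch only rebuilds the ffmpeg arguments shown above; the helper names `build_record_command` and `record` are hypothetical, and the optional `-t` duration limit is an addition that the original command does not use.

```python
import subprocess
from typing import List, Optional


def build_record_command(
    output_path: str,
    device: str = "/dev/video0",
    framerate: int = 60,
    video_size: str = "1280x720",
    duration_sec: Optional[float] = None,
) -> List[str]:
    """Build the ffmpeg argv for recording a V4L2 webcam, mirroring the command above."""
    cmd = [
        "ffmpeg",
        "-f", "v4l2",                  # capture from a Video4Linux2 device
        "-framerate", str(framerate),  # requested capture frame rate
        "-video_size", video_size,     # requested capture resolution
        "-i", device,
    ]
    if duration_sec is not None:
        # Addition (not in the original command): -t after -i limits output duration.
        cmd += ["-t", str(duration_sec)]
    cmd.append(output_path)
    return cmd


def record(output_path: str, **kwargs) -> None:
    """Run ffmpeg; raises subprocess.CalledProcessError if recording fails."""
    subprocess.run(build_record_command(output_path, **kwargs), check=True)
```

For example, `record("ipad.mkv", duration_sec=30)` would record a 30-second clip from `/dev/video0`; `ipad.mkv` is a placeholder file name. Passing an argument list instead of a shell string avoids quoting problems with paths that contain spaces.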

Write the dataset definition file in YAML.

Define the class names and ids under categories, and associate each video path with a class id under datasets. The objects in each video are labeled with the class id associated with that video. video_path is relative to the dataset definition file, and any video format supported by ffmpeg can be used.
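The schema described above can be checked before running the dataset generator. The following minimal sketch is not Ozeu's own loader: it assumes the YAML file has already been parsed into a dict (for example with PyYAML's `yaml.safe_load`), and `resolve_dataset_definition` is a hypothetical helper that maps every video to its category name and resolves `video_path` relative to the definition file.

```python
from pathlib import Path
from typing import Dict, List, Tuple


def resolve_dataset_definition(
    definition: dict, definition_file: Path
) -> List[Tuple[str, Path]]:
    """Return (category name, absolute video path) pairs for a parsed definition.

    `definition` is the dict obtained by parsing the YAML file (assumption:
    parsed elsewhere, e.g. with yaml.safe_load); `definition_file` is its path.
    """
    names: Dict[int, str] = {}
    for category in definition["categories"]:
        if category["id"] in names:
            raise ValueError(f"duplicate category id {category['id']}")
        names[category["id"]] = category["name"]

    base_dir = Path(definition_file).resolve().parent
    resolved = []
    for entry in definition["datasets"]:
        category_id = entry["category_id"]
        if category_id not in names:
            raise ValueError(f"unknown category_id {category_id} for {entry['video_path']}")
        # video_path is relative to the directory containing the definition file.
        resolved.append((names[category_id], base_dir / entry["video_path"]))
    return resolved
```

Checking ids up front surfaces typos in `category_id` before any video is processed. An example definition file looks like this: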

categories:
  - id: 1
    name: alcohol sheet
  - id: 2
    name: ipad
datasets:
  - category_id: 2
    video_path: IMG_4194_2.MOV
  - category_id: 2
    video_path: IMG_4195_2.MOV

You can generate a labeled COCO dataset from the dataset definition file above. If you did not hold the object in your hand while recording, you can omit the --remove-hand option.

python scripts/create_coco_dataset_from_videos.py --dataset-definition-file ${DATASET_DEFINITION_FILE} --model-name u2net --output-path ${OUTPUT_DATASET_FOLDER} --resize-factor 2 --fps 15 --remove-hand

Please place background images in backgrounds_for_augmentation. The background augmentation script uses these images to replace the backgrounds in the dataset.
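This README does not describe how the augmentation script composites images, so the following is only a sketch of one common approach, assumed rather than taken from Ozeu: keep the pixels inside the object mask and take every other pixel from a background image that has already been resized to the same size. `replace_background` is a hypothetical name.

```python
import numpy as np


def replace_background(image: np.ndarray, mask: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Composite the masked object from `image` onto `background` (illustrative sketch).

    image, background: H x W x 3 uint8 arrays of the same shape.
    mask: H x W array, nonzero where the object is.
    """
    if image.shape != background.shape:
        raise ValueError("background must already be resized to the image shape")
    if mask.shape != image.shape[:2]:
        raise ValueError("mask must have shape H x W")
    # H x W x 1 boolean array; broadcasts over the color channels.
    keep = (mask != 0)[..., np.newaxis]
    # Object pixels come from `image`, all other pixels from `background`.
    return np.where(keep, image, background)
```

Because the object pixels do not move, the existing mask and bounding box annotations remain valid for the composited image, which is what makes this kind of augmentation cheap.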

Here we use the PASCAL VOC 2012 images as background images:

wget https://pjreddie.com/media/files/VOCtrainval_11-May-2012.tar

tar xf VOCtrainval_11-May-2012.tar

mkdir backgrounds_for_augmentation

mv VOCdevkit/VOC2012/JPEGImages/* backgrounds_for_augmentation/

After preparing the background images, generate the background-augmented dataset by running:

python scripts/generate_background_augmented_dataset.py --input-dataset-path ${DATASET_FOLDER} --destination-root ${AUGMENTED_DATASET_FOLDER} --augmentation-mode different_background

You can then merge the background-augmented dataset with the original dataset:

python scripts/merge_coco_datasets.py --input-dirs ${AUGMENTED_DATASET_FOLDER} --input-dirs ${DATASET_FOLDER} --destination-root ${MERGED_DATASET}

The annotation tool CVAT can import datasets in COCO format, so you can import the dataset into a CVAT project and fix any labeling errors there.
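Before importing the merged dataset into CVAT, a quick per-category count can reveal classes that ended up with few or no annotations. This sketch relies only on the standard COCO keys `categories` and `annotations`; where the annotation JSON lives inside ${MERGED_DATASET} is not specified here, so the path in the usage note is a placeholder, and the helper names are hypothetical.

```python
import json
from collections import Counter
from typing import Dict


def count_annotations_per_category(coco: dict) -> Dict[str, int]:
    """Map each category name to its number of annotations in a COCO-format dict."""
    id_to_name = {c["id"]: c["name"] for c in coco["categories"]}
    counts = Counter(a["category_id"] for a in coco["annotations"])
    # Include categories with zero annotations so empty classes stand out.
    return {name: counts.get(cid, 0) for cid, name in id_to_name.items()}


def load_coco(path: str) -> dict:
    """Load a COCO annotation JSON file."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

For example, `count_annotations_per_category(load_coco("path/to/annotations.json"))` returns a dict mapping each category name to its annotation count; a count of zero points at a video that produced no usable labels.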

TRAIN!!!

- I wish to thank my wife, Remilia Scarlet.

- This toolkit uses U^2-Net for salient object detection. Thank you for the nice model!