This repository provides scripts and a recipe for training the Tacotron 2 and WaveRnn models to achieve state-of-the-art accuracy.

This text-to-speech (TTS) system is a combination of two neural network models:

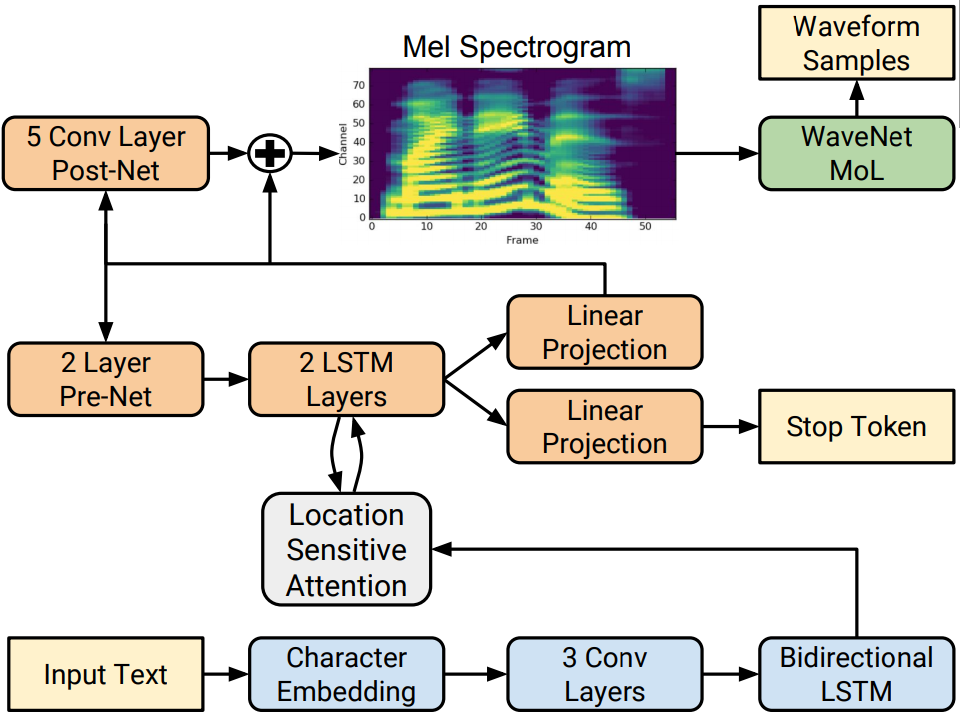

- a modified Tacotron 2 model from the Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions paper

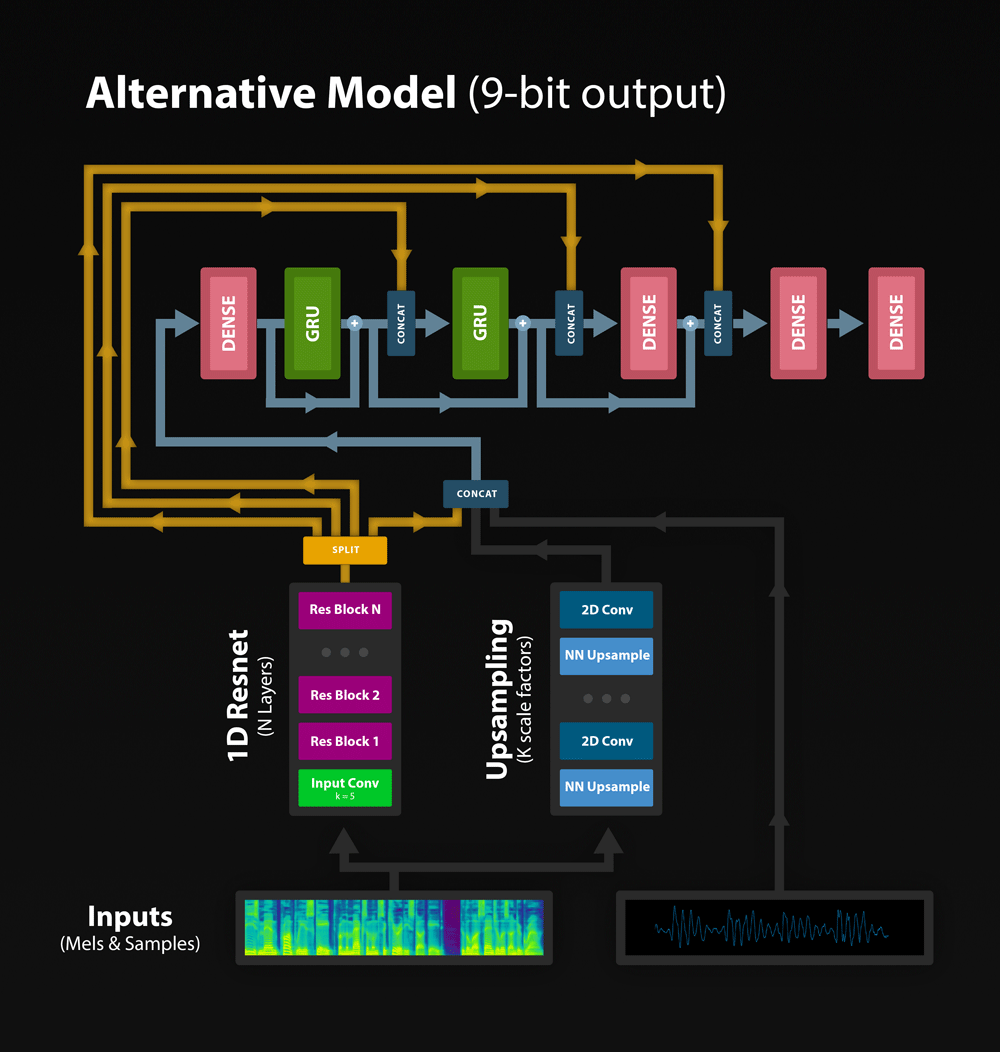

- a modified WaveRnn model from the Efficient Neural Audio Synthesis paper

The Tacotron 2 and WaveRnn models form a text-to-speech system that enables users to synthesize natural sounding speech from raw transcripts without any additional information such as patterns and/or rhythms of speech.

Figure 1. Architecture of the Tacotron 2 model. Taken from the Tacotron 2 paper.

Figure 2. Architecture of the WaveRnn model.

The following section lists the requirements for training the Tacotron 2 and WaveRnn models.

Under your python3 env, install the dependencies.

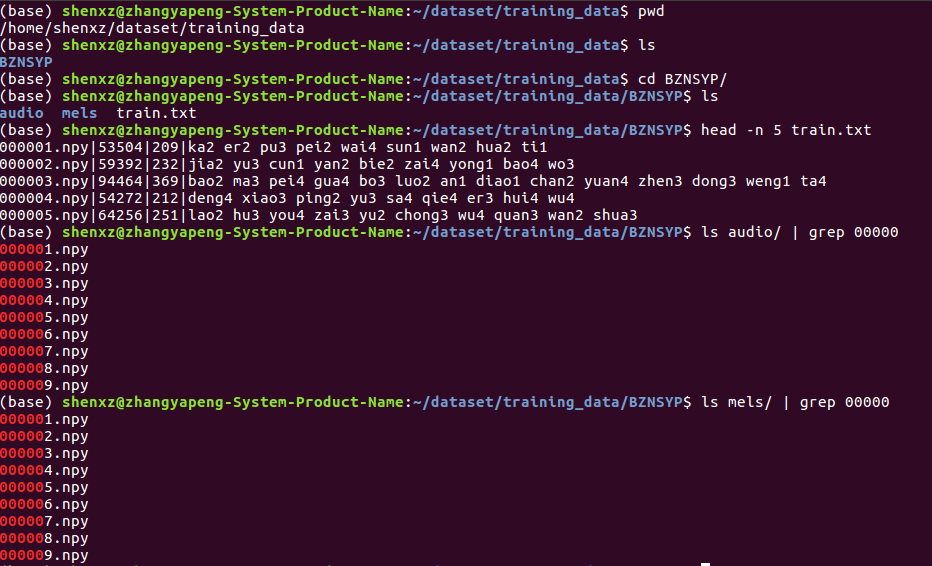

pip install -r requirement.txtDownload and preprocess the dataset, and then its structure should be as Fig. 3.

Figure 3. Structure of the dataset.

Train Tacotron 2 (set `--dataset-path` to the location of your preprocessed dataset):

`python train_tacotron2.py -o logs --init-lr 1e-3 --final-lr 1e-5 --epochs 300 -bs 32 --weight-decay 1e-6 --log-file nvlog.json --dataset-path /home/shenxz/dataset/training_data --training-anchor-dirs BZNSYP`

(1) When Tacotron 2 has finished training, generate the GTA (ground-truth-aligned) files, which are part of the input for WaveRnn:

`python gta.py --checkpoint logs/checkpoint_0200.pt`

(2) Put the GTA output into the `gta` folder below, and copy the files from the audio directory mentioned earlier into the `quant` folder:

- `./data`
  - `voc_mol`
  - `gta`
  - `quant`

(3) Train WaveRnn:

`python train_wavernn.py --gta`

After you have trained the Tacotron 2 and WaveRnn models, you can perform inference using the respective checkpoints, which are passed as the `--tacotron2` and `--WaveRnn` arguments.
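The training steps can be chained in a small Python driver. This is a minimal sketch, not part of the repository, under these assumptions: the commands are copied from this README and run with `sys.executable` (the Python 3 environment holding the dependencies); `prepare_wavernn_data` is a hypothetical helper for step (2); and the GTA output folder and audio folder passed to it are placeholders, because the README does not name them. By default it only prints the commands (dry run).

```python
"""Hypothetical driver chaining the Tacotron 2 -> GTA -> WaveRnn training steps.

Not part of the repository: commands are copied from the README; the GTA output
folder and the audio folder are placeholders you must adapt.
"""
import shutil
import subprocess
import sys
from pathlib import Path
from typing import List

# Commands copied from the README (adjust --dataset-path to your machine).
TACOTRON2_CMD = [
    sys.executable, "train_tacotron2.py", "-o", "logs",
    "--init-lr", "1e-3", "--final-lr", "1e-5", "--epochs", "300",
    "-bs", "32", "--weight-decay", "1e-6", "--log-file", "nvlog.json",
    "--dataset-path", "/home/shenxz/dataset/training_data",
    "--training-anchor-dirs", "BZNSYP",
]
GTA_CMD = [sys.executable, "gta.py", "--checkpoint", "logs/checkpoint_0200.pt"]
WAVERNN_CMD = [sys.executable, "train_wavernn.py", "--gta"]


def prepare_wavernn_data(gta_src: Path, audio_src: Path, data_root: Path) -> None:
    """Step (2): copy GTA files into data_root/gta and audio files into data_root/quant."""
    # voc_mol appears in the README layout, but its contents are not described;
    # it is only created empty here (assumption).
    (data_root / "voc_mol").mkdir(parents=True, exist_ok=True)
    for src, dst_name in ((gta_src, "gta"), (audio_src, "quant")):
        dst = data_root / dst_name
        dst.mkdir(parents=True, exist_ok=True)
        for item in src.iterdir():
            if item.is_file():  # copy files only; subfolders are skipped
                shutil.copy2(item, dst / item.name)


def run(cmd: List[str], dry_run: bool) -> None:
    """Print the command and, unless dry_run is set, execute it (raising on failure)."""
    print(" ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


def main(gta_src: Path, audio_src: Path, dry_run: bool = True) -> None:
    run(TACOTRON2_CMD, dry_run)   # train Tacotron 2
    run(GTA_CMD, dry_run)         # (1) generate GTA files
    if not dry_run:               # (2) arrange the WaveRnn inputs under ./data
        prepare_wavernn_data(gta_src, audio_src, Path("data"))
    run(WAVERNN_CMD, dry_run)     # (3) train WaveRnn


if __name__ == "__main__":
    # Placeholder folders: replace with where gta.py writes its output and
    # where your preprocessed audio lives.
    main(Path("gta_output"), Path("audio"), dry_run=True)
```

Once the placeholder folders point at real data, call `main(..., dry_run=False)`. Keeping each command as an argument list rather than a single shell string avoids quoting problems with paths that contain spaces.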

To run inference, issue:

`python inference.py --tacotron2 <Tacotron2_checkpoint> --WaveRnn <WaveRnn_checkpoint> -o output/ -i phrases/phrase.txt`

The speech is generated from the lines of text in the file passed with the `-i` argument; the number of lines determines the inference batch size. The output audio is stored in the path specified by the `-o` argument.

You can also run the system as a server with the default parameters and enter text through a GUI:

`python manage.py`

`python start_gui.py`
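Because each line of the `-i` file is one utterance and the line count sets the batch size, it can help to generate that file programmatically. A minimal sketch under these assumptions: `write_phrases` and `inference_cmd` are hypothetical helpers, not part of the repository; blank lines are dropped because the README does not say how `inference.py` treats them; and the checkpoint paths you pass in are placeholders.

```python
"""Hypothetical helpers for preparing the -i input file of inference.py."""
import sys
from pathlib import Path
from typing import List, Sequence


def write_phrases(path: Path, sentences: Sequence[str]) -> int:
    """Write one non-empty sentence per line (UTF-8) and return the batch size."""
    lines = [s.strip() for s in sentences if s.strip()]  # drop blank entries
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return len(lines)  # per the README, batch size = number of lines


def inference_cmd(tacotron2_ckpt: str, wavernn_ckpt: str,
                  phrases: Path, out_dir: str = "output/") -> List[str]:
    """Build the inference command shown in this README as an argument list."""
    return [sys.executable, "inference.py",
            "--tacotron2", tacotron2_ckpt,
            "--WaveRnn", wavernn_ckpt,
            "-o", out_dir,
            "-i", str(phrases)]
```

For example, `write_phrases(Path("phrases/phrase.txt"), sentences)` followed by `subprocess.run(inference_cmd(taco_ckpt, wavernn_ckpt, Path("phrases/phrase.txt")), check=True)` would synthesize one audio file per sentence into `output/`, assuming `inference.py` behaves as described above.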