This repository holds the codebase, dataset and models for the paper:

Multimodal Fusion via Teacher-Student Network for Indoor Action Recognition, Bruce X.B. Yu, Yan Liu, Keith C.C. Chan, AAAI 2021 (PDF)

The presentation is available on SlidesLive.

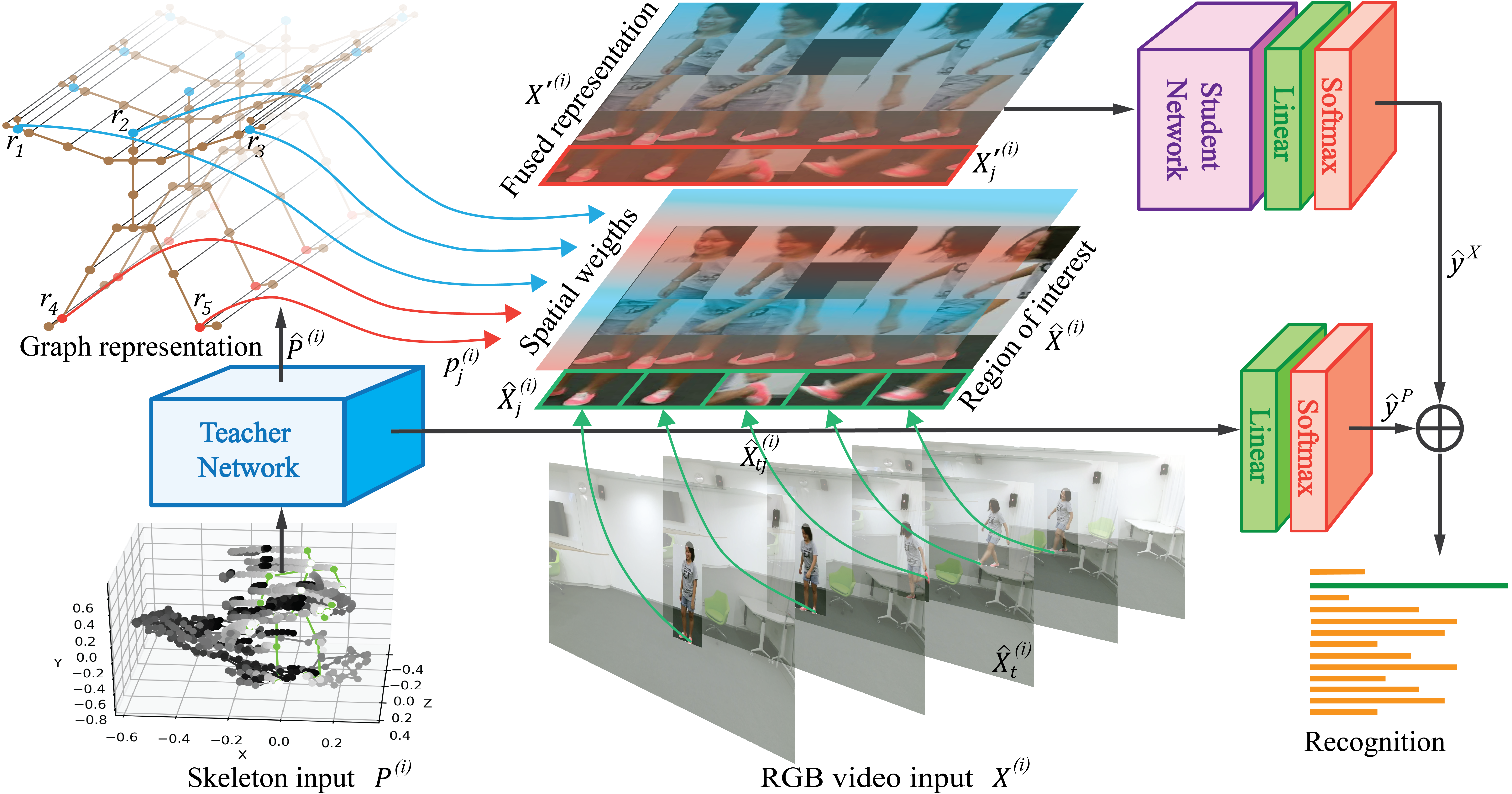

Indoor action recognition plays an important role in modern society, for example in intelligent healthcare in large mobile cabin hospitals. With the wide use of depth sensors such as Kinect, multimodal information, including the skeleton and RGB modalities, offers a promising way to improve performance. However, existing methods either focus on a single data modality or fail to take advantage of multiple data modalities. In this paper, we propose a Teacher-Student Multimodal Fusion (TSMF) model that fuses the skeleton and RGB modalities at the model level for indoor action recognition. In TSMF, a teacher network transfers the structural knowledge of the skeleton modality to a student network for the RGB modality. Extensive experiments on two benchmark datasets, NTU RGB+D and PKU-MMD, show that the proposed TSMF consistently performs better than state-of-the-art single-modal and multimodal methods. The results also indicate that TSMF not only improves the accuracy of the student network but also significantly improves the ensemble accuracy.

- Python3 (>3.5)

- PyTorch

- OpenPose with Python API (optional; for evaluation purposes)

- Other Python libraries can be installed by

pip install -r requirements.txt

cd torchlight; python setup.py install; cd ..

Download the pretrained models from GoogleDrive and manually put them into ./trained_models.

NTU RGB+D can be downloaded from their website. The 3D skeletons (5.8GB) modality and the RGB modality are required for our experiments. After downloading, build the database for training or evaluation with this command:

python tools/ntu_gendata.py --data_path <path to nturgbd+d_skeletons>

where <path to nturgbd+d_skeletons> points to the 3D skeletons modality of the NTU RGB+D dataset you downloaded.

For evaluation, the processed data (val_data and val_label) is available from GoogleDrive. Please manually put it in the folder ./data/NTU_RGBD.

The dataset can be found at PKU-MMD. PKU-MMD is a large action recognition dataset that contains 1076 long video sequences in 51 action categories, performed by 66 subjects in three camera views. It contains almost 20,000 action instances and 5.4 million frames in total. We convert the 3D skeleton modality into separate action repetition files with the command:

python tools/utils/skeleton_to_ntu_format.py

After that, this command should be used to build the database for training or evaluation:

python tools/pku_gendata.py --data_path <path to pku_mmd_skeletons>

where <path to pku_mmd_skeletons> points to the 3D skeletons modality of the PKU-MMD dataset you processed with the above command.

For evaluation, the processed data (val_data and val_label) is available from GoogleDrive. Please manually put it in the folder ./data/PKU_MMD.
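Before running evaluation, it can help to confirm that the downloaded files landed in the expected folders. The following is a minimal sketch and not part of this repository: the helper name `missing_eval_files` is hypothetical, and because the file extensions are not stated here, it accepts any file whose name starts with val_data or val_label.

```python
from pathlib import Path

# Folders named in this README; the prefixes are the file names mentioned above.
EVAL_DIRS = ("./data/NTU_RGBD", "./data/PKU_MMD")
REQUIRED_PREFIXES = ("val_data", "val_label")


def missing_eval_files(folder, prefixes=REQUIRED_PREFIXES):
    """Return the prefixes for which no file exists in `folder`.

    Hypothetical helper: extensions are unknown, so any file whose name
    starts with the prefix counts as present.
    """
    root = Path(folder)
    if not root.is_dir():
        return list(prefixes)
    names = [p.name for p in root.iterdir() if p.is_file()]
    return [pre for pre in prefixes if not any(n.startswith(pre) for n in names)]


if __name__ == "__main__":
    for d in EVAL_DIRS:
        missing = missing_eval_files(d)
        status = "ok" if not missing else "missing: " + ", ".join(missing)
        print(f"{d}: {status}")
```

Run it from the repository root; a folder that does not exist yet is reported as missing both files.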

After installing OpenPose, run

su

sh tools/2D_Retrieve_<dataset>.sh

where <dataset> must be ntu_rgbd or pku_mmd, depending on the dataset you want to use. We also provide the retrieved OpenPose 2D skeleton data for both datasets, which can be downloaded from GoogleDrive (OpenPose NTU RGB+D) and GoogleDrive (OpenPose PKU-MMD).

python tools/gen_fivefs_<dataset>

where <dataset> must be ntu_rgbd or pku_mmd, depending on the dataset you want to use.

The processed ROI of NTU RGB+D is available from GoogleDrive; the processed ROI of PKU-MMD is available from GoogleDrive.

For cross-subject evaluation in NTU RGB+D, run

python main_student.py recognition -c config/ntu_rgbd/xsub/student_test.yaml

Check the ensemble:

python ensemble.py --datasets ntu_xsub

For cross-view evaluation in NTU RGB+D, run

python main_student.py recognition -c config/ntu_rgbd/xview/student_test.yaml

Check the ensemble:

python ensemble.py --datasets ntu_xview

For cross-subject evaluation in PKU-MMD, run

python main_student.py recognition -c config/pku_mmd/xsub/student_test.yaml

Check the ensemble:

python ensemble.py --datasets pku_xsub

For cross-view evaluation in PKU-MMD, run

python main_student.py recognition -c config/pku_mmd/xview/student_test.yaml

Check the ensemble:

python ensemble.py --datasets pku_xview
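The ensemble step combines predictions from more than one stream. As an illustration only, here is a minimal sketch of score-level (late) fusion: per-class scores from two models are added with a weight, and the class with the highest combined score is chosen. This shows the general technique and is not a description of what ensemble.py does; `fuse_scores`, `predict`, `ensemble_accuracy`, `alpha`, and the toy numbers are all hypothetical.

```python
def fuse_scores(scores_a, scores_b, alpha=1.0):
    """Weighted sum of two per-class score lists of equal length."""
    if len(scores_a) != len(scores_b):
        raise ValueError("score vectors must have the same number of classes")
    return [a + alpha * b for a, b in zip(scores_a, scores_b)]


def predict(scores):
    """Index of the highest score (the first one wins on ties)."""
    return max(range(len(scores)), key=scores.__getitem__)


def ensemble_accuracy(all_a, all_b, labels, alpha=1.0):
    """Top-1 accuracy of the fused predictions over a set of samples."""
    correct = sum(
        predict(fuse_scores(a, b, alpha)) == y
        for a, b, y in zip(all_a, all_b, labels)
    )
    return correct / len(labels)


if __name__ == "__main__":
    # Toy scores for 3 samples and 3 classes (made up for illustration).
    skeleton = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.1, 0.6, 0.3]]
    rgb = [[0.3, 0.4, 0.3], [0.1, 0.3, 0.6], [0.2, 0.5, 0.3]]
    labels = [0, 2, 1]
    print(ensemble_accuracy(skeleton, rgb, labels))
```

In the toy data each stream misclassifies a different sample, which is the situation where combining scores can help.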

To train a new TSMF model, run

python main_student.py recognition -c config/<dataset>/train_student.yaml [--work_dir <work folder>]

where the <dataset> must be ntu_rgbd/xsub, ntu_rgbd/xview, pku_mmd/xsub or pku_mmd/xview, depending on the dataset you want to use.

The training results, including model weights, configurations and logging files, are saved under ./work_dir by default, or under <work folder> if you specify one.

You can modify the training parameters such as work_dir, batch_size, step, base_lr and device in the command line or configuration files. The order of priority is: command line > config file > default parameter. For more information, use main_student.py -h.
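The priority order above can be pictured as a three-layer merge. This is a minimal illustration and not the repository's actual argument handling: `resolve_params`, the default values, and the plain dict standing in for a parsed YAML file are all assumptions.

```python
import argparse

# Hypothetical defaults for illustration; the real defaults live in the code.
DEFAULTS = {"work_dir": "./work_dir", "batch_size": 32, "base_lr": 0.1}


def resolve_params(config, argv):
    """Merge parameters: command line > config file > default."""
    parser = argparse.ArgumentParser()
    # default=None lets us tell "not given on the command line" apart
    # from an explicitly passed value.
    parser.add_argument("--work_dir", default=None)
    parser.add_argument("--batch_size", type=int, default=None)
    parser.add_argument("--base_lr", type=float, default=None)
    cli = {k: v for k, v in vars(parser.parse_args(argv)).items() if v is not None}

    params = dict(DEFAULTS)   # lowest priority
    params.update(config)     # config file overrides defaults
    params.update(cli)        # command line overrides everything
    return params


if __name__ == "__main__":
    config = {"batch_size": 16, "base_lr": 0.05}  # stands in for a YAML file
    print(resolve_params(config, ["--base_lr", "0.01"]))
```

Here base_lr comes from the command line, batch_size from the config, and work_dir from the defaults.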

Finally, a custom model can be evaluated with the same command mentioned above, adding the path to its weights:

python main_student.py recognition -c config/<dataset>/student_test.yaml --weights <path to model weights>
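To evaluate all four benchmarks in one go, the evaluation commands can be generated in a loop. This is a minimal sketch that assumes it is run from the repository root; `build_eval_command` and `evaluate_all` are hypothetical helpers, and the commands are only printed unless `run=True` is passed.

```python
import subprocess
import sys

# The four <dataset> values listed in this README.
BENCHMARKS = ("ntu_rgbd/xsub", "ntu_rgbd/xview", "pku_mmd/xsub", "pku_mmd/xview")


def build_eval_command(benchmark, weights=None):
    """Build the evaluation command for one benchmark as an argument list."""
    if benchmark not in BENCHMARKS:
        raise ValueError(f"unknown benchmark: {benchmark}")
    cmd = [sys.executable, "main_student.py", "recognition",
           "-c", f"config/{benchmark}/student_test.yaml"]
    if weights is not None:
        cmd += ["--weights", weights]
    return cmd


def evaluate_all(run=False):
    """Print each evaluation command and, if requested, execute it."""
    for benchmark in BENCHMARKS:
        cmd = build_eval_command(benchmark)
        print(" ".join(cmd))
        if run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    evaluate_all(run=False)
```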

This repo is based on

Thanks to the original authors for their work!

Please cite the following paper if you use this repository in your research.

@inproceedings{bruce2021multimodal,

title={Multimodal Fusion via Teacher-Student Network for Indoor Action Recognition},

author={Bruce, XB and Liu, Yan and Chan, Keith CC},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={35},

number={4},

pages={3199--3207},

year={2021}

}

For any questions, feel free to contact Bruce Yu: b r u c e y o 2011 AT gmail.com (remove spaces)