Yang Liu*, Xiang Huang*, Minghan Qin, Qinwei Lin, Haoqian Wang (* indicates equal contribution)

Webpage | Full Paper | Video

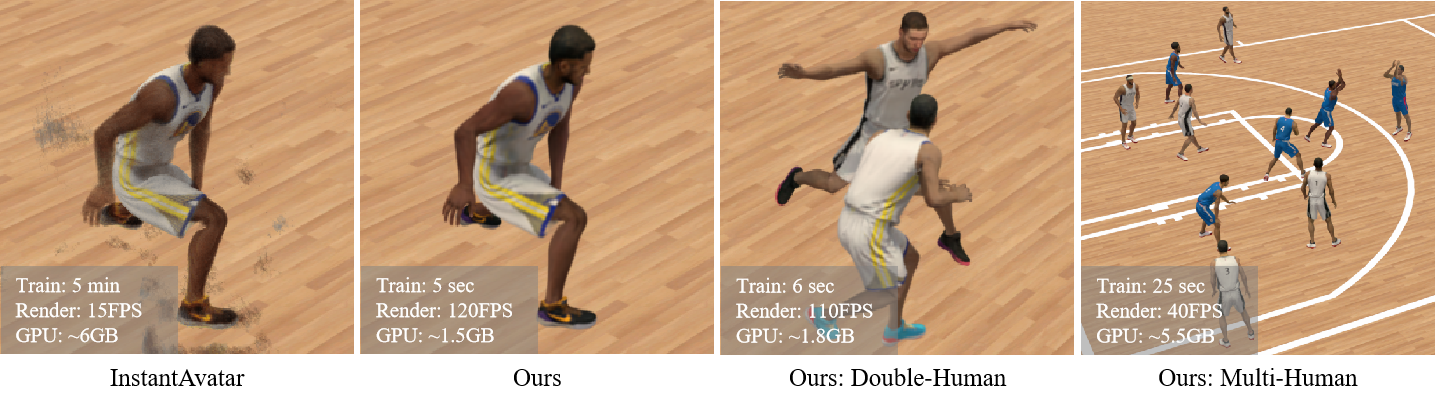

Abstract: Neural radiance fields are capable of reconstructing high-quality drivable human avatars but are expensive to train and render. To reduce this cost, we propose Animatable 3D Gaussian, which learns human avatars from input images and poses. We extend 3D Gaussians to dynamic human scenes by modeling a set of skinned 3D Gaussians and a corresponding skeleton in canonical space and deforming 3D Gaussians to posed space according to the input poses. We introduce hash-encoded shape and appearance to speed up training and propose time-dependent ambient occlusion to achieve high-quality reconstructions in scenes containing complex motions and dynamic shadows. On both novel view synthesis and novel pose synthesis tasks, our method outperforms existing methods in terms of training time, rendering speed, and reconstruction quality. Our method can be easily extended to multi-human scenes and achieve comparable novel view synthesis results on a scene with ten people in only 25 seconds of training.
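The deformation step in the abstract (skinned Gaussians in canonical space warped to posed space by the input poses) can be illustrated with standard linear blend skinning, as used by SMPL-style body models. The NumPy sketch below works under that assumption only: the function name `skin_gaussians` and the array layouts are hypothetical, and the repository's actual deformation (including how it treats the learned skeleton and Gaussian rotations) may differ.

```python
import numpy as np


def skin_gaussians(means, rotations, scales, weights, bone_transforms):
    """Deform canonical 3D Gaussians to posed space with linear blend skinning.

    Hypothetical helper (assumes standard LBS, not the repository's code).
    means:           (N, 3) canonical Gaussian centres
    rotations:       (N, 3, 3) canonical orientation matrices
    scales:          (N, 3) per-axis standard deviations
    weights:         (N, J) skinning weights, each row summing to 1
    bone_transforms: (J, 4, 4) canonical-to-posed rigid transform per joint
    Returns posed means (N, 3) and posed covariances (N, 3, 3).
    """
    # Blend the per-joint transforms with each Gaussian's weights -> (N, 4, 4).
    blended = np.einsum("nj,jab->nab", weights, bone_transforms)

    # Transform the centres in homogeneous coordinates.
    ones = np.ones((means.shape[0], 1))
    homo = np.concatenate([means, ones], axis=1)  # (N, 4)
    posed_means = np.einsum("nab,nb->na", blended, homo)[:, :3]

    # Canonical covariance: Sigma = R diag(s^2) R^T.
    cov = (rotations * (scales ** 2)[:, None, :]) @ np.swapaxes(rotations, 1, 2)

    # Posed covariance: Sigma' = A Sigma A^T, with A the blended 3x3 linear part.
    A = blended[:, :3, :3]
    posed_cov = A @ cov @ np.swapaxes(A, 1, 2)
    return posed_means, posed_cov
```

The covariance is transformed directly instead of the rotation matrix because a weighted blend of different joint rotations is generally not itself a pure rotation; `A Sigma A^T` stays a valid covariance either way.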


- CUDA 11.7
- Conda
- A C++14 capable compiler
  - Windows: Visual Studio 2019 or 2022
  - Linux: GCC/G++ 8 or higher
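Before running the install script, the prerequisites above can be checked from Python. This is an illustrative sketch only; the function names are hypothetical and not part of the repository. It looks for `nvcc`, `conda`, and a compiler on `PATH` and parses the CUDA release reported by `nvcc --version`.

```python
import re
import shutil
import subprocess
import sys


def parse_nvcc_release(text):
    """Return (major, minor) from `nvcc --version` output, or None if absent.

    nvcc prints a line such as: "Cuda compilation tools, release 11.7, V11.7.99".
    """
    match = re.search(r"release (\d+)\.(\d+)", text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def required_tools(platform=sys.platform):
    """Executables expected on PATH for the listed prerequisites."""
    # On Windows, cl.exe is usually only on PATH inside a Visual Studio
    # Developer Command Prompt; on Linux we look for g++.
    compiler = "cl" if platform.startswith("win") else "g++"
    return ["nvcc", "conda", compiler]


def missing_tools(tools):
    """Return the tools that shutil.which cannot find on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools(required_tools())
    print("Missing tools:", ", ".join(missing) if missing else "none")
    if shutil.which("nvcc"):
        out = subprocess.run(["nvcc", "--version"], capture_output=True, text=True).stdout
        print("CUDA release:", parse_nvcc_release(out), "(README lists CUDA 11.7)")
```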

First, make sure all the prerequisites are installed on your system. Then run:

conda create --name anim-gaussian python=3.8

conda activate anim-gaussian

bash ./install.sh

We use the PeopleSnapshot and GalaBasketball datasets and the corresponding template body model. Please download them and organize them as follows:

|---data

| |---Gala

| |---PeopleSnapshot

| |---smpl

To train a scene, run:

python train.py --config-name <main config file name> dataset=<path to dataset config file>

For the PeopleSnapshot dataset, run:

python train.py --config-name peoplesnapshot.yaml dataset=peoplesnapshot/male-3-casual

For the GalaBasketball dataset, run:

python train.py --config-name gala.yaml dataset=gala/idle

To evaluate the trained model on the test set, run:

python test.py --config-name <main config file name> dataset=<path to dataset config file>

To animate the trained model with novel poses, run:

python test.py --config-name animate.yaml output_path=<path to train output> dataset=<path to animation dataset config file>

For example, to animate the model trained on the idle sequence using poses from the dribble sequence, run:

python test.py --config-name animate.yaml output_path=idle dataset=gala/dribble
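The documented steps (train, test, animate) can be chained from a small script. The sketch below is an illustration, not part of the repository: the helper names are hypothetical, it only assembles the command lines shown above, and it assumes it is launched from the repository root inside the activated `anim-gaussian` environment, with `data/` laid out as described. It performs a dry run by default.

```python
import subprocess
from pathlib import Path

# Sub-directories the README asks for under data/.
EXPECTED_DATA_DIRS = ("Gala", "PeopleSnapshot", "smpl")


def missing_data_dirs(data_root="data"):
    """Return the expected dataset folders that do not exist under data_root."""
    root = Path(data_root)
    return [name for name in EXPECTED_DATA_DIRS if not (root / name).is_dir()]


def train_cmd(config_name, dataset):
    return ["python", "train.py", "--config-name", config_name, f"dataset={dataset}"]


def eval_cmd(config_name, dataset):
    return ["python", "test.py", "--config-name", config_name, f"dataset={dataset}"]


def animate_cmd(output_path, dataset):
    return ["python", "test.py", "--config-name", "animate.yaml",
            f"output_path={output_path}", f"dataset={dataset}"]


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step


if __name__ == "__main__":
    missing = missing_data_dirs()
    if missing:
        print("Missing under data/:", ", ".join(missing))
    # Train on gala/idle, evaluate it, then drive it with the dribble poses.
    # output_path=idle follows the README example; point it at the actual
    # training output if it was written elsewhere.
    run([
        train_cmd("gala.yaml", "gala/idle"),
        eval_cmd("gala.yaml", "gala/idle"),
        animate_cmd("idle", "gala/dribble"),
    ])
```

Pass `dry_run=False` to `run` once the printed commands look right; `check=True` makes the chain stop at the first step that exits with an error.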