MMEditing is an open source image and video editing toolbox based on PyTorch. It is a part of the OpenMMLab project.

The master branch works with PyTorch 1.5+.
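As a quick sanity check before installing, the version requirement above can be tested with a few lines of Python. This is only a sketch: `parse_version` and `meets_minimum` are hypothetical helper names and not part of MMEditing. It assumes the installed version string starts with `major.minor`, as in `1.10.0+cu113`.

```python
import re

MIN_TORCH = (1, 5)  # minimum PyTorch version stated in this README


def parse_version(version: str) -> tuple:
    """Extract (major, minor) from a version string such as '1.10.0+cu113'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(version: str, minimum: tuple = MIN_TORCH) -> bool:
    """Compare as integer tuples so that 1.10 correctly ranks above 1.5."""
    return parse_version(version) >= minimum


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        status = "ok" if meets_minimum(torch.__version__) else "too old"
        print(f"PyTorch {torch.__version__}: {status}")
```

Comparing integer tuples rather than raw strings matters here, because a plain string comparison would rank `"1.10"` below `"1.5"`.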

-

Modular design

We decompose the editing framework into different components and one can easily construct a customized editor framework by combining different modules.

-

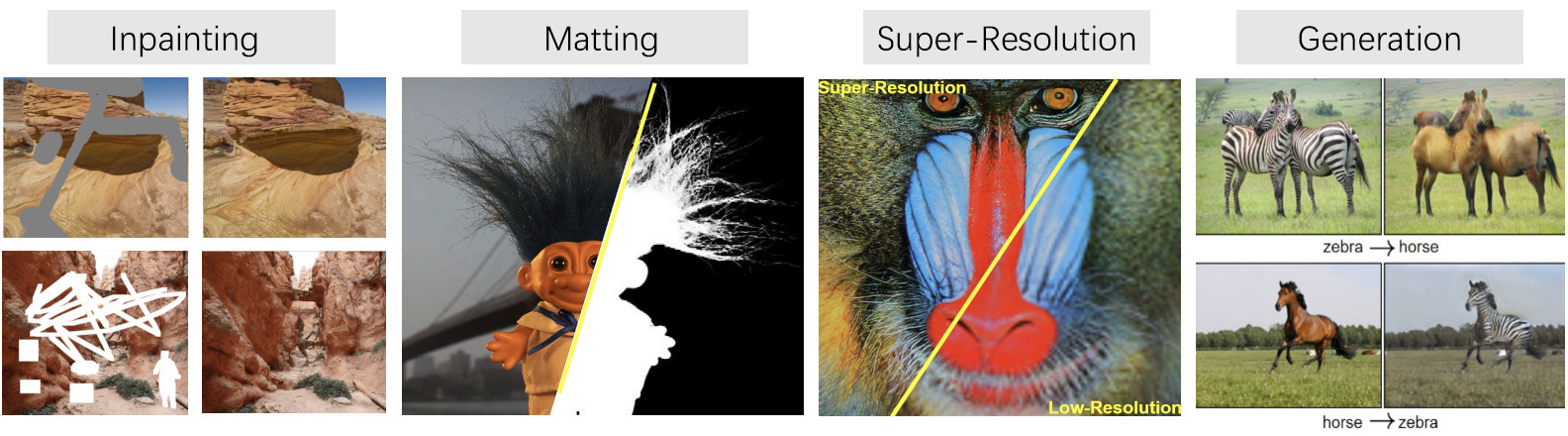

Support of multiple tasks in editing

The toolbox directly supports popular and contemporary inpainting, matting, super-resolution and generation tasks.

-

State of the art

The toolbox provides state-of-the-art methods in inpainting/matting/super-resolution/generation.
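To illustrate what the modular design means in practice, here is a minimal, framework-free sketch of composing an editor from interchangeable components that are selected by name in a config dictionary. Everything in it (`Registry`, `ToyBackbone`, `L1Loss`, `Editor`) is a hypothetical stand-in written for this example and is not MMEditing's actual API; see getting_started.md for the real interfaces.

```python
from typing import Dict


class Registry:
    """Maps a class name to a component class (hypothetical helper)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._modules: Dict[str, type] = {}

    def register(self, cls: type) -> type:
        # Used as a decorator: store the class under its own name.
        self._modules[cls.__name__] = cls
        return cls

    def build(self, cfg: dict):
        # Copy so the caller's config is not mutated, then instantiate
        # the class named by 'type' with the remaining keys as kwargs.
        cfg = dict(cfg)
        cls = self._modules[cfg.pop("type")]
        return cls(**cfg)


BACKBONES = Registry("backbone")
LOSSES = Registry("loss")


@BACKBONES.register
class ToyBackbone:
    """Toy 'super-resolution': repeats each value `scale` times."""

    def __init__(self, scale: int = 2) -> None:
        self.scale = scale

    def __call__(self, pixels):
        return [p for p in pixels for _ in range(self.scale)]


@LOSSES.register
class L1Loss:
    """Mean absolute error between two equal-length sequences."""

    def __call__(self, pred, target):
        return sum(abs(p - t) for p, t in zip(pred, target)) / len(target)


class Editor:
    """Combines a backbone and a loss chosen purely through config."""

    def __init__(self, backbone: dict, loss: dict) -> None:
        self.backbone = BACKBONES.build(backbone)
        self.loss = LOSSES.build(loss)

    def train_step(self, lq, gt):
        return self.loss(self.backbone(lq), gt)


editor = Editor(
    backbone=dict(type="ToyBackbone", scale=2),
    loss=dict(type="L1Loss"),
)
print(editor.train_step([0.0, 1.0], [0.0, 0.0, 1.0, 0.5]))  # prints 0.125
```

Swapping in a different backbone or loss then only requires registering a new class and changing the `type` string in the config; the `Editor` code itself stays untouched.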

Note that MMSR has been merged into this repo as a part of MMEditing. With the elaborate design of the new framework and careful implementations, we hope MMEditing provides a better experience.

- [2022-03-01] v0.13.0 was released.

  - Support CAIN

  - Support EDVR-L

  - Support running in Windows

- [2022-02-11] Switch to PyTorch 1.5+. Compatibility with earlier versions of PyTorch is no longer guaranteed.

- [2022-01-21] Support video frame interpolation: CAIN

Please refer to changelog.md for details and release history.

Please refer to install.md for installation.

Please see getting_started.md for the basic usage of MMEditing.

Supported algorithms:

Inpainting

- Global&Local (ToG'2017)

- DeepFillv1 (CVPR'2018)

- PConv (ECCV'2018)

- DeepFillv2 (CVPR'2019)

Image-Super-Resolution

Video-Super-Resolution

- TOF (IJCV'2019)

- TDAN (CVPR'2020)

- BasicVSR (CVPR'2021)

- BasicVSR++ (NTIRE'2021)

- IconVSR (CVPR'2021)

Video Interpolation

- CAIN (AAAI'2020)

Please refer to model_zoo for more details.

We appreciate all contributions to improve MMEditing. Please refer to CONTRIBUTING.md in MMCV for the contributing guideline.

MMEditing is an open source project with contributions from researchers and engineers at various colleges and companies. We appreciate all the contributors who implement their methods or add new features, as well as the users who give valuable feedback. We hope the toolbox and benchmark serve the growing research community by providing a flexible toolkit for reimplementing existing methods and developing new ones.

If you find this project useful in your research, please consider citing:

    @misc{mmediting2020,
      title={OpenMMLab Editing Estimation Toolbox and Benchmark},
      author={MMEditing Contributors},
      howpublished = {\url{https://github.com/open-mmlab/mmediting}},
      year={2020}
    }

This project is released under the Apache 2.0 license.

- MMCV: OpenMMLab foundational library for computer vision.

- MIM: MIM installs OpenMMLab packages.

- MMClassification: OpenMMLab image classification toolbox and benchmark.

- MMDetection: OpenMMLab detection toolbox and benchmark.

- MMDetection3D: OpenMMLab's next-generation platform for general 3D object detection.

- MMRotate: OpenMMLab rotated object detection toolbox and benchmark.

- MMSegmentation: OpenMMLab semantic segmentation toolbox and benchmark.

- MMOCR: OpenMMLab text detection, recognition, and understanding toolbox.

- MMPose: OpenMMLab pose estimation toolbox and benchmark.

- MMHuman3D: OpenMMLab 3D human parametric model toolbox and benchmark.

- MMSelfSup: OpenMMLab self-supervised learning toolbox and benchmark.

- MMRazor: OpenMMLab model compression toolbox and benchmark.

- MMFewShot: OpenMMLab fewshot learning toolbox and benchmark.

- MMAction2: OpenMMLab's next-generation action understanding toolbox and benchmark.

- MMTracking: OpenMMLab video perception toolbox and benchmark.

- MMFlow: OpenMMLab optical flow toolbox and benchmark.

- MMEditing: OpenMMLab image and video editing toolbox.

- MMGeneration: OpenMMLab image and video generative models toolbox.

- MMDeploy: OpenMMLab model deployment framework.