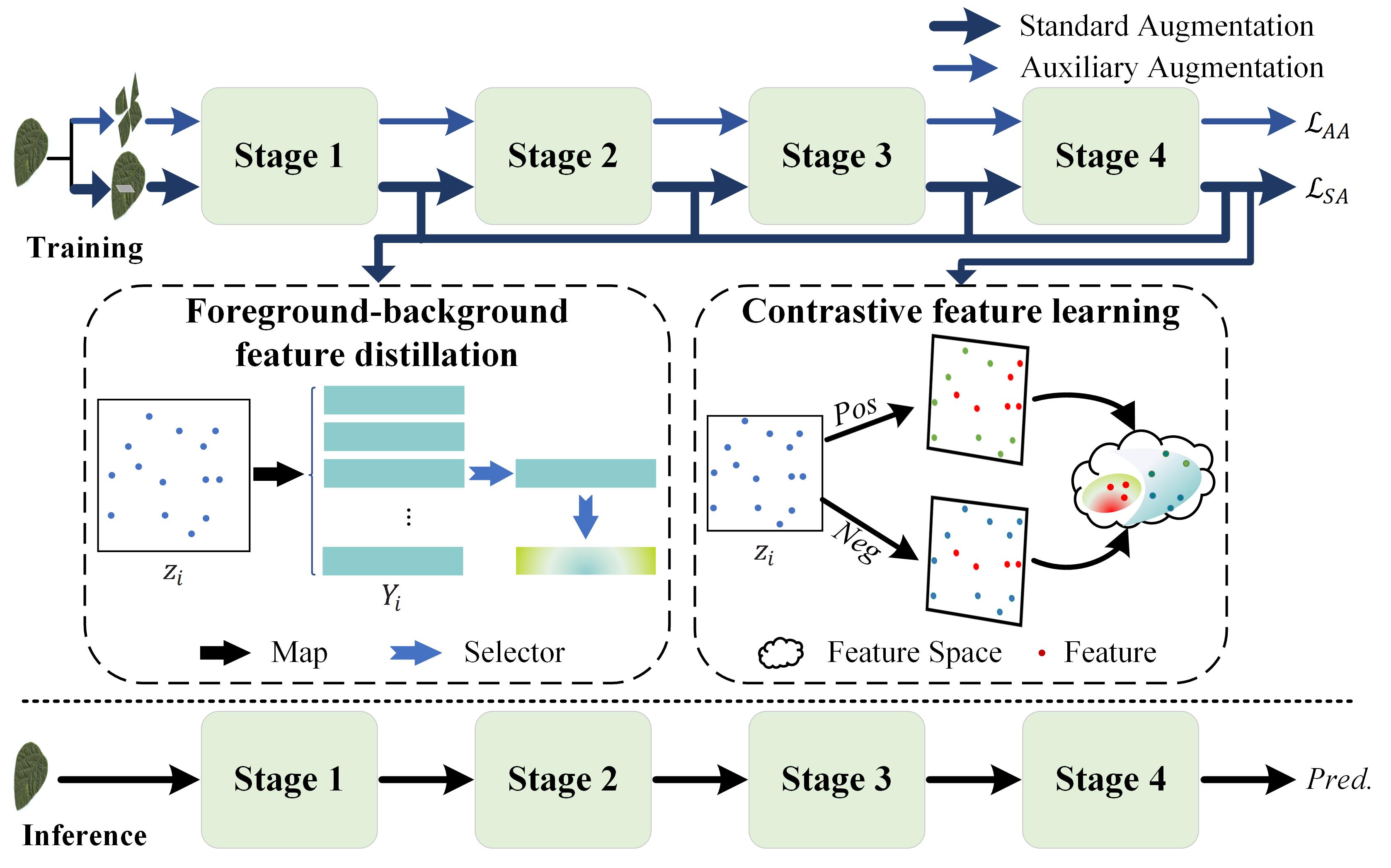

Integrating foreground–background feature distillation and contrastive feature learning for ultra-fine-grained visual classification

This repository is the official implementation of the paper "Integrating foreground–background feature distillation and contrastive feature learning for ultra-fine-grained visual classification".

- CUDA==11.4

- Python 3.9.12

- pytorch==1.12.1

- torchvision==0.12.0+cu113

- tensorboard

- scipy

- ml_collections

- tqdm

- pandas

- matplotlib

- imageio

- timm

- yacs

- scikit-learn

- opencv-python
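To confirm that an environment matches the list above, a small check like the following can report which packages are installed. This is a minimal sketch and not part of the repository: the helper name `installed_versions` is hypothetical, and the distribution names (for example `torch` for PyTorch) are assumed to match the names published on PyPI.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as published on PyPI (assumed); PyTorch installs as "torch".
REQUIRED = [
    "torch", "torchvision", "tensorboard", "scipy", "ml_collections",
    "tqdm", "pandas", "matplotlib", "imageio", "timm", "yacs",
    "scikit-learn", "opencv-python",
]


def installed_versions(packages=REQUIRED):
    """Map each package name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name:15s} {ver or 'NOT INSTALLED'}")
```

Compare the printed versions against the pinned ones above (CUDA and the Python interpreter itself have to be checked separately).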

Download the Swin-B checkpoint pretrained on ImageNet-22K:

wget https://github.com/SwinTransformer/storage/releases/download/v1.0.0/swin_base_patch4_window7_224_22k.pth

You can download the datasets from the links below:

If the downloaded dataset has the layout below (one folder per class), use ImageDataset() in ./data/build.py to load it:

./datasets/soybean_gene/

├── classA

├── classB

├── ...

└── classN
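The folder-per-class layout can be indexed with a few lines of standard-library Python. The sketch below only illustrates how such a layout maps to (image path, label) pairs. It is not the repository's ImageDataset() implementation, and both the helper name `index_class_folders` and the rule of assigning labels by sorted folder name are assumptions.

```python
from pathlib import Path


def index_class_folders(root):
    """Return (samples, class_to_idx) for a root with one sub-folder per class.

    Assumption: labels are assigned by sorted folder name, starting at 0.
    """
    root = Path(root)
    classes = sorted(p.name for p in root.iterdir() if p.is_dir())
    class_to_idx = {name: idx for idx, name in enumerate(classes)}
    samples = []
    for name in classes:
        # Every regular file inside a class folder is treated as one sample.
        for image_path in sorted((root / name).iterdir()):
            if image_path.is_file():
                samples.append((str(image_path), class_to_idx[name]))
    return samples, class_to_idx
```

For example, `samples, class_to_idx = index_class_folders("./datasets/soybean_gene")` would list every file under each class folder together with its integer label.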

If the downloaded dataset has the layout below (annotation files plus a single image folder), use Cultivar() in ./data/build.py to load it:

./datasets/soybean_gene/

├── anno

│   ├── train.txt

│   └── test.txt

└── images

    ├── ImageA

    ├── ImageB

    ├── ...

    └── ImageN
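For the annotation-based layout, the split files have to be parsed before the images can be loaded. The source does not specify the format of train.txt and test.txt, so the sketch below assumes one sample per line in the form `<image name> <integer label>`, separated by whitespace; adjust the parsing if the files differ. The helper name `read_split` is hypothetical and is not the repository's Cultivar() class.

```python
from pathlib import Path


def read_split(root, split="train"):
    """Read anno/<split>.txt and return a list of (image path, label) pairs.

    Assumed line format (not confirmed by the source): "<image name> <label>".
    """
    root = Path(root)
    samples = []
    with open(root / "anno" / f"{split}.txt", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            # Split on the last run of whitespace so the label is the final field.
            name, label = line.rsplit(maxsplit=1)
            samples.append((str(root / "images" / name), int(label)))
    return samples
```

Under these assumptions, `read_split("./datasets/soybean_gene", "test")` would return the test samples with paths resolved against the images folder.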

Use the scripts in the scripts directory to train the model, e.g., to train on the SoybeanGene dataset:

sh ./scripts/run_gene.sh

Use the scripts in the scripts directory to evaluate the model, e.g., to evaluate on the SoybeanGene dataset:

sh ./scripts/test_gene.sh