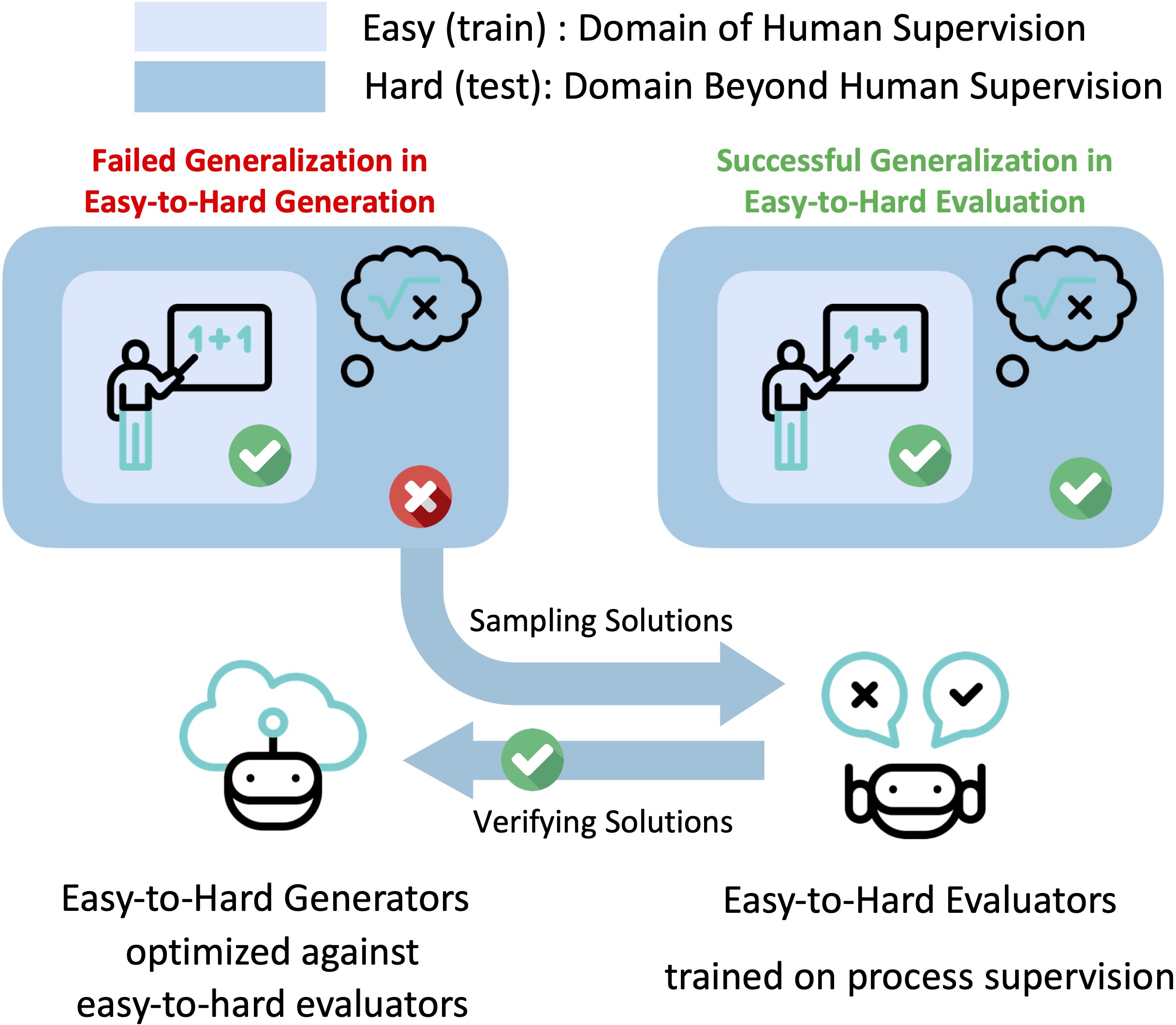

Guided by the observation that evaluation is easier than generation, we enable large language models to excel on hard math problems beyond human evaluation capabilities through the easy-to-hard generalization of evaluators (e.g., process reward models). For full details, please see our paper.

We provide model checkpoints for the supervised fine-tuned models and reward models. The current list of models includes:

- SFT models:

- Reward models:

Please check `examples` for the training scripts and `data` for the data preparation.

Please consider citing our work if you use the data or code in this repo.

@article{sun2024easy,

title={Easy-to-Hard Generalization: Scalable Alignment Beyond Human Supervision},

author={Sun, Zhiqing and Yu, Longhui and Shen, Yikang and Liu, Weiyang and Yang, Yiming and Welleck, Sean and Gan, Chuang},

journal={arXiv preprint arXiv:2403.09472},

year={2024}

}

Simple and efficient pytorch-native transformer training and inference (batched).

gpt-accelera is a codebase based on gpt-fast -- the state-of-the-art pytorch-native tensor-parallel implementation of transformer text generation that minimizes latency (i.e. batch size=1) -- with the following improvements:

Featuring:

- Batched (i.e., batch size > 1) inference with compiled graph (i.e., torch.compile)

- 2-D parallelism (Tensor-Parallel (TP) + Fully Sharded Data Parallel (FSDP)) training with mixed precision (i.e., torch.cuda.amp)

- Support for both LLaMA and DeepSeek models

- Supports training policy models with Supervised Fine-Tuning (SFT)

- Supports training reward models (RM) with pointwise and pairwise losses

- Supports on-policy (PPO) and off-policy (DPO) reinforcement learning (RL) training

- All the training can be performed with full fine-tuning for 7b-34b LLaMA/Llemma models
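The reward-model and DPO objectives named in the list above can be sketched in a few lines. This is a minimal, dependency-free illustration of the standard textbook formulas on scalar inputs, not the repository's implementation: the function names, the `beta` default, and the choice of binary cross-entropy for the pointwise loss are all assumptions made for the example.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    # x >= 0: -log(1 + exp(-x)); x < 0: x - log(1 + exp(x)).
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def pointwise_rm_loss(score: float, label: int) -> float:
    """Assumed pointwise loss: binary cross-entropy on one scalar reward.

    `label` is 1 for a good response and 0 for a bad one.
    """
    # log(1 - sigmoid(x)) equals log_sigmoid(-x).
    return -(label * log_sigmoid(score) + (1 - label) * log_sigmoid(-score))


def pairwise_rm_loss(score_chosen: float, score_rejected: float) -> float:
    """Bradley-Terry style pairwise loss: -log sigmoid(r_chosen - r_rejected)."""
    return -log_sigmoid(score_chosen - score_rejected)


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # hypothetical default, chosen only for illustration
) -> float:
    """DPO loss for one preference pair, given sequence log-probabilities."""
    chosen_log_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_log_ratio = policy_rejected_logp - ref_rejected_logp
    return -log_sigmoid(beta * (chosen_log_ratio - rejected_log_ratio))
```

In a real training loop these would be computed on batched tensors and averaged; the scalar form is used here only to keep the formulas easy to read.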

Shared features w/ gpt-fast:

- Very low latency (on inference, batched inference, SFT, and PPO)

- No dependencies other than PyTorch and sentencepiece

- int8/int4 quantization (for inference and ref_policy / reward_model in PPO)

- Supports Nvidia and AMD GPUs (?, TODO: test the codebase on AMD)
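To make the int8 quantization item above concrete, here is a minimal sketch of symmetric, per-row, weight-only int8 quantization in plain Python. Whether the codebase uses exactly this scheme is an assumption; the function names are hypothetical and the example works on lists of floats rather than tensors.

```python
from typing import List, Tuple


def quantize_row_int8(row: List[float]) -> Tuple[List[int], float]:
    """Quantize one weight row to integers in [-127, 127] plus a float scale."""
    max_abs = max((abs(w) for w in row), default=0.0)
    # An all-zero (or empty) row gets scale 1.0 to avoid dividing by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in row]
    return quantized, scale


def dequantize_row(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values and the scale."""
    return [q * scale for q in quantized]
```

Each reconstructed weight differs from the original by at most about half a quantization step (`scale / 2`), which is the trade-off accepted in exchange for the smaller memory footprint.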

Following the spirit of gpt-fast, this repository is NOT intended to be a "framework" or "library", but to show off what kind of performance you can get with native PyTorch. Please copy-paste and fork as you desire.

Install torch==2.2.0, sentencepiece, and huggingface_hub:

pip install sentencepiece huggingface_hub

Models tested/supported

meta-llama/Llama-2-7b-chat-hf

meta-llama/Llama-2-13b-chat-hf

meta-llama/Llama-2-70b-chat-hf

codellama/CodeLlama-7b-Python-hf

codellama/CodeLlama-34b-Python-hf

EleutherAI/llemma_7b

EleutherAI/llemma_34b

deepseek-ai/deepseek-llm-7b-base

deepseek-ai/deepseek-coder-6.7b-base

deepseek-ai/deepseek-math-7b-base
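One way to fetch any of the checkpoints listed above is through `huggingface_hub.snapshot_download`. The sketch below is an illustration under assumptions, not the repository's own download script: the `checkpoints` root directory and both helper names are hypothetical, and gated models (such as the Llama 2 family) additionally require an authenticated Hugging Face account.

```python
from pathlib import Path

# Repository IDs copied from the list above.
SUPPORTED_REPOS = (
    "meta-llama/Llama-2-7b-chat-hf",
    "meta-llama/Llama-2-13b-chat-hf",
    "meta-llama/Llama-2-70b-chat-hf",
    "codellama/CodeLlama-7b-Python-hf",
    "codellama/CodeLlama-34b-Python-hf",
    "EleutherAI/llemma_7b",
    "EleutherAI/llemma_34b",
    "deepseek-ai/deepseek-llm-7b-base",
    "deepseek-ai/deepseek-coder-6.7b-base",
    "deepseek-ai/deepseek-math-7b-base",
)


def checkpoint_dir(repo_id: str, root: str = "checkpoints") -> Path:
    """Map a repo ID to a local directory (the layout is an assumption)."""
    if repo_id not in SUPPORTED_REPOS:
        raise ValueError(f"untested model: {repo_id}")
    return Path(root) / repo_id


def download_checkpoint(repo_id: str, root: str = "checkpoints") -> Path:
    """Download all files of `repo_id` into its local checkpoint directory."""
    # Imported lazily so the path helper works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    target = checkpoint_dir(repo_id, root)
    target.mkdir(parents=True, exist_ok=True)
    snapshot_download(repo_id=repo_id, local_dir=str(target))
    return target
```

For example, `download_checkpoint("EleutherAI/llemma_7b")` would place the files under `checkpoints/EleutherAI/llemma_7b`. Any conversion into the format the training scripts expect is a separate step not covered here.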

TODO: Add benchmarks

TODO: Add reference methods

Following gpt-fast, gpt-accelera is licensed under the BSD 3 license. See the LICENSE file for details.

The gpt-accelera codebase was developed during the research and development of the Easy-to-Hard Generalization project.

Please consider citing our work if you use the data or code in this repo.

@misc{gpt_accelera,

author = {Zhiqing Sun},

title = {GPT-Accelera: Simple and efficient pytorch-native transformer training and inference (batched)},

year = {2024},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/Edward-Sun/gpt-accelera}}

}

We thank the authors of the following works for their open-source efforts in democratizing large language models.

- The compiled generation part of `gpt-accelera` is adopted from `gpt-fast`

- The RL part of `gpt-accelera` is adopted from `SALMON`, which is from `alpaca_farm`.

- The tokenization part of `gpt-accelera` is adopted from `transformers`