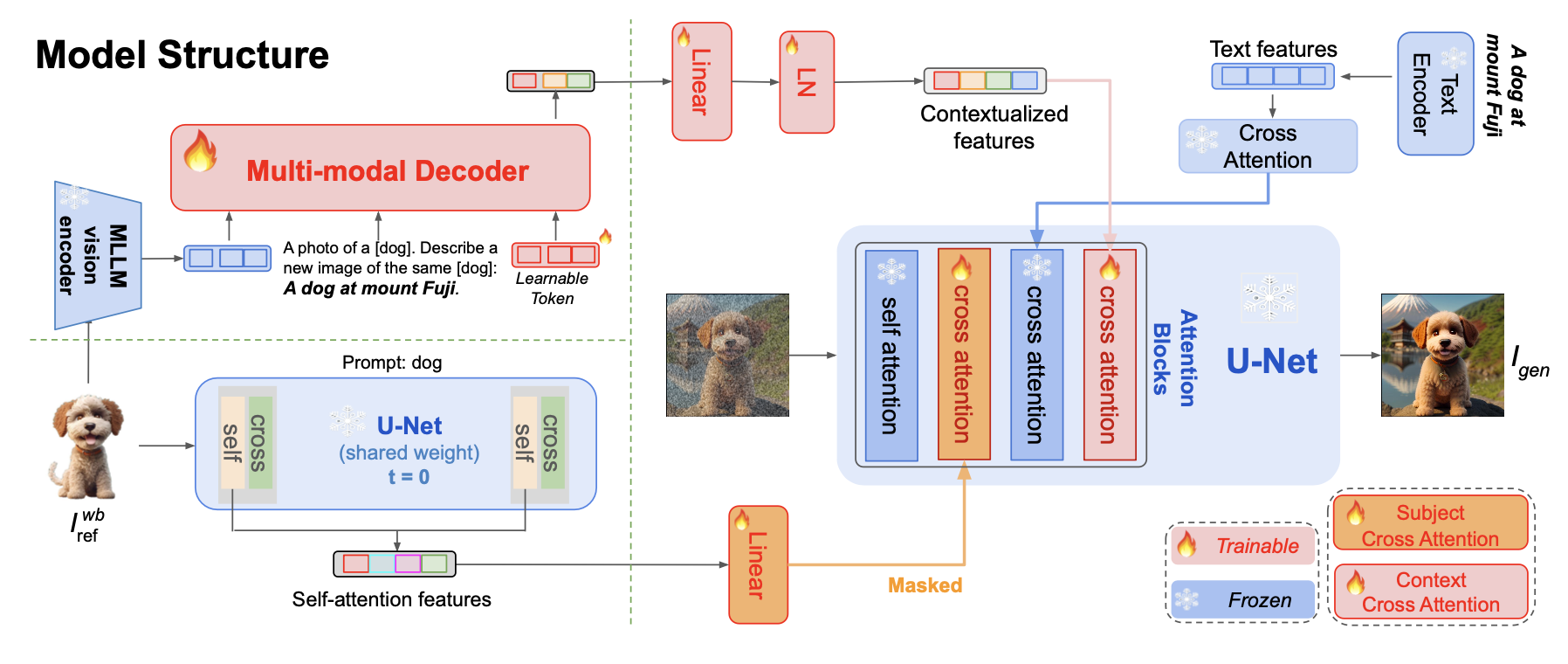

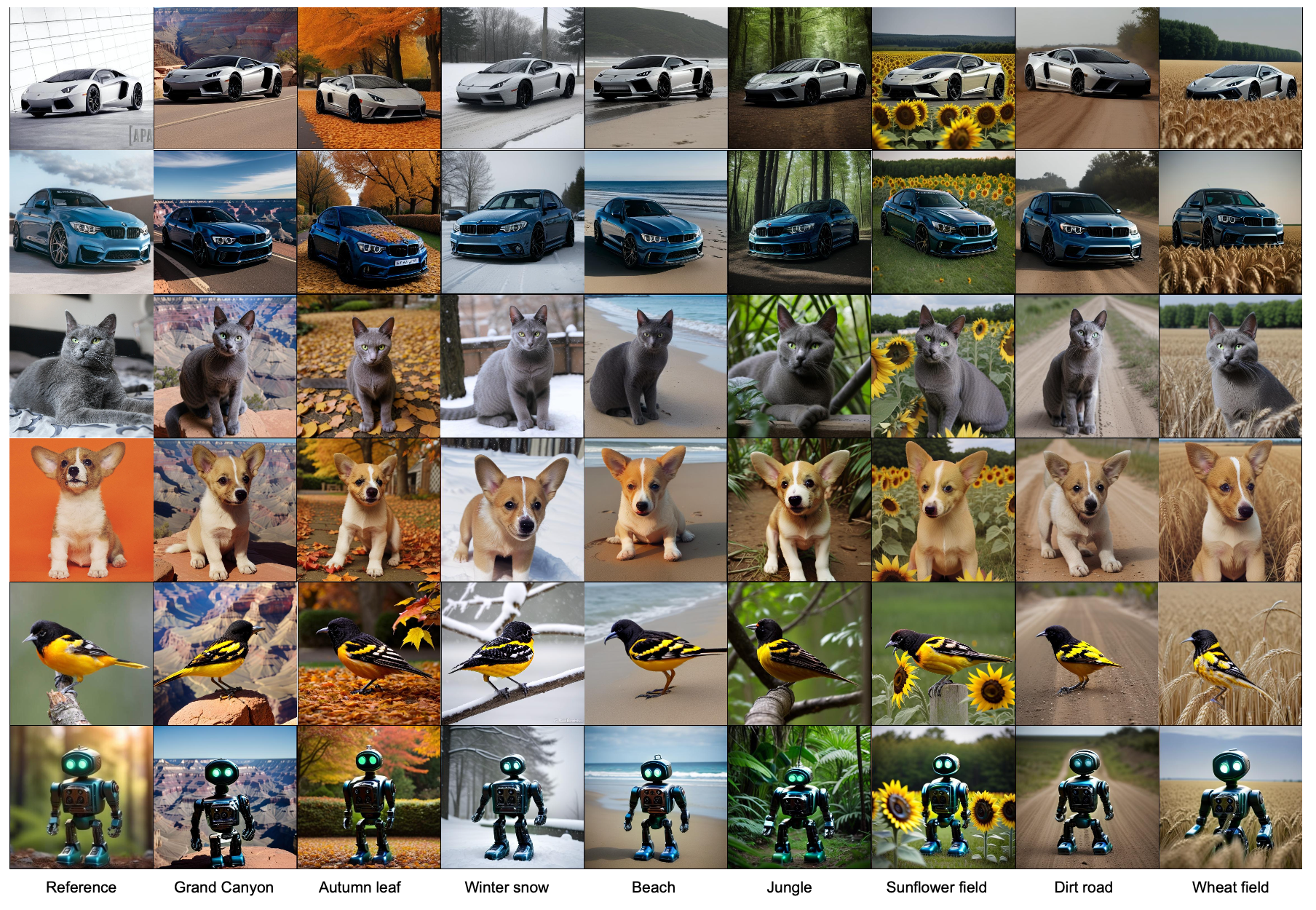

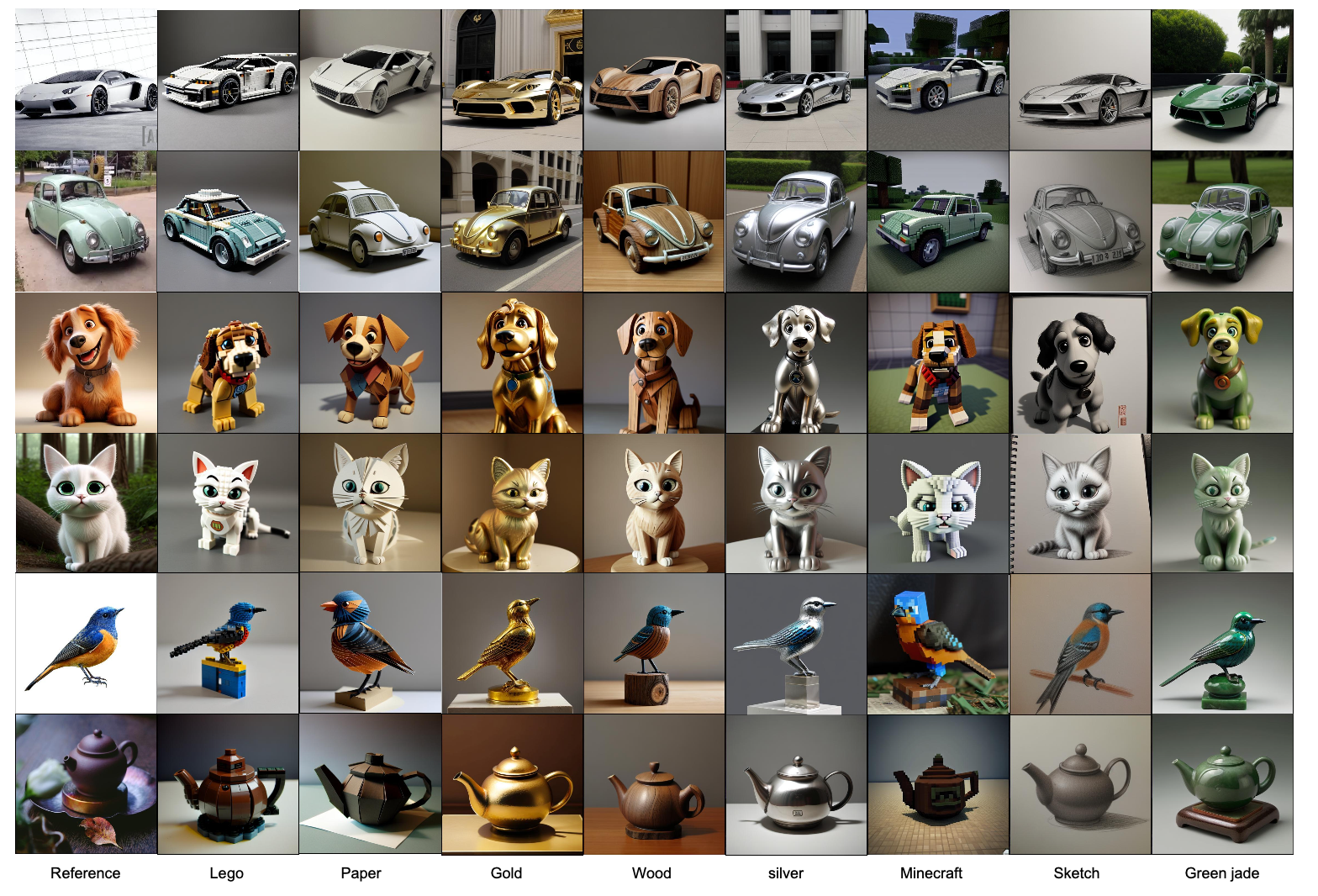

We present MoMA: an open-vocabulary, training-free personalized image model with flexible zero-shot capabilities. As foundational text-to-image models rapidly evolve, the demand for robust image-to-image translation grows. To address this need, MoMA specializes in subject-driven personalized image generation. Using an open-source Multimodal Large Language Model (MLLM), we train MoMA to serve a dual role as both a feature extractor and a generator. This approach combines reference image and text prompt information to produce image features that guide an image diffusion model. To better leverage the generated features, we further introduce a novel self-attention shortcut method that efficiently transfers image features to the diffusion model, improving the resemblance of the target object in generated images. As a tuning-free plug-and-play module, our model requires only a single reference image and outperforms existing methods in generating images with high detail fidelity, enhanced identity preservation, and prompt faithfulness. We commit to making our work open-source, thereby providing universal access to these advancements.

- [2024/04/20] 🔥 We release the model code on GitHub.

- [2024/04/22] 🔥 We add HuggingFace repository and release the checkpoints.

- Install LLaVA: please install it from its official repository.

- Download our MoMA repository and install its dependencies:

      cd ..
      git clone https://github.com/bytedance/MoMA.git
      cd MoMA
      pip install -r requirements.txt

  (We also provide a `requirements_freeze.txt`, generated by `pip freeze`.)

We support 8-bit and 4-bit inference, which reduces memory consumption:

- If you have 22 GB or more GPU memory: `args.load_8bit, args.load_4bit = False, False`
- If you have 18 GB or more GPU memory: `args.load_8bit, args.load_4bit = True, False`
- If you have 14 GB or more GPU memory: `args.load_8bit, args.load_4bit = False, True`
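The memory tiers above can be expressed as a small helper. This is a minimal sketch, not part of the MoMA code: `pick_quantization` is a hypothetical name, and only the 22/18/14 GB thresholds and the matching flag pairs come from this README. The README does not say what happens below 14 GB, so the sketch simply raises an error in that case.

```python
from typing import Tuple


def pick_quantization(gpu_mem_gb: float) -> Tuple[bool, bool]:
    """Return (load_8bit, load_4bit) for a given amount of GPU memory in GB.

    Hypothetical helper: thresholds follow the README's three tiers.
    """
    if gpu_mem_gb >= 22:
        return False, False  # full precision, no quantization
    if gpu_mem_gb >= 18:
        return True, False   # 8-bit inference
    if gpu_mem_gb >= 14:
        return False, True   # 4-bit inference
    # Assumption: the README lists no tier below 14 GB, so we refuse.
    raise ValueError(f"{gpu_mem_gb} GB is below the smallest documented tier (14 GB)")


load_8bit, load_4bit = pick_quantization(20)
print(load_8bit, load_4bit)
```

If PyTorch is available, the total memory of the first GPU can be read in bytes from `torch.cuda.get_device_properties(0).total_memory`. Note that this is total memory rather than free memory, so other processes on the same GPU may still cause out-of-memory errors.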

You don't have to download any checkpoints; our code automatically downloads them from HuggingFace repositories. These include:

- VAE: stabilityai--sd-vae-ft-mse
- StableDiffusion: Realistic_Vision_V4.0_noVAE
- MoMA:
  - Multi-modal LLM: MoMA_llava_7b (13 GB)
  - Attentions and mappings: attn_adapters_projectors.th (151 MB)

Run:

    CUDA_VISIBLE_DEVICES=0 python run_evaluate_MoMA.py

(Generated images will be saved in the output folder.)

Hyperparameters:

- In "changing context", you can increase `strength` to get more accurate details. In most cases, `strength=1.0` works best. We recommend keeping `strength` no greater than `1.2`.
- In "changing texture", you can adjust `strength` to balance detail accuracy against prompt fidelity. To get better prompt fidelity, decrease `strength`. In most cases, `strength=0.4` works best. We recommend keeping `strength` no greater than `0.6`.
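The recommendations above can be captured in a small lookup. This is a minimal sketch under stated assumptions: `STRENGTH_GUIDE` and `choose_strength` are hypothetical names that do not appear in the MoMA code, and capping a requested value at the recommended maximum is an illustrative choice, not something the repository is known to enforce. Only the numeric defaults and upper limits come from this README.

```python
from typing import Optional

# Recommended values taken from the README; the dict itself is hypothetical.
STRENGTH_GUIDE = {
    "changing context": {"default": 1.0, "max": 1.2},
    "changing texture": {"default": 0.4, "max": 0.6},
}


def choose_strength(task: str, strength: Optional[float] = None) -> float:
    """Return the recommended default, or cap a requested strength at the
    recommended maximum for the given task."""
    guide = STRENGTH_GUIDE[task]
    if strength is None:
        return guide["default"]
    # Assumption: values above the recommended maximum are capped, not rejected.
    return min(strength, guide["max"])


print(choose_strength("changing context"))       # recommended default
print(choose_strength("changing texture", 0.9))  # capped at the recommended maximum
```

For "changing texture", passing a smaller value than the default trades detail accuracy for prompt fidelity, as described above.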

If you find our work useful for your research and applications, please consider citing us:

    @article{song2024moma,
      title={MoMA: Multimodal LLM Adapter for Fast Personalized Image Generation},
      author={Song, Kunpeng and Zhu, Yizhe and Liu, Bingchen and Yan, Qing and Elgammal, Ahmed and Yang, Xiao},
      journal={arXiv preprint arXiv:2404.05674},
      year={2024}
    }