By Weiyang Liu*, Zhen Liu*, James Rehg, Le Song (* equal contribution)

NSL is released under the MIT License (refer to the LICENSE file for details).

- Code for image classification on CIFAR-10/100

- Code for Self-Attention SphereNet (Global Neural Similarity)

- Code for few-shot classification on Mini-ImageNet

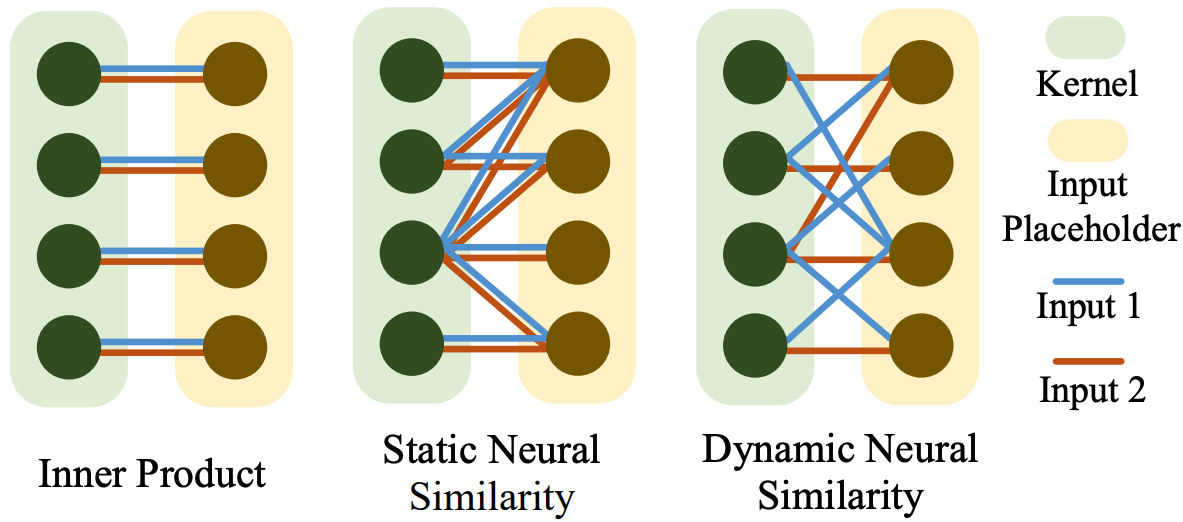

Inner product-based convolution has been the cornerstone of convolutional neural networks (CNNs), enabling end-to-end learning of visual representations. By generalizing the inner product with a bilinear matrix, we propose the neural similarity, which serves as a learnable parametric similarity measure for CNNs. Neural similarity naturally generalizes convolution and enhances flexibility. Further, we consider neural similarity learning (NSL) in order to learn the neural similarity adaptively from training data. Specifically, we propose two different ways of learning the neural similarity: static NSL and dynamic NSL. Interestingly, dynamic neural similarity turns the CNN into a dynamic inference network. By regularizing the bilinear matrix, NSL can be viewed as learning the shape of the kernel and the similarity measure simultaneously.
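The idea of replacing the inner product with a bilinear form can be illustrated with a small NumPy sketch. This is not the code in this repository: the function names, the 3x3 patch size, the cross-shaped mask, and the toy predictor are all assumptions made for illustration. It shows that an identity bilinear matrix recovers the ordinary inner product, that a diagonal matrix with zeros acts like a mask on the kernel shape, and that a dynamic variant can predict the matrix from the input patch.

```python
import numpy as np


def inner_product_similarity(w, x):
    """Ordinary convolution response for one flattened patch: w^T x."""
    return float(w @ x)


def neural_similarity(w, x, M):
    """Bilinear similarity w^T M x (M is the bilinear matrix)."""
    return float(w @ M @ x)


def dynamic_neural_similarity(w, x, predictor):
    """Dynamic variant: the bilinear matrix is predicted from the input patch."""
    M = predictor(x)
    return float(w @ M @ x)


rng = np.random.default_rng(0)
d = 9  # assumption: a flattened 3x3 single-channel patch
w = rng.standard_normal(d)  # kernel
x = rng.standard_normal(d)  # input patch

# With M = I the bilinear form reduces to the plain inner product.
identity_response = neural_similarity(w, x, np.eye(d))

# A diagonal M with 0/1 entries masks kernel positions, i.e. changes the
# effective kernel shape (here a hypothetical cross-shaped 3x3 kernel).
mask = np.array([0, 1, 0, 1, 1, 1, 0, 1, 0], dtype=float)
masked_response = neural_similarity(w, x, np.diag(mask))

# Hypothetical predictor for the dynamic case: a fixed linear map followed by
# a sigmoid, producing an input-dependent diagonal matrix.
A = 0.1 * rng.standard_normal((d, d))


def toy_predictor(patch):
    gates = 1.0 / (1.0 + np.exp(-(A @ patch)))
    return np.diag(gates)


dynamic_response = dynamic_neural_similarity(w, x, toy_predictor)
```

In this sketch the static case corresponds to a fixed `M` shared by all inputs, while the dynamic case recomputes `M` for every patch, which is why the resulting network behaves as a dynamic inference network.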

Our paper was accepted to NeurIPS 2019. The full paper is available on arXiv.

If you find our work useful in your research, please consider citing:

    @InProceedings{LiuNIPS19,
      title={Neural Similarity Learning},
      author={Liu, Weiyang and Liu, Zhen and Rehg, James and Song, Le},
      booktitle={NeurIPS},
      year={2019}
    }

- Python 3.7
- TensorFlow (tested on version 1.14)
- numpy

- Clone the repository.

git clone https://github.com/wy1iu/NSL.git

- Run the bash script.

./dataset_setup.sh

- Training NSL on CIFAR-10 (e.g., dynamic neural similarity network with DNS)

    # $NSL_ROOT is the directory for this repository
    cd $NSL_ROOT/nsl_image_recog/experiments_cifar10/dynamic_dns/
    python train.py

- To train the other models on CIFAR-10, change the folder to the corresponding one.

- Training NSL on CIFAR-100 (e.g., dynamic neural similarity network with DNS)

    cd $NSL_ROOT/nsl_image_recog/experiments_cifar100/dynamic_dns/
    python train.py

- Best-performing model: NSL with full SphereNet as the neural similarity predictor

    cd $NSL_ROOT/nsl_image_recog/nsl_fspherenet/
    python train_nsl_fs.py

- We implement a self-attention SphereNet as an example of global neural similarity (described in Appendix B of our paper).

    cd $NSL_ROOT/nsl_global_ns
    python train_sa.py