By Weiyang Liu, Yandong Wen, Zhiding Yu, Meng Yang

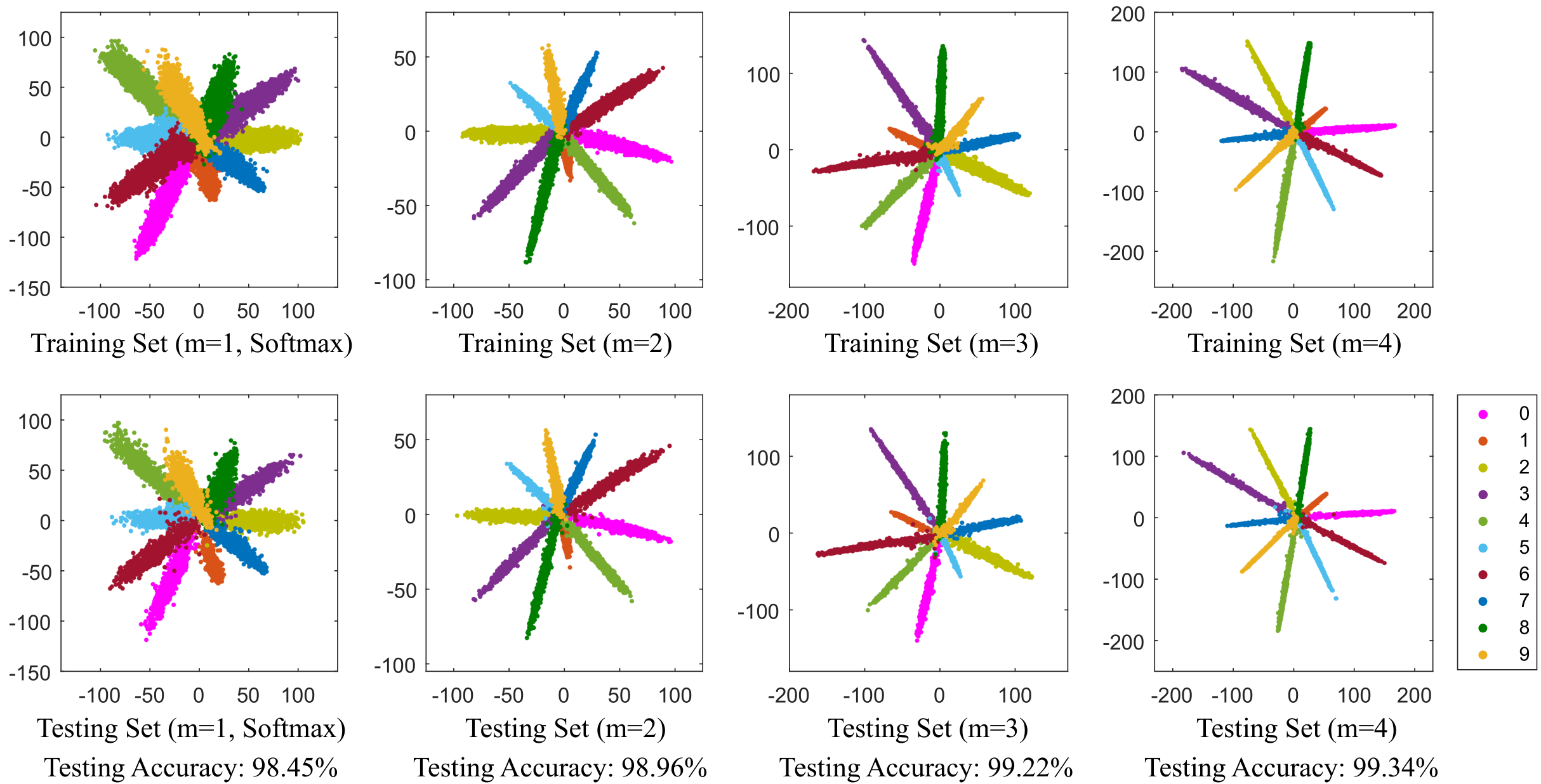

We introduce a large-margin softmax (L-Softmax) loss for convolutional neural networks. L-Softmax loss can greatly improve the generalization ability of CNNs, which makes it well suited to general classification, feature embedding and biometric (e.g. face) verification. The 2D feature visualization on MNIST above illustrates the effect of L-Softmax loss.

The paper was published at ICML 2016 and is also available on arXiv.

If the code helps your research, please consider citing our work:

Large-Margin Softmax Loss for Convolutional Neural Networks

Weiyang Liu, Yandong Wen, Zhiding Yu and Meng Yang

Proceedings of The 33rd International Conference on Machine Learning. 2016: 507-516.

@inproceedings{liu2016large,

title={Large-Margin Softmax Loss for Convolutional Neural Networks},

author={Liu, Weiyang and Wen, Yandong and Yu, Zhiding and Yang, Meng},

booktitle={Proceedings of The 33rd International Conference on Machine Learning},

pages={507--516},

year={2016}

}

- MXNet: code by luoyetx.

- TensorFlow (with C++ API): code by Changan Wang.

- TensorFlow: code by auroua.

- Caffe2: code by tpys.

- PyTorch: code by Amir H. Farzaneh.

- PyTorch: code by jihunchoi.

We greatly appreciate the contributions of all the third-party re-implementations!

- 2017/1/23 Fixed a bug where lambda_min could change during backprop. Thanks luoyetx!

- 2016/12/18 The repository is officially built.

- Caffe library

- L-Softmax Loss

- src/caffe/proto/caffe.proto

- include/caffe/layers/largemargin_inner_prodcut_layer.hpp

- src/caffe/layers/largemargin_inner_prodcut_layer.cpp

- src/caffe/layers/largemargin_inner_prodcut_layer.cu

- mnist example

- myexamples/mnist/mnist_train_lmdb

- myexamples/mnist/mnist_test_lmdb

- myexamples/mnist/model/mnist_train_test.prototxt

- myexamples/mnist/mnist_solver.prototxt

- cifar10 example

- myexamples/cifar10/model/cifar_train_test.prototxt

- myexamples/cifar10/cifar_solver.prototxt

- cifar10+ example

- myexamples/cifar10+/model/cifar_train_test.prototxt

- myexamples/cifar10+/cifar_solver.prototxt

The prototxt of LargeMarginInnerProduct layer is as follows:

    layer {
      name: "ip2"
      type: "LargeMarginInnerProduct"
      bottom: "ip1"
      bottom: "label"
      top: "ip2"
      top: "lambda"
      param {
        name: "ip2"
        lr_mult: 1
      }
      largemargin_inner_product_param {
        num_output: 10   # number of outputs
        type: QUADRUPLE  # value of m
        # only SINGLE (m=1), DOUBLE (m=2), TRIPLE (m=3) and QUADRUPLE (m=4) are available.
        base: 1000
        gamma: 0.000025
        power: 35
        iteration: 0
        lambda_min: 0
        # base, gamma, power and lambda_min are parameters of exponential lambda descent
        weight_filler {
          type: "msra"
        }
      }
      include {
        phase: TRAIN
      }
    }

For specific examples, please refer to the myexamples/mnist folder.
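To make the role of `type` (the margin m) and the `lambda` output more concrete, here is a minimal Python sketch of the target-class logit. It assumes the piecewise margin function ψ and the lambda-blended logit as given in the ICML'16 paper. The function names `psi` and `target_logit` are hypothetical and do not come from the Caffe layer; this is an illustration, not the layer's actual implementation.

```python
import math


def psi(theta, m):
    """Margin function assumed from the paper:
    psi(theta) = (-1)^k * cos(m * theta) - 2k  for theta in [k*pi/m, (k+1)*pi/m].
    """
    # Clamp k so that theta == pi falls into the last interval (k = m - 1).
    k = min(int(theta * m / math.pi), m - 1)
    return (-1) ** k * math.cos(m * theta) - 2 * k


def target_logit(w_norm, x_norm, theta, m, lam):
    """Target-class logit blended between plain softmax and L-Softmax.

    A large lam approaches the ordinary inner product |W||x|cos(theta);
    lam = 0 gives the pure margin logit |W||x|psi(theta).
    """
    plain = w_norm * x_norm * math.cos(theta)
    margin = w_norm * x_norm * psi(theta, m)
    return (lam * plain + margin) / (1.0 + lam)


if __name__ == "__main__":
    theta = 0.5  # angle between the feature and the target-class weight, in radians
    for m in (1, 2, 3, 4):  # SINGLE, DOUBLE, TRIPLE, QUADRUPLE
        print(m, target_logit(1.0, 1.0, theta, m, lam=0.0))
```

With m=1 the margin function reduces to cos(theta), so the layer behaves like an ordinary inner product. Larger m lowers the target logit for the same angle, which is what makes the classification criterion stricter.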

- L-Softmax loss is the combination of "LargeMarginInnerProduct" layer and "SoftmaxWithLoss" layer.

- If the type of the layer is SINGLE/DOUBLE/TRIPLE/QUADRUPLE, then m is set as 1/2/3/4 respectively.

- The mnist example can be run directly after compilation. The cifar10 and cifar10+ examples require the datasets to be downloaded first.

- base, gamma, power and lambda_min are the parameters of the exponential lambda descent. lambda represents the approximation level to the proposed L-Softmax loss (refer to the experimental details in the ICML'16 paper) and is decreased according to the equation lambda = max(lambda_min, base*(1+gamma*iteration)^(-power)). It is strongly recommended that you visualize the lambda descent function before using the loss. Parameter selection is very flexible; typically, lambda should be a sufficiently small value by the time the optimization finishes. Also note that lambda is not always necessary: on the MNIST dataset, L-Softmax loss can work without lambda. Setting base to 0 removes lambda.

- lambda_min can vary according to the difficulty of the dataset. For easy datasets such as mnist and cifar10, lambda_min can be zero. For large and difficult datasets, first try setting lambda_min to 5 or 10. There is no specific rule for choosing lambda_min, but in general it should be as small as possible.

- Both ReLU and PReLU work well with L-Softmax loss. Empirically, PReLU helps L-Softmax converge more easily.

- Batch normalization can help the L-Softmax network converge much more easily, and it is strongly recommended. However, there are cases where batch normalization is not needed, e.g. SphereFace-20.

- Some users have reported that training the network with the original softmax loss and then finetuning it with L-Softmax loss or A-Softmax loss can eliminate the network divergence problem. This is essentially equivalent to first setting an extremely large lambda (i.e. training the network with the original softmax loss) and then setting lambda to a very small value in the last training stage (i.e. finetuning the network with L-Softmax loss or A-Softmax loss).
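The notes above recommend inspecting the lambda descent before training. A minimal Python sketch of the stated equation follows, using the values from the example prototxt as defaults. The function name `lambda_schedule` is hypothetical and not part of the repository.

```python
def lambda_schedule(iteration, base=1000.0, gamma=0.000025, power=35.0, lambda_min=0.0):
    """lambda = max(lambda_min, base * (1 + gamma * iteration)^(-power))."""
    return max(lambda_min, base * (1.0 + gamma * iteration) ** (-power))


if __name__ == "__main__":
    # Print lambda at a few iterations to see how quickly it decays.
    for it in (0, 1000, 5000, 10000, 20000, 50000):
        print(f"iter {it:>6d}: lambda = {lambda_schedule(it):.6f}")
```

To plot the curve rather than print it, evaluate the same function over a range of iterations and pass the values to any plotting tool. Check that lambda reaches the value you intend, bounded below by lambda_min, before the solver's final iteration.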

If you have any questions, feel free to contact:

- Weiyang Liu (wyliu@gatech.edu)

- Yandong Wen (yandongw@andrew.cmu.edu)

Copyright(c) Weiyang Liu and Yandong Wen All rights reserved.

MIT License

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED AS IS, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.