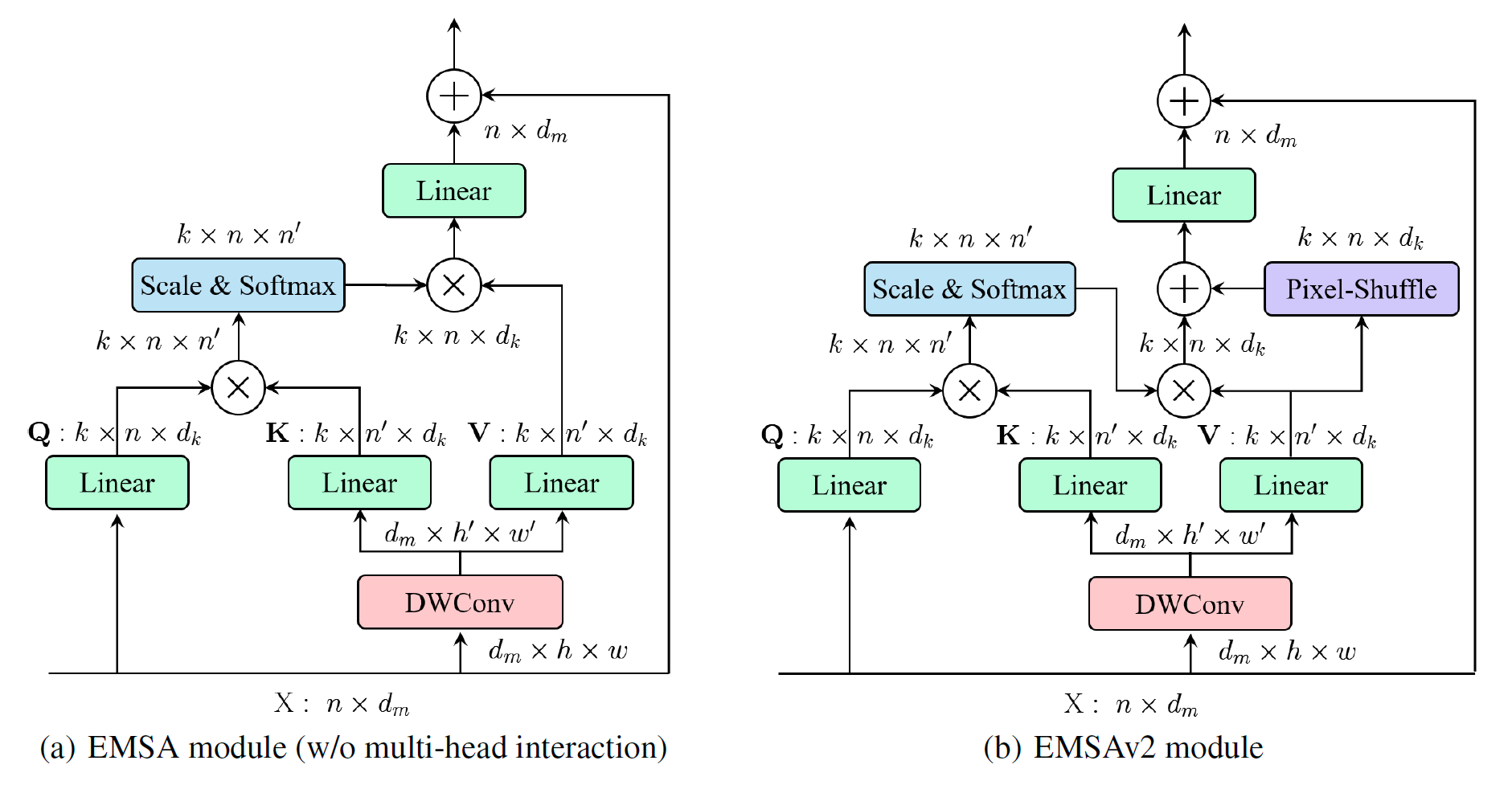

- (2022/05/10) Code of ResTv2 is released! ResTv2 simplifies the EMSA structure in ResTv1 (i.e., it eliminates the multi-head interaction part) and employs an upsample operation to reconstruct the medium- and high-frequency information lost in the downsampling operation.

Official PyTorch implementation of ResTv1 and ResTv2, from the following papers:

ResT: An Efficient Transformer for Visual Recognition. NeurIPS 2021.

ResT V2: Simpler, Faster and Stronger. NeurIPS 2022.

By Qing-Long Zhang and Yu-Bin Yang

State Key Laboratory for Novel Software Technology at Nanjing University

ResTv1 was initially described on arXiv and serves as a general-purpose backbone for computer vision. It can handle input images of arbitrary size. In addition, ResT compresses the memory of standard MSA and models the interaction between attention heads while preserving their diversity.
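To make the memory saving concrete, the sketch below counts the entries in one head's attention map for standard MSA and for an attention whose keys and values are spatially downsampled. It assumes the compression works by shrinking the key/value token grid by a factor `s` per side. The function name, the patch size of 4 and `s = 8` are illustrative choices, not values taken from this repository.

```python
def attention_entries(height, width, patch=4, s=1):
    """Count entries in one head's attention map.

    Queries use the full token grid; keys/values use a grid reduced by
    `s` per side (s=1 corresponds to standard MSA). All values here are
    illustrative assumptions, not the repository's configuration.
    """
    q_tokens = (height // patch) * (width // patch)
    kv_tokens = (height // patch // s) * (width // patch // s)
    return q_tokens * kv_tokens


standard = attention_entries(224, 224, s=1)
compressed = attention_entries(224, 224, s=8)
print(standard, compressed, standard // compressed)
```

Under these assumptions the attention map shrinks by a factor of `s * s`, since only the key/value side of the map is reduced.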

- ImageNet-1K Training Code

- ImageNet-1K Fine-tuning Code

- Downstream Transfer (Detection, Segmentation) Code

| name | resolution | acc@1 | #params | FLOPs | Throughput | model |
|---|---|---|---|---|---|---|
| ResTv1-Lite | 224x224 | 77.2 | 11M | 1.4G | 1246 | baidu |
| ResTv1-S | 224x224 | 79.6 | 14M | 1.9G | 1043 | baidu |
| ResTv1-B | 224x224 | 81.6 | 30M | 4.3G | 673 | baidu |
| ResTv1-L | 224x224 | 83.6 | 52M | 7.9G | 429 | baidu |
| ResTv2-T | 224x224 | 82.3 | 30M | 4.1G | 826 | baidu |
| ResTv2-T | 384x384 | 83.7 | 30M | 12.7G | 319 | baidu |
| ResTv2-S | 224x224 | 83.2 | 41M | 6.0G | 687 | baidu |
| ResTv2-S | 384x384 | 84.5 | 41M | 18.4G | 256 | baidu |
| ResTv2-B | 224x224 | 83.7 | 56M | 7.9G | 582 | baidu |
| ResTv2-B | 384x384 | 85.1 | 56M | 24.3G | 210 | baidu |
| ResTv2-L | 224x224 | 84.2 | 87M | 13.8G | 415 | baidu |
| ResTv2-L | 384x384 | 85.4 | 87M | 42.4G | 141 | baidu |

Note: The access code for baidu is `rest`. Pretrained models of ResTv1 are now also available on Google Drive.
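As a quick sanity check on the table, the sketch below compares how FLOPs grow between the 224x224 and 384x384 rows with the growth in pixel count. The numbers are copied from the ResTv2 rows above; the dictionary layout and variable names are only for illustration.

```python
# FLOPs in G for ResTv2 variants, copied from the table: (224x224, 384x384).
flops = {
    "ResTv2-T": (4.1, 12.7),
    "ResTv2-S": (6.0, 18.4),
    "ResTv2-B": (7.9, 24.3),
    "ResTv2-L": (13.8, 42.4),
}

# Ratio of pixel counts between the two input resolutions.
pixel_ratio = (384 * 384) / (224 * 224)

# Ratio of FLOPs between the two input resolutions, per variant.
flops_ratio = {name: high / low for name, (low, high) in flops.items()}

for name, ratio in flops_ratio.items():
    print(f"{name}: FLOPs x{ratio:.2f} vs pixels x{pixel_ratio:.2f}")
```

For every variant the FLOPs grow slightly faster than the pixel count, which may reflect an attention cost that scales super-linearly with the number of tokens.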

Please check INSTALL.md for installation instructions.

We give an example evaluation command for an ImageNet-1K pre-trained, then ImageNet-1K fine-tuned ResTv2-T:

Single-GPU

```
python main.py --model restv2_tiny --eval true \
--resume restv2_tiny_384.pth \
--input_size 384 --drop_path 0.1 \
--data_path /path/to/imagenet-1k
```

This should give

```
* Acc@1 83.708 Acc@5 96.524 loss 0.777
```
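When evaluating several checkpoints, it can be convenient to assemble the command above programmatically. The sketch below is a hypothetical helper, not part of this repository: `build_eval_command` and its parameter names are assumptions, and only the flags shown in the example command are used.

```python
import shlex


def build_eval_command(model, checkpoint, input_size, data_path, drop_path=0.1):
    """Assemble the evaluation command as an argument list (hypothetical helper)."""
    return [
        "python", "main.py",
        "--model", model,
        "--eval", "true",
        "--resume", checkpoint,
        "--input_size", str(input_size),
        "--drop_path", str(drop_path),
        "--data_path", data_path,
    ]


cmd = build_eval_command(
    "restv2_tiny", "restv2_tiny_384.pth", 384, "/path/to/imagenet-1k"
)
# Print a shell-safe, single-line version of the command.
print(shlex.join(cmd))
```

The argument list can be passed directly to `subprocess.run(cmd, check=True)` from the repository root.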

- For evaluating other model variants, change `--model`, `--resume`, `--input_size` accordingly. You can get the URLs of the pre-trained models from the table above.
- Setting a model-specific `--drop_path` is not strictly required in evaluation, as the `DropPath` module in timm behaves the same during evaluation; but it is required in training. See TRAINING.md or our paper for the values used for different models.
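The reason `--drop_path` does not affect evaluation can be seen in a minimal pure-Python sketch of stochastic depth. This illustrates the general technique and is not timm's actual code: the function name, the list-based batch and the `rng` parameter are assumptions made for the example.

```python
import random


def drop_path(batch, drop_prob, training, rng=random):
    """Per-sample stochastic depth on a batch given as a list of lists.

    In training, each sample is zeroed with probability `drop_prob` and
    surviving samples are rescaled by 1 / keep_prob. In evaluation the
    input is returned unchanged, whatever `drop_prob` is.
    """
    if not training or drop_prob == 0.0:
        return batch
    keep_prob = 1.0 - drop_prob
    out = []
    for sample in batch:
        keep = rng.random() < keep_prob
        scale = 1.0 / keep_prob if keep else 0.0
        out.append([value * scale for value in sample])
    return out


batch = [[1.0, 2.0], [3.0, 4.0]]
# Evaluation: the drop rate has no effect on the output.
eval_low = drop_path(batch, drop_prob=0.1, training=False)
eval_high = drop_path(batch, drop_prob=0.5, training=False)
# Training: each sample is either dropped or rescaled.
train_out = drop_path(batch, drop_prob=0.5, training=True, rng=random.Random(0))
```

Because the evaluation branch returns its input untouched, any `drop_prob` value yields the same predictions, whereas in training the value changes how often a residual branch is skipped.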

See TRAINING.md for training and fine-tuning instructions.

This repository is built using the timm library.

This project is released under the Apache License 2.0. Please see the LICENSE file for more information.

If you find this repository helpful, please consider citing:

ResTv1

```
@inproceedings{zhang2021rest,
  title={ResT: An Efficient Transformer for Visual Recognition},
  author={Qinglong Zhang and Yu-bin Yang},
  booktitle={Advances in Neural Information Processing Systems},
  year={2021},
  url={https://openreview.net/forum?id=6Ab68Ip4Mu}
}
```

ResTv2

```
@article{zhang2022rest,
  title={ResT V2: Simpler, Faster and Stronger},
  author={Zhang, Qing-Long and Yang, Yu-Bin},
  journal={arXiv preprint arXiv:2204.07366},
  year={2022}
}
```

[2022/05/26] ResT and ResTv2 have been integrated into PaddleViT; check out here for the third-party implementation on the Paddle framework!