This repository is the implementation of RECAP: Towards Precise Radiology Report Generation via Dynamic Disease Progression Reasoning. Before running the code, please install the prerequisite libraries, and follow our instructions to replicate the experiments.

- [2024/01/13] Checkpoints (Stage 1 and Stage 2) for the MIMIC-ABN dataset are available at Google Drive

- [2024/01/12] Checkpoints (Stage 1 and Stage 2) for the MIMIC-CXR dataset are available at Google Drive

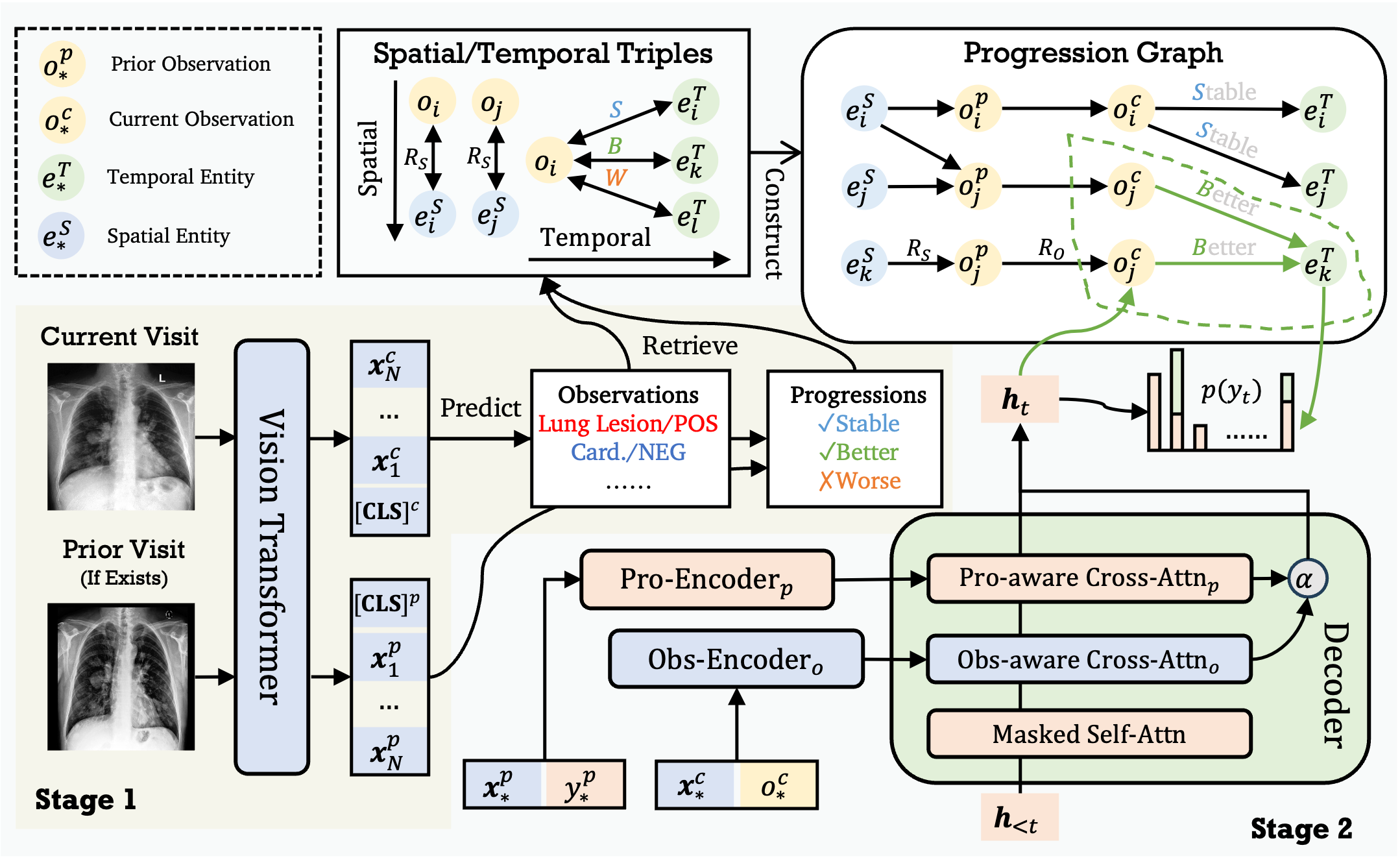

Automating radiology report generation can significantly alleviate radiologists' workloads. Previous research has primarily focused on realizing highly concise observations while neglecting the precise attributes that determine the severity of diseases (e.g., small pleural effusion). Since incorrect attributes will lead to imprecise radiology reports, strengthening the generation process with precise attribute modeling becomes necessary. Additionally, the temporal information contained in the historical records, which is crucial in evaluating a patient's current condition (e.g., heart size is unchanged), has also been largely disregarded. To address these issues, we propose Recap, which generates precise and accurate radiology reports via dynamic disease progression reasoning. Specifically, Recap first predicts the observations and progressions (i.e., spatiotemporal information) given two consecutive radiographs. It then combines the historical records, spatiotemporal information, and radiographs for report generation, where a disease progression graph and dynamic progression reasoning mechanism are devised to accurately select the attributes of each observation and progression. Extensive experiments on two publicly available datasets demonstrate the effectiveness of our model.

- torch==1.9.1
- transformers==4.24.0
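As a quick sanity check, the pinned versions above can be compared with what is installed in the current environment. This is a minimal sketch and not part of the repository; the helper name `check_pins` and the `PINS` dictionary are illustrative.

```python
from importlib import metadata

# Pins copied from the requirement list above.
PINS = {"torch": "1.9.1", "transformers": "4.24.0"}


def check_pins(pins, get_version=metadata.version):
    """Return {package: (expected, found)} for every package that does not match.

    `found` is None when the package is not installed.
    """
    mismatches = {}
    for name, expected in pins.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            found = None
        # Local build tags such as "1.9.1+cu111" are treated as matching "1.9.1".
        if found is None or found.split("+")[0] != expected:
            mismatches[name] = (expected, found)
    return mismatches


if __name__ == "__main__":
    for name, (expected, found) in check_pins(PINS).items():
        print(f"{name}: expected {expected}, found {found}")
```

An empty result means both packages match the pins; anything printed points at a package to reinstall.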

Please download the two datasets: MIMIC-ABN and MIMIC-CXR, and put the annotation files into the data folder.

- For observation preprocessing, we use CheXbert to extract relevant observation information. Please follow the CheXbert instructions to extract the observation tags.

- For progression preprocessing, we adopt Chest ImaGenome to extract relevant observation information.

- For entity preprocessing, we use RadGraph to extract relevant entities.

- For CE evaluation, please clone CheXbert into the folder and download the checkpoint chexbert.pth into CheXbert:

git clone https://github.com/stanfordmlgroup/CheXbert.git
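Clinical efficacy (CE) scores are commonly reported as precision, recall, and F1 over the observation labels that CheXbert assigns to the reference and generated reports. The sketch below shows a micro-averaged variant computed on already-extracted binary labels. It is an illustration under that assumption, not the repository's evaluation script; the function name `micro_prf` and the toy labels are hypothetical.

```python
def micro_prf(reference, generated):
    """Micro-averaged precision, recall and F1 over binary label matrices.

    Both arguments are lists of equal-length 0/1 lists: one row per report,
    one column per observation (1 = observation labelled positive).
    """
    tp = fp = fn = 0
    for ref_row, gen_row in zip(reference, generated):
        for ref, gen in zip(ref_row, gen_row):
            if gen and ref:
                tp += 1  # observation present in both reports
            elif gen and not ref:
                fp += 1  # observation only in the generated report
            elif ref and not gen:
                fn += 1  # observation missed by the generated report
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy example: 2 reports, 3 observations (hypothetical labels).
reference = [[1, 0, 1], [0, 1, 0]]
generated = [[1, 1, 0], [0, 1, 0]]
print(micro_prf(reference, generated))
```

In the toy example there are two true positives, one false positive, and one false negative, so all three scores come out at roughly 0.67.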

We share the encrypted reports for the MIMIC-CXR and MIMIC-ABN datasets. To decrypt the reports, you will need to download the mimic-cxr-2.0.0-split.csv.gz from here.

We recover the data split of MIMIC-ABN according to the study_id provided by the MIMIC-CXR dataset. We provide example code as a reference. For preprocessing, please run the following command, changing the data locations to match your setup:

python src_preprocessing/run_abn_preprocess.py \
    --mimic_cxr_annotation data/mimic_cxr_annotation.json \
    --mimic_abn_annotation data/mimic_abn_annotation.json \
    --image_path data/mimic_cxr/images/ \
    --output_path data/mimic_abn_annotation_processed.json
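The split recovery described above amounts to a lookup from `study_id` to the official split. The following is a minimal sketch of that idea and not the logic of `run_abn_preprocess.py`. It assumes the split file exposes `study_id` and `split` columns and that each MIMIC-ABN record carries a `study_id` field; the function names and the record layout are hypothetical.

```python
import csv
import gzip
from collections import defaultdict


def load_study_splits(split_csv_gz):
    """Map study_id -> split name from a gzipped CSV.

    Assumes the file has `study_id` and `split` columns.
    """
    study_to_split = {}
    with gzip.open(split_csv_gz, "rt", newline="") as handle:
        for row in csv.DictReader(handle):
            study_to_split[str(row["study_id"])] = row["split"]
    return study_to_split


def assign_splits(records, study_to_split):
    """Group records by the split of their study; unknown studies are skipped."""
    by_split = defaultdict(list)
    for record in records:
        split = study_to_split.get(str(record["study_id"]))
        if split is not None:
            by_split[split].append(record)
    return dict(by_split)
```

Converting `study_id` to a string on both sides avoids mismatches when one file stores it as an integer and the other as text.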

Model weights trained on the two datasets are available at:

- MIMIC-ABN: Google Drive

- MIMIC-CXR: Google Drive

Recap is a two-stage framework, as shown in the figure above. The snippets below train and test Recap on MIMIC-ABN, running Stage 1 first and then Stage 2.

chmod +x script_stage1/run_mimic_abn.sh

./script_stage1/run_mimic_abn.sh 1

chmod +x script_stage2/run_mimic_abn.sh

./script_stage2/run_mimic_abn.sh 1

If you use Recap, please cite our paper:

@inproceedings{hou-etal-2023-recap,
    title = "{RECAP}: Towards Precise Radiology Report Generation via Dynamic Disease Progression Reasoning",
    author = "Hou, Wenjun and Cheng, Yi and Xu, Kaishuai and Li, Wenjie and Liu, Jiang",
    booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2023",
    month = dec,
    year = "2023",
    address = "Singapore",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.findings-emnlp.140",
    doi = "10.18653/v1/2023.findings-emnlp.140",
    pages = "2134--2147",
}