The official repository for the paper *Evaluation of Retrieval-Augmented Generation: A Survey* (arXiv).

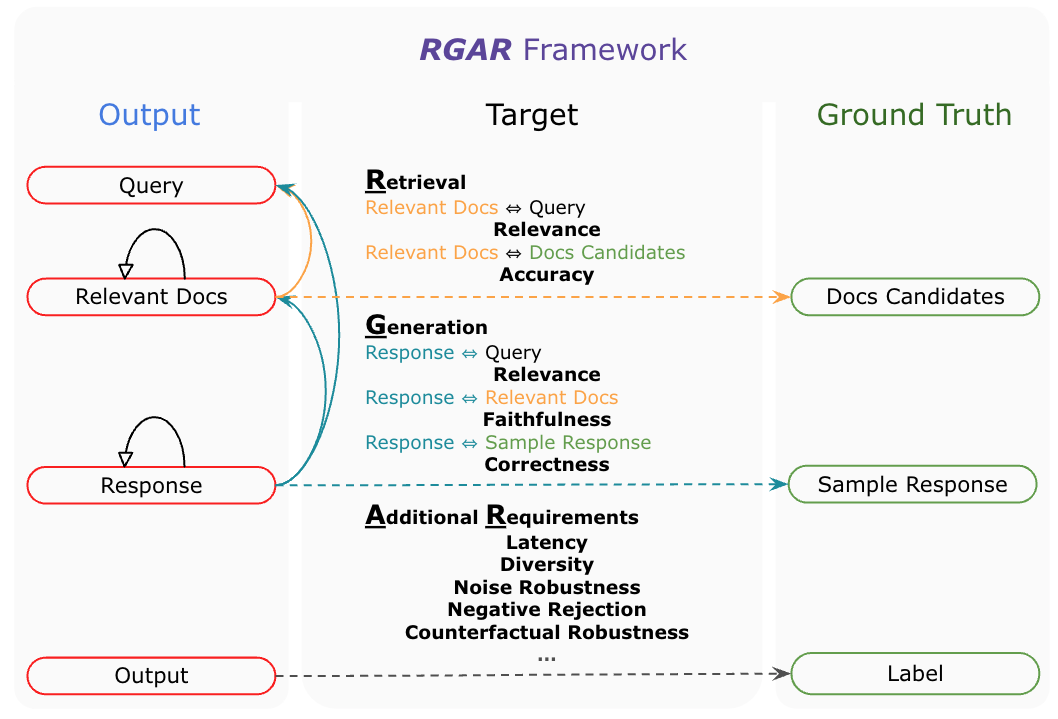

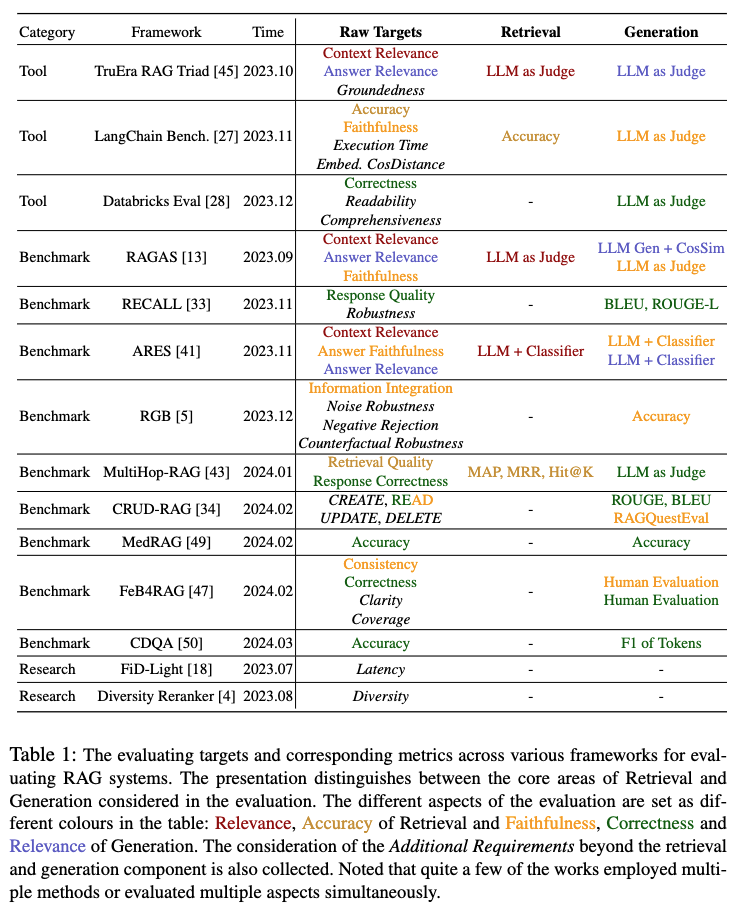

Retrieval-Augmented Generation (RAG) has emerged as a pivotal innovation in natural language processing, enhancing generative models by incorporating external information retrieval. Evaluating RAG systems, however, poses distinct challenges because of their hybrid structure and their reliance on dynamic knowledge sources. We therefore conduct an extensive survey and propose RGAR (Retrieval, Generation, Additional Requirement), an analysis framework designed to systematically analyze RAG benchmarks by focusing on measurable outputs and established ground truths. Specifically, we scrutinize and contrast quantifiable metrics for the Retrieval and Generation components, such as relevance, accuracy, and faithfulness, across the internal links of current RAG evaluation methods, covering all possible pairs of outputs and ground truths. We also analyze how different works integrate additional requirements. Finally, we discuss the limitations of current benchmarks and propose directions for further research to address these shortcomings and advance the field of RAG evaluation.

- The target modules of the RGAR framework. The retrieval and generation components are highlighted in red and green, respectively.
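To make the idea of scoring an output against a ground truth concrete, here is a minimal sketch of two widely used metrics. It pairs retrieved document IDs with ground-truth relevant IDs (precision and recall at k, one common way to measure retrieval accuracy) and pairs a generated response with a reference answer (SQuAD-style token-overlap F1, one common correctness score). These are generic, illustrative choices and not the specific metric definitions catalogued in the survey. The function names `precision_recall_at_k` and `token_f1` are hypothetical, and the whitespace tokenization is a simplifying assumption.

```python
from collections import Counter


def precision_recall_at_k(retrieved, relevant, k):
    """Retrieval side: compare the top-k retrieved doc IDs with the ground-truth relevant set.

    Illustrative sketch only; `retrieved` is a ranked list, `relevant` is a set of IDs.
    """
    top_k = retrieved[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    precision = hits / k if k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def token_f1(response, reference):
    """Generation side: token-overlap F1 between a response and a reference answer.

    Assumes lowercase whitespace tokenization (a simplification; real
    benchmarks usually also strip punctuation and articles).
    """
    pred = response.lower().split()
    gold = reference.lower().split()
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred) & Counter(gold)).values())
    if overlap == 0:
        return 0.0
    p = overlap / len(pred)
    r = overlap / len(gold)
    return 2 * p * r / (p + r)


if __name__ == "__main__":
    print(precision_recall_at_k(["d3", "d1", "d7", "d2"], {"d1", "d2"}, k=3))
    print(token_f1("the capital Paris", "Paris"))
```

For the example retrieval, one of the top three documents is relevant, so precision at 3 is 1/3 and recall is 1/2. The response "the capital Paris" has a token F1 of 0.5 against "Paris", because its precision is 1/3 and its recall is 1. Metrics for other pairs, such as faithfulness of a response to the retrieved documents, usually need model-based judges rather than token overlap.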