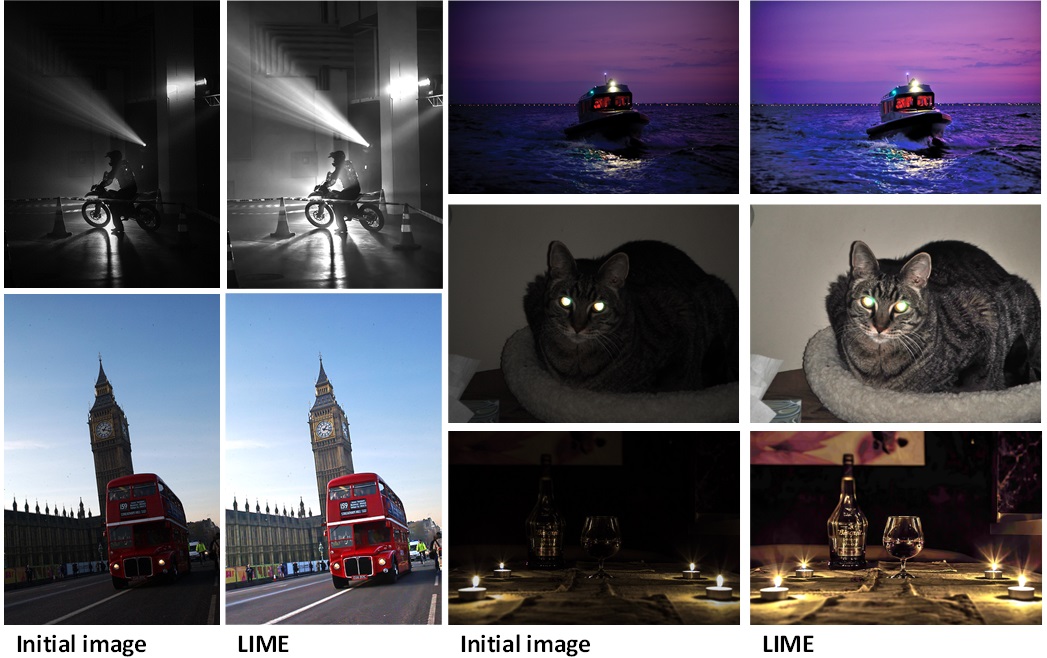

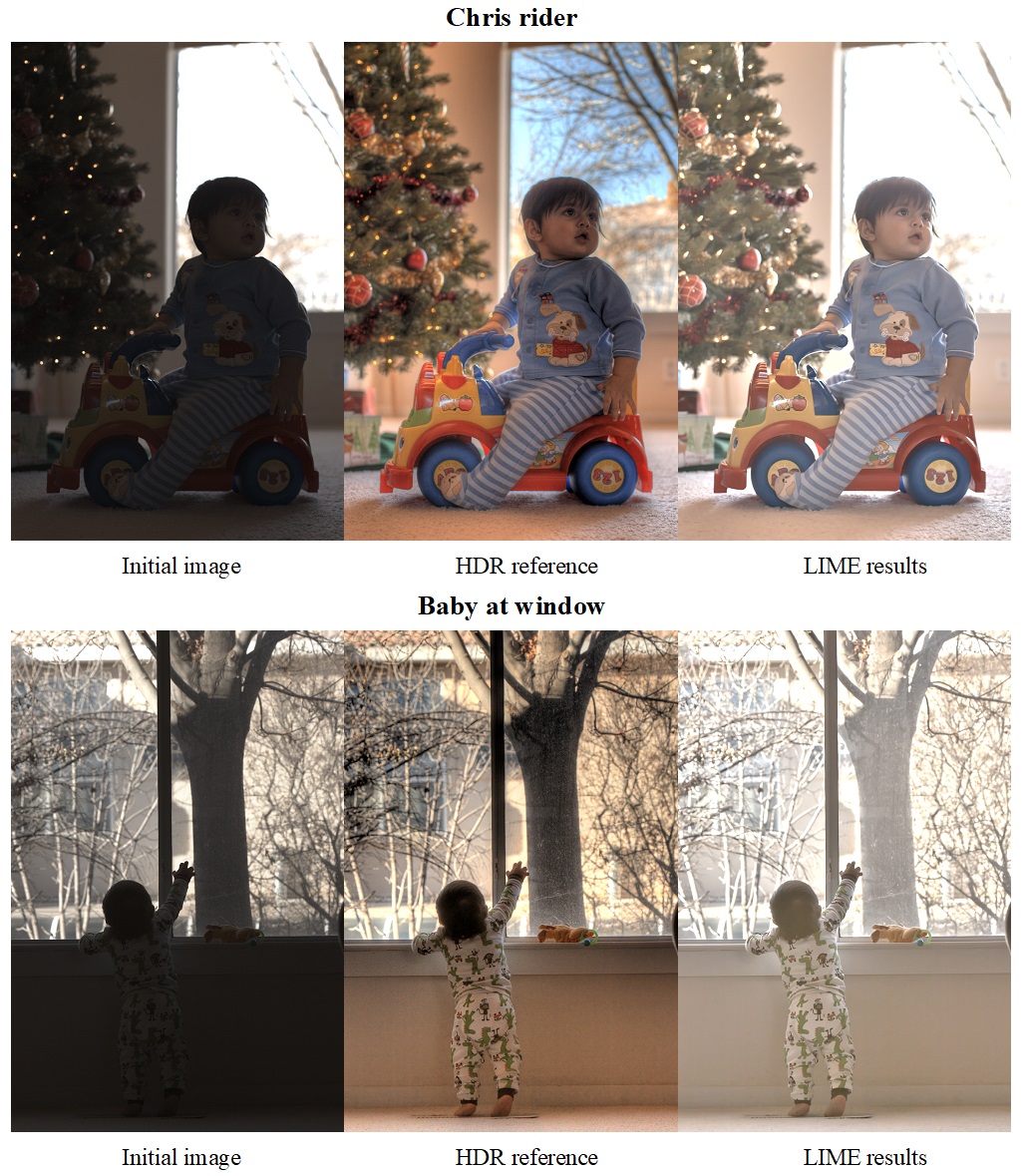

Implementation of the sped-up solver of the LIME image enhancement algorithm from the paper "LIME: Low-Light Image Enhancement via Illumination Map Estimation" [1] applied to images from the Exclusively Dark Image Dataset [2] and quantitatively evaluated on the HDR Dataset [3].

Images captured in low-light conditions typically suffer from reduced visibility, impacting their overall quality and visual appeal. Moreover, such conditions can result in decreased performance for computer vision and multimedia algorithms that heavily rely on high-quality inputs.

The code implements the Low-Light Image Enhancement via illumination map estimation (LIME) method, designed to enhance dark images. This method entails estimating the illumination of each pixel by identifying the maximum value in the red, green, and blue channels. Subsequently, the initial illumination map undergoes further refinement through the resolution of an optimization problem formulated by the authors of the original paper. The enhancement of lighting is achieved by applying the updated illumination map to a low-light input. The underlying concept of the method is based on the idea that every image can be represented by a pixelwise product of an illumination map and an "ideal" image.
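The two endpoints of this pipeline, the initial illumination estimate and the final division by the illumination map, can be sketched in a few lines of NumPy. This is a minimal illustration rather than the repository's code: the function names, the `gamma` exponent and the `eps` floor are assumptions introduced here, and the input is assumed to be a float RGB array scaled to [0, 1].

```python
import numpy as np

def initial_illumination(image):
    """Initial illumination map: per-pixel maximum over the R, G, B channels.

    `image` is assumed to be a float array of shape (H, W, 3) in [0, 1].
    """
    return image.max(axis=2)

def enhance(image, illumination, gamma=0.8, eps=1e-3):
    """Recover the enhanced image by dividing the input by the illumination map.

    `gamma` and `eps` are hypothetical parameters: `gamma` softens the map,
    `eps` keeps the division away from zero.
    """
    t = np.clip(illumination, eps, 1.0) ** gamma
    return np.clip(image / t[..., None], 0.0, 1.0)
```

In a full pipeline the map passed to `enhance` would be the refined one, not the initial estimate.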

When a low-light image is used as input, the method estimates its illumination map and solves the following minimization problem to recover a desired version of the low-light image.

where

Making certain assumptions, the authors distill the aforementioned minimization problem into the following equation:

where
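As a hedged summary in the notation of [1] (a reconstruction, not a verbatim quotation of the paper), the refinement problem and its sped-up form can be written as follows. The explicit placement of the factor $\alpha$ in the linear system is an assumption made here; it can equivalently be absorbed into the weights.

$$
\min_{T} \; \lVert \hat{T} - T \rVert_F^2 + \alpha \, \lVert W \circ \nabla T \rVert_1
$$

Replacing the $\ell_1$ term by the quadratic surrogate $\sum_x \sum_{d \in \{h, v\}} \tilde{W}_d(x) \, (\nabla_d T(x))^2$ with $\tilde{W}_d(x) = W_d(x) / (\lvert \nabla_d \hat{T}(x) \rvert + \epsilon)$ and setting the derivative to zero gives

$$
\Big( I + \alpha \sum_{d \in \{h, v\}} D_d^{\top} \, \mathrm{Diag}(\tilde{w}_d) \, D_d \Big) \, t = \hat{t}
$$

Here $\hat{T}$ is the initial illumination map, $T$ the refined map, $W$ the weight matrix, $\nabla$ the first-order derivative filter in the horizontal ($h$) and vertical ($v$) directions, and $\circ$ the element-wise product. The vectors $t$, $\hat{t}$ and $\tilde{w}_d$ are the vectorized versions of $T$, $\hat{T}$ and $\tilde{W}_d$, and $D_d$ are the Toeplitz matrices of the discrete gradient.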

Weight matrices are initialized by one of three strategies and then scaled using the gradient. The Toeplitz matrices look as follows:

The vectorization is implemented by vertically stacking the transposed row vectors, but it could equally be done by stacking the column vectors one after another. The Toeplitz matrices for the discrete gradient differ between these two cases. Their appearance also depends on which side of the Toeplitz matrix the vectorized term is located. The general structure of these matrices is given above. However, the rows of these matrices that correspond to elements on the boundaries of an image can deviate from the general structure; in essence, their form depends on the intended padding. To avoid introducing extra gradients on the image boundaries, the corresponding rows consist of zeros.
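One way to build such matrices for the row-by-row vectorization is with Kronecker products of a one-dimensional forward-difference operator. The sketch below is an illustration under stated assumptions, not the repository's code: the forward-difference stencil and the function names are choices made here, and the last row of each one-dimensional operator is set to zero so that no gradient is produced at the image boundary.

```python
import numpy as np
import scipy.sparse as sp

def forward_difference(n):
    """n x n forward-difference operator whose last row is all zeros."""
    main = -np.ones(n)
    main[-1] = 0.0                 # boundary row: no gradient
    upper = np.ones(n - 1)
    return sp.diags([main, upper], offsets=[0, 1], format="csr")

def gradient_operators(height, width):
    """Sparse D_h and D_v acting on an image vectorized row by row."""
    d_h = sp.kron(sp.identity(height), forward_difference(width), format="csr")
    d_v = sp.kron(forward_difference(height), sp.identity(width), format="csr")
    return d_h, d_v
```

With column-by-column vectorization the two Kronecker factors would swap places, which is why the matrices differ between the two conventions.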

The code formulates the problem for given images and automatically calculates a refined illumination map, presenting the exact solution of the equation derived by the authors.

As observed, the LIME method involves operations with matrices of large sizes. Consequently, the sped-up version of the method requires solving a massive system of linear equations. While solving a system of linear equations is a relatively straightforward task, the large dimensions of the matrices necessitate the application of specific techniques for efficient results. If the size of the input image is M by N pixels, the size of the vector would be M∙N by 1. Therefore, the corresponding size of the Toeplitz matrices would be M∙N by M∙N. Fortunately, these large matrices are sparse, and operations with sparse matrices require fewer computational resources with a proper approach. Hence, the code utilizes specific tools from the SciPy library to leverage the sparsity of matrices.
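A compact sketch of how the sparse system might be assembled and solved with SciPy is given below. It is not the repository's implementation: the function names, the default values of `alpha` and `eps`, the forward-difference stencil, and the choice of the simplest weighting (unit weights scaled by the inverse gradient magnitude) are all assumptions made for illustration.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve

def _forward_difference(n):
    """n x n forward-difference operator with a zero last row."""
    main = -np.ones(n)
    main[-1] = 0.0
    return sp.diags([main, np.ones(n - 1)], offsets=[0, 1], format="csr")

def refine_illumination(t_hat, alpha=0.15, eps=1e-3):
    """Solve (I + alpha * sum_d D_d^T Diag(w_d) D_d) t = t_hat for t.

    `t_hat` is the initial illumination map of shape (M, N); the system
    matrix has size (M*N, M*N) but only a few non-zeros per row.
    """
    h, w = t_hat.shape
    d_h = sp.kron(sp.identity(h), _forward_difference(w), format="csr")
    d_v = sp.kron(_forward_difference(h), sp.identity(w), format="csr")
    t_vec = t_hat.ravel()          # row-by-row vectorization
    system = sp.identity(h * w, format="csr")
    for d in (d_h, d_v):
        # Assumed weighting: unit weights divided by the gradient magnitude.
        weights = 1.0 / (np.abs(d @ t_vec) + eps)
        system = system + alpha * (d.T @ sp.diags(weights) @ d)
    return spsolve(system.tocsc(), t_vec).reshape(h, w)
```

A dense matrix of the same size would need (M∙N)² entries, whereas the sparse system stores only a handful of non-zeros per row.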

The performance of the LIME method is demonstrated on several images from the ExDark dataset. The algorithm is applied with fixed parameters:

Quantitative performance evaluation is a challenging problem. The lightness order error (LOE) metric employed by the authors is used.

In my personal opinion, this metric cannot be deemed a perfect solution. The reason is that the LOE value would be equal to zero for simple enhancement techniques like gamma correction or even in the absence of any illumination enhancement. Consequently, qualitatively inferior results could quantitatively outperform better ones. Another drawback of this evaluation method is its reliance on a ground truth reference, which, in the case of illumination enhancement, is a nominal and blurry term. Moreover, computation of this metric is relatively expensive due to its complexity. Therefore, it is recommended to down-sample images before computation, with the suggested value of the down-sample factor being 100/min(h, w), where h and w represent the height and width of the image.
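The behaviour described above can be checked with a small sketch of the metric. This is an illustration, not the evaluation code used for the table: it assumes that lightness is the per-pixel maximum over the colour channels, that the order relation is "greater than or equal", and that down-sampling is done by plain strided sub-sampling with a step equal to the rounded inverse of 100/min(h, w). The function name `loe` is introduced here.

```python
import numpy as np

def loe(enhanced, reference):
    """Lightness order error between two RGB images of identical shape."""
    h, w = reference.shape[:2]
    # Down-sample factor 100 / min(h, w), applied as a sampling step.
    step = max(1, int(round(min(h, w) / 100)))
    q_e = enhanced.max(axis=2)[::step, ::step].ravel()
    q_r = reference.max(axis=2)[::step, ::step].ravel()
    m = q_e.size
    total = 0
    for x in range(m):
        # Relative order of pixel x against every other pixel, in each image.
        order_e = q_e[x] >= q_e
        order_r = q_r[x] >= q_r
        total += np.count_nonzero(order_e != order_r)
    return total / m
```

Because gamma correction is monotonic, `loe(reference ** 0.5, reference)` evaluates to zero, as does `loe(reference, reference)`. The pairwise comparison is quadratic in the number of sampled pixels, which is why down-sampling matters.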

To reproduce the paper results for a fair comparison, this metric is employed. As the ExDark dataset lacks illumination ground truth, the LOE value for images mentioned in the original paper is not available. The authors use the HDR dataset to evaluate their algorithm.

In the following table, LOE values are presented (every value has a factor of

| BabyAtWin | BabyOnGrass | ChrisRider | HighChair | LadyEating | SantaHelper |
|---|---|---|---|---|---|
| 1.277 | 1.040 | 0.952 | 2.368 | 2.199 | 2.374 |

- To implement an accelerated technique for calculating the exact solution of the equation from the formulation. Although the equation represents a sped-up version of the problem, solving it directly is still relatively slow, even when the sparsity of the matrices is exploited. Other algorithms could offer faster computation.

- To propose another metric for the quantitative evaluation of the method, which would help alleviate the skepticism associated with LOE.

[1] X. Guo, Y. Li and H. Ling, "LIME: Low-Light Image Enhancement via Illumination Map Estimation," in IEEE Transactions on Image Processing, vol. 26, no. 2, pp. 982-993, Feb. 2017, doi: 10.1109/TIP.2016.2639450.

[2] Y.P. Loh, C.S. Chan, "Getting to know low-light images with the Exclusively Dark dataset", Computer Vision and Image Understanding, vol. 178, pp. 30-42, 2019, doi: 10.1016/j.cviu.2018.10.010.

[3] P. Sen, N. K. Kalantari, M. Yaesoubi, S. Darabi, D. B. Goldman, and E. Shechtman, “Robust patch-based HDR reconstruction of dynamic scenes,” ACM Trans. Graph., vol. 31, no. 6, pp. 203:1–203:11, 2012.

[4] K. Dabov, A. Foi, V. Katkovnik, and K. Egiazarian, “Image denoising by sparse 3D transform-domain collaborative filtering,” IEEE Trans. Image Process., vol. 16, no. 8, pp. 2080–2095, Aug. 2007.