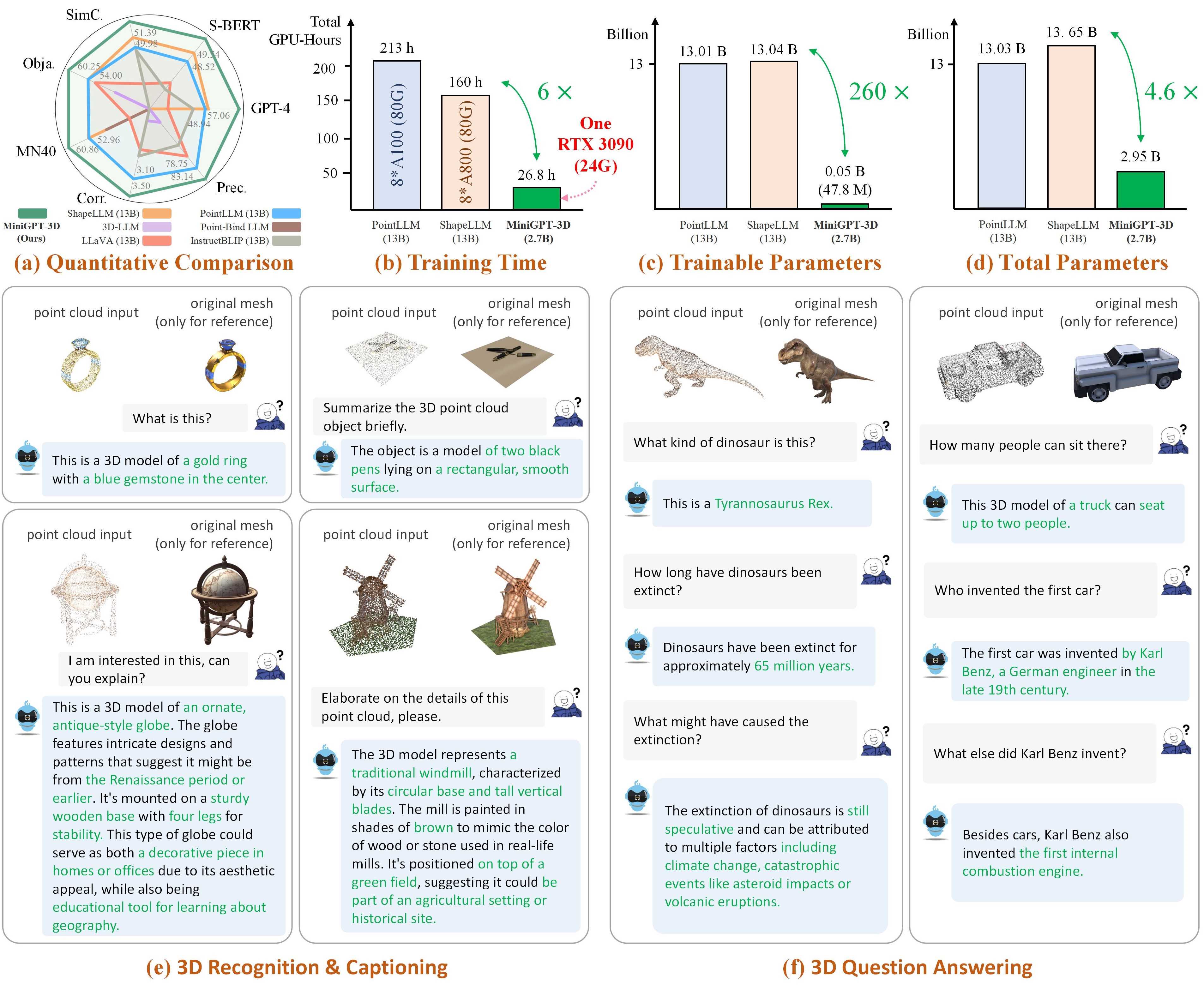

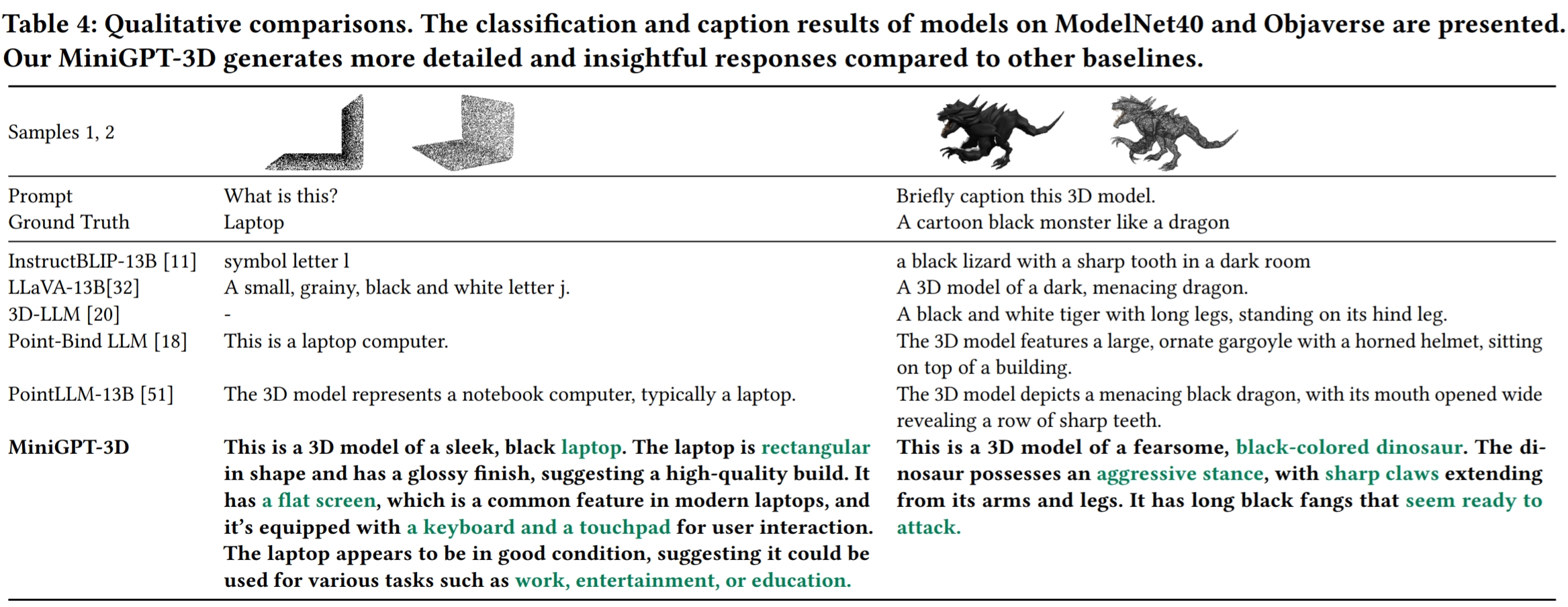

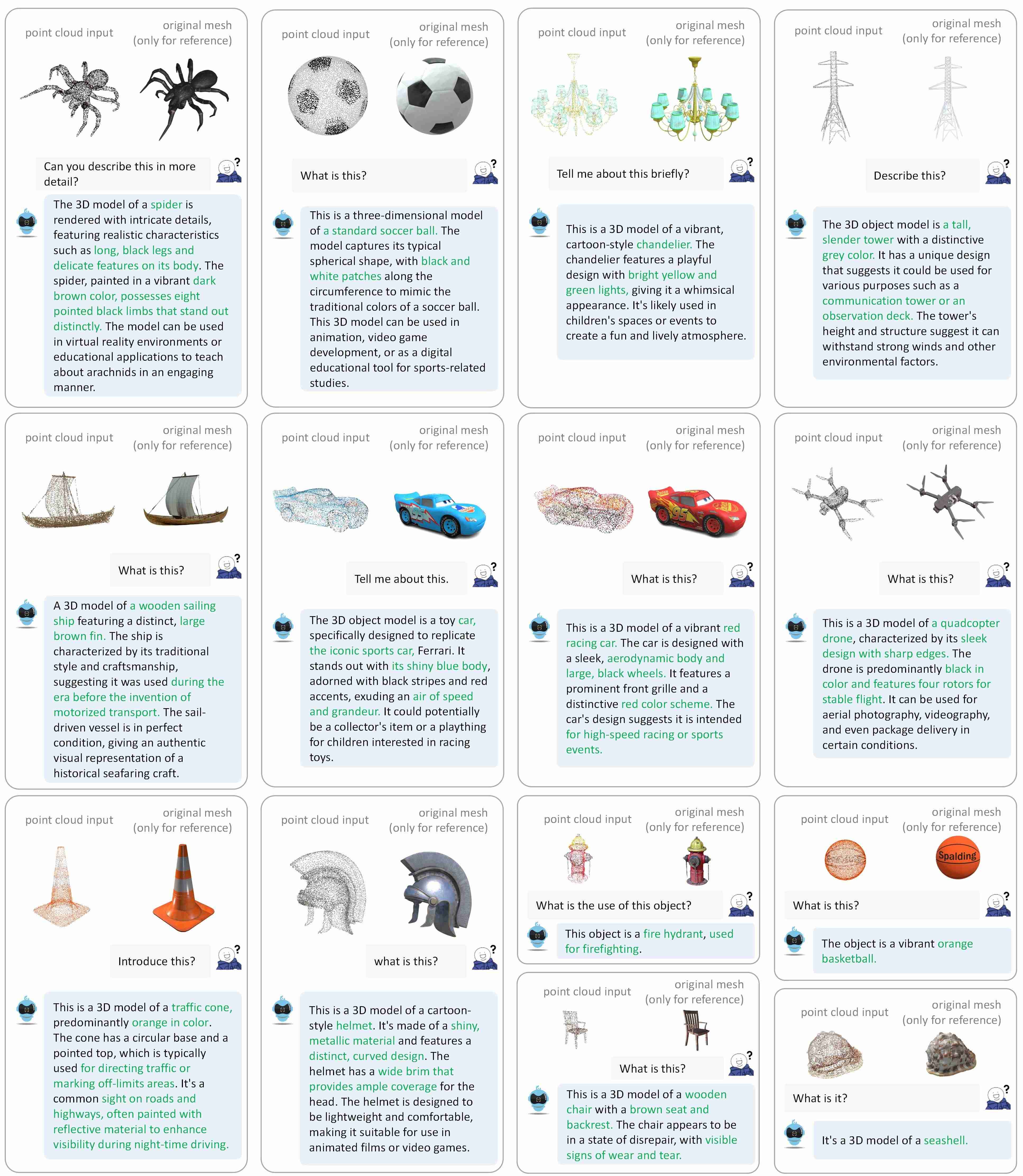

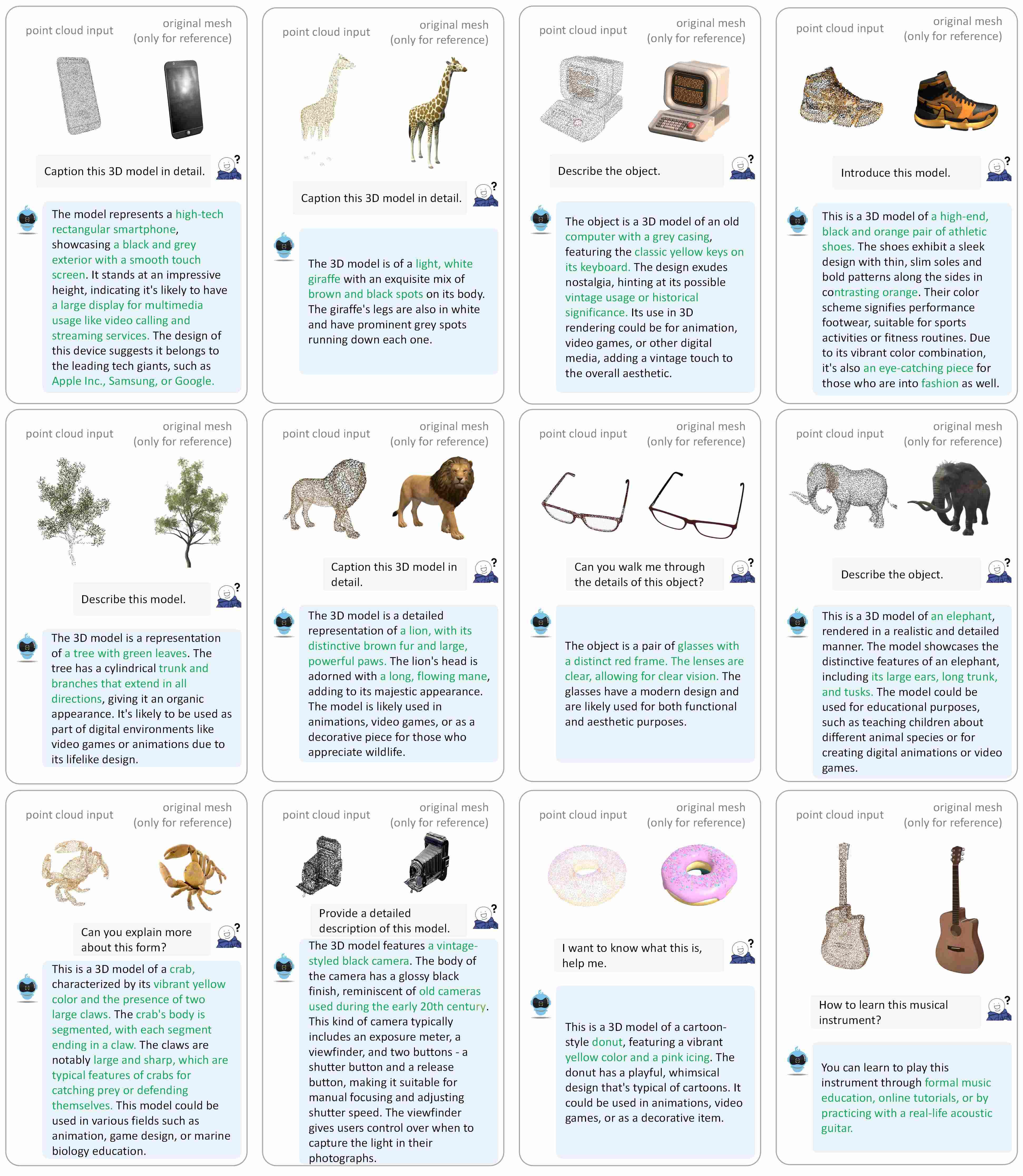

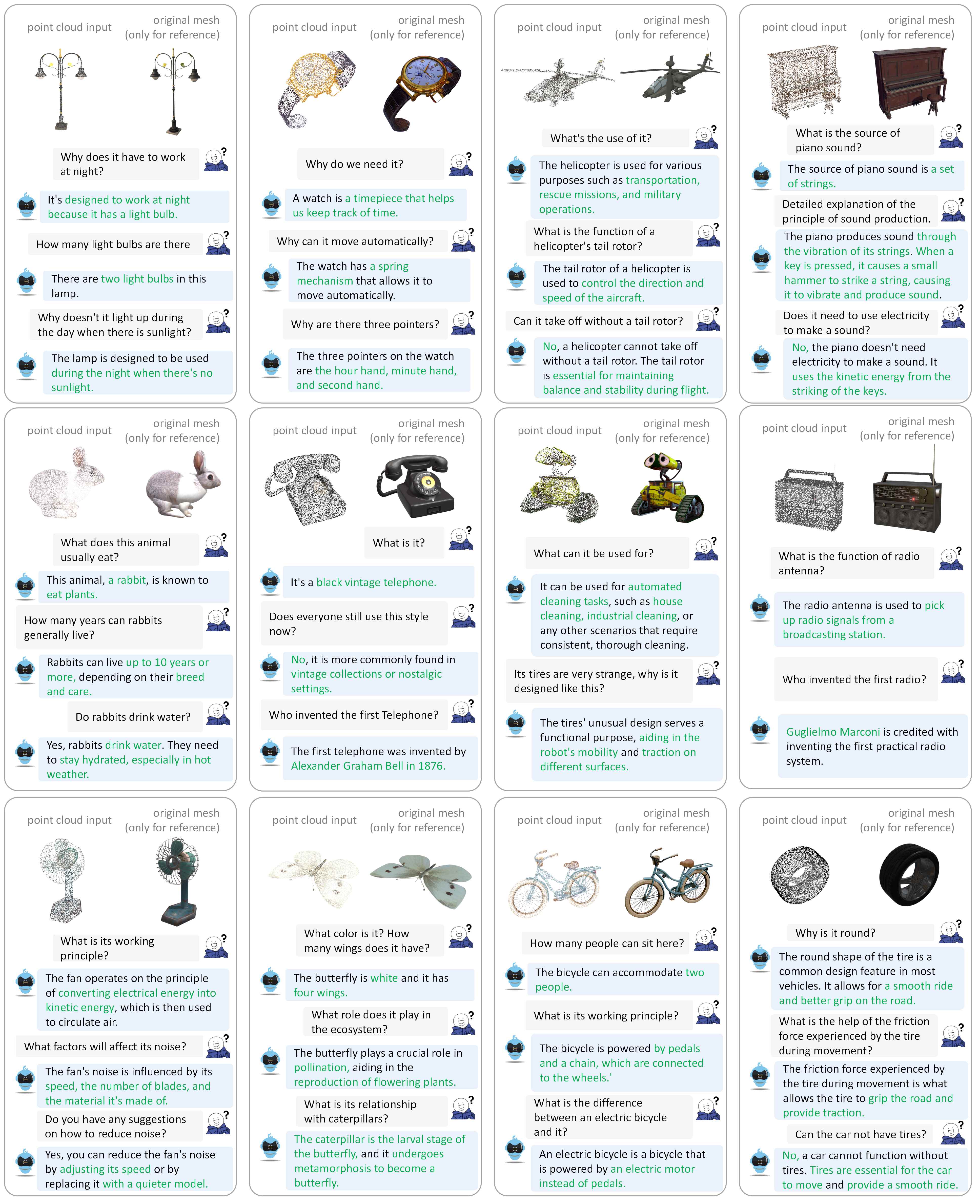

- We present MiniGPT-3D, an efficient and powerful 3D-LLM that aligns 3D point clouds with LLMs using 2D priors. It is trained with 47.8M learnable parameters in just 26.8 hours on a single RTX 3090 GPU.

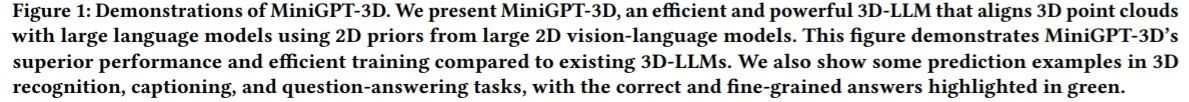

- We propose an efficient four-stage cascaded training strategy that gradually transfers knowledge from 2D-LLMs.

- We design a mixture of query experts module that aggregates features from multiple experts with only 0.4M parameters.
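The mixture-of-query-experts idea can be illustrated with a small NumPy sketch. This is an assumption-laden toy, not the MiniGPT-3D implementation: each hypothetical expert is a set of learnable query tokens that cross-attends to point-cloud tokens, a linear gate (`gate_w`, assumed) scores the experts from the mean-pooled point feature, and the top-k expert outputs are combined with softmax weights. Learned projections, multi-head attention, and any Q-Former-style layers a real module would use are omitted; all function names and shapes are illustrative.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    e = np.exp(x - x.max())
    return e / e.sum()

def cross_attend(queries, tokens):
    # Single-head scaled dot-product attention with no projections (simplification).
    # queries: (Q, d), tokens: (N, d) -> (Q, d)
    scores = queries @ tokens.T / np.sqrt(queries.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    return attn @ tokens

def mixture_of_query_experts(tokens, expert_queries, gate_w, top_k=2):
    # Hypothetical MQE-style aggregation.
    # tokens: (N, d) point-cloud tokens; expert_queries: (E, Q, d); gate_w: (d, E)
    pooled = tokens.mean(axis=0)               # (d,) global point feature
    logits = pooled @ gate_w                   # (E,) one score per expert
    top = np.argsort(logits)[-top_k:]          # indices of the top-k experts
    weights = np.zeros_like(logits)
    weights[top] = softmax(logits[top])        # renormalize over selected experts
    out = np.zeros_like(expert_queries[0])     # (Q, d)
    for e in top:                              # only selected experts are evaluated
        out += weights[e] * cross_attend(expert_queries[e], tokens)
    return out, weights

# Usage with random data; all sizes are assumptions.
rng = np.random.default_rng(0)
d, N, Q, E = 16, 32, 8, 4  # feature dim, point tokens, queries per expert, experts
tokens = rng.standard_normal((N, d))
expert_queries = rng.standard_normal((E, Q, d))
gate_w = rng.standard_normal((d, E))
out, weights = mixture_of_query_experts(tokens, expert_queries, gate_w, top_k=2)
print(out.shape)  # (8, 16)
```

Because only the gate and the expert queries carry parameters in this toy, adding experts grows the parameter count slowly; the 0.4M figure above refers to the authors' module, not to this sketch.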

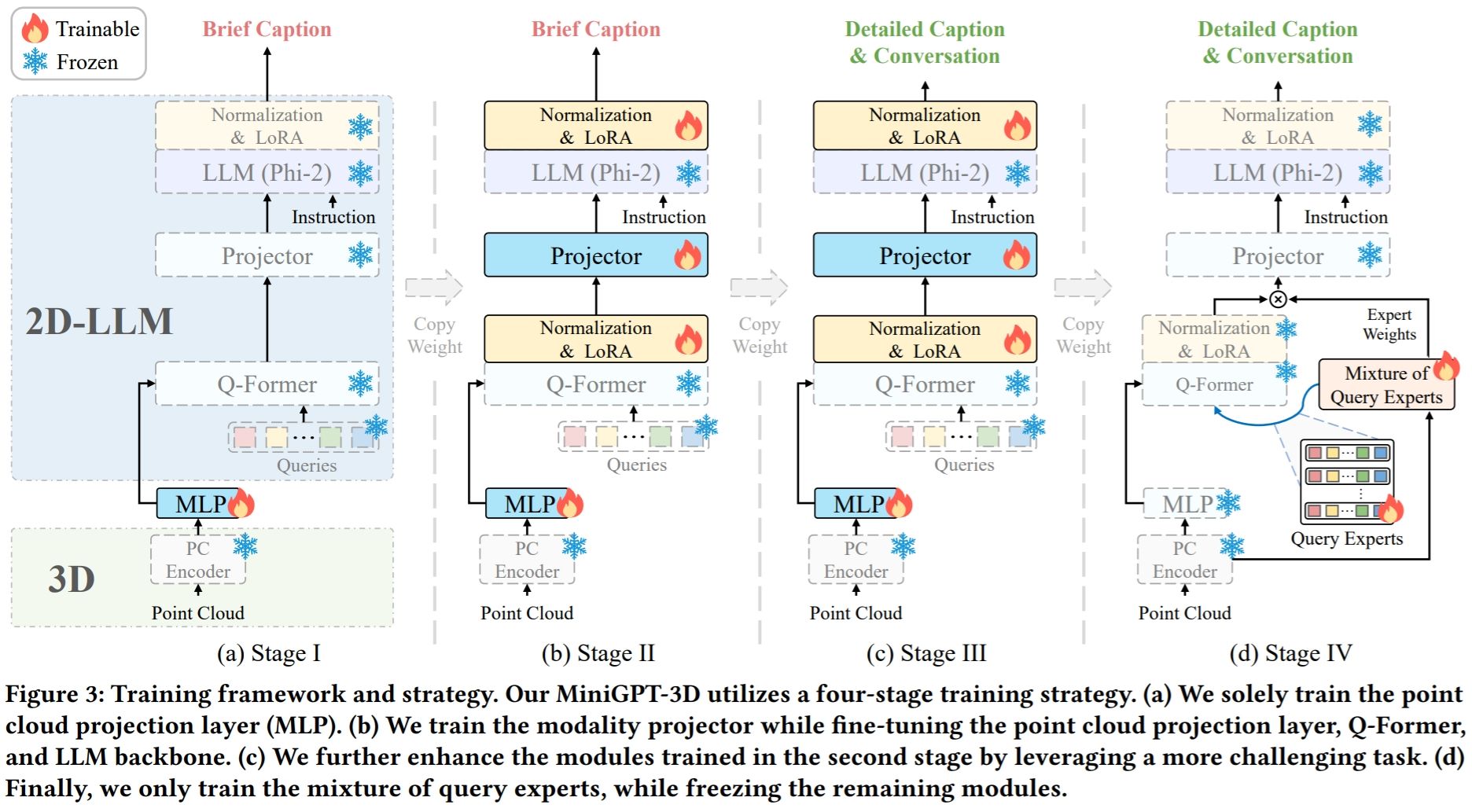

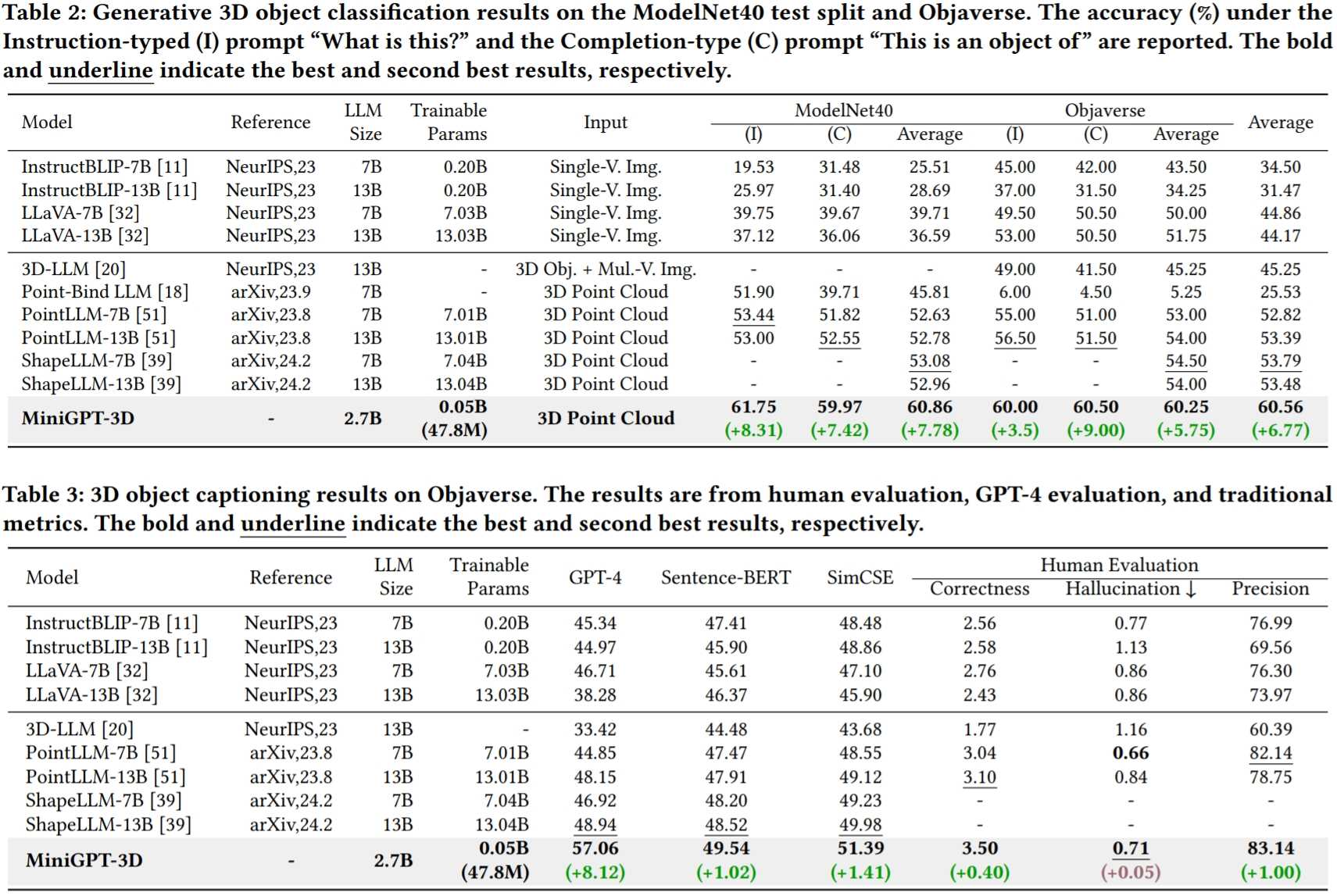

- Extensive experiments show that MiniGPT-3D achieves superior performance on multiple tasks while reducing training time and parameters by up to 6x and 260x, respectively.

Note: MiniGPT-3D takes the first step toward efficient 3D-LLMs, and we hope it brings new insights to the community.

- Release inference code with checkpoints.

- Release training code.

- Release evaluation code.

- Release Gradio demo code.

- Add online demo.

If you find our work helpful, please consider citing:

@article{tang2024minigpt_3d,
  title={MiniGPT-3D: Efficiently Aligning 3D Point Clouds with Large Language Models using 2D Priors},
  author={Tang, Yuan and Han, Xu and Li, Xianzhi and Yu, Qiao and Hao, Yixue and Hu, Long and Chen, Min},
  journal={https://arxiv.org/abs/2405.01413},
  year={2024}
}

This work is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

Together, let's make LLMs for 3D great!

- Point-Bind & Point-LLM: Aligns point clouds with ImageBind to reason over multi-modal input without training on 3D instruction data.

- 3D-LLM: Employs 2D foundation models to encode multi-view images of 3D point clouds.

- PointLLM: Combines 3D point clouds with LLaVA.

- ShapeLLM: Combines a powerful point cloud encoder with an LLM for embodied scenes.

We would like to thank the authors of PointLLM, Objaverse, and TinyGPT-V for their great work and repositories.