Because Caesar.jl was taken

Gaius.jl is a multi-threaded BLAS-like library that takes a divide-and-conquer approach to parallelism and is built on top of the fantastic LoopVectorization.jl. Gaius.jl spawns threads using Julia’s depth-first parallel task runtime, so Gaius.jl’s routines may be fearlessly nested inside multi-threaded Julia programs.

Gaius is not stable or well tested. Only use it if you’re adventurous.

Note: Gaius.jl is not actively maintained and I do not anticipate doing further work on it. There are other, more promising projects, such as Tullio.jl, that may result in a scalable, multi-threaded, pure-Julia BLAS library.

Currently, fast, native matrix multiplication is only implemented between matrices of types Matrix{<:Union{Float64, Float32, Int64, Int32, Int16}}, and StructArray{Complex}. Support for other commonly encountered numeric struct types such as Rational and Dual numbers is planned.


Gaius.jl exports the functions blocked_mul and blocked_mul!. blocked_mul is used like the regular * operator between two matrices, whereas blocked_mul! takes three matrices C, A, B and stores A*B in C, overwriting the contents of C.

julia> using Gaius, BenchmarkTools, LinearAlgebra

julia> A, B, C = rand(104, 104), rand(104, 104), zeros(104, 104);

julia> @btime mul!($C, $A, $B); # from LinearAlgebra

68.529 μs (0 allocations: 0 bytes)

julia> @btime blocked_mul!($C, $A, $B); #from Gaius

31.220 μs (80 allocations: 10.20 KiB)

julia> using Gaius, BenchmarkTools

julia> A, B = rand(104, 104), rand(104, 104);

julia> @btime $A * $B;

68.949 μs (2 allocations: 84.58 KiB)

julia> @btime let * = Gaius.blocked_mul # Locally use Gaius.blocked_mul as * operator.

$A * $B

end;

32.950 μs (82 allocations: 94.78 KiB)

julia> versioninfo()

Julia Version 1.4.0-rc2.0

Commit b99ed72c95* (2020-02-24 16:51 UTC)

Platform Info:

OS: Linux (x86_64-pc-linux-gnu)

CPU: AMD Ryzen 5 2600 Six-Core Processor

WORD_SIZE: 64

LIBM: libopenlibm

LLVM: libLLVM-8.0.1 (ORCJIT, znver1)

Environment:

JULIA_NUM_THREADS = 6

Multi-threading in Gaius.jl works by recursively splitting matrices into sub-blocks to operate on. You can change the matrix sub-block size by calling mul! with the block_size keyword argument. If left unspecified, Gaius will use a (very rough) heuristic to choose a good block size based on the size of the input matrices.

The size heuristics I use are likely not yet optimal for everyone’s machines.
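The recursive splitting described above can be sketched in plain Julia. This is only an illustration under stated assumptions, not Gaius.jl's implementation: the names dc_mul!, dc_addmul! and kernel_addmul! are hypothetical, the base case is a naive triple loop rather than a LoopVectorization.jl kernel, and the fixed default block_size of 64 stands in for the heuristic.

```julia
# Hypothetical serial base case: C += A*B with a naive triple loop.
function kernel_addmul!(C, A, B)
    @inbounds for j in axes(B, 2), l in axes(A, 2), i in axes(A, 1)
        C[i, j] += A[i, l] * B[l, j]
    end
    return C
end

# Hypothetical recursive driver: C += A*B, halving the largest dimension
# until every dimension fits within block_size.
function dc_addmul!(C, A, B; block_size::Int = 64)
    m, k = size(A)
    n = size(B, 2)
    if max(m, k, n) <= block_size
        return kernel_addmul!(C, A, B)
    end
    if m >= max(k, n)
        # Split the rows of A and C. The halves write to disjoint rows of C,
        # so one of them can run as a separate task.
        h = m ÷ 2
        t = Threads.@spawn dc_addmul!(view(C, 1:h, :), view(A, 1:h, :), B;
                                      block_size = block_size)
        dc_addmul!(view(C, h+1:m, :), view(A, h+1:m, :), B; block_size = block_size)
        wait(t)
    elseif n >= k
        # Split the columns of B and C. Again the outputs are disjoint.
        h = n ÷ 2
        t = Threads.@spawn dc_addmul!(view(C, :, 1:h), A, view(B, :, 1:h);
                                      block_size = block_size)
        dc_addmul!(view(C, :, h+1:n), A, view(B, :, h+1:n); block_size = block_size)
        wait(t)
    else
        # Split the shared dimension. Both halves accumulate into the same C,
        # so they run one after the other to avoid a data race.
        h = k ÷ 2
        dc_addmul!(C, view(A, :, 1:h), view(B, 1:h, :); block_size = block_size)
        dc_addmul!(C, view(A, :, h+1:k), view(B, h+1:k, :); block_size = block_size)
    end
    return C
end

# Hypothetical entry point: checks sizes, zeroes C, then accumulates A*B into it.
function dc_mul!(C, A, B; block_size::Int = 64)
    block_size >= 1 || throw(ArgumentError("block_size must be positive"))
    (size(A, 2) == size(B, 1) && size(C) == (size(A, 1), size(B, 2))) ||
        throw(DimensionMismatch("incompatible matrix sizes"))
    fill!(C, zero(eltype(C)))
    return dc_addmul!(C, A, B; block_size = block_size)
end
```

Because each spawned task is an ordinary Julia task, a routine of this shape can itself be called from inside other threaded code. A smaller block_size creates more tasks and more scheduling overhead; a larger one leaves fewer pieces to spread across threads.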


Gaius.jl supports the multiplication of matrices of complex numbers, but they must first be converted explicitly to structs of arrays using StructArrays.jl (otherwise the multiplication will be done by OpenBLAS):

julia> using Gaius, StructArrays, BenchmarkTools, LinearAlgebra

julia> begin

n = 150

A = randn(ComplexF64, n, n)

B = randn(ComplexF64, n, n)

C = zeros(ComplexF64, n, n)

SA = StructArray(A)

SB = StructArray(B)

SC = StructArray(C)

@btime blocked_mul!($SC, $SA, $SB)

@btime mul!($C, $A, $B)

SC ≈ C

end

515.587 μs (80 allocations: 10.53 KiB)

546.481 μs (0 allocations: 0 bytes)

true
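To see why a struct-of-arrays layout suits a real-valued kernel, note that a complex product splits into four real products: with A = Are + i·Aim and B = Bre + i·Bim, the real part of A*B is Are*Bre - Aim*Bim and the imaginary part is Are*Bim + Aim*Bre. The sketch below shows only that decomposition, using LinearAlgebra's mul! on separate real and imaginary matrices. The function name soa_complex_mul! is hypothetical, and this is not a claim about how Gaius.jl's kernels are written.

```julia
using LinearAlgebra

# Hypothetical helper: complex matrix product on separate real/imaginary parts.
# Computes (Cre + i*Cim) = (Are + i*Aim) * (Bre + i*Bim) using four real products.
function soa_complex_mul!(Cre, Cim, Are, Aim, Bre, Bim)
    mul!(Cre, Are, Bre)              # Cre  = Are*Bre
    mul!(Cre, Aim, Bim, -1.0, 1.0)   # Cre -= Aim*Bim
    mul!(Cim, Are, Bim)              # Cim  = Are*Bim
    mul!(Cim, Aim, Bre, 1.0, 1.0)    # Cim += Aim*Bre
    return Cre, Cim
end

# Usage: split ordinary complex matrices into parts, multiply, and recombine.
A = randn(ComplexF64, 5, 4)
B = randn(ComplexF64, 4, 3)
Cre, Cim = zeros(5, 3), zeros(5, 3)
soa_complex_mul!(Cre, Cim, real(A), imag(A), real(B), imag(B))
C = complex.(Cre, Cim)   # agrees with A * B up to floating-point rounding
```

Each of the four products works on contiguous real data, which is the layout a StructArray of complex numbers provides.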

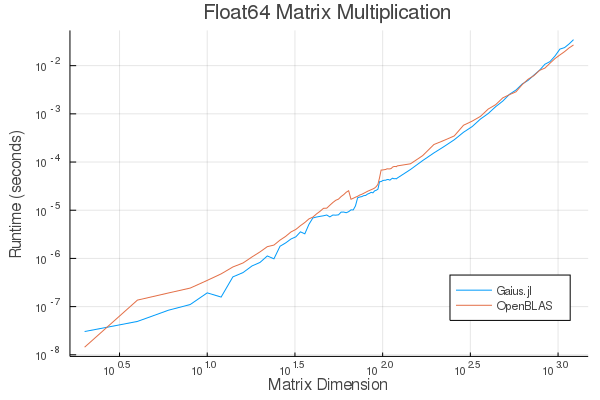

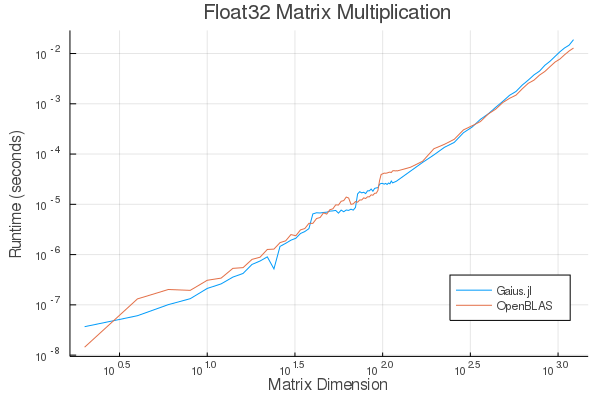

The following benchmarks were run on this machine:

julia> versioninfo()

Julia Version 1.4.0-rc2.0

Commit b99ed72c95* (2020-02-24 16:51 UTC)

Platform Info:

OS: Linux (x86_64-pc-linux-gnu)

CPU: AMD Ryzen 5 2600 Six-Core Processor

WORD_SIZE: 64

LIBM: libopenlibm

LLVM: libLLVM-8.0.1 (ORCJIT, znver1)

Environment:

JULIA_NUM_THREADS = 6

and compared to OpenBLAS running with 6 threads (BLAS.set_num_threads(6)). I would be keenly interested in seeing analogous benchmarks on a machine with an AVX512 instruction set and/or Intel’s MKL.

Note that these are log-log plots

Gaius.jl outperforms OpenBLAS over a large range of matrix sizes, but does begin to appreciably fall behind around 800 x 800 matrices for Float64 and 650 x 650 matrices for Float32. I believe there is a large amount of performance left on the table in Gaius.jl and I look forward to beating OpenBLAS for more matrix sizes.


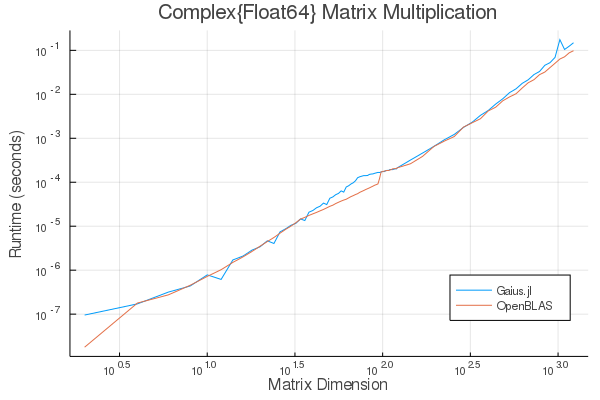

Here is Gaius operating on Complex{Float64} structs-of-arrays, competing relatively evenly against OpenBLAS operating on Complex{Float64} arrays-of-structs.

I think with some work, we can do much better.


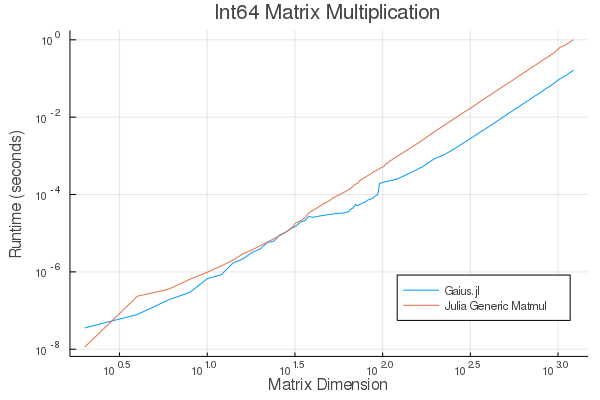

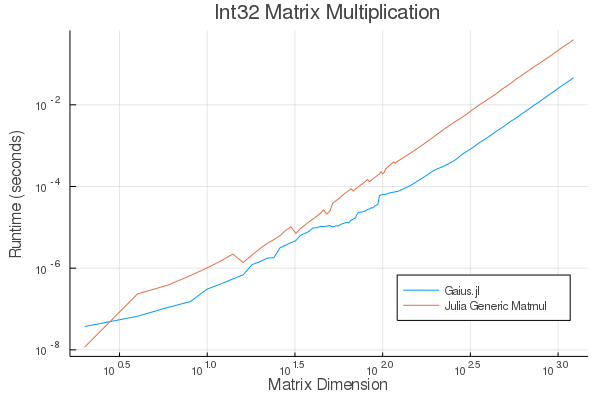

These benchmarks compare Gaius.jl (on the same machine as above) against Julia’s generic matrix multiplication implementation, which is not multi-threaded (OpenBLAS does not provide integer mat-mul).

Note that these are log-log plots

Benchmarks performed on a machine with the AVX512 instruction set show an even greater performance gain.

If you find yourself in a high-performance situation where you want to multiply matrices of integers, I think this provides a compelling use case for Gaius.jl, since it will outperform its competition at any matrix size and for large matrices will benefit from multi-threading.

I have not yet worked on implementing other standard BLAS routines with this strategy, but doing so should be relatively straightforward.

If you must break the law, do it to seize power; in all other cases observe it.

-Gaius Julius Caesar

If you use only the functions Gaius.blocked_mul! and Gaius.blocked_mul, automatic array size-checking will occur before the matrix multiplication begins. This can be turned off in blocked_mul! by calling Gaius.mul!(C, A, B, sizecheck=false), in which case no sizechecks will occur on the arrays before the matrix multiplication occurs and all sorts of bad, segfaulty things can happen.
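For readers wondering what such a check guards against, here is a minimal sketch of a size check for C = A*B. The function name check_mul_sizes is hypothetical and is not part of Gaius.jl; it only shows the conditions that have to hold before a kernel that skips bounds checking can safely index into the three arrays.

```julia
# Hypothetical helper (not part of Gaius.jl): verify that C, A, B are
# compatible for C = A*B before handing them to an unchecked kernel.
function check_mul_sizes(C, A, B)
    m, k = size(A)
    k2, n = size(B)
    k == k2 ||
        throw(DimensionMismatch("A has $k columns but B has $k2 rows"))
    size(C) == (m, n) ||
        throw(DimensionMismatch("C has size $(size(C)) but A*B has size $((m, n))"))
    return nothing
end

check_mul_sizes(zeros(2, 3), zeros(2, 4), zeros(4, 3))  # passes and returns nothing
```

If these conditions fail and the check is skipped, a kernel running without bounds checks may read or write memory outside the arrays, which is where the segfaults come from.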

All other functions in this package are to be considered internal and should not be expected to check for safety or obey the law. The functions Gaius.gemm_kernel! and Gaius.add_gemm_kernel! may be of utility, but be warned that they do not check array sizes.