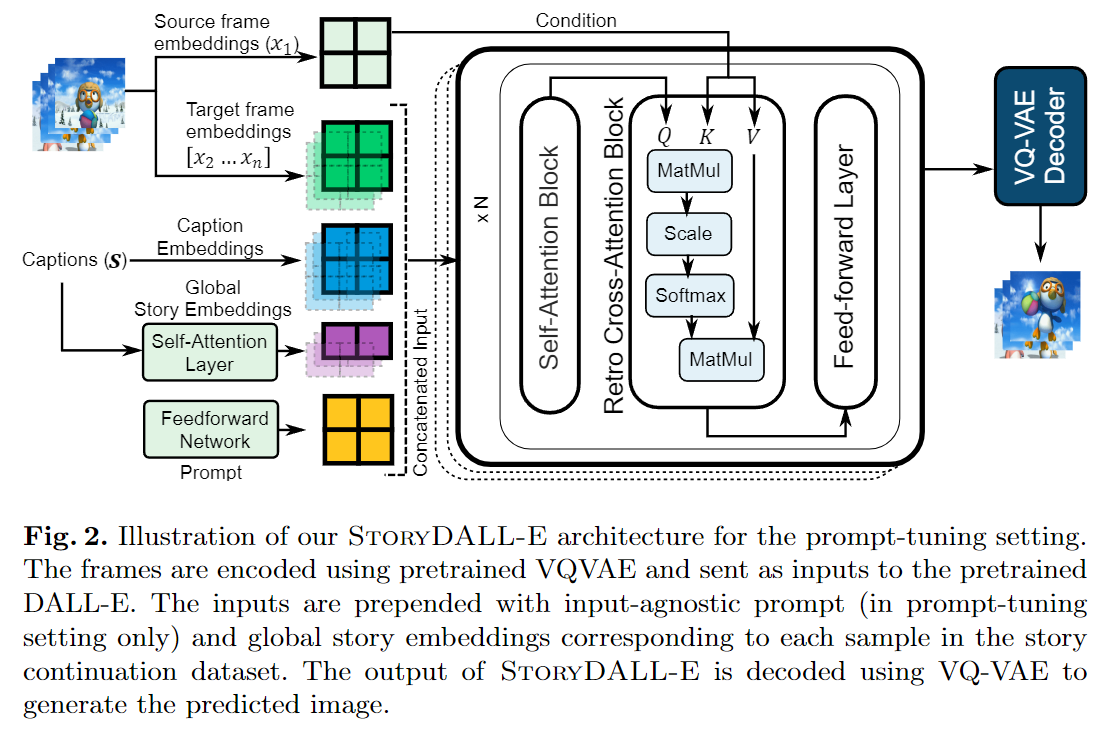

PyTorch code for the ECCV 2022 paper "StoryDALL-E: Adapting Pretrained Text-to-Image Transformers for Story Continuation".

[Paper] [Model Card] [Demo]

Download the PororoSV dataset and associated files from here and save them to `./data/pororo/`.

Download the FlintstonesSV dataset and associated files from here and save them to `./data/flintstones`.
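
Before training, it can help to confirm that the datasets are where the training scripts expect them. The sketch below is a hypothetical pre-flight check that is not part of this repository. It relies only on the two folder names given above, `./data/pororo/` and `./data/flintstones`. The name-to-folder mapping is an assumption, and the check does not know which files each dataset folder must contain.

```python
from pathlib import Path
from typing import List

# Hypothetical mapping from dataset name to folder, using the folder names
# from the download instructions above.
EXPECTED_DATASET_DIRS = {
    "pororo": Path("data/pororo"),
    "flintstones": Path("data/flintstones"),
}


def missing_datasets(repo_root: str = ".") -> List[str]:
    """Return the names of datasets whose folder is absent under repo_root."""
    root = Path(repo_root)
    return [
        name
        for name, rel_dir in EXPECTED_DATASET_DIRS.items()
        if not (root / rel_dir).is_dir()
    ]


if __name__ == "__main__":
    missing = missing_datasets()
    if missing:
        print("Missing dataset folders:", ", ".join(missing))
    else:
        print("All expected dataset folders are present.")
```

This only checks that the folders exist. A stricter check would need the actual file list of each dataset, which this README does not give.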

The DiDeMoSV dataset is coming soon.

This repository contains separate folders for training StoryDALL-E based on the minDALL-E and DALL-E Mega models, i.e., `./story_dalle/` and `./mega-story-dalle`, respectively.

- To finetune the minDALL-E model for story continuation, first navigate to the corresponding folder: `cd story-dalle`
- Set the environment variables in `train_story.sh` to point to the right locations on your system. Specifically, change `$DATA_DIR`, `$OUTPUT_ROOT`, and `$LOG_DIR` if they differ from the default locations.
- Download the pretrained checkpoint from here and save it in `./1.3B`.
- Run the following command: `bash train_story.sh <dataset_name>`
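
The variable edit in `train_story.sh` can be scripted. This assumes the script assigns these variables on single lines such as `DATA_DIR=...`, optionally prefixed with `export`, which is a guess about the script rather than something documented here. The hypothetical helper `set_script_vars` below rewrites only the right-hand side of matching assignment lines and leaves every other line untouched. It applies equally to the copy of `train_story.sh` in either model folder.

```python
import re
import shlex
from pathlib import Path
from typing import Dict


def set_script_vars(script_path: str, new_values: Dict[str, str]) -> Dict[str, bool]:
    """Rewrite `NAME=value` or `export NAME=value` lines in a shell script.

    Assumption: each variable is assigned on a single line. Returns, for each
    requested variable, whether at least one assignment was found and updated.
    """
    path = Path(script_path)
    lines = path.read_text().splitlines(keepends=True)
    found = {name: False for name in new_values}

    for i, line in enumerate(lines):
        for name, value in new_values.items():
            # Optional leading whitespace and `export`, then the exact name and `=`.
            pattern = rf"^(\s*(?:export\s+)?{re.escape(name)}=).*$"
            match = re.match(pattern, line.rstrip("\n"))
            if match:
                newline = "\n" if line.endswith("\n") else ""
                # shlex.quote writes the value literally (no $VAR expansion) and
                # adds single quotes only when needed, e.g. for spaces.
                lines[i] = f"{match.group(1)}{shlex.quote(value)}{newline}"
                found[name] = True
                break

    path.write_text("".join(lines))
    return found
```

For example, `set_script_vars("train_story.sh", {"DATA_DIR": "/path/to/data"})` updates the assignment if one exists. Any variable reported as `False` was not found in the assumed form and still needs to be edited by hand.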

- To finetune the DALL-E Mega model for story continuation, first navigate to the corresponding folder: `cd mega-story-dalle`
- Set the environment variables in `train_story.sh` to point to the right locations on your system. Specifically, change `$DATA_DIR`, `$OUTPUT_ROOT`, and `$LOG_DIR` if they differ from the default locations.
- Pretrained checkpoints are downloaded automatically upon initialization.
- Run the following command: `bash train_story.sh <dataset_name>`
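
Both variants are launched the same way, so a small wrapper can keep their differences in one place. The sketch below is hypothetical and rests on three assumptions:

- The folder names are `story-dalle` and `mega-story-dalle`, as in the `cd` commands above.
- `./1.3B` is resolved relative to the minDALL-E folder.
- `<dataset_name>` is passed straight through to `train_story.sh`.

Only the minDALL-E variant checks for a local checkpoint, because the DALL-E Mega checkpoints are downloaded on initialization.

```python
import subprocess
from pathlib import Path
from typing import List, Tuple

# Hypothetical variant keys; folder names come from the `cd` commands above.
VARIANT_DIRS = {
    "mindalle": "story-dalle",
    "mega": "mega-story-dalle",
}


def prepare_training(repo_root: str, variant: str, dataset_name: str) -> Tuple[Path, List[str]]:
    """Return the working directory and argv for `bash train_story.sh <dataset_name>`."""
    if variant not in VARIANT_DIRS:
        raise ValueError(f"unknown variant {variant!r}; expected one of {sorted(VARIANT_DIRS)}")
    workdir = Path(repo_root) / VARIANT_DIRS[variant]
    # minDALL-E needs the manually downloaded checkpoint in ./1.3B (assumed to be
    # relative to its folder); DALL-E Mega fetches its checkpoints itself.
    if variant == "mindalle" and not (workdir / "1.3B").is_dir():
        raise FileNotFoundError(f"pretrained checkpoint folder not found: {workdir / '1.3B'}")
    return workdir, ["bash", "train_story.sh", dataset_name]


def run_training(repo_root: str, variant: str, dataset_name: str) -> int:
    """Run the training script and return its exit code."""
    workdir, argv = prepare_training(repo_root, variant, dataset_name)
    # Equivalent to `cd <folder> && bash train_story.sh <dataset_name>`.
    return subprocess.run(argv, cwd=workdir, check=False).returncode
```

A call such as `run_training(".", "mega", "pororo")` would start DALL-E Mega finetuning. The dataset name `pororo` is an assumed value, because the accepted names are defined by `train_story.sh`. Splitting out `prepare_training` lets the path checks run without launching a job.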

Links to pretrained checkpoints and inference instructions coming soon!