VLMEvalKit (Python package name: vlmeval) is an open-source evaluation toolkit for large vision-language models (LVLMs). It enables one-command evaluation of LVLMs on various benchmarks, without the heavy workload of preparing data across multiple repositories. In VLMEvalKit, we adopt generation-based evaluation for all LVLMs and provide evaluation results obtained with both exact matching and LLM-based answer extraction.

- [2024-07-08] We have supported InternLM-XComposer-2.5, thanks to LightDXY 🔥🔥🔥

- [2024-07-08] We have supported InternVL2, thanks to czczup 🔥🔥🔥

- [2024-06-27] We have supported Cambrian 🔥🔥🔥

- [2024-06-27] We have supported AesBench, thanks to Yipo Huang and Quan Yuan🔥🔥🔥

- [2024-06-26] We have supported the evaluation of CongRong, which ranked 3rd on the Open VLM Leaderboard 🔥🔥🔥

- [2024-06-26] We now support our first video understanding benchmark, MMBench-Video. Image LVLMs that accept multiple images as inputs can be evaluated on video understanding benchmarks. Check QuickStart to learn how to perform the evaluation 🔥🔥🔥

- [2024-06-24] We have supported the evaluation of Claude3.5-Sonnet, which ranked 2nd on the Open VLM Leaderboard 🔥🔥🔥

- [2024-06-22] Since GPT-3.5-Turbo-0613 is no longer supported, we have switched to GPT-3.5-Turbo-0125 for choice extraction

- [2024-06-18] We have supported SEEDBench2, thanks to Bohao-Lee🔥🔥🔥

- [2024-06-18] We have supported MMT-Bench, thanks to KainingYing🔥🔥🔥

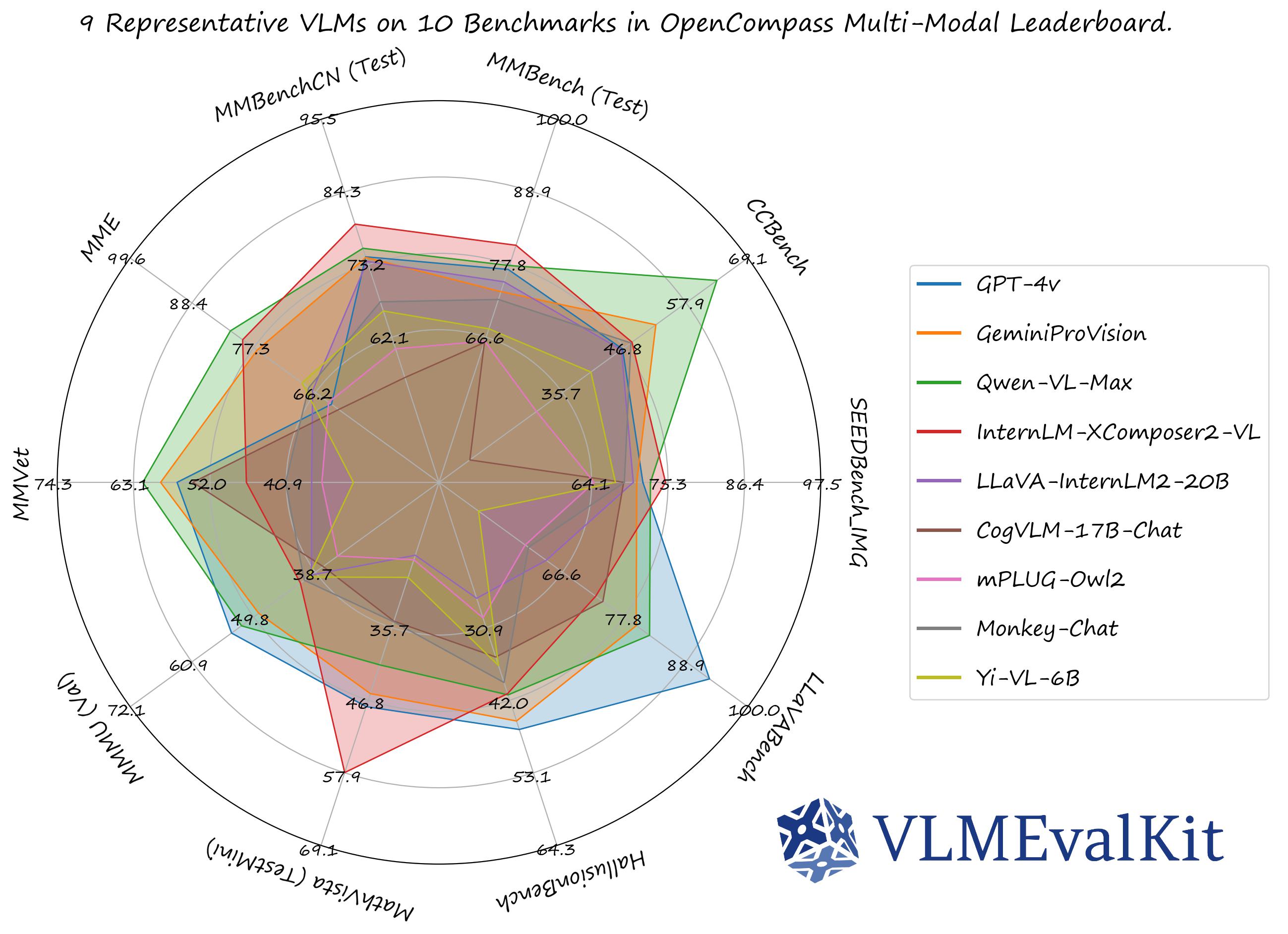

The performance numbers on our official multi-modal leaderboards can be downloaded from here!

OpenVLM Leaderboard: Download All DETAILED Results.

Supported Image Understanding Dataset

- By default, all evaluation results are presented in OpenVLM Leaderboard.

| Dataset | Dataset Names (for run.py) | Task | Dataset | Dataset Names (for run.py) | Task |
|---|---|---|---|---|---|
| MMBench Series: MMBench, MMBench-CN, CCBench | MMBench_DEV_[EN/CN], MMBench_TEST_[EN/CN], MMBench_DEV_[EN/CN]_V11, MMBench_TEST_[EN/CN]_V11, CCBench | Multi-choice Question (MCQ) | MMStar | MMStar | MCQ |
| MME | MME | Yes or No (Y/N) | SEEDBench Series | SEEDBench_IMG, SEEDBench2, SEEDBench2_Plus | MCQ |
| MM-Vet | MMVet | VQA | MMMU | MMMU_[DEV_VAL/TEST] | MCQ |
| MathVista | MathVista_MINI | VQA | ScienceQA_IMG | ScienceQA_[VAL/TEST] | MCQ |
| COCO Caption | COCO_VAL | Caption | HallusionBench | HallusionBench | Y/N |
| OCRVQA\* | OCRVQA_[TESTCORE/TEST] | VQA | TextVQA\* | TextVQA_VAL | VQA |
| ChartQA\* | ChartQA_TEST | VQA | AI2D | AI2D_TEST | MCQ |
| LLaVABench | LLaVABench | VQA | DocVQA+ | DocVQA_[VAL/TEST] | VQA |
| InfoVQA+ | InfoVQA_[VAL/TEST] | VQA | OCRBench | OCRBench | VQA |
| RealWorldQA | RealWorldQA | MCQ | POPE | POPE | Y/N |
| Core-MM- | CORE_MM | VQA | MMT-Bench | MMT-Bench_[VAL/VAL_MI/ALL/ALL_MI] | MCQ |
| MLLMGuard- | MLLMGuard_DS | VQA | AesBench | AesBench_[VAL/TEST] | MCQ |

\* We only provide a subset of the evaluation results, since some VLMs do not yield reasonable results under the zero-shot setting.

\+ The evaluation results are not available yet.

\- Only inference is supported in VLMEvalKit.

VLMEvalKit will use a judge LLM to extract the answer from the output if you set the key; otherwise it uses the exact matching mode (finding "Yes", "No", "A", "B", "C"... in the output strings). Exact matching can only be applied to Yes-or-No tasks and multi-choice tasks.
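To illustrate what exact matching involves, here is a minimal sketch in Python. It is not VLMEvalKit's actual implementation: the function names `match_yes_no` and `match_choice` are hypothetical, and the rule "accept only when exactly one candidate is found" is an assumption made for this example.

```python
import re
from typing import Optional, Sequence


def match_yes_no(output: str) -> Optional[str]:
    """Return 'Yes' or 'No' if exactly one of them appears as a whole word."""
    words = set(re.findall(r"[a-z]+", output.lower()))
    has_yes, has_no = "yes" in words, "no" in words
    if has_yes == has_no:  # neither or both found: ambiguous, give up
        return None
    return "Yes" if has_yes else "No"


def match_choice(output: str, choices: Sequence[str] = "ABCD") -> Optional[str]:
    """Return the option letter if exactly one appears as a standalone token."""
    tokens = re.findall(r"\b[A-Z]\b", output)
    found = {t for t in tokens if t in choices}
    return found.pop() if len(found) == 1 else None


print(match_yes_no("Yes, there is an apple."))
print(match_choice("The answer is B."))
print(match_choice("It could be A or C."))
```

A matcher this simple misreads free-form answers (for example, a sentence starting with the article "A"), which is one reason a judge LLM can be useful for answer extraction.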

Supported Video Understanding Dataset

| Dataset | Dataset Names (for run.py) | Task |
|---|---|---|
| MMBench-Video | MMBench-Video | VQA |

Supported API Models

| GPT-4v (20231106, 20240409) 🎞️🚅 | GPT-4o 🎞️🚅 | Gemini-1.0-Pro 🎞️🚅 | Gemini-1.5-Pro 🎞️🚅 | Step-1V 🎞️🚅 |
|---|---|---|---|---|
| Reka-[Edge / Flash / Core] 🚅 | Qwen-VL-[Plus / Max] 🎞️🚅 | Claude3-[Haiku / Sonnet / Opus] 🎞️🚅 | GLM-4v 🚅 | CongRong 🎞️🚅 |
| Claude3.5-Sonnet 🎞️🚅 | | | | |

Supported PyTorch / HF Models

🎞️: Support multiple images as inputs.

🚅: Models can be used without any additional configuration/operation.

Transformers Version Recommendation:

Note that some VLMs may not run under certain transformers versions. We recommend the following settings to evaluate each VLM:

- Please use `transformers==4.33.0` for: Qwen series, Monkey series, InternLM-XComposer Series, mPLUG-Owl2, OpenFlamingo v2, IDEFICS series, VisualGLM, MMAlaya, ShareCaptioner, MiniGPT-4 series, InstructBLIP series, PandaGPT, VXVERSE, GLM-4v-9B.

- Please use `transformers==4.37.0` for: LLaVA series, ShareGPT4V series, TransCore-M, LLaVA (XTuner), CogVLM Series, EMU2 Series, Yi-VL Series, MiniCPM-[V1/V2], OmniLMM-12B, DeepSeek-VL series, InternVL series, Cambrian Series.

- Please use `transformers==4.40.0` for: IDEFICS2, Bunny-Llama3, MiniCPM-Llama3-V2.5, LLaVA-Next series, 360VL-70B, Phi-3-Vision, WeMM.

- Please use `transformers==latest` for: PaliGemma-3B.
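If you switch between model families often, the recommendations above can be kept in a small lookup table. The sketch below is only an illustration: the dictionary `RECOMMENDED_TRANSFORMERS` and the helper `recommended_transformers` are hypothetical names that are not part of VLMEvalKit, and only a few families from each group are listed.

```python
from typing import Optional

# Version pins copied from the recommendation list (abbreviated on purpose).
RECOMMENDED_TRANSFORMERS = {
    "4.33.0": ["Qwen series", "Monkey series", "IDEFICS series", "VisualGLM"],
    "4.37.0": ["LLaVA series", "CogVLM Series", "InternVL series"],
    "4.40.0": ["IDEFICS2", "LLaVA-Next series", "Phi-3-Vision"],
    "latest": ["PaliGemma-3B"],
}


def recommended_transformers(model_family: str) -> Optional[str]:
    """Return the recommended version for a family name, or None if unlisted."""
    key = model_family.strip().lower()
    for version, families in RECOMMENDED_TRANSFORMERS.items():
        if key in (name.lower() for name in families):
            return version
    return None


print(recommended_transformers("IDEFICS2"))
print(recommended_transformers("Qwen series"))
```

The returned string can then be compared against `transformers.__version__` in your environment before launching an evaluation.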

```python
# Demo
from vlmeval.config import supported_VLM

model = supported_VLM['idefics_9b_instruct']()

# Forward Single Image
ret = model.generate(['assets/apple.jpg', 'What is in this image?'])
print(ret)  # The image features a red apple with a leaf on it.

# Forward Multiple Images
ret = model.generate(['assets/apple.jpg', 'assets/apple.jpg', 'How many apples are there in the provided images? '])
print(ret)  # There are two apples in the provided images.
```

See [QuickStart | 快速开始] for a quick start guide.

To develop custom benchmarks or VLMs, or simply to contribute other code to VLMEvalKit, please refer to [Development_Guide | 开发指南].

The codebase is designed to:

- Provide an easy-to-use, open-source evaluation toolkit that makes it convenient for researchers and developers to evaluate existing LVLMs and makes evaluation results easy to reproduce.

- Make it easy for VLM developers to evaluate their own models. To evaluate a VLM on multiple supported benchmarks, one only needs to implement a single `generate_inner()` function; all other workloads (data downloading, data preprocessing, prediction inference, metric calculation) are handled by the codebase.
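The division of labor described in the second point can be sketched as follows. This is a toy, self-contained illustration and not the toolkit's real base class: `SketchBaseModel`, `EchoVLM`, the message format (a list of dicts with `type` and `value` keys), and the `dataset` parameter are all assumptions made for the example. Consult the Development Guide for the actual interface.

```python
from typing import Dict, List, Optional

IMAGE_SUFFIXES = (".jpg", ".jpeg", ".png")


class SketchBaseModel:
    """Hypothetical stand-in for a toolkit base class (not the real one)."""

    def generate(self, message: List[str], dataset: Optional[str] = None) -> str:
        # Shared preprocessing: tag each item as an image path or a text prompt.
        items = [
            {"type": "image" if m.lower().endswith(IMAGE_SUFFIXES) else "text",
             "value": m}
            for m in message
        ]
        return self.generate_inner(items, dataset=dataset)

    def generate_inner(self, message: List[Dict[str, str]],
                       dataset: Optional[str] = None) -> str:
        raise NotImplementedError


class EchoVLM(SketchBaseModel):
    """Toy model: reports how many images it received and echoes the prompt."""

    def generate_inner(self, message, dataset=None):
        images = [m["value"] for m in message if m["type"] == "image"]
        prompt = " ".join(m["value"] for m in message if m["type"] == "text")
        # A real VLM would run its forward pass on `images` and `prompt` here.
        return f"[{len(images)} image(s)] {prompt}"


print(EchoVLM().generate(["assets/apple.jpg", "What is in this image?"]))
```

The point of the pattern is that the subclass only defines how one message becomes one response, while everything around that call stays in shared code.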

The codebase is not designed to:

- Reproduce the exact accuracy numbers reported in the original papers of all third-party benchmarks. There are two reasons:

  - VLMEvalKit uses generation-based evaluation for all VLMs (optionally with LLM-based answer extraction). Meanwhile, some benchmarks may use different approaches (e.g., SEEDBench uses PPL-based evaluation). For those benchmarks, we compare both scores in the corresponding result. We encourage developers to support other evaluation paradigms in the codebase.

  - By default, we use the same prompt template for all VLMs when evaluating on a benchmark. Meanwhile, some VLMs may have their own specific prompt templates (some of which may not be covered by the codebase at this time). We encourage VLM developers to implement their own prompt template in VLMEvalKit if it is not currently covered. That will help improve reproducibility.

If you find this work helpful, please consider starring 🌟 this repo. Thanks for your support!

If you use VLMEvalKit in your research or wish to refer to published open-source evaluation results, please use the following BibTeX entry and the BibTeX entry corresponding to the specific VLM / benchmark you used.

```bib
@misc{2023opencompass,
    title={OpenCompass: A Universal Evaluation Platform for Foundation Models},
    author={OpenCompass Contributors},
    howpublished = {\url{https://github.com/open-compass/opencompass}},
    year={2023}
}
```

- Opencompass: An LLM evaluation platform, supporting a wide range of models (LLaMA, LLaMa2, ChatGLM2, ChatGPT, Claude, etc) over 50+ datasets.

- MMBench: Official Repo of "MMBench: Is Your Multi-modal Model an All-around Player?"

- BotChat: Evaluating LLMs' multi-round chatting capability.

- LawBench: Benchmarking Legal Knowledge of Large Language Models.

- Ada-LEval: Length Adaptive Evaluation, measures the long-context modeling capability of language models.