This code is part of my master's thesis at the VUB, Brussels.

Different algorithms have already been implemented:

- Cross-Entropy Method

- Sarsa with function approximation and eligibility traces

- REINFORCE (convolutional neural network part has not been tested yet)

- Karpathy's policy gradient algorithm (version using convolutional neural networks has not been tested yet)

- Advantage Actor Critic

- Asynchronous Advantage Actor Critic (A3C)

- (Sequential) knowledge transfer

- Asynchronous knowledge transfer

The following parts are combined to learn to act in the Mountain Car environment:

- Sarsa

- Eligibility traces

- epsilon-greedy action selection policy

- Function approximation using tile coding
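
As a rough illustration of how these four parts fit together, here is a minimal sketch in plain Python. It is not the code in `SarsaFA.py`: the function names, the number of tilings, the tile offsets, the step size and the choice of replacing traces are all assumptions made for this example. The position and velocity bounds are those documented for Gym's MountainCar-v0.

```python
import random

# Hypothetical settings for illustration; SarsaFA.py may use different ones.
N_TILINGS = 8
TILES_PER_DIM = 8
N_ACTIONS = 3                      # push left, no push, push right
POS_RANGE = (-1.2, 0.6)            # MountainCar-v0 position bounds
VEL_RANGE = (-0.07, 0.07)          # MountainCar-v0 velocity bounds
GRID = TILES_PER_DIM + 1           # one extra cell per dimension covers the offsets
N_FEATURES = N_TILINGS * GRID * GRID


def active_tiles(position, velocity):
    """Return one active feature index per tiling for a (position, velocity) state."""
    pos = (position - POS_RANGE[0]) / (POS_RANGE[1] - POS_RANGE[0])
    vel = (velocity - VEL_RANGE[0]) / (VEL_RANGE[1] - VEL_RANGE[0])
    tiles = []
    for t in range(N_TILINGS):
        offset = t / N_TILINGS     # each tiling is shifted by a fraction of a tile
        col = min(max(int(pos * TILES_PER_DIM + offset), 0), GRID - 1)
        row = min(max(int(vel * TILES_PER_DIM + offset), 0), GRID - 1)
        tiles.append((t * GRID + row) * GRID + col)
    return tiles


def q_value(weights, tiles, action):
    """Linear action value: the sum of the weights of the active tiles."""
    return sum(weights[action][i] for i in tiles)


def epsilon_greedy(weights, tiles, epsilon, rng=random):
    """Pick a random action with probability epsilon, else a greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(N_ACTIONS)
    values = [q_value(weights, tiles, a) for a in range(N_ACTIONS)]
    return values.index(max(values))


def sarsa_lambda_update(weights, traces, tiles, action, reward,
                        next_tiles, next_action, done,
                        alpha=0.1, gamma=1.0, lam=0.9):
    """Apply one Sarsa(lambda) step in place (replacing traces); return the TD error."""
    target = reward
    if not done:
        target += gamma * q_value(weights, next_tiles, next_action)
    td_error = target - q_value(weights, tiles, action)

    # Decay every trace, then reset the traces of the active features to 1.
    for a in range(N_ACTIONS):
        for i in range(N_FEATURES):
            traces[a][i] *= gamma * lam
    for i in tiles:
        traces[action][i] = 1.0

    # Move every weight along its trace; the step size is split over the tilings.
    step = alpha / N_TILINGS
    for a in range(N_ACTIONS):
        for i in range(N_FEATURES):
            weights[a][i] += step * td_error * traces[a][i]
    return td_error


def new_table():
    """Create a zero-initialised table, usable for both weights and traces."""
    return [[0.0] * N_FEATURES for _ in range(N_ACTIONS)]
```

In a training loop, the traces would be reset to zero at the start of each episode, and `epsilon_greedy` would be called with `epsilon=0` to obtain a fully greedy policy for evaluation.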

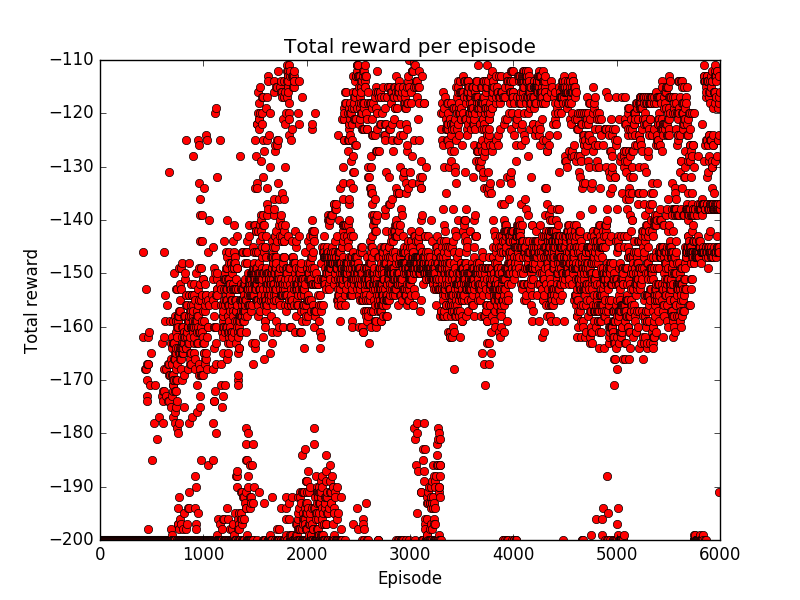

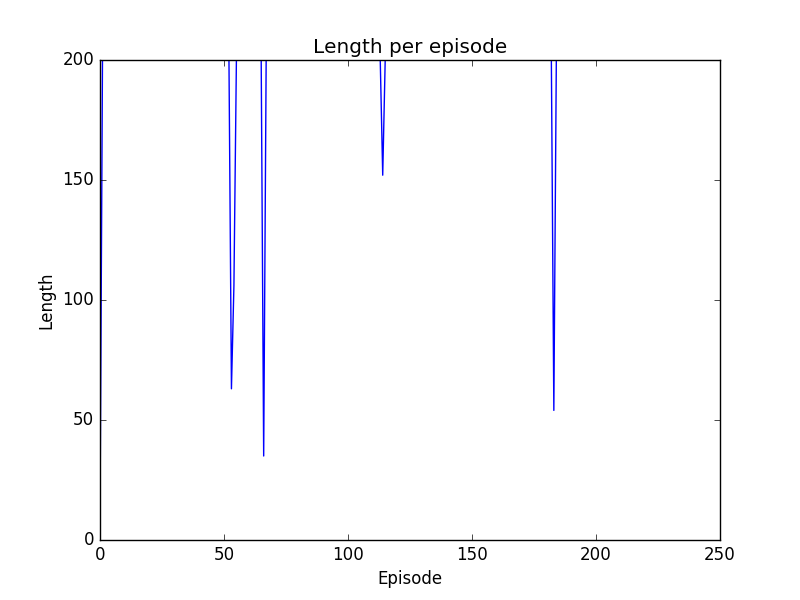

Example of a run with a fully greedy action selection policy, after training for 729 episodes of 200 steps each:

Note that, after a few thousand episodes, the algorithm still isn't capable of consistently reaching the goal in fewer than 200 steps.

Adapted version of this code, modified to work with TensorFlow.

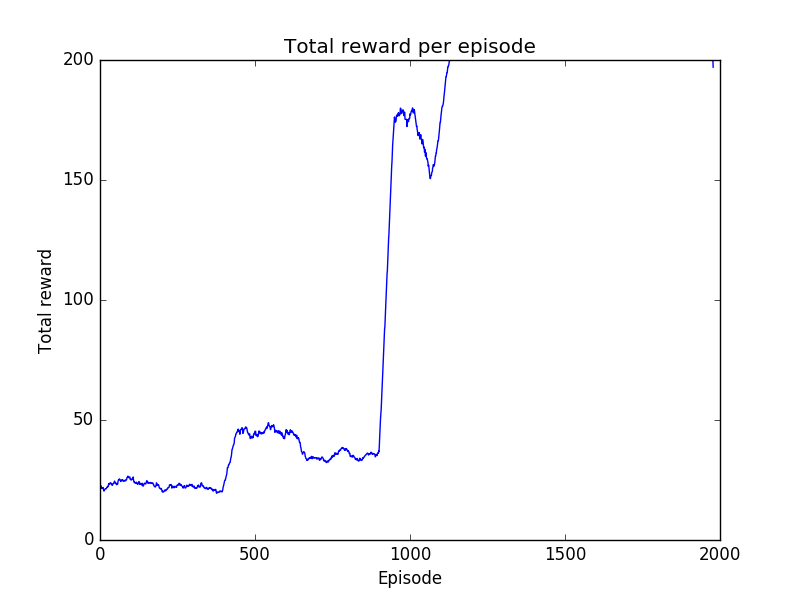

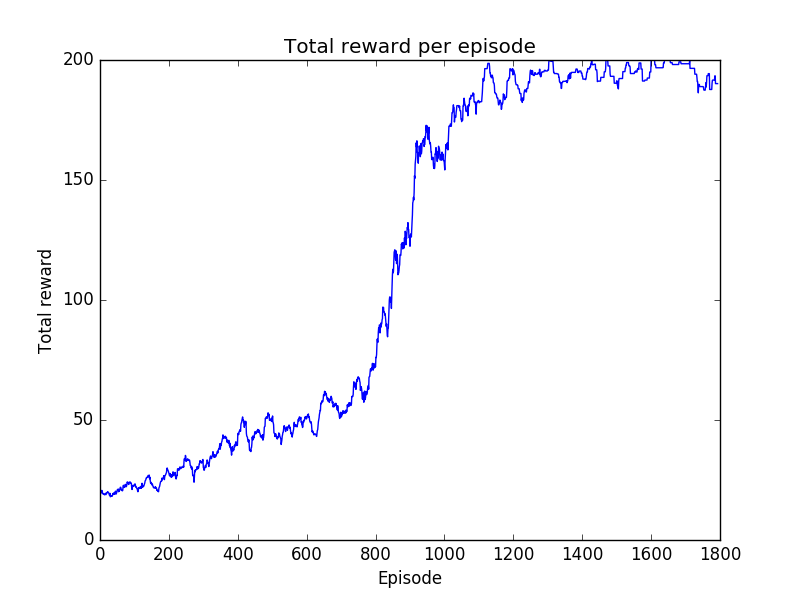

Total reward per episode when applying this algorithm on the CartPole-v0 environment:

Adapted version of the code from this article by Andrej Karpathy.

Total reward per episode when applying this algorithm on the CartPole-v0 environment:

However, how quickly the optimal reward is reached and maintained varies heavily because of randomness. Results of an earlier execution are also posted on the OpenAI Gym.

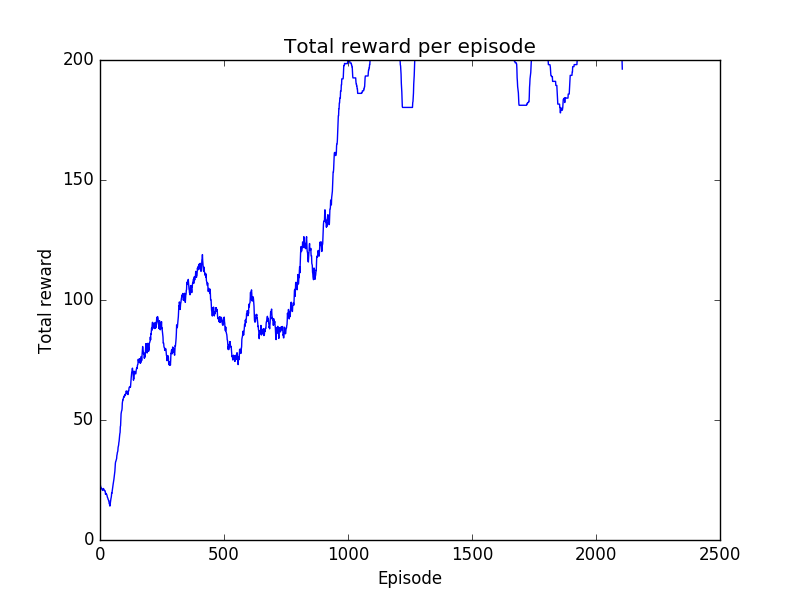

Total reward per episode when applying this algorithm on the CartPole-v0 environment:

Total reward per episode when applying this algorithm on the CartPole-v0 environment:

This only shows the results of one of the A3C threads. Results of another execution are also posted on the OpenAI Gym. Results of an execution using the Acrobot-v1 environment can also be found on OpenAI Gym.

First, install the requirements using pip:

    pip install -r requirements.txt

Then you can run the Sarsa + Function approximation algorithm using:

    python SarsaFA.py <episodes_to_run> <monitor_target_directory>

You can run the CEM, REINFORCE, Karpathy, Karpathy_CNN, A2C and A3C algorithms using:

    python <algorithm_name>.py <environment_name> <monitor_target_directory>

You can plot the episode lengths and total reward per episode graphs using:

    python plot_statistics.py <path_to_stats.json> <moving_average_window>
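
The moving average window smooths the noisy per-episode curves before they are plotted. The sketch below shows one plausible way to compute such a moving average in plain Python. The function name and the sample rewards are made up for illustration, and it does not read `stats.json`, whose structure is not described here, so it may differ from what `plot_statistics.py` actually does.

```python
def moving_average(values, window):
    """Return the mean of every full run of `window` consecutive values."""
    if window < 1:
        raise ValueError("window must be at least 1")
    averages = []
    running_sum = 0.0
    for i, value in enumerate(values):
        running_sum += value
        if i >= window:
            # Drop the value that just slid out of the window.
            running_sum -= values[i - window]
        if i >= window - 1:
            averages.append(running_sum / window)
    return averages


# Made-up total rewards for five episodes, smoothed with a window of 3.
episode_rewards = [10, 20, 30, 40, 50]
smoothed = moving_average(episode_rewards, 3)
```

A larger window gives a smoother curve but hides short-lived drops in performance, and the smoothed series is shorter than the input by `window - 1` points.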